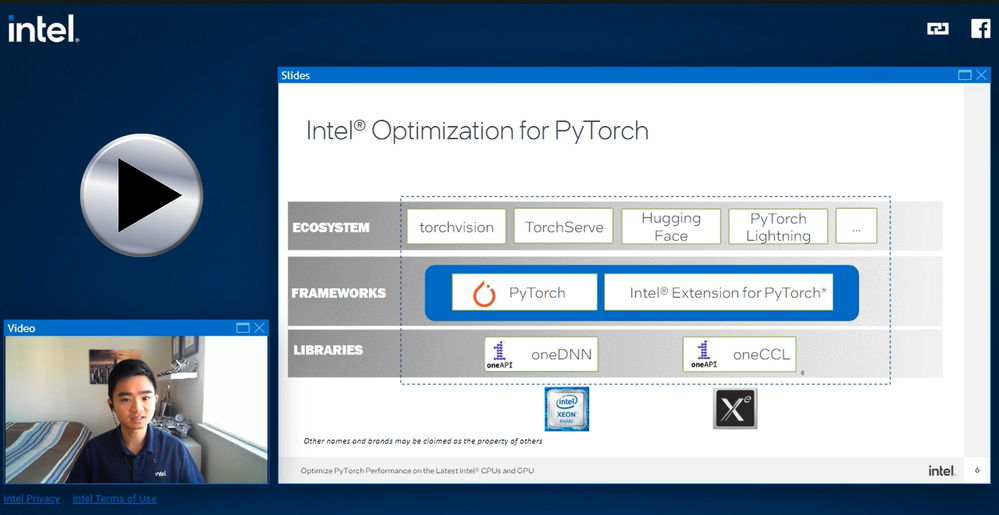

With the launch of the 4th Gen Intel® Xeon® Scalable processors, as well as the Intel® Xeon® CPU Max Series and Intel® Data Center GPU Max Series, AI developers can now take advantage of significant PyTorch* performance optimizations on these hardware platforms. The Intel® Optimization for PyTorch* uses two libraries for accelerated computing: Intel® oneAPI Deep Neural Network Library (oneDNN) and Intel® oneAPI Collective Communications Library (oneCCL). oneDNN optimizes deep learning operators such as convolution and pooling through vectorization and multi-threading, among other means, to make more efficient use of system resources. oneCCL optimizes distributed training of newer and deeper models across multiple nodes and provides efficient implementations of important collective operations such as reduce, all-reduce, and all-gather.

At the framework level, Intel has collaborated with Meta, the developer of PyTorch*, to contribute optimized code directly to the PyTorch* main branch on GitHub*, so that users get enhanced performance right out of the box. There is also the Intel® Extension for PyTorch*, which optimizes PyTorch* in the form of a plugin and allows developers to take advantage of Intel's latest hardware platforms even before the code is officially merged into PyTorch*.

On the ecosystem side, the latest optimizations support commonly used PyTorch* model libraries such as torchvision* and Hugging Face*. The Intel® Extension for PyTorch* is also integrated into TorchServe*, enabling out-of-the-box performance gains when deploying to production environments.

The Intel® Optimization for PyTorch* follows a three-pronged approach comprising operator optimization, graph optimization, and runtime optimization. Operator optimizations can be broken down into three methods: vectorization, which makes more efficient use of computing resources for individual calculations; parallelization, which allows calculations to run simultaneously; and memory layout tuning, which changes where data is stored to achieve better cache locality. Graph optimizations mainly involve operator fusion and constant folding, which together reduce the number of operations executed and the intermediate data passed between operators, and thus provide better cache locality. Runtime optimizations on the CPU include runtime extensions that bind computing threads to specific cores, reducing the time lost to inefficient communication among cores. On the GPU, there are runtime options that can be configured for debugging or execution.
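To make the two graph-level ideas concrete, here is a minimal pure-Python sketch. It is a toy illustration only, not how oneDNN or the Intel® Extension for PyTorch* implement these passes; the `Const`, `Var`, and `Op` node types and all function names are hypothetical and exist only for this example.

```python
import operator
from dataclasses import dataclass
from typing import Dict, List, Union


# Hypothetical toy graph nodes, invented for this illustration.
@dataclass(frozen=True)
class Const:
    value: float


@dataclass(frozen=True)
class Var:
    name: str


@dataclass(frozen=True)
class Op:
    fn: str  # "add" or "mul"
    left: "Node"
    right: "Node"


Node = Union[Const, Var, Op]
_FUNCS = {"add": operator.add, "mul": operator.mul}


def fold_constants(node: Node) -> Node:
    """Constant folding: precompute any op whose inputs are all constants."""
    if isinstance(node, Op):
        left = fold_constants(node.left)
        right = fold_constants(node.right)
        if isinstance(left, Const) and isinstance(right, Const):
            return Const(_FUNCS[node.fn](left.value, right.value))
        return Op(node.fn, left, right)
    return node


def count_ops(node: Node) -> int:
    """Number of operations that would run each time the graph executes."""
    if isinstance(node, Op):
        return 1 + count_ops(node.left) + count_ops(node.right)
    return 0


def evaluate(node: Node, env: Dict[str, float]) -> float:
    if isinstance(node, Const):
        return node.value
    if isinstance(node, Var):
        return env[node.name]
    return _FUNCS[node.fn](evaluate(node.left, env), evaluate(node.right, env))


def scale_shift_unfused(xs: List[float], scale: float, shift: float) -> List[float]:
    scaled = [x * scale for x in xs]    # first pass writes an intermediate list
    return [s + shift for s in scaled]  # second pass reads it back


def scale_shift_fused(xs: List[float], scale: float, shift: float) -> List[float]:
    # Operator fusion: one pass over the data, no intermediate buffer.
    return [x * scale + shift for x in xs]


# y = x * (2 * 3) + (4 + 1)
graph = Op(
    "add",
    Op("mul", Var("x"), Op("mul", Const(2.0), Const(3.0))),
    Op("add", Const(4.0), Const(1.0)),
)
folded = fold_constants(graph)  # equivalent to y = x * 6 + 5

print(count_ops(graph), "ops before folding,", count_ops(folded), "after")
print(evaluate(graph, {"x": 1.5}), evaluate(folded, {"x": 1.5}))
```

The folded graph computes the same result with fewer operations per run, and the fused function touches each element once instead of materializing an intermediate list, which is the cache-locality benefit described above.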

The Intel® Extension for PyTorch* plugin is open source on GitHub*, where it includes instructions for running both the CPU version and the GPU version. PyTorch* provides two execution modes: eager mode and graph mode. In eager mode, operators in a model are executed immediately as they are encountered. This mode focuses on individual operators and is advantageous when debugging models, because developers can see which operators are running and verify that the resulting output is correct. In graph mode, operators must first be synthesized into a graph, which is then compiled and executed as a whole. In deployment, performance is key, so graph mode lets PyTorch* detect the structure of the overall model and use methods such as operator fusion and constant folding to modify and merge parts of the model, reducing the time lost to unnecessary operations and ultimately improving performance. The Intel® Extension for PyTorch* also detects the instruction set architecture of the hardware at runtime and automatically uses the most suitable operators for calculation, specifically those that use Intel® Advanced Vector Extensions 512 with Vector Neural Network Instructions (VNNI) and Intel® Advanced Matrix Extensions (AMX). Both are supported by the new 4th Gen Intel® Xeon® Scalable processors, and AMX is new with this generation.
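The difference between the two modes can be sketched with standard PyTorch* calls. The example below is a minimal sketch under stated assumptions, not the authors' benchmark setup: `TinyNet` is a hypothetical toy model, graph mode is obtained through TorchScript (`torch.jit.trace` followed by `torch.jit.freeze`), and `ipex.optimize` is applied only if the extension happens to be installed.

```python
import torch
import torch.nn as nn

# Assumption: the extension is optional here; the sketch also runs on stock PyTorch.
try:
    import intel_extension_for_pytorch as ipex
except ImportError:
    ipex = None


class TinyNet(nn.Module):
    """Hypothetical toy model: convolution -> batch norm -> ReLU."""

    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(3, 8, kernel_size=3, padding=1)
        self.bn = nn.BatchNorm2d(8)
        self.relu = nn.ReLU()

    def forward(self, x):
        return self.relu(self.bn(self.conv(x)))


torch.manual_seed(0)
model = TinyNet().eval()  # inference mode; freezing requires eval()
example = torch.randn(1, 3, 32, 32)

if ipex is not None:
    # Apply the extension's operator-level optimizations to the model.
    model = ipex.optimize(model)

with torch.no_grad():
    # Eager mode: each operator runs as soon as it is encountered.
    eager_out = model(example)

    # Graph mode: record the operators into a graph, then freeze it so
    # constants can be folded and adjacent operators fused before execution.
    traced = torch.jit.trace(model, example)
    frozen = torch.jit.freeze(traced)
    graph_out = frozen(example)

# Both modes should agree up to small floating-point differences.
max_abs_diff = (eager_out - graph_out).abs().max().item()
print(f"max |eager - graph| = {max_abs_diff:.2e}")
```

Keeping eager mode for debugging and switching to the traced, frozen graph for deployment mirrors the trade-off described above; actual speedups depend on the model and hardware and are not shown here.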

See the video: Optimize PyTorch* Performance on the Latest Intel® CPUs and GPUs

As long as artificial intelligence continues to drive research and technological innovation, AI software development technologies and methodologies will continue to evolve alongside it. The improvements and optimizations developed for the Intel® Optimization for PyTorch*, and especially the Intel® Extension for PyTorch*, in conjunction with the new 4th Gen Intel® Xeon® Scalable processors, are good examples of what makes AI software development and research so enriching and vital to the continued evolution of many industries. The new tools and techniques are in turn being used and developed further by the very developers who keep AI at the cutting edge of technological innovation.

About our experts

Alex Sin

AI Software Solutions Engineer

Intel

In June 2022, Alex joined SATG AIA AIPC’s AI Customer Engineering team as an AI Software Solutions Engineer and has been enabling customers to build their AI applications using Intel’s hardware architectures and software stacks. He provides technical consulting on using the Intel AI Analytics Toolkit on Intel® Xeon® Scalable Processors to optimize accelerated computing in AI and machine learning. Previously, Alex worked at Viasat developing and testing microcontroller and FPGA-based embedded security systems used by the government in radios, war fighters, and the navy. Alex holds Bachelor’s and Master’s degrees in Electrical Engineering from UCLA.

Pramod Pai

AI Software Solutions Engineer

Intel

Pramod Pai is an AI Software Solutions Engineer at Intel who enables customers to optimize their machine learning workflows using solutions from Intel®. His areas of focus include the Intel® oneAPI AI Analytics Toolkit and the Intel® Extension for PyTorch*. He holds a Master’s degree in Information Systems from Northeastern University.

Austin Webb

Video Specialist/Motion Graphics Designer

Intel

Austin is a video producer and visual designer based in Portland, Oregon. His background is in sports entertainment, having worked for the San Jose Sharks and the Portland Timbers. Currently, he works on the Intel Dev Zone Studio Team building graphics and animations for various video needs. At his core, he’s passionate about producing captivating visuals and great storytelling. Away from work, he’s either hanging out with his daughter, working on house projects, or experimenting in the kitchen with his wife.

AI Software Marketing Engineer creating insightful content surrounding the cutting edge AI and ML technologies and software tools coming out of Intel
