I recently hosted the webinar Prompt-Driven Efficiencies for LLMs, where my guest Eduardo Alvarez, Senior AI Solutions Engineer at Intel, unpacked several techniques for achieving performance efficiencies through prompting. These techniques aim to deliver the following business results:

- Serving more users with quality responses the first time.

- Providing higher levels of user support while maintaining data privacy.

- Improving operational efficiency and cost with prompt economization.

Eduardo presented three techniques available to developers:

- Prompt engineering uses a range of prompting methods to generate higher-quality answers.

- Retrieval-augmented generation improves the prompt with additional context to reduce the burden on end users.

- Prompt economization techniques increase the efficiency of data movement through the GenAI pipeline.

Effective prompting can reduce the number of model inference calls (and their cost) while reaching higher-quality results more quickly.

Prompt engineering: Improving model results

Let’s start with a prompt engineering framework for LLMs that groups techniques into three categories: Learning Based, Creative Prompting, and Hybrid.

The Learning Based technique encompasses one-shot and few-shot prompts, which give the model context and teach it through examples included in the prompt. By contrast, a zero-shot prompt asks the model for information it has already been trained on, without any examples. One-shot or few-shot prompting supplies context that teaches the model new information within the prompt itself, producing more accurate results from the LLM.
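To make the difference concrete, here is a minimal Python sketch of how zero-shot and few-shot prompts might be assembled as plain strings. The function names, the sentiment-classification task, and the "Question:/Answer:" layout are illustrative assumptions, not a format prescribed in the webinar; no model is called.

```python
from typing import List, Tuple

# Hypothetical helpers: they only build prompt strings; sending them to an LLM is out of scope.


def zero_shot_prompt(question: str) -> str:
    """Ask directly, relying only on what the model learned during training."""
    return f"Question: {question}\nAnswer:"


def few_shot_prompt(question: str, examples: List[Tuple[str, str]]) -> str:
    """Prepend worked examples so the model can infer the expected pattern in context."""
    shots = "\n\n".join(f"Question: {q}\nAnswer: {a}" for q, a in examples)
    return f"{shots}\n\nQuestion: {question}\nAnswer:"


# Illustrative examples for a made-up sentiment task.
examples = [
    ("Classify the sentiment: 'The checkout was fast.'", "positive"),
    ("Classify the sentiment: 'My order arrived broken.'", "negative"),
]

query = "Classify the sentiment: 'Support never replied.'"
one_shot = few_shot_prompt(query, examples[:1])  # one example -> one-shot
few_shot = few_shot_prompt(query, examples)      # several examples -> few-shot
print(few_shot)
```

The only difference between the variants is how many worked examples precede the real question, which is why few-shot prompts cost more tokens per request.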

Under the Creative Prompting category, techniques such as negative prompting and iterative prompting can deliver more accurate responses. Negative prompting sets boundaries on the model's response by stating what it should avoid, while iterative prompting uses follow-up prompts that let the model refine its answer over a series of exchanges.
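A minimal Python sketch of both Creative Prompting ideas follows, assuming a chat-style list of `role`/`content` dictionaries (a common convention, not a specific vendor API). The helper names and the placeholder reply are hypothetical; in a real application the reply would come from the LLM.

```python
from typing import Dict, List

# Hypothetical helpers; the role/content message format is an assumed chat convention.
Message = Dict[str, str]


def negative_prompt(task: str, avoid: List[str]) -> str:
    """Negative prompting: attach explicit 'do not' boundaries to the task."""
    rules = "\n".join(f"- Do not {item}." for item in avoid)
    return f"{task}\n\nConstraints:\n{rules}"


def add_follow_up(history: List[Message], reply: str, follow_up: str) -> List[Message]:
    """Iterative prompting: keep the model's last reply and append a refining follow-up."""
    return history + [
        {"role": "assistant", "content": reply},
        {"role": "user", "content": follow_up},
    ]


history: List[Message] = [
    {
        "role": "user",
        "content": negative_prompt(
            "Summarize our refund policy for a customer.",
            ["mention competitor products", "exceed three sentences"],
        ),
    }
]
# "<first model reply>" is a placeholder for whatever the LLM actually returned.
history = add_follow_up(history, "<first model reply>", "Make it friendlier and add the support email.")
print(history[0]["content"])
```

Because each follow-up is appended to the full history, the model sees its earlier answer alongside the refinement request on the next call.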

The Hybrid Prompting approach may combine any or all of the above.

These methods come with a caveat: users need the expertise to know these techniques and to supply the context required for high-quality prompts.

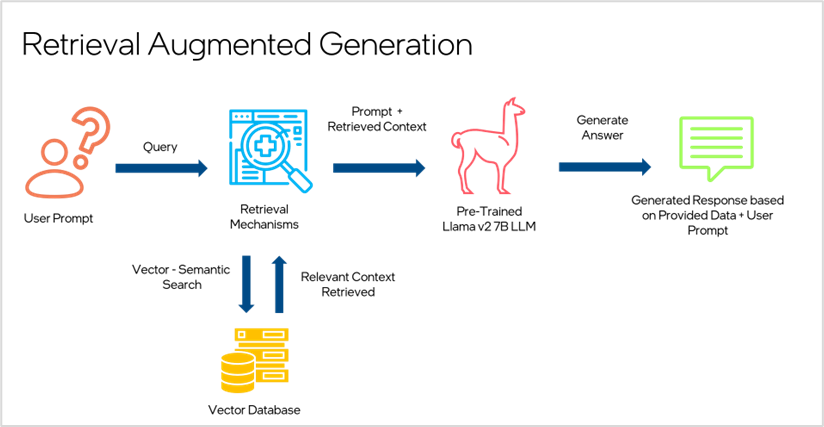

Retrieval-augmented generation: Increase accuracy with company data

LLMs are usually trained on a general corpus of internet data, not on data specific to your business. Adding enterprise data to the LLM prompt workflow with retrieval-augmented generation (RAG) delivers more relevant results. In this workflow, enterprise data is embedded into a vector database; at query time, relevant context is retrieved from it, and the prompt plus the retrieved context is sent to the LLM to generate the response. RAG gives the LLM the benefit of your data without retraining the model, so your data remains private and you avoid additional training compute costs.
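The retrieval step can be sketched in a few lines of Python. This toy version assumes a hypothetical three-document corpus and swaps a bag-of-words term count with cosine similarity in for a real embedding model and vector database, so it illustrates only the retrieve-then-prompt flow, not production retrieval quality.

```python
import math
from collections import Counter
from typing import List

# Hypothetical enterprise documents standing in for a vector database.
DOCUMENTS = [
    "Employees accrue 20 vacation days per year.",
    "Expense reports must be filed within 30 days of purchase.",
    "The VPN must be used when accessing internal systems remotely.",
]


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real system would call an embedding model)."""
    return Counter(word.strip(".,?!").lower() for word in text.split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Indexing step: embed every document once, ahead of query time.
INDEX = [(doc, embed(doc)) for doc in DOCUMENTS]


def retrieve(query: str, k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(INDEX, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def build_rag_prompt(query: str, k: int = 1) -> str:
    """Combine the retrieved context and the user's question into one prompt for the LLM."""
    context = "\n".join(retrieve(query, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}\nAnswer:"


print(build_rag_prompt("How many vacation days do employees get?"))
```

In a real deployment, `embed` would call an embedding model and `INDEX` would live in a vector database; the prompt-assembly step stays essentially the same, and the model itself is never retrained.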

Prompt economization: Delivering value while saving money

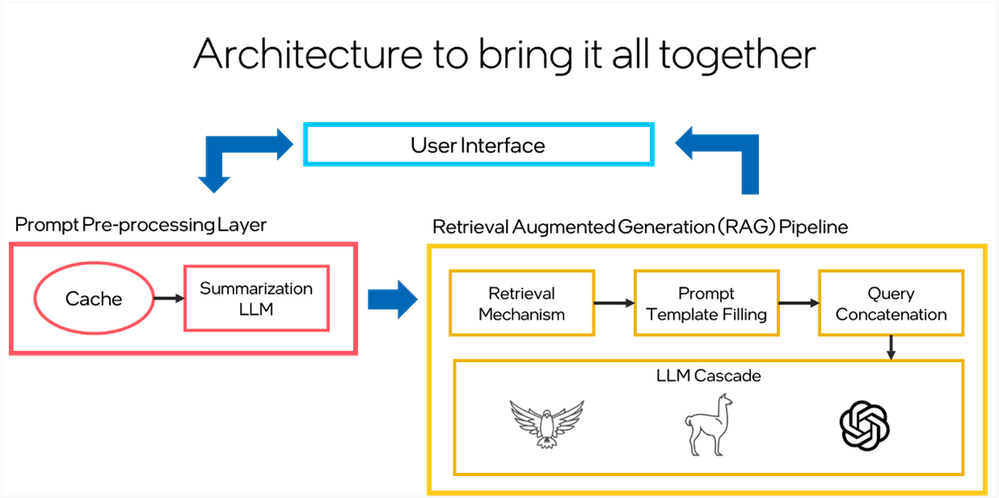

The last technique covers several methods for economizing on model inference:

- Token summarization uses local models to reduce the number of tokens per user prompt passed to the LLM service, which reduces costs for APIs that charge on a per-token basis.

- Completion caching stores answers to commonly asked questions in a cache so they don’t need to occupy inference resources to be regenerated each time they are asked.

- Query concatenation combines multiple queries into a single LLM submission to reduce the overhead that accumulates on a per-query basis, such as pipeline overhead and prefill processing.

- LLM cascades run queries first on simpler LLMs and score the responses for quality, escalating to larger, more expensive models only for the queries that require it. This technique reduces the average compute required per query.
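Three of these methods, completion caching, query concatenation, and an LLM cascade, can be sketched together in Python. Everything here is hypothetical: the two "models" are stub functions that self-report a quality score, the 0.8 threshold is arbitrary, and the cache uses a simple normalized-string key rather than semantic matching. Token summarization is left out because it depends on a specific local model.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Each hypothetical model takes a prompt and returns (answer, quality_score in [0, 1]).
# In practice the score might come from a separate evaluator; here the stubs self-report it.
Model = Callable[[str], Tuple[str, float]]


class CompletionCache:
    """Completion caching: reuse answers to repeated questions instead of re-running inference."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalize case and whitespace so trivially different phrasings share one entry.
        return " ".join(prompt.lower().split())

    def get(self, prompt: str) -> Optional[str]:
        return self._store.get(self._key(prompt))

    def put(self, prompt: str, answer: str) -> None:
        self._store[self._key(prompt)] = answer


def concatenate_queries(queries: List[str]) -> str:
    """Query concatenation: pack several questions into one numbered submission."""
    numbered = "\n".join(f"{i}. {q}" for i, q in enumerate(queries, start=1))
    return f"Answer each question separately, keeping the numbering:\n{numbered}"


def cascade(prompt: str, models: List[Model], threshold: float, cache: CompletionCache) -> str:
    """LLM cascade: try cheaper models first; escalate only if the quality score is too low."""
    cached = cache.get(prompt)
    if cached is not None:
        return cached  # cache hit: no model is called at all
    answer = ""
    for model in models:  # ordered from cheapest to most expensive
        answer, score = model(prompt)
        if score >= threshold:
            break
    # If no model clears the threshold, the last (largest) model's answer is kept.
    cache.put(prompt, answer)
    return answer


calls: List[str] = []  # records which stub models were invoked


def small_model(prompt: str) -> Tuple[str, float]:
    calls.append("small")
    # Pretend the small model is only confident on simple FAQ-style questions.
    return "small-model answer", (0.9 if "hours" in prompt.lower() else 0.4)


def large_model(prompt: str) -> Tuple[str, float]:
    calls.append("large")
    return "large-model answer", 0.95


cache = CompletionCache()
models = [small_model, large_model]
print(cascade("What are your opening hours?", models, 0.8, cache))
print(cascade("what are your  opening hours?", models, 0.8, cache))
print(cascade("Explain our data retention policy.", models, 0.8, cache))
print(concatenate_queries(["What are your opening hours?", "Do you ship overseas?"]))
print(calls)
```

Running it, the first question is answered by the small model, the rephrased repeat is served from the cache without any model call, and the harder question escalates to the large model, so three questions cost three model calls instead of the three large-model calls a naive setup would make.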

Putting it all together

Ultimately, model throughput is limited by the available compute, memory, and power. But throughput isn’t everything: accuracy and efficiency are key to improving generative AI results. An LLM prompt architecture can combine any of the above techniques, customized to your business needs.

For a more detailed discussion of prompt engineering and efficiencies, watch the webinar recording.

Interested in learning more about AI models and generative AI? Sign up for a free trial on Intel Developer Cloud.
