Author: Basem Barakat

Contributor: Patrick Foley

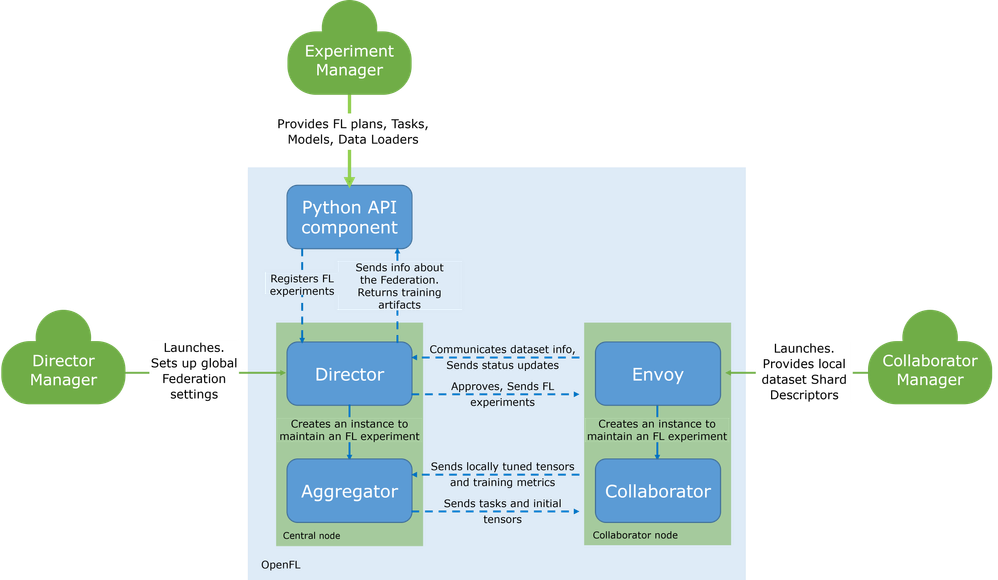

1. Introduction to Federated Learning:

Today, we're excited to introduce Open Federated Learning (OpenFL) for Gaudi 2 instances. In this blog post, we share a step-by-step guide that shows how easy it is to access OpenFL on Gaudi 2 instances via the Intel Developer Cloud.

Intel Gaudi 2 AI accelerators support Open Federated Learning (OpenFL), a Python library for federated learning that enables collaborative model training without sharing sensitive data.
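To make the idea concrete, here is a minimal sketch of federated averaging (FedAvg), a widely used aggregation rule in federated learning: each collaborator trains on its own data and shares only model weights, and the aggregator averages those weights in proportion to each collaborator's sample count. This is a generic, framework-free illustration under simplifying assumptions (weights as flat lists of floats; the helper name `fed_avg` is hypothetical). It is not OpenFL's API.

```python
from typing import List, Sequence


def fed_avg(client_weights: Sequence[Sequence[float]],
            client_sizes: Sequence[int]) -> List[float]:
    """Average model weights from several clients, weighted by local dataset size.

    Only the weights leave each client; the raw training data never does.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one non-empty weight vector per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total number of samples must be positive")
    n_params = len(client_weights[0])
    averaged = [0.0] * n_params
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != n_params:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * size / total
    return averaged


if __name__ == "__main__":
    # Two hypothetical hospitals with different amounts of local data.
    hospital_a = [1.0, 2.0]   # trained on 300 samples
    hospital_b = [3.0, 6.0]   # trained on 100 samples
    print(fed_avg([hospital_a, hospital_b], [300, 100]))  # [1.5, 3.0]
```

Weighting by sample count keeps a collaborator with very little data from pulling the global model as hard as one with a large local dataset.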

OpenFL is deep learning framework agnostic: statistical models can be trained with any deep learning framework, such as TensorFlow* or PyTorch*, via a plugin mechanism.
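One way to picture such a plugin mechanism is an adapter that converts between a framework's native model and a framework-neutral dictionary of named parameters, which is all the federation layer needs to exchange. The class and method names below (`FrameworkAdapter`, `get_tensor_dict`, `set_tensor_dict`, `ToyModel`, `ToyModelAdapter`) are illustrative assumptions for this sketch, not a reproduction of OpenFL's plugin interface; consult the OpenFL documentation for the real one.

```python
from abc import ABC, abstractmethod
from typing import Dict, List


class FrameworkAdapter(ABC):
    """Hypothetical plugin interface: one subclass per deep learning framework."""

    @abstractmethod
    def get_tensor_dict(self, model) -> Dict[str, List[float]]:
        """Export the model's parameters as framework-neutral named lists."""

    @abstractmethod
    def set_tensor_dict(self, model, tensor_dict: Dict[str, List[float]]) -> None:
        """Load framework-neutral parameters back into the native model."""


class ToyModel:
    """Stand-in for a framework model (for example, a PyTorch or TensorFlow model)."""

    def __init__(self) -> None:
        self.layers = {"dense.weight": [0.1, 0.2], "dense.bias": [0.0]}


class ToyModelAdapter(FrameworkAdapter):
    """Adapter for ToyModel; a real PyTorch adapter would walk state_dict() instead."""

    def get_tensor_dict(self, model: ToyModel) -> Dict[str, List[float]]:
        # Copy so the federation layer cannot mutate the live model by accident.
        return {name: list(values) for name, values in model.layers.items()}

    def set_tensor_dict(self, model: ToyModel, tensor_dict: Dict[str, List[float]]) -> None:
        for name, values in tensor_dict.items():
            if name not in model.layers:
                raise KeyError(f"unknown parameter: {name}")
            model.layers[name] = list(values)


if __name__ == "__main__":
    adapter = ToyModelAdapter()
    model = ToyModel()
    exported = adapter.get_tensor_dict(model)   # sent to the aggregator
    exported["dense.bias"] = [0.5]               # e.g. updated global weights
    adapter.set_tensor_dict(model, exported)     # loaded back locally
    print(model.layers)
```

The federation logic only ever sees plain named arrays, so supporting a new framework means writing one more adapter rather than changing the aggregation code.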

OpenFL is a community-supported project originally developed by Intel Labs and the Intel Internet of Things Group. The team welcomes contributions, feedback, and requests to improve the documentation.

2. Overview of Intel® Gaudi® AI Accelerator:

The Intel Gaudi 2 processor is an AI accelerator designed to significantly increase deep learning training and inference performance.

2.1 Intel Gaudi Accelerator Architecture

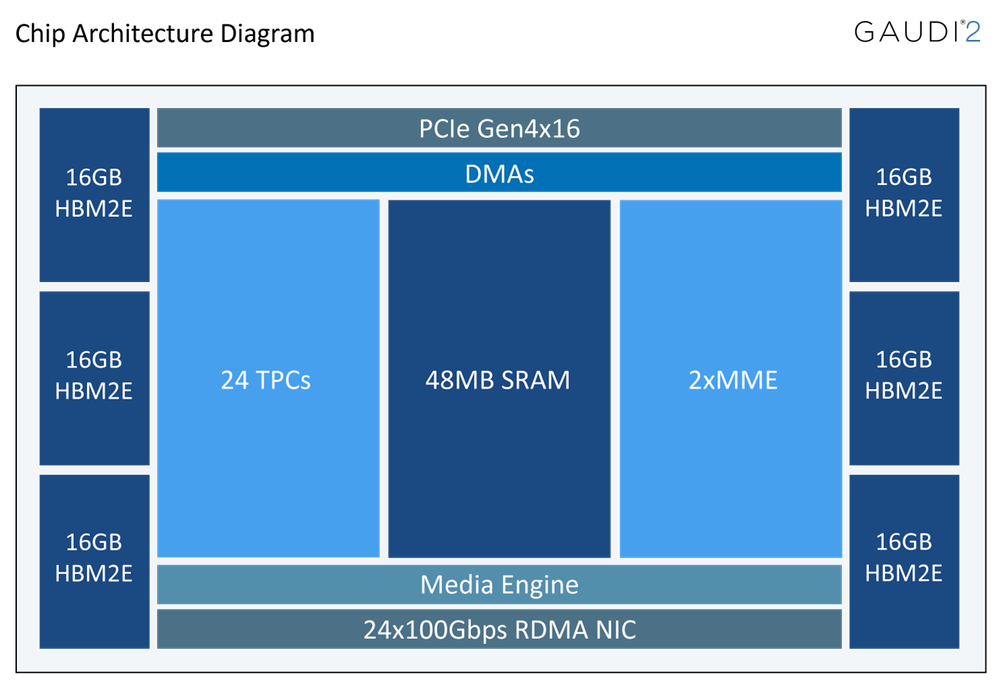

The Intel Gaudi architecture includes three main subsystems - compute, memory, and networking - and is designed from the ground up for accelerating DL training and inference workloads.

The compute architecture is heterogeneous and includes two compute engines – a Matrix Multiplication Engine (MME) and a fully programmable Tensor Processor Core (TPC) cluster. The MME is responsible for doing all operations that can be lowered to Matrix Multiplication (fully connected layers, convolutions, batched-GEMM), while the TPC, a VLIW SIMD processor tailor-made for deep learning operations, is used to accelerate everything else.
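To illustrate what "lowering to matrix multiplication" means for a convolution, here is a minimal pure-Python sketch of im2col, one common technique that turns a 2D convolution (cross-correlation, as deep learning frameworks compute it) into a single matrix product. It assumes a single channel, stride 1, and no padding, and it says nothing about how the Gaudi graph compiler actually maps convolutions onto the MME.

```python
from typing import List

Matrix = List[List[float]]


def im2col(image: Matrix, k: int) -> Matrix:
    """Unfold every k x k patch of `image` into one row (stride 1, no padding)."""
    h, w = len(image), len(image[0])
    rows = []
    for i in range(h - k + 1):
        for j in range(w - k + 1):
            rows.append([image[i + di][j + dj] for di in range(k) for dj in range(k)])
    return rows


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain GEMM: (m x n) @ (n x p) -> (m x p)."""
    return [[sum(a[r][t] * b[t][c] for t in range(len(b))) for c in range(len(b[0]))]
            for r in range(len(a))]


def conv2d_as_gemm(image: Matrix, kernel: Matrix) -> Matrix:
    """Cross-correlate `image` with a square `kernel` using one matrix multiplication."""
    k = len(kernel)
    out_w = len(image[0]) - k + 1
    patches = im2col(image, k)                          # (num_patches x k*k)
    flat_kernel = [[v] for row in kernel for v in row]  # (k*k x 1)
    column = matmul(patches, flat_kernel)               # (num_patches x 1)
    # Fold the single output column back into a 2D feature map.
    values = [r[0] for r in column]
    return [values[i:i + out_w] for i in range(0, len(values), out_w)]


if __name__ == "__main__":
    img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    print(conv2d_as_gemm(img, [[1, 1], [1, 1]]))  # [[12, 16], [24, 28]]
```

The cost of this approach is duplicated input data in the patch matrix; the payoff is that the whole convolution becomes one large, regular GEMM, the kind of operation a dedicated matrix engine is built for.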

The MME is configurable, and the TPC cluster comes with associated development tools and libraries. Each TPC core is a VLIW SIMD processor with an instruction set and hardware tailored to serve training workloads efficiently. It is fully programmable, giving users maximum flexibility to innovate, and it includes many workload-oriented features, such as:

- GEMM operation acceleration

- Tensor addressing

- Latency hiding capabilities

- Random number generation

- Advanced implementation of special functions

The Intel Gaudi accelerator architecture is the first DL training processor to integrate RDMA over Converged Ethernet (RoCE v2) engines on-chip. These engines play a critical role in the inter-processor communication needed during training. This native integration of RoCE lets customers use the same scaling technology both within the server and rack (scale-up) and across racks (scale-out). The network connections can be made directly between Gaudi processors or through any number of standard Ethernet switches.
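A typical example of that inter-processor traffic in data-parallel training is an all-reduce, which sums every worker's gradients so that all workers end up with the same result. The following is a minimal single-process simulation of the classic ring all-reduce algorithm (a reduce-scatter phase followed by an all-gather phase), assuming each gradient vector splits evenly into one chunk per worker. It illustrates the communication pattern only and is not necessarily how Intel's collective communication library implements all-reduce on Gaudi.

```python
from typing import List


def ring_allreduce(grads: List[List[float]]) -> List[List[float]]:
    """Simulate ring all-reduce over len(grads) workers arranged in a ring.

    Each worker's vector is split into n equal chunks (n = number of workers).
    In every step each worker sends exactly one chunk to its right-hand
    neighbour, so per-worker traffic stays bounded as n grows.
    """
    n = len(grads)
    size = len(grads[0])
    if size % n != 0:
        raise ValueError("vector length must be divisible by the number of workers")
    c = size // n
    # chunks[w][k] is worker w's local copy of chunk k.
    chunks = [[list(g[k * c:(k + 1) * c]) for k in range(n)] for g in grads]

    # Phase 1: reduce-scatter. Afterwards worker w holds the full sum of chunk (w + 1) % n.
    for step in range(n - 1):
        # Collect all messages first so the sends happen "simultaneously".
        msgs = [(w, (w - step) % n, list(chunks[w][(w - step) % n])) for w in range(n)]
        for w, k, data in msgs:
            dst = (w + 1) % n
            chunks[dst][k] = [a + b for a, b in zip(chunks[dst][k], data)]

    # Phase 2: all-gather. Circulate the fully reduced chunks around the ring.
    for step in range(n - 1):
        msgs = [(w, (w + 1 - step) % n, list(chunks[w][(w + 1 - step) % n])) for w in range(n)]
        for w, k, data in msgs:
            chunks[(w + 1) % n][k] = data

    # Reassemble each worker's chunks into one flat vector.
    return [[v for k in range(n) for v in chunks[w][k]] for w in range(n)]


if __name__ == "__main__":
    workers = [[1.0, 2.0, 3.0], [10.0, 20.0, 30.0], [100.0, 200.0, 300.0]]
    print(ring_allreduce(workers))
```

Because every worker sends and receives at the same time on every step, the pattern maps naturally onto many parallel point-to-point links such as the on-chip NICs described above.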

2.2 Intel Gaudi 2 Processor

The Gaudi 2 processor offers 2.4 Tb/s of networking bandwidth through the native on-chip integration of 24 x 100 Gbps RoCE v2 RDMA NICs, enabling inter-Gaudi communication via direct routing or via standard Ethernet switching. The Gaudi 2 memory subsystem includes 96 GB of HBM2E memory delivering 2.45 TB/s of bandwidth, plus 48 MB of local SRAM with enough bandwidth for the MME, TPC, DMAs, and RDMA NICs to operate in parallel.
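The headline figures follow from simple arithmetic. The sketch below reproduces the 2.4 Tb/s networking total from the 24 ports and, as an illustrative derived number rather than a quoted specification, estimates how long one full pass over the 96 GB of HBM2E would take at the peak 2.45 TB/s. It assumes decimal units (1 TB = 1000 GB, 1 Tb = 1000 Gb) and ignores protocol and efficiency overheads.

```python
NUM_ROCE_PORTS = 24            # on-chip RoCE v2 NICs
PORT_GBPS = 100                # gigabits per second per port
HBM_CAPACITY_GB = 96           # HBM2E capacity, gigabytes
HBM_BANDWIDTH_TB_PER_S = 2.45  # peak HBM2E bandwidth, terabytes per second


def aggregate_network_tbps(ports: int, gbps_per_port: float) -> float:
    """Total NIC bandwidth in terabits per second (decimal units)."""
    return ports * gbps_per_port / 1000


def full_memory_sweep_ms(capacity_gb: float, bandwidth_tb_per_s: float) -> float:
    """Milliseconds needed to read every byte of HBM once at peak bandwidth."""
    return capacity_gb / (bandwidth_tb_per_s * 1000) * 1000


if __name__ == "__main__":
    print(f"network:   {aggregate_network_tbps(NUM_ROCE_PORTS, PORT_GBPS):.1f} Tb/s")
    print(f"HBM sweep: {full_memory_sweep_ms(HBM_CAPACITY_GB, HBM_BANDWIDTH_TB_PER_S):.1f} ms")
```

At peak bandwidth, reading the entire 96 GB once takes roughly 39 ms; real workloads see lower effective bandwidth.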

For vision applications specifically, the Intel Gaudi 2 accelerator has integrated media decoders that operate independently and can handle the entire pre-processing pipeline for popular formats (HEVC, H.264, VP9, and JPEG), as well as the post-decode image transformations needed to prepare data for the AI pipeline.

Intel Gaudi 2 accelerator supports all popular data types required for deep learning: FP32, TF32, BF16, FP16, and FP8 (both E4M3 and E5M2). In the MME, all data types are accumulated into an FP32 accumulator.

Figure 1 Intel Gaudi 2 Processor High-level Architecture
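Why accumulate in FP32? A narrow accumulator silently drops small addends once the running sum grows large. The sketch below uses Python's standard `struct` module (format code `'e'` for IEEE half precision and `'f'` for single precision) to round after every addition, emulating an FP16 accumulator versus an FP32 accumulator. It is a numerical illustration only, not a model of the MME datapath; FP8 is not emulated because the standard library has no FP8 format.

```python
import struct


def round_to(fmt: str, x: float) -> float:
    """Round a Python float to the precision of a struct format ('e' = FP16, 'f' = FP32)."""
    return struct.unpack(fmt, struct.pack(fmt, x))[0]


def accumulate(values, fmt: str) -> float:
    """Sum `values`, rounding the running total to `fmt` after every addition."""
    total = 0.0
    for v in values:
        total = round_to(fmt, total + round_to(fmt, v))
    return total


if __name__ == "__main__":
    ones = [1.0] * 8192
    print("FP16 accumulator:", accumulate(ones, "e"))  # stalls well short of 8192
    print("FP32 accumulator:", accumulate(ones, "f"))  # exact: 8192.0
```

Once the FP16 running sum is large enough that the gap between representable values exceeds the addend, each further `+ 1.0` rounds back to the same total, which is why low-precision products are summed in a wider accumulator.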

2.3 Intel Gaudi Accelerator PyTorch development and adaptation overview

For a detailed discussion of PyTorch porting, please consult the Gaudi 2 PyTorch porting instructions.

In short, the porting process can be summarized in the following steps:

1. Import Habana Torch Library:

import habana_frameworks.torch.core as htcore

2. Target the Gaudi HPU device, and move the model and input tensors to it with .to(device):

import torch

device = torch.device("hpu")

3. Add mark_step(). In Lazy mode, mark_step() must be added in all training scripts right after loss.backward() and optimizer.step():

htcore.mark_step()

As shown above, the required changes are straightforward: only a handful of lines of code need to change.
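Why is mark_step() needed? In Lazy mode, operations are not executed one at a time; they are accumulated into a graph that is executed at a step boundary (or when a result must be read back to the host). The toy model below, written in plain Python, mimics that behaviour with a hypothetical `LazyGraph` class so you can see what happens when the step is never marked: recorded work simply piles up. It is a conceptual illustration only. It is not how the Habana PyTorch bridge is implemented, and none of its names belong to `habana_frameworks`.

```python
from typing import Callable, List


class LazyGraph:
    """Hypothetical lazy executor: records ops and runs them only at a step boundary."""

    def __init__(self) -> None:
        self.pending: List[Callable[[], None]] = []
        self.flushes = 0

    def record(self, op: Callable[[], None]) -> None:
        # Nothing runs yet; the op is only appended to the current graph.
        self.pending.append(op)

    def mark_step(self) -> None:
        # Execute everything recorded since the last boundary, then start a new graph.
        for op in self.pending:
            op()
        self.pending.clear()
        self.flushes += 1


def train(steps: int, use_mark_step: bool) -> dict:
    """Toy SGD on a single weight w, minimising (w - 3)^2 with learning rate 0.1."""
    graph = LazyGraph()
    state = {"w": 0.0, "grad": 0.0}

    def backward() -> None:        # stands in for loss.backward()
        state["grad"] = 2 * (state["w"] - 3.0)

    def optimizer_step() -> None:  # stands in for optimizer.step()
        state["w"] -= 0.1 * state["grad"]

    for _ in range(steps):
        graph.record(backward)
        if use_mark_step:
            graph.mark_step()      # boundary right after the backward pass
        graph.record(optimizer_step)
        if use_mark_step:
            graph.mark_step()      # boundary right after the optimizer step
    return {"w": state["w"], "pending_ops": len(graph.pending), "flushes": graph.flushes}


if __name__ == "__main__":
    print("with mark_step:   ", train(50, use_mark_step=True))
    print("without mark_step:", train(5, use_mark_step=False))
```

Without the step boundaries, the weight never changes and the pending graph keeps growing; with them, each iteration's work is executed and w converges toward 3.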

3. Intel Developer Cloud introduction and overview:

The Intel Developer Cloud comprises a cluster of deep learning servers powered by Intel hardware, including the Intel Gaudi 2 accelerators.

A developer can follow these steps to access the Intel Developer Cloud:

- Go to the Intel Developer Cloud and follow the directions in the Getting Started section:

- Register for an Enterprise account and enroll in the program by entering your customer information and reviewing the terms and conditions. If you are a developer affiliated with a university or government institution, simply identify your affiliation as such. Also, consider the Intel Partner Alliance Membership programs.

- Set up SSH access keys to access the Developer Cloud

- You will need to get Cloud Credits by entering your payment information or redeeming a coupon

- You can then sign in and access the Intel Gaudi 2 instance in the Hardware Catalog

Refer to the Intel Developer Cloud Quick Start Guide for detailed steps to connect to the Developer Cloud and run models.

4. OpenFL with Intel Gaudi 2 Accelerator experiment coding details:

Here, we work through an exercise that applies the OpenFL federated learning framework to MedMNIST 2D, using the PyTorch MedMNIST_2D tutorial accelerated with Intel Gaudi 2 AI accelerators.

MedMNIST is described in detail in its arXiv paper and is distributed as a Python package on PyPI.

MedMNIST v2 is a large-scale, MNIST-like collection of standardized biomedical images, comprising 12 datasets of 2D images and 6 datasets of 3D images. Images are pre-processed to 28 x 28 (2D) or 28 x 28 x 28 (3D) and come with classification labels. Covering the primary data modalities in biomedical imaging, MedMNIST v2 is designed for classification on lightweight 2D and 3D images across a range of dataset sizes.
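In a federated setup, each envoy sees only its own portion of the data. When experimenting with a single public dataset such as MedMNIST, one simple way to emulate that is to give each envoy a disjoint, round-robin slice of the sample indices. The helper below (`shard_indices`, with a 1-based `rank` and a `world_size`) is a hypothetical sketch of that idea, not the shard descriptor used by the OpenFL tutorial.

```python
from typing import List


def shard_indices(num_samples: int, rank: int, world_size: int) -> List[int]:
    """Return the sample indices owned by envoy `rank` (1-based) out of `world_size` envoys.

    Round-robin assignment keeps shards disjoint and their sizes within one sample.
    """
    if world_size < 1 or not 1 <= rank <= world_size:
        raise ValueError("rank must be in [1, world_size]")
    return list(range(rank - 1, num_samples, world_size))


if __name__ == "__main__":
    shards = [shard_indices(10, r, 3) for r in (1, 2, 3)]
    print(shards)  # [[0, 3, 6, 9], [1, 4, 7], [2, 5, 8]]
```

Every index lands in exactly one shard, so no two envoys train on the same sample.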

4.1 Intel Developer Cloud setup:

This example was tested on the Intel Developer Cloud utilizing the Intel Gaudi 2 accelerator instance.

For accessing the Intel Gaudi 2 accelerator instances on the Intel Developer Cloud, follow the instructions discussed in section 3 above.

The Intel Gaudi accelerator instance in the Intel Developer Cloud comes with the SynapseAI software stack for Intel Gaudi 2 accelerators preinstalled.

Furthermore, we used a Habana-based Docker container built from the Dockerfile below.

Create a Dockerfile with the following content and name it Dockerfile_Habana:

FROM vault.habana.ai/gaudi-docker/1.12.0/ubuntu20.04/habanalabs/pytorch-installer-2.0.1:latest
ENV HABANA_VISIBLE_DEVICES=all
ENV OMPI_MCA_btl_vader_single_copy_mechanism=none
ENV DEBIAN_FRONTEND="noninteractive" TZ=Etc/UTC
RUN apt-get update && apt-get install -y tzdata bash-completion \
    python3-pip openssh-server vim git iputils-ping net-tools curl bc gawk \
    && rm -rf /var/lib/apt/lists/*
RUN pip install numpy \
    && pip install jupyterlab \
    && pip install matplotlib \
    && pip install openfl
RUN git clone https://github.com/securefederatedai/openfl.git /root/openfl
WORKDIR /root

The base image comes with the HPU PyTorch packages preinstalled.

Build the above container and then launch it using:

export GAUDI_DOCKER_IMAGE="gaudi-docker-ubuntu20.04-openfl"

docker build -t ${GAUDI_DOCKER_IMAGE} -f Dockerfile_Habana .

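docker run -id --runtime=habana --cap-add=sys_nice --ipc=host --net host --name openfl_gaudi_run ${GAUDI_DOCKER_IMAGE} bash

The --runtime=habana flag makes the Gaudi devices visible inside the container; it assumes the Habana container runtime is installed on the host.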

Then access the container bash shell using:

docker exec -it openfl_gaudi_run bash

Once inside the container, check that the openfl repository is present in /root/openfl (the Dockerfile clones it there). If it is missing, clone it using:

git clone https://github.com/securefederatedai/openfl.git

Then check if the openfl package is installed:

pip list | grep openfl

If not, then install it using:

pip install openfl
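As an alternative to grepping the output of pip list, a short Python check can confirm from inside the container that the packages the tutorial relies on are importable. This is a minimal sketch using only the standard library's `importlib.util.find_spec`; the list of top-level package names (`torch`, `habana_frameworks`, `openfl`) is an assumption based on the setup above.

```python
import importlib.util
from typing import Dict, Iterable

# Top-level packages the setup above is expected to provide (assumed list).
REQUIRED = ("torch", "habana_frameworks", "openfl")


def check_packages(names: Iterable[str]) -> Dict[str, bool]:
    """Map each top-level package name to whether Python can find it."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, ok in check_packages(REQUIRED).items():
        print(f"{name:20s} {'OK' if ok else 'MISSING'}")
```

A missing openfl can be fixed with pip install openfl; a missing torch or habana_frameworks usually means the container was not started from the Habana base image.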

4.2 Step-by-step running the MedMNIST_2D tutorial:

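1. Open three terminals (for example, by splitting your terminal window) and attach each one to the container with docker exec -it openfl_gaudi_run bash:

1) Terminal for the director.

2) Terminal for the envoy.

3) Terminal for the experiment.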

2. Do the following in each terminal:

cd openfl/openfl-tutorials/interactive_api/HPU/PyTorch_MedMNIST_2D

3. In the first terminal, run the director:

cd director

./start_director.sh

4. In the second terminal, install requirements and run the envoy:

cd envoy

pip install -r requirements.txt

./start_envoy.sh env_one envoy_config.yaml

Optional: Run a second envoy in an additional terminal:

Ensure step 2 is complete for this terminal as well.

cd envoy

./start_envoy.sh env_two envoy_config.yaml

5. In the third terminal (or fourth terminal, if you choose to do two envoys), run the Jupyter Notebook:

cd workspace

jupyter lab --allow-root HPU_Pytorch_MedMNIST_2D.ipynb

From your local PC, forward the Jupyter port to your local host so you can open the notebook in your local browser:

ssh -NL 8888:127.0.0.1:8888 gaudi2_node

Here, gaudi2_node is your remote Gaudi 2 node. A Jupyter Server URL will appear in your terminal; open that link in your local browser. Once the page loads, click the HPU_Pytorch_MedMNIST_2D.ipynb file.

To run the experiment, select the icon that looks like two triangles to “Restart Kernel and Run All Cells”.

You will notice activity in your terminals as the experiment runs. When the experiment is finished, the director terminal displays a message that it finished successfully.

5. Conclusion:

The above exercise introduced AI application developers to the core concepts of applying federated learning with OpenFL. The framework was developed to preserve privacy and data security while training models on diverse, distributed datasets, and it can easily be applied to other fields with similar data privacy and security requirements.

This example also showed how simple it is to use an Intel Gaudi 2 accelerator instance on the Intel Developer Cloud to speed up the validation of deep learning models and systems.
