Santiago Miret is an AI research scientist at Intel Labs, where he focuses on developing artificial intelligence solutions and exploring the intersection of AI and the physical sciences. Kin Long Kelvin Lee is a machine learning engineer in Intel’s Data Center and AI Group (DCAI) working on showcasing how Intel hardware can be used to effectively deploy AI solutions to scientific problems.

Highlights:

- Intel Labs and Intel DCAI researchers demonstrated how advanced AI models can be trained on 4th Generation Intel® Xeon® Scalable Processors to achieve competitive modeling performance on materials property prediction tasks.

- Researchers showed that pre-training on a synthetic task can increase modeling performance for a variety of materials property prediction tasks on two datasets supported by the Open MatSci ML Toolkit.

Following Intel Labs’ release of the Open MatSci ML Toolkit 1.0 in 2023, researchers at Intel Labs and Intel’s Data Center and AI (DCAI) group showcased the library's capabilities in a paper accepted at the selective AI4S workshop, held in conjunction with SC23: The International Conference for High Performance Computing, Networking, Storage and Analysis. The paper shows how advanced artificial intelligence (AI) models can be trained on 4th Generation Intel Xeon Scalable Processors to achieve competitive modeling performance across a diverse set of materials property prediction tasks. In it, we proposed a new pre-training task based on classifying point structures generated from crystallographic symmetry groups, and we analyzed the benefits and shortcomings of this approach for downstream modeling performance. All of this work was carried out efficiently on CPUs alone.
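To make the symmetry pre-training idea concrete, here is a minimal sketch of how labeled synthetic point structures could be generated: close a point group from its generator matrices, apply every group operation to a random seed point, and use the group's Hermann–Mauguin symbol as the class label. The small set of groups, the function names (`close_group`, `make_sample`, `make_dataset`), and the sampling scheme are illustrative assumptions, not the procedure used in the paper or the toolkit.

```python
import math
import random

def matmul(a, b):
    """Multiply two 3x3 matrices stored as tuples of row tuples."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3))
        for i in range(3)
    )

def apply(m, p):
    """Apply a 3x3 matrix to a 3-D point."""
    return tuple(sum(m[i][k] * p[k] for k in range(3)) for i in range(3))

def rot_z(n):
    """n-fold rotation about the z axis."""
    t = 2 * math.pi / n
    c, s = math.cos(t), math.sin(t)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))

IDENTITY = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
INVERSION = ((-1.0, 0.0, 0.0), (0.0, -1.0, 0.0), (0.0, 0.0, -1.0))
MIRROR_Z = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, -1.0))

# Hypothetical, illustrative subset of crystallographic point groups,
# each given by its generators (labels are Hermann-Mauguin symbols).
GENERATORS = {
    "1": [IDENTITY],
    "-1": [INVERSION],
    "m": [MIRROR_Z],
    "2": [rot_z(2)],
    "4": [rot_z(4)],
    "6": [rot_z(6)],
    "2/m": [rot_z(2), MIRROR_Z],
}

def close_group(generators, decimals=6):
    """Build all group elements by multiplying until no new matrix appears."""
    def key(m):
        # Round to absorb floating-point error; "+ 0.0" maps -0.0 to 0.0.
        return tuple(round(x, decimals) + 0.0 for row in m for x in row)
    elements = {key(IDENTITY): IDENTITY}
    frontier = [IDENTITY]
    while frontier:
        new = []
        for g in frontier:
            for h in generators:
                prod = matmul(g, h)
                k = key(prod)
                if k not in elements:
                    elements[k] = prod
                    new.append(prod)
        frontier = new
    return list(elements.values())

def make_sample(label, rng, spread=1.0):
    """Return (points, label): the orbit of a random seed point under the group."""
    ops = close_group(GENERATORS[label])
    seed = tuple(rng.uniform(-spread, spread) for _ in range(3))
    return [apply(op, seed) for op in ops], label

def make_dataset(n_per_class, seed=0):
    """Balanced, shuffled classification dataset of synthetic point structures."""
    rng = random.Random(seed)
    data = [make_sample(label, rng) for label in GENERATORS for _ in range(n_per_class)]
    rng.shuffle(data)
    return data
```

For example, `make_sample("-1", random.Random(1))` returns a seed point and its inversion image; a classifier pre-trained on `make_dataset(...)` would learn to predict the group label from the point coordinates alone.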

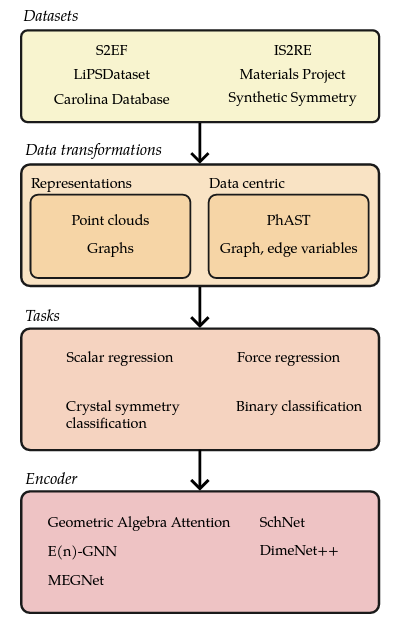

The Open MatSci ML Toolkit provides a set of software engineering utilities for training advanced AI models on materials science tasks. One of the toolkit's strengths is its ability to combine multiple types of data across different types of tasks, which is critical to successfully training AI models for materials science. The toolkit also contains built-in support for a common set of geometric deep learning architectures, which is continuously updated as new state-of-the-art models are released. Figure 1 shows the general utilities and abstractions (datasets, tasks, and models) supported in Open MatSci ML Toolkit 1.0. Several widely used materials science datasets have been incorporated, providing the basic building blocks for experimentation.

Figure 1. Open MatSci ML Toolkit engineering utilities.
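To illustrate what makes such architectures "geometric," here is a minimal pure-Python sketch of one E(n)-equivariant message-passing layer, following the update equations of Satorras et al. (2021) for the E(n)-GNN family used in the experiments below. The scalar node features, the fully connected graph, and the toy functions `phi_e`, `phi_x`, and `phi_h` (stand-ins for learned MLPs) are assumptions for illustration; this is not the toolkit's implementation.

```python
import math

# Toy stand-ins for the learned MLPs (hypothetical). They only receive
# rotation- and translation-invariant inputs: features and squared distances.
def phi_e(h_i, h_j, d2):
    return math.tanh(h_i * h_j - 0.5 * d2)

def phi_x(m_ij):
    return 0.1 * m_ij

def phi_h(h_i, m_i):
    return h_i + math.tanh(m_i)

def egnn_layer(h, x):
    """One E(n)-equivariant layer on a fully connected graph (needs >= 2 nodes).

    h: list of scalar node features; x: list of 3-D coordinates.
    Returns (updated features, updated coordinates).
    """
    n = len(h)
    c = 1.0 / (n - 1)  # normalization constant C in the coordinate update
    new_h, new_x = [], []
    for i in range(n):
        m_i = 0.0
        shift = [0.0, 0.0, 0.0]
        for j in range(n):
            if j == i:
                continue
            diff = [x[i][k] - x[j][k] for k in range(3)]
            d2 = sum(d * d for d in diff)
            m_ij = phi_e(h[i], h[j], d2)  # edge message from invariant inputs
            m_i += m_ij                     # aggregate messages for node i
            w = phi_x(m_ij)
            for k in range(3):
                shift[k] += diff[k] * w     # weighted relative positions
        new_x.append([x[i][k] + c * shift[k] for k in range(3)])
        new_h.append(phi_h(h[i], m_i))
    return new_h, new_x

def rotate_z(points, angle):
    """Rotate 3-D points about the z axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c * p[0] - s * p[1], s * p[0] + c * p[1], p[2]] for p in points]
```

Because the coordinate update is built only from relative positions scaled by invariant weights, rotating the input with `rotate_z` before calling `egnn_layer` leaves the node features unchanged and rotates the output coordinates by the same angle.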

Training Models at Scale Using 4th Generation Intel Xeon Scalable Processors

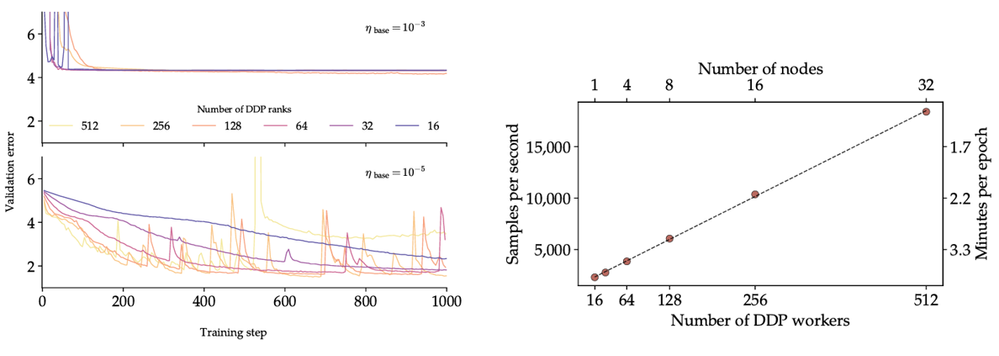

The Open MatSci ML Toolkit is designed to support model training at various compute scales (for example, laptop, workstation, and data center) and to transition seamlessly between them. To demonstrate this capability, we trained an E(n)-equivariant graph neural network (E(n)-GNN) on multiple 4th Generation Intel Xeon Scalable Processors and studied how increasing the number of parallel CPU processes affects training. As Figures 2a and 2b show, increasing the number of processes in a distributed data parallel (DDP) setting yielded linear scaling of data throughput. In some cases, training was also more stable, as indicated by lower validation error.

Figure 2a (left): Training dynamics of E(n)-GNN with increasing numbers of parallel DDP workers. Figure 2b (right): Sample throughput with increasing numbers of DDP workers.
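In DDP, each process holds a full model replica, computes gradients on its own shard of the global batch, and then averages gradients across processes with an all-reduce before every replica takes the same optimizer step. The sketch below simulates that loop in pure Python for a one-parameter linear model with mean squared error; the ranks are simulated sequentially, and the helper names are hypothetical. In PyTorch, this role is played by `torch.nn.parallel.DistributedDataParallel`.

```python
def grad_mse(w, b, xs, ys):
    """Gradient of mean squared error for y_hat = w * x + b on one shard."""
    n = len(xs)
    gw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    gb = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return gw, gb

def shard(data, world_size, rank):
    """Strided split of the batch, similar in spirit to a distributed sampler."""
    return data[rank::world_size]

def all_reduce_mean(grads):
    """Simulated all-reduce: every rank ends up with the element-wise mean."""
    k = len(grads)
    return tuple(sum(g[i] for g in grads) / k for i in range(len(grads[0])))

def ddp_step(w, b, xs, ys, world_size, lr=0.1):
    """One synchronous DDP step; assumes shards of equal size."""
    assert len(xs) % world_size == 0, "equal shard sizes keep the mean exact"
    local = [
        grad_mse(w, b, shard(xs, world_size, r), shard(ys, world_size, r))
        for r in range(world_size)
    ]
    gw, gb = all_reduce_mean(local)
    # Every replica applies the same averaged gradient, so weights stay in sync.
    return w - lr * gw, b - lr * gb
```

With equal shard sizes, the mean of the per-shard mean gradients equals the full-batch gradient, so `ddp_step` with 4 ranks produces the same update as with 1 rank while each rank processes only a quarter of the samples. That division of work is what lets throughput grow with the number of workers.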

However, with the largest number of ranks (processes), training stability worsens. This highlights the need for a principled approach to highly distributed AI model training that manages the trade-off between training speed and stability. Research from Meta AI has shown similar effects when training large language models and attributed this instability to divergence in the widely used Adam family of optimizers. Our results suggest that similar phenomena may occur in distributed graph neural network training, and more research is needed to overcome these issues.
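For reference, the sketch below implements a single scalar Adam update (Kingma and Ba, 2015) with bias correction. It does not reproduce the Meta AI analysis; it only shows that Adam's step is set by the ratio of the bias-corrected first moment to the square root of the second moment, rather than by the raw gradient magnitude. `AdamState` and `adam_step` are hypothetical names.

```python
import math
from dataclasses import dataclass

@dataclass
class AdamState:
    m: float = 0.0  # running mean of gradients (first moment)
    v: float = 0.0  # running mean of squared gradients (second moment)
    t: int = 0      # step counter used for bias correction

def adam_step(param, grad, state, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """Return the updated parameter after one Adam step; mutates state."""
    state.t += 1
    state.m = beta1 * state.m + (1 - beta1) * grad
    state.v = beta2 * state.v + (1 - beta2) * grad * grad
    m_hat = state.m / (1 - beta1 ** state.t)  # bias-corrected first moment
    v_hat = state.v / (1 - beta2 ** state.t)  # bias-corrected second moment
    return param - lr * m_hat / (math.sqrt(v_hat) + eps)
```

On the first step, gradients of 1e-3, 1, and 1e3 all move the parameter by roughly `lr`, because the update is normalized per parameter. This normalization is why Adam's behavior depends on the statistics of the gradient estimates, which change as the number of DDP ranks, and thus the global batch size, increases.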

Analyzing Modeling Performance of Synthetic Pre-Training

In addition to showcasing the training capabilities of advanced CPUs, we investigated the utility of pre-training AI models on an auxiliary symmetry prediction task. This approach has shown prior success in computer vision, where synthetic data is often used to imbue AI models with general knowledge about the data domain before they are fine-tuned for a specific task.

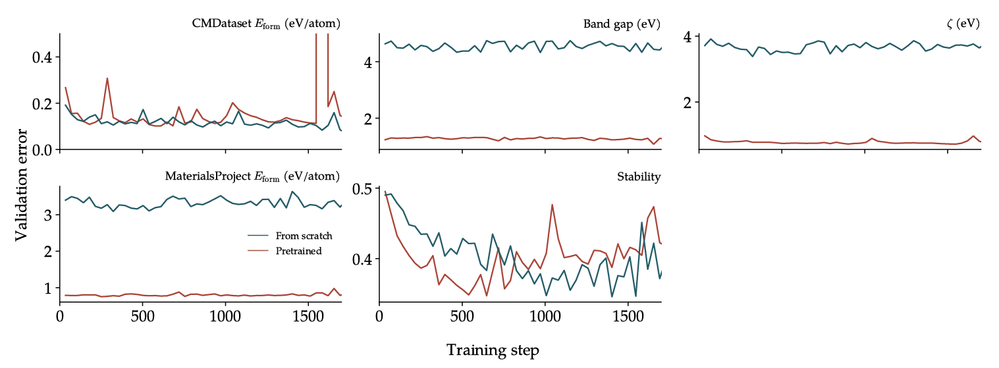

Figure 3. Modeling benefits of pre-training. Pre-training on symmetry increases performance on different tasks.

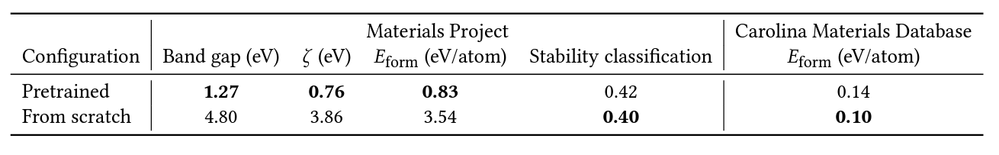

Figure 4. Performance of training strategies on various tasks with pretrained models generally outperforming models that are trained from scratch.

The results in Figures 3 and 4 show that pre-training on our proposed synthetic task can increase modeling performance for a variety of materials property prediction tasks on two datasets supported by the Open MatSci ML Toolkit. Although the improvement is not uniform across tasks, it indicates that auxiliary tasks can imbue the E(n)-GNN model with general knowledge that helps it predict multiple properties across multiple datasets.

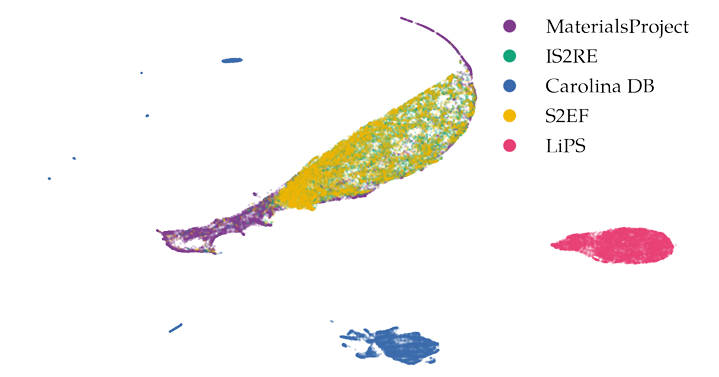

Figure 5. Uniform manifold approximation and projection (UMAP) visualization of each dataset implemented in the Open MatSci ML Toolkit. Each point represents a structure contained within its dataset, embedded with an E(n)-GNN encoder pretrained on synthetic constructs.

Further analysis revealed how the pretrained model organizes the representations of different datasets. In Figure 5, the embeddings for three datasets (OpenCatalyst-S2EF, OpenCatalyst-IS2RE, and Materials Project) cluster closely together, while the embeddings for two others (LiPS and Carolina DB) form distinct, separate clusters. This kind of analysis can help us understand how different datasets capture the physical and chemical aspects of the materials they represent, and it can inform the design of future tasks and datasets for training more powerful geometric deep learning models for materials science.
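UMAP gives a qualitative two-dimensional view and does not faithfully preserve global distances, so a simple complementary check is to compare per-dataset centroids in the original embedding space. The sketch below is a swapped-in technique, not part of the analysis above. The helper names are hypothetical, and the toy vectors and labels in the usage note are placeholders, not embeddings from the paper.

```python
import math
from collections import defaultdict

def centroids(embeddings, labels):
    """Mean embedding vector for each dataset label."""
    sums, counts = {}, defaultdict(int)
    for vec, lab in zip(embeddings, labels):
        if lab not in sums:
            sums[lab] = [0.0] * len(vec)
        for k, v in enumerate(vec):
            sums[lab][k] += v
        counts[lab] += 1
    return {lab: [s / counts[lab] for s in sums[lab]] for lab in sums}

def centroid_distances(embeddings, labels):
    """Euclidean distance between every pair of dataset centroids."""
    cents = centroids(embeddings, labels)
    names = sorted(cents)
    return {
        (a, b): math.dist(cents[a], cents[b])
        for i, a in enumerate(names)
        for b in names[i + 1:]
    }
```

For example, with toy 2-D vectors labeled "A", "A", "B", "B", "C", "C", where "A" and "B" sit near (1, 0) and "C" sits near (-3, 4), the distance for the pair ("A", "B") is much smaller than for ("A", "C") or ("B", "C"), mirroring datasets that overlap versus separate in a projection.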
