Author: Farhaan Mohideen, Product Management, Intel Corporation

Introduction

Computer vision at the edge is rapidly becoming a key capability in many business verticals, helping to solve critical business problems. It opens up opportunities to analyse essential data and deliver actionable information in real time or near real time, so that organisations can act on it immediately. Unlike traditional project deployments, computer vision projects (and AI projects in general) take much longer to move from proof of concept to production. Successful implementations bring increased automation, higher operational efficiency, lower costs, less wastage, and greater predictability. Although computer vision use cases are complex, the value they deliver is transforming industries.

Computer Vision Pipeline

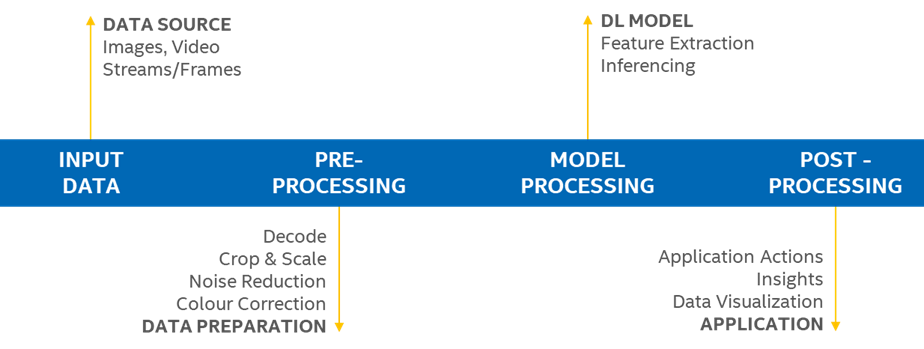

As the number of software applications using computer vision grows, a consistent set of steps emerges that almost every one of them must execute. Most automated visual tasks use Convolutional Neural Networks (CNNs), a family of deep learning algorithms used to process images. The process starts with acquiring images or video streams from cameras. The frames are then pre-processed, for example scaled to the fixed pixel dimensions the model expects as input. Next, a trained deep learning model extracts the required features and runs inference for the particular use case. Finally, an application uses the inference results to decide which actions to perform. This description is deliberately simplified to set the context without going into the details of each step.

Figure 1: Computer Vision Pipeline
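To make the pre-processing and inference steps concrete, here is a minimal sketch in pure Python. It assumes a frame is a list of rows of RGB tuples (a real application would more likely use OpenCV and NumPy arrays), scales it with nearest-neighbour sampling to an assumed 224x224 model input, normalizes channel values to 0.0-1.0, and passes the result to `fake_model`, a hypothetical stand-in for a trained CNN.

```python
from typing import Callable, List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each channel 0-255
Frame = List[List[Pixel]]      # a frame is a list of pixel rows


def resize_nearest(frame: Frame, out_w: int, out_h: int) -> Frame:
    """Scale a frame to out_w x out_h pixels using nearest-neighbour sampling."""
    in_h, in_w = len(frame), len(frame[0])
    return [
        [frame[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def normalize(frame: Frame) -> list:
    """Map 0-255 channel values to the 0.0-1.0 range many models expect."""
    return [[tuple(c / 255.0 for c in px) for px in row] for row in frame]


def fake_model(tensor: list) -> str:
    """Hypothetical stand-in for a trained CNN: labels a frame by mean brightness."""
    values = [c for row in tensor for px in row for c in px]
    return "bright" if sum(values) / len(values) > 0.5 else "dark"


def run_pipeline(frame: Frame, model: Callable[[list], str],
                 input_size: Tuple[int, int] = (224, 224)) -> str:
    """Pre-process an acquired frame to the model's input size, then run inference."""
    width, height = input_size
    tensor = normalize(resize_nearest(frame, width, height))
    return model(tensor)


if __name__ == "__main__":
    # A synthetic 640x480 white frame stands in for a camera capture.
    frame = [[(255, 255, 255)] * 640 for _ in range(480)]
    result = run_pipeline(frame, fake_model)
    # The application decides what to do based on the inference result.
    print("inference result:", result)
```

Keeping pre-processing separate from the model call means the same acquisition code can feed different models, each with its own input size.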

Camera Usage in Computer Vision

Developing and deploying computer vision applications involves many infrastructure dependencies, including the cameras, the network, client devices, and the edge server and/or cloud instance.

Focusing on the cameras, independent software vendors (ISVs) developing a solution pay close attention to the challenges of deploying and maintaining their product. These challenges broadly fall into the following categories:

- Customer sites may already have cameras installed. Although applications are pre-validated with specific camera brands, those brands may not be the ones installed in the customer's infrastructure.

- Cameras come in all shapes and sizes and, most critically, in different types, such as IP cameras, USB cameras, and depth-sensing cameras, any of which may need to be supported.

- Cameras are not provisioned and made available in a consistent manner, so additional custom work is required to identify them and pick up their images or video streams.

- There is no single interface to manage and control cameras across a customer site. At scale, updating configurations quickly becomes a mammoth task.

Software Product Differentiation

Generally, product solutions built for specific use cases differentiate themselves on application functionality, ease of use, deployment model, the reputation of the software vendor, cost, and licensing model. For AI applications, differentiation extends further to model accuracy for the particular use case and the hardware total cost of ownership (TCO) required to run inference, edge training, and model training.

The computer vision pipeline activities and camera usage considerations described above, however, do not help differentiate a product. They are common requirements, necessary for deploying and maintaining any such application.

Camera Management and Inferencing

The EdgeX Foundry open-source community set out to address the common deployment requirements of cameras for AI applications. The objective was to develop features that manage the camera lifecycle for IP cameras (ONVIF), USB cameras, and depth-sensing cameras. The focus was to reduce the overhead on ISVs developing software applications and to make both development and deployment much easier.

ONVIF Support

The team focused on IP cameras. For these, ONVIF, an open industry forum, has defined a set of standardized interfaces that camera vendors can implement to ensure interoperability between cameras. The ONVIF profile specifications define mandatory features, which every product must implement to qualify as ONVIF compliant, and conditional features, which must be implemented if the product supports the related functionality.
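Discovery is a good example of a standardized interface. The ONVIF Core specification bases discovery on WS-Discovery: a client multicasts a SOAP `Probe` message over UDP to 239.255.255.250, port 3702, and compliant devices reply with `ProbeMatch` messages listing their service addresses (`XAddrs`). The standard-library Python sketch below builds a probe for `dn:NetworkVideoTransmitter` devices and extracts `XAddrs` from a reply; `send_probe` is defined but not called here, and its single-socket, collect-until-timeout loop is a simplifying assumption. A production implementation would also need retries, per-interface sending, and fuller reply handling.

```python
import socket
import uuid
import xml.etree.ElementTree as ET

WS_DISCOVERY_ADDR = ("239.255.255.250", 3702)  # WS-Discovery multicast group and port
D_NS = "http://schemas.xmlsoap.org/ws/2005/04/discovery"

PROBE_TEMPLATE = """<?xml version="1.0" encoding="UTF-8"?>
<s:Envelope xmlns:s="http://www.w3.org/2003/05/soap-envelope"
            xmlns:a="http://schemas.xmlsoap.org/ws/2004/08/addressing"
            xmlns:d="http://schemas.xmlsoap.org/ws/2005/04/discovery"
            xmlns:dn="http://www.onvif.org/ver10/network/wsdl">
  <s:Header>
    <a:Action s:mustUnderstand="1">http://schemas.xmlsoap.org/ws/2005/04/discovery/Probe</a:Action>
    <a:MessageID>urn:uuid:{message_id}</a:MessageID>
    <a:ReplyTo>
      <a:Address>http://schemas.xmlsoap.org/ws/2004/08/addressing/role/anonymous</a:Address>
    </a:ReplyTo>
    <a:To s:mustUnderstand="1">urn:schemas-xmlsoap-org:ws:2005:04:discovery</a:To>
  </s:Header>
  <s:Body>
    <d:Probe>
      <d:Types>dn:NetworkVideoTransmitter</d:Types>
    </d:Probe>
  </s:Body>
</s:Envelope>"""


def build_probe(message_id: str = "") -> str:
    """Return a WS-Discovery Probe for ONVIF NetworkVideoTransmitter devices."""
    return PROBE_TEMPLATE.format(message_id=message_id or uuid.uuid4())


def extract_xaddrs(reply: bytes) -> list:
    """Return the device service URLs (XAddrs) advertised in a ProbeMatch reply."""
    root = ET.fromstring(reply)
    return [url for el in root.iter(f"{{{D_NS}}}XAddrs") for url in (el.text or "").split()]


def send_probe(timeout: float = 3.0) -> list:
    """Multicast one probe; collect raw replies until none arrives within timeout."""
    replies = []
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP) as sock:
        sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, 1)  # stay on local subnet
        sock.settimeout(timeout)
        sock.sendto(build_probe().encode("utf-8"), WS_DISCOVERY_ADDR)
        try:
            while True:
                data, addr = sock.recvfrom(65535)
                replies.append((addr[0], data))
        except socket.timeout:
            pass
    return replies
```

Each URL returned by `extract_xaddrs` is the device's ONVIF device-management endpoint, which a client would then query for capabilities such as media profiles and stream URIs.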

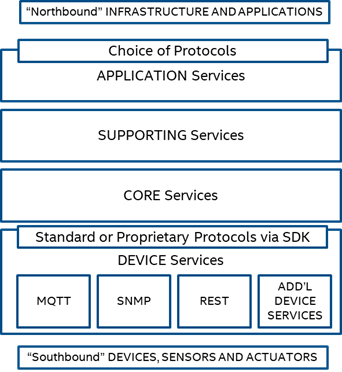

The EdgeX architecture consists of four tiers of services. Application Services on the “northbound” side communicate with infrastructure and applications, while Device Services on the “southbound” side communicate with devices, sensors, and actuators. Core Services and Supporting Services sit between the Application Services and the Device Services.

A device service's job is to interact with IoT sensors and devices and collect data from them. For this initiative, a new device service was created to support ONVIF-compliant IP cameras, enabling them to be provisioned and managed in a consistent fashion.
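In EdgeX, data collected by a device service travels northbound as events, each carrying one or more readings. The Python sketch below models that shape with dataclasses. The field names loosely follow the EdgeX 2.x Event and Reading DTOs, but the exact field set varies by release, and the profile name, the `StreamUri` resource name, and the helper functions are hypothetical, chosen only for illustration. A real device service would use the EdgeX device SDK rather than building events by hand.

```python
import json
import time
import uuid
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class Reading:
    deviceName: str
    resourceName: str
    profileName: str
    valueType: str
    value: str
    origin: int = field(default_factory=time.time_ns)  # nanosecond timestamp
    id: str = field(default_factory=lambda: str(uuid.uuid4()))


@dataclass
class Event:
    deviceName: str
    profileName: str
    sourceName: str
    readings: List[Reading]
    apiVersion: str = "v2"
    origin: int = field(default_factory=time.time_ns)
    id: str = field(default_factory=lambda: str(uuid.uuid4()))


PROFILE = "onvif-camera-profile"  # hypothetical device profile name


def make_stream_uri_event(camera_name: str, stream_uri: str) -> Event:
    """Wrap a value read from a camera as an event for the core services."""
    reading = Reading(camera_name, "StreamUri", PROFILE, "String", stream_uri)
    return Event(camera_name, PROFILE, "StreamUri", [reading])


def to_json(event: Event) -> str:
    """Serialize the event, nested readings included, to JSON."""
    return json.dumps(asdict(event))


if __name__ == "__main__":
    print(to_json(make_stream_uri_event("Camera001", "rtsp://192.0.2.10:554/stream1")))
```

Because every device service emits the same event structure, the core and application services upstream do not need to know whether the data came from a camera or any other sensor.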

ONVIF Camera Device Service

The ONVIF camera device microservice abstracts the device-specific camera interface and provides a consistent set of services for ONVIF-compliant cameras. The service supports the following through RESTful APIs:

- EdgeX Device Discovery: The camera device service probes the network and adds discovered cameras to Core Metadata when predefined conditions are met

- Application Device Discovery: Applications can query Core Metadata for devices and their associated configurations

- Application Device Configuration: Applications set the configuration (username, password, IP address) and invoke ONVIF capabilities, such as retrieving the stream URI, Pan-Tilt-Zoom (PTZ) control, and firmware updates, through REST APIs
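Because discovered cameras are recorded in Core Metadata and device commands can be issued through Core Command, an application can work with the cameras over plain HTTP. The standard-library Python sketch below builds, but does not send, such requests. It assumes EdgeX 2.x default ports and API paths (EdgeX 3.x uses the `/api/v3` prefix), the service name `device-onvif-camera`, a camera named `Camera001`, and a `StreamUri` command; verify these against the EdgeX API reference and the device service's device profile for your release.

```python
import json
import urllib.parse
import urllib.request
from typing import List, Optional

# Assumed defaults for EdgeX 2.x; EdgeX 3.x uses the /api/v3 prefix instead.
CORE_METADATA = "http://localhost:59881/api/v2"
CORE_COMMAND = "http://localhost:59882/api/v2"


def list_devices_request(service_name: str) -> urllib.request.Request:
    """Build a GET for the devices a device service has added to Core Metadata."""
    url = f"{CORE_METADATA}/device/service/name/{urllib.parse.quote(service_name)}"
    return urllib.request.Request(url, method="GET")


def command_request(device_name: str, command: str,
                    query: Optional[dict] = None) -> urllib.request.Request:
    """Build a GET that asks Core Command to execute a read command on a device."""
    url = (f"{CORE_COMMAND}/device/name/{urllib.parse.quote(device_name)}"
           f"/{urllib.parse.quote(command)}")
    if query:
        # Device-specific parameters, if a command needs any, go in the query string.
        url += "?" + urllib.parse.urlencode(query)
    return urllib.request.Request(url, method="GET")


def device_names(body: bytes) -> List[str]:
    """Extract device names from a Core Metadata multi-device response."""
    return [d["name"] for d in json.loads(body).get("devices", [])]


# Usage against a running EdgeX instance (not executed here):
# with urllib.request.urlopen(list_devices_request("device-onvif-camera"), timeout=5) as resp:
#     for name in device_names(resp.read()):
#         print(name)
```

The application never talks to the camera directly: it only needs the device name from Core Metadata, and the device service translates each command into the corresponding ONVIF call.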

The service has also been integrated with an inference engine that runs the entire computer vision pipeline and publishes all results over MQTT. Applications can subscribe to the relevant topic and receive results in real time as they arrive. This integration provides:

- Pipeline Control: The application starts a video analytics pipeline through an HTTP POST request

- Publish Inference Events/Data: Inference results are formatted and published to the destination message bus specified in the request

- Export Data: Publish prepared (transformed, enriched, filtered, etc.) and groomed (formatted, compressed, encrypted, etc.) data to external systems
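Preparing and grooming inference results before export can be pictured as a chain of small functions. The Python sketch below shows the idea using a hypothetical inference-event schema (`objects`, each with `label` and `confidence`) and hypothetical camera and site names: it filters out low-confidence detections, enriches the event with camera metadata, formats it as JSON, and compresses it with zlib. Each step returns a `(continue, data)` pair, loosely mirroring how EdgeX application-service functions signal whether processing should go on; it is an illustration, not the EdgeX SDK.

```python
import json
import zlib
from typing import Any, Callable, List, Optional, Tuple

# A step returns (continue_pipeline, data).
Step = Callable[[Any], Tuple[bool, Any]]


def filter_confident(min_conf: float) -> Step:
    """Drop detections below min_conf; stop the pipeline if none remain."""
    def step(event: dict) -> Tuple[bool, Any]:
        kept = [o for o in event.get("objects", []) if o.get("confidence", 0.0) >= min_conf]
        if not kept:
            return False, None
        return True, {**event, "objects": kept}
    return step


def enrich(camera_name: str, site: str) -> Step:
    """Add camera metadata so downstream systems know where the event came from."""
    def step(event: dict) -> Tuple[bool, Any]:
        return True, {**event, "camera": camera_name, "site": site}
    return step


def format_json(event: dict) -> Tuple[bool, Any]:
    """Serialize the event to UTF-8 JSON bytes."""
    return True, json.dumps(event, sort_keys=True).encode("utf-8")


def compress(payload: bytes) -> Tuple[bool, Any]:
    """Compress the payload to reduce bandwidth to the external system."""
    return True, zlib.compress(payload)


def run(steps: List[Step], data: Any) -> Optional[Any]:
    """Run steps in order; return None if any step stops the pipeline."""
    for step in steps:
        ok, data = step(data)
        if not ok:
            return None
    return data


if __name__ == "__main__":
    pipeline = [filter_confident(0.8), enrich("Camera001", "store-12"), format_json, compress]
    event = {"timestamp": 1700000000,
             "objects": [{"label": "person", "confidence": 0.91},
                         {"label": "cart", "confidence": 0.42}]}
    payload = run(pipeline, event)
    # payload is now compressed bytes, ready to POST or publish to an external system.
    print(json.loads(zlib.decompress(payload)))
```

Keeping each step small and independent makes it straightforward to reorder steps or insert another one, such as encryption, before the data leaves the edge.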

Summary

Certain functionality does not add much differentiating value to an ISV's product, yet it is necessary to maintain and manage the platform. This set of features has been implemented in EdgeX Foundry as an open-source device service. Adopting the ONVIF device service provides the flexibility to work with any camera brand that supports ONVIF, and means that a future switch to another ONVIF-compliant camera brand will not require further integration work or new code.

Currently, support for ONVIF-compliant IP cameras is complete. Work on USB cameras has also started but is not yet complete. The EdgeX community invites ISVs to join the community to add, adopt, and improve these device services. More adoption, code contribution, and maintenance will help reduce development time and cost for everyone.

Please use the links below to dive into the details and download the code for the ONVIF Camera Device Service.

References:

- EdgeX Foundry - https://www.edgexfoundry.org/

- ONVIF camera device service - https://github.com/edgexfoundry/device-onvif-camera

- ONVIF Specification - https://www.onvif.org/
