Introducing a New Step-by-Step Guide

OpenMP* was originally designed to provide a straightforward, easy-to-implement, pragma- and directive-driven approach to introducing highly parallel execution.

Its intent is to do so without being tied to a specific programming language, operating system, or hardware platform. In this way, it streamlines parallel software development and supersedes tedious, low-level multithreaded programming.

OpenMP originally targeted fully capable, completely independent processors that share memory, such as the multi-core CPUs that form the backbone of most modern computing environments, whether a home PC or an exascale compute cluster.

GPU architecture is different:

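- GPU memory is typically separate from host memory, so there is no single shared memory space

- GPUs have thousands of cores

- Their cores are interdependent, grouped into units that share scheduling and on-chip resources

- Their threads are lightweight, with very small overhead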

None of this, however, fundamentally contradicts the OpenMP approach.

OpenMP 4.0, released in 2013, introduced the ability to offload blocks of code through an execution model for devices and the

#pragma omp target (C/C++)

or

!$omp target / !$omp end target (Fortran)

constructs, which transfer control flow to a target device such as a GPU. Because OpenMP is based on a model of forking parallel regions from a primary thread, the next step is to define parallel regions that execute on the offload GPU. Many more features supporting GPU offload, advanced memory allocation, and device execution scheduling have been introduced since then.
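As a minimal sketch of these two steps, the C function below uses the combined `target teams distribute parallel for` construct: `target` moves execution to the default device, `teams distribute` spreads loop iterations across teams, and `parallel for` spreads each team's share across its threads. The function name `saxpy_offload` and the SAXPY kernel are illustrative choices, not taken from the workflow or its sample.

```c
#include <stddef.h>

/* Hypothetical example: y = a*x + y, offloaded to the default target device.
 * If no device is available, or the file is compiled without OpenMP,
 * the loop simply runs on the host. */
void saxpy_offload(size_t n, float a, const float *x, float *y)
{
    /* map(to:) copies x to the device before the region starts;
     * map(tofrom:) copies y to the device and back afterwards.
     * The scalars n and a are implicitly firstprivate. */
    #pragma omp target teams distribute parallel for \
        map(to: x[0:n]) map(tofrom: y[0:n])
    for (size_t i = 0; i < n; ++i) {
        y[i] = a * x[i] + y[i];
    }
}
```

Because a compiler without OpenMP support ignores the pragma, the same source builds and produces the same result as plain serial C, which makes it easy to check correctness before moving to a GPU.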

Today, with OpenMP 5.x, the framework effectively provides an abstraction layer that allows OpenMP to be used for GPU-accelerated compute on any targeted GPU hardware, as long as a runtime library for it exists.

OpenMP keeps growing and evolving, maintaining and expanding its importance in high-performance computing. For Fortran, it is the de facto standard for offloading compute to one or more GPUs.

As with other many-core offload approaches, the key to performance is keeping data transfers between host and device to a minimum and optimizing the remaining ones for low latency.
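One common way to cut transfers is a `target data` region that keeps an array resident on the device across several kernel launches. The sketch below assumes a simple iterative update; the function name `relax_resident` and the update rule are hypothetical and serve only to show the data-mapping pattern.

```c
#include <stddef.h>

/* Hypothetical example: apply `steps` iterations of u[i] = 0.5*u[i] + 1.0.
 * The enclosing target data region copies u to the device once on entry
 * and back once on exit, instead of once per kernel launch. */
void relax_resident(size_t n, double *u, int steps)
{
    #pragma omp target data map(tofrom: u[0:n])
    {
        for (int s = 0; s < steps; ++s) {
            /* u[0:n] is already present on the device, so this map clause
             * only increments its reference count; no copy happens here. */
            #pragma omp target teams distribute parallel for map(tofrom: u[0:n])
            for (size_t i = 0; i < n; ++i) {
                u[i] = 0.5 * u[i] + 1.0;
            }
        }
    }
}
```

Without the outer `target data` region, each of the `steps` kernel launches would copy `u` to the device and back, so transfer cost would grow with the iteration count rather than stay fixed.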

To help software developers exploit the full potential of multicore hardware with OpenMP, we created a new Application Offload and OpenMP* Optimization Portal using Intel® Tools.

The OpenMP Offload Workflow

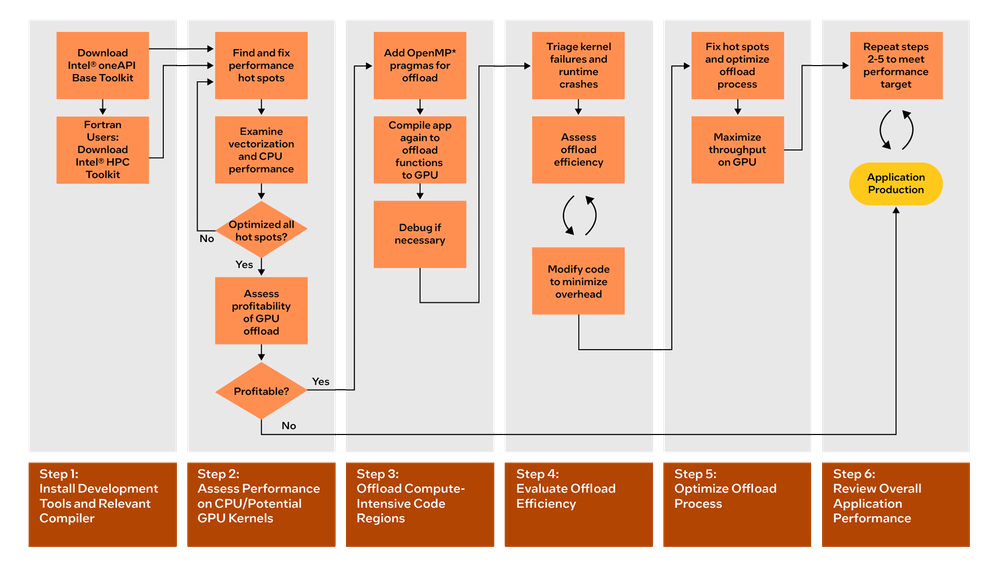

The Offload and Optimize OpenMP* Applications page provides a detailed learning path and workflow to demonstrate offload and optimization of an OpenMP application on Intel® GPUs using developer tools from Intel.

It uses real, comprehensive, and openly available example code hosted on GitHub* (the ISO3DFD OpenMP Offload sample) to guide you step by step toward high-performance GPU offload acceleration.

The key steps are:

- Install Development Tools and an Appropriate Compiler

- Assess Application Performance on CPU and Potential GPU Kernels

- Offload Compute-Intensive Code Regions in Kernels

- Evaluate Offload Efficiency

- Optimize the Offload Process

- Review Overall Application Performance
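At the heart of these steps is offloading a compute-intensive region as a kernel. The ISO3DFD sample is a 3D finite-difference stencil code; the sketch below is a deliberately simplified second-order, 7-point stencil and is not the sample's actual kernel. The function `laplacian7_offload` and macro `IDX3` are hypothetical names. `collapse(3)` merges the three loops into one iteration space so that there is enough parallel work to occupy many GPU threads.

```c
#include <stddef.h>

/* Flattened index into an nx*ny*nz grid, with x varying fastest.
 * A macro avoids needing "declare target" for a helper function. */
#define IDX3(x, y, z, nx, ny) ((((z) * (ny)) + (y)) * (nx) + (x))

/* Hypothetical example: out = 7-point Laplacian of in, on interior points.
 * Boundary points of out are left unchanged. Assumes nx, ny, nz >= 3. */
void laplacian7_offload(size_t nx, size_t ny, size_t nz,
                        const float *in, float *out)
{
    const size_t n = nx * ny * nz;
    const size_t plane = nx * ny;

    #pragma omp target teams distribute parallel for collapse(3) \
        map(to: in[0:n]) map(tofrom: out[0:n])
    for (size_t z = 1; z < nz - 1; ++z)
        for (size_t y = 1; y < ny - 1; ++y)
            for (size_t x = 1; x < nx - 1; ++x) {
                size_t c = IDX3(x, y, z, nx, ny);
                /* Sum of the six face neighbours minus 6x the centre. */
                out[c] = in[c - 1] + in[c + 1]
                       + in[c - nx] + in[c + nx]
                       + in[c - plane] + in[c + plane]
                       - 6.0f * in[c];
            }
}
```

Mapping `out` as `tofrom` rather than `from` preserves its boundary values, since the kernel never writes them.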

After reading through the guided OpenMP offload workflow and exploring its example code, you will be ready to apply its lessons and recipes to your own complex applications and tune their performance.

For an initial performance assessment, the workflow introduces Intel® VTune™ Profiler hotspot analysis.

The Vectorization and Code Insights perspective and the CPU/Memory Roofline Insights perspective of Intel® Advisor give you a CPU-centric performance baseline and tuning guidance. This baseline then serves as a reference for judging the potential benefits that Offload Modeling highlights.

You will then see how to introduce OpenMP offload kernels for a GPU using Intel® compilers, followed by iterative fine-tuning that increases offload efficiency until you reach the desired overall application performance.
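When first introducing offload kernels, it helps to confirm that they really run on the GPU rather than silently falling back to the host. The sketch below uses the standard OpenMP runtime routines `omp_get_num_devices` and `omp_is_initial_device`; the wrapper names `offload_ran_on_device` and `report_offload` are hypothetical. The `#ifdef _OPENMP` guards keep it buildable without OpenMP.

```c
#include <stdio.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Hypothetical helper: returns 1 if a target region executed on a
 * non-host device, 0 if it ran on the host (or OpenMP is disabled). */
int offload_ran_on_device(void)
{
    int on_host = 1;
#ifdef _OPENMP
    #pragma omp target map(from: on_host)
    {
        /* Returns nonzero when executing on the host (initial device). */
        on_host = omp_is_initial_device();
    }
#endif
    return !on_host;
}

/* Hypothetical helper: prints a short summary of the offload setup. */
void report_offload(void)
{
#ifdef _OPENMP
    printf("OpenMP devices available: %d\n", omp_get_num_devices());
#else
    printf("Compiled without OpenMP; all code runs on the host.\n");
#endif
    printf("Target region ran on %s\n",
           offload_ran_on_device() ? "a device" : "the host");
}
```

With Intel compilers, offload to Intel GPUs is typically enabled with flags such as `icx -fiopenmp -fopenmp-targets=spir64`; check the compiler documentation for your version. Setting the standard environment variable `OMP_TARGET_OFFLOAD=MANDATORY` makes the runtime report an error instead of falling back to the host when no device is usable.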

Next Steps

Let's start bringing OpenMP accelerator offload to your applications and take advantage of the potential performance benefits of executing compute-intensive, highly parallel code on one or more GPUs.

The development tools used in the Offload and Optimize OpenMP* Applications workflow to help you streamline the introduction of OpenMP GPU offload into your codebase are:

- Intel® DPC++/C++ Compiler, included in the Intel® oneAPI Base Toolkit (Base Kit)

- Intel® Fortran Compiler, included with the Intel® HPC Toolkit (for full functionality, download this toolkit in addition to the Base Kit)

- Intel® Advisor (included with the Base Kit)

- Intel® VTune™ Profiler (included with the Base Kit)

- Profiling Tools Interfaces for Intel GPUs

Additional Resources

- Offload and Optimize OpenMP* Applications with Intel® Tools

- Guided iso3dfd OpenMP Offload Sample

- OpenMP Offload Tuning Guide

- OpenMP Offload Programming Model for C, C++, and Fortran

- Training Modules to Learn the Basics of OpenMP Offload

- oneAPI GPU Optimization Guide

- VTune™ Profiler OpenMP Code Analysis Methods Cookbook Recipe

Rob enables developers to streamline programming efforts across multiarchitecture compute devices for high-performance applications, taking advantage of Intel's family of development tools. He has more than 20 years of experience in technical consulting, software architecture, and platform engineering, working in IoT, edge, embedded software, and hardware developer enablement.