
I ran benchmark_app on my PC, and the inference latency on GPU (295 ms) is much higher than on CPU (31 ms). Is there any setting I am missing?

(anomalib) C:\Users\me>benchmark_app -m omz_models/intel/asl-recognition-0004/FP16/asl-recognition-0004.xml -d GPU -hint throughput

[Step 1/11] Parsing and validating input arguments

[ WARNING ] -nstreams default value is determined automatically for a device. Although the automatic selection usually provides a reasonable performance, but it still may be non-optimal for some cases, for more information look at README.

[Step 2/11] Loading OpenVINO

[ INFO ] OpenVINO:

API version............. 2022.1.0-7019-cdb9bec7210-releases/2022/1

[ INFO ] Device info

GPU

Intel GPU plugin........ version 2022.1

Build................... 2022.1.0-7019-cdb9bec7210-releases/2022/1

[Step 3/11] Setting device configuration

[Step 4/11] Reading network files

[ INFO ] Read model took 78.13 ms

[Step 5/11] Resizing network to match image sizes and given batch

[ INFO ] Network batch size: 1

[Step 6/11] Configuring input of the model

[ INFO ] Model input 'input' precision f32, dimensions ([N,C,D,H,W]): 1 3 16 224 224

[ INFO ] Model output 'output' precision f32, dimensions ([...]): 1 100

[Step 7/11] Loading the model to the device

[ INFO ] Compile model took 8302.98 ms

[Step 8/11] Querying optimal runtime parameters

[ INFO ] DEVICE: GPU

[ INFO ] AVAILABLE_DEVICES , ['0']

[ INFO ] RANGE_FOR_ASYNC_INFER_REQUESTS , (1, 2, 1)

[ INFO ] RANGE_FOR_STREAMS , (1, 2)

[ INFO ] OPTIMAL_BATCH_SIZE , 1

[ INFO ] MAX_BATCH_SIZE , 1

[ INFO ] FULL_DEVICE_NAME , Intel(R) UHD Graphics 630 (iGPU)

[ INFO ] OPTIMIZATION_CAPABILITIES , ['FP32', 'BIN', 'FP16']

[ INFO ] GPU_UARCH_VERSION , 9.0.0

[ INFO ] GPU_EXECUTION_UNITS_COUNT , 24

[ INFO ] PERF_COUNT , False

[ INFO ] GPU_ENABLE_LOOP_UNROLLING , True

[ INFO ] CACHE_DIR ,

[ INFO ] COMPILATION_NUM_THREADS , 16

[ INFO ] NUM_STREAMS , 1

[ INFO ] PERFORMANCE_HINT_NUM_REQUESTS , 0

[ INFO ] DEVICE_ID , 0

[Step 9/11] Creating infer requests and preparing input data

[ INFO ] Create 4 infer requests took 31.64 ms

[ WARNING ] No input files were given for input 'input'!. This input will be filled with random values!

[ INFO ] Fill input 'input' with random values

[Step 10/11] Measuring performance (Start inference asynchronously, 4 inference requests, inference only: True, limits: 60000 ms duration)

[ INFO ] Benchmarking in inference only mode (inputs filling are not included in measurement loop).

[ INFO ] First inference took 85.86 ms

[Step 11/11] Dumping statistics report

Count: 816 iterations

Duration: 60549.62 ms

Latency:

Median: 295.50 ms

AVG: 296.06 ms

MIN: 152.10 ms

MAX: 344.38 ms

Throughput: 13.48 FPS

(anomalib) C:\Users\me>benchmark_app -m omz_models/intel/asl-recognition-0004/FP16/asl-recognition-0004.xml -d CPU -hint latency

[Step 1/11] Parsing and validating input arguments

[ WARNING ] -nstreams default value is determined automatically for a device. Although the automatic selection usually provides a reasonable performance, but it still may be non-optimal for some cases, for more information look at README.

[Step 2/11] Loading OpenVINO

[ INFO ] OpenVINO:

API version............. 2022.1.0-7019-cdb9bec7210-releases/2022/1

[ INFO ] Device info

CPU

openvino_intel_cpu_plugin version 2022.1

Build................... 2022.1.0-7019-cdb9bec7210-releases/2022/1

[Step 3/11] Setting device configuration

[Step 4/11] Reading network files

[ INFO ] Read model took 34.31 ms

[Step 5/11] Resizing network to match image sizes and given batch

[ INFO ] Network batch size: 1

[Step 6/11] Configuring input of the model

[ INFO ] Model input 'input' precision f32, dimensions ([N,C,D,H,W]): 1 3 16 224 224

[ INFO ] Model output 'output' precision f32, dimensions ([...]): 1 100

[Step 7/11] Loading the model to the device

[ INFO ] Compile model took 232.60 ms

[Step 8/11] Querying optimal runtime parameters

[ INFO ] DEVICE: CPU

[ INFO ] AVAILABLE_DEVICES , ['']

[ INFO ] RANGE_FOR_ASYNC_INFER_REQUESTS , (1, 1, 1)

[ INFO ] RANGE_FOR_STREAMS , (1, 16)

[ INFO ] FULL_DEVICE_NAME , Intel(R) Core(TM) i7-10700 CPU @ 2.90GHz

[ INFO ] OPTIMIZATION_CAPABILITIES , ['FP32', 'FP16', 'INT8', 'BIN', 'EXPORT_IMPORT']

[ INFO ] CACHE_DIR ,

[ INFO ] NUM_STREAMS , 1

[ INFO ] INFERENCE_NUM_THREADS , 0

[ INFO ] PERF_COUNT , False

[ INFO ] PERFORMANCE_HINT_NUM_REQUESTS , 0

[Step 9/11] Creating infer requests and preparing input data

[ INFO ] Create 1 infer requests took 0.00 ms

[ WARNING ] No input files were given for input 'input'!. This input will be filled with random values!

[ INFO ] Fill input 'input' with random values

[Step 10/11] Measuring performance (Start inference asynchronously, 1 inference requests, inference only: True, limits: 60000 ms duration)

[ INFO ] Benchmarking in inference only mode (inputs filling are not included in measurement loop).

[ INFO ] First inference took 63.08 ms

[Step 11/11] Dumping statistics report

Count: 1917 iterations

Duration: 60029.65 ms

Latency:

Median: 31.37 ms

AVG: 31.22 ms

MIN: 22.52 ms

MAX: 51.78 ms

Throughput: 31.93 FPS


Hi Spiovesan,

This thread will no longer be monitored since we have provided information. If you need any additional information from Intel, please submit a new question.

Regards,

Aznie


Hi Spiovesan,

Thanks for reaching out.

First, the runs above compare GPU in throughput mode (-hint throughput) with CPU in latency mode (-hint latency), so the two numbers are not directly comparable.
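For a like-for-like check outside Benchmark App, here is a minimal sketch that compiles the same model on each device with the same `PERFORMANCE_HINT` and times synchronous `infer()` calls, one request at a time. It assumes the OpenVINO 2022.1+ Python API (`openvino.runtime.Core`) and NumPy are installed; the helper names `median_latency_ms` and `compare_devices` are hypothetical, and the default input shape is copied from the log in the question.

```python
import time
from statistics import median
from typing import Callable, Dict, Sequence, Tuple


def median_latency_ms(infer_once: Callable[[], object], warmup: int = 5, runs: int = 50) -> float:
    """Call `infer_once` synchronously (one request at a time) and return the median time in ms."""
    for _ in range(warmup):
        infer_once()  # warm-up calls are not measured
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer_once()
        samples.append((time.perf_counter() - start) * 1000.0)
    return median(samples)


def compare_devices(
    model_xml: str,
    devices: Sequence[str] = ("CPU", "GPU"),
    hint: str = "LATENCY",
    input_shape: Tuple[int, ...] = (1, 3, 16, 224, 224),  # N,C,D,H,W from the log above
) -> Dict[str, float]:
    """Hypothetical helper: same model, same hint, same input on every device."""
    # Imported lazily so the timing helper above works without OpenVINO installed.
    import numpy as np
    from openvino.runtime import Core

    core = Core()
    model = core.read_model(model_xml)
    data = np.random.rand(*input_shape).astype(np.float32)  # random input, like benchmark_app
    results = {}
    for device in devices:
        compiled = core.compile_model(model, device, {"PERFORMANCE_HINT": hint})
        request = compiled.create_infer_request()
        results[device] = median_latency_ms(lambda: request.infer({0: data}))
    return results
```

Timing synchronous calls keeps exactly one request in flight, so the result reflects per-inference latency rather than queueing behind other requests.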

For your information, Benchmark App runs inference in asynchronous mode by default. The reported latency is measured per inference request, from submission to completion, so when several requests are in flight it also includes the time each request spends waiting behind the others.
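As a rough consistency check, assuming the device works through the in-flight requests one after another, the latency seen by each request is about (number of requests) ÷ throughput. Plugging in the numbers from the two logs in this thread reproduces the reported averages, which suggests the 295 ms GPU figure mostly reflects 4 queued requests; the GPU's per-inference cost is closer to 1000 / 13.48 ≈ 74 ms, which is still slower than the CPU's ~31 ms. The function name is illustrative.

```python
def expected_async_latency_ms(num_requests: int, throughput_fps: float) -> float:
    """Approximate per-request latency when `num_requests` are kept in flight.

    If the device completes `throughput_fps` inferences per second and each
    request waits behind the others, one request takes about
    num_requests / throughput seconds from submission to completion.
    """
    return num_requests / throughput_fps * 1000.0


# Numbers copied from the two benchmark_app logs in this thread.
gpu = expected_async_latency_ms(num_requests=4, throughput_fps=13.48)  # reported AVG: 296.06 ms
cpu = expected_async_latency_ms(num_requests=1, throughput_fps=31.93)  # reported AVG: 31.22 ms
print(f"GPU: {gpu:.1f} ms, CPU: {cpu:.1f} ms")  # prints "GPU: 296.7 ms, CPU: 31.3 ms"
```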

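In addition, Benchmark App picks the number of inference requests based on the device and the performance hint, so the defaults differ between devices: with default parameters, 4 inference requests are created on CPU while 16 are created on GPU. In your runs above, the GPU used 4 requests and the CPU used only 1.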

You can re-run Benchmark App with the same hint and the same number of inference requests (-nireq) on both CPU and GPU, then compare the results again. The numbers may still vary between runs, but that is acceptable as long as the values for the two devices are reasonably close.

benchmark_app -m asl-recognition-0004.xml -d CPU -hint latency -nireq 4

benchmark_app -m asl-recognition-0004.xml -d GPU -hint latency -nireq 4

(Repeat both commands with -hint throughput to compare throughput mode.)
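The runs can also be scripted so both devices always get the same -hint and -nireq. This is a sketch that assumes benchmark_app is on PATH and prints the `Median:` and `Throughput:` lines shown in the logs above; the helper names are hypothetical and the regular expressions may need adjusting for other OpenVINO versions.

```python
import re
import subprocess
from typing import Dict, Optional

MEDIAN_RE = re.compile(r"Median:\s*([\d.]+)\s*ms")
THROUGHPUT_RE = re.compile(r"Throughput:\s*([\d.]+)\s*FPS")


def parse_report(log: str) -> Dict[str, Optional[float]]:
    """Extract median latency (ms) and throughput (FPS) from benchmark_app output."""
    median = MEDIAN_RE.search(log)
    fps = THROUGHPUT_RE.search(log)
    return {
        "median_ms": float(median.group(1)) if median else None,
        "fps": float(fps.group(1)) if fps else None,
    }


def run_benchmark(model_xml: str, device: str, hint: str, nireq: int = 4) -> Dict[str, Optional[float]]:
    """Run benchmark_app with a fixed -nireq so CPU and GPU are compared like for like."""
    cmd = ["benchmark_app", "-m", model_xml, "-d", device, "-hint", hint, "-nireq", str(nireq)]
    # Merge stderr into stdout, since log lines may be written to either stream.
    out = subprocess.run(cmd, stdout=subprocess.PIPE, stderr=subprocess.STDOUT, text=True, check=True).stdout
    return parse_report(out)
```

For example, calling `run_benchmark("asl-recognition-0004.xml", device, "latency")` for each device in `("CPU", "GPU")`, then repeating with `"throughput"`, gives four results measured under matching settings.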

Below is my validation on CPU and GPU:

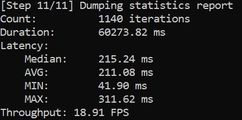

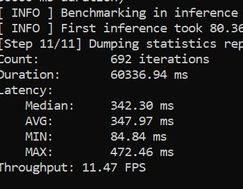

1)Latency mode

CPU:

GPU:

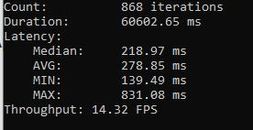

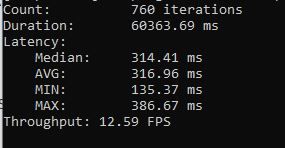

2) Throughput mode

CPU:

GPU:

Regards,

Aznie
