Hi All,

I have trouble running mpirun (2019.5.281) on a node with Intel Xeon Gold processors. Running a simple 'mpirun hostname' command results in a single hydra_pmi_proxy process hanging indefinitely and keeping one CPU core at 100% load, without any error messages. The hostname is never returned.

The command below:

$ I_MPI_DEBUG=16 I_MPI_HYDRA_DEBUG=on FI_LOG_LEVEL=debug mpirun hostname

returns this and no other error messages:

[mpiexec@balerion] Launch arguments: /opt/intel/compilers_and_libraries_2019.5.281/linux/mpi/intel64/bin//hydra_bstrap_proxy --upstream-host balerion --upstream-port 43589 --pgid 0 --launcher ssh --launcher-number 0 --base-path /opt/intel/compilers_and_libraries_2019.5.281/linux/mpi/intel64/bin/ --tree-width 16 --tree-level 1 --time-left -1 --collective-launch 1 --debug --proxy-id 0 --node-id 0 --subtree-size 1 --upstream-fd 7 /opt/intel/compilers_and_libraries_2019.5.281/linux/mpi/intel64/bin//hydra_pmi_proxy --usize -1 --auto-cleanup 1 --abort-signal 9

I tried setting some environment variables suggested for similar (but slightly different) problems, e.g. FI_PROVIDER=tcp, but it didn't help.

Exactly the same version of MPI works without issues on older Broadwell CPUs (same cluster, same Linux version) and even on a brand new Intel Xeon Silver node. I would really appreciate any clues and suggestions about the possible origin of this problem and how it could be solved.

Thank you in advance!


Hi,

Thanks for reaching out to us.

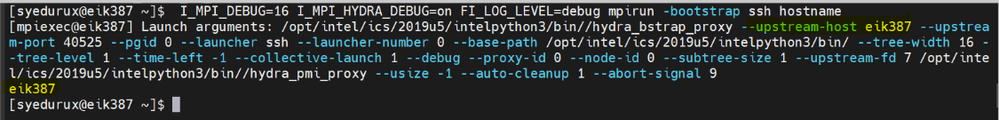

We tried running the below command on a node with Intel(R) Xeon(R) Gold 6342 CPU using Intel MPI 2019.5.281 and it is working fine at our end.

I_MPI_DEBUG=16 I_MPI_HYDRA_DEBUG=on FI_LOG_LEVEL=debug mpirun -bootstrap ssh hostname

output from my end:

Could you please try running a sample hello world program (attached below) with the commands below and share the complete debug log with us?

mpiicc hello.c

I_MPI_DEBUG=16 I_MPI_HYDRA_DEBUG=on FI_LOG_LEVEL=debug mpirun -bootstrap ssh -n 2 ./a.out
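One way to collect the complete debug log for sharing is to wrap the launch in a small helper that sets the same three variables and copies all output to a file. This is only a sketch: the function name `run_with_debug_log` and the log file name in the usage line are made up for illustration and are not part of Intel MPI.

```shell
# Run a command with the debug variables used in this thread and save
# stdout and stderr to a log file while still printing them.
# "run_with_debug_log" is a hypothetical helper name.
run_with_debug_log() {
    log_file=$1
    shift
    env I_MPI_DEBUG=16 I_MPI_HYDRA_DEBUG=on FI_LOG_LEVEL=debug "$@" 2>&1 | tee "$log_file"
}

# Usage (mpi_debug.log is an arbitrary file name):
#   run_with_debug_log mpi_debug.log mpirun -bootstrap ssh -n 2 ./a.out
```

Note that the helper returns the exit status of `tee`, not of the wrapped command, so it is meant for log collection rather than for checking whether the launch succeeded.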

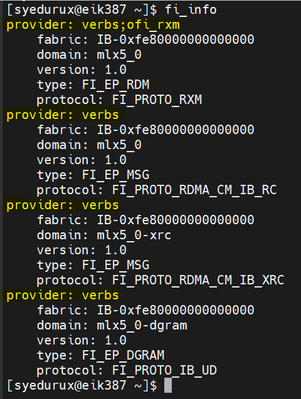

Also, could you please provide the output for the below command?

fi_info

sample output from my end:

You can try any one of the providers from the fi_info output to set the value of FI_PROVIDER. Usually, to run an MPI job on a single node, specifying FI_PROVIDER is not essential.

Example:

export FI_PROVIDER=verbs
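To see which values are candidates for FI_PROVIDER, the provider names can be pulled out of the fi_info output. The following is a minimal sketch, assuming the `provider: <name>` line format shown in this thread; `list_providers` is a hypothetical helper name.

```shell
# Print each distinct provider name found in fi_info output read from stdin.
# Assumes lines of the form "provider: <name>", as in the output in this thread.
list_providers() {
    awk '$1 == "provider:" && !seen[$2]++ { print $2 }'
}

# Usage, assuming fi_info is on PATH:
#   fi_info | list_providers
```

Each printed name can then be tried in turn with `export FI_PROVIDER=<name>` before rerunning the test command.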

Thanks & Regards,

Santosh

Thank you for your answer!

I do not have an up-to-date license for mpiicc and only intend to use the runtime, so I cannot compile the hello.c code. However, hostname and any other command or program executed in the suggested way (e.g. date, echo) produces no output and spawns the hanging hydra_pmi_proxy process. Every time, I need to abort mpirun with Ctrl+C and then kill hydra_pmi_proxy.
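While the hang is being investigated, the manual Ctrl+C and kill steps can be scripted. The sketch below assumes GNU coreutils `timeout` and procps `pkill` are installed; `run_bounded` is a hypothetical helper name, and it only touches hydra_pmi_proxy processes owned by the current user.

```shell
# Run a command with a time limit; if it hangs, report it and remove any
# leftover hydra_pmi_proxy processes belonging to the current user.
# "run_bounded" is a hypothetical helper name.
run_bounded() {
    limit=$1
    shift
    rc=0
    # timeout sends TERM after $limit seconds, then KILL 5 seconds later.
    timeout -k 5 "$limit" "$@" || rc=$?
    # 124 means the limit was reached; 137 means KILL was needed.
    if [ "$rc" -eq 124 ] || [ "$rc" -eq 137 ]; then
        echo "command did not finish within ${limit}s" >&2
        if command -v pkill >/dev/null 2>&1; then
            pkill -KILL -u "$(id -u)" -x hydra_pmi_proxy || true
        fi
    fi
    return "$rc"
}

# Usage:
#   run_bounded 30 mpirun hostname
```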

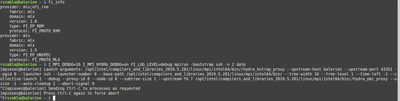

I'm attaching a screenshot including the output of fi_info:

~ $ fi_info
provider: mlx;ofi_rxm
    fabric: mlx
    domain: mlx
    version: 1.0
    type: FI_EP_RDM
    protocol: FI_PROTO_RXM
provider: mlx
    fabric: mlx
    domain: mlx
    version: 1.5
    type: FI_EP_UNSPEC
    protocol: FI_PROTO_MLX

My node is equipped with the Intel(R) Xeon(R) Gold 6248R CPU.

Thanks,

Rafal

Hi,

Since even a simple "mpirun hostname" command is failing, there might be an installation or setup issue with Intel MPI at your end.

We recommend reinstalling the existing Intel MPI Library and trying again, or upgrading to the latest Intel MPI Library, which is available as a standalone product and as part of the Intel® oneAPI HPC Toolkit.

For downloading HPC Toolkit refer to the below link:

https://www.intel.com/content/www/us/en/developer/tools/oneapi/hpc-toolkit-download.html

Refer to the below link on how to start with Intel MPI Library:

Thanks & Regards,

Santosh

Dear Santosh,

Thank you so much for taking the time to help me. In the end, it turned out that our Linux (Gentoo) kernel had been compiled with incorrect settings, which hindered communication between the processes; we missed something at the configuration step. As a result, no version of Intel MPI worked for us, even though OpenMPI did. When we tested another Linux distribution in live mode, Intel MPI worked, so we recompiled our Gentoo kernel with a functional configuration, which solved the problem. I still had to export FI_PROVIDER=tcp (or mlx) for MPI programs to run properly. We are still not sure what the original source of the problem with the old kernel was, since the configuration contains many variables, packages and drivers.
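For anyone trying to narrow down which kernel option matters in a similar case, comparing the old and new kernel configuration files is a possible starting point. This is only a sketch and not something done in this thread: `kconfig_diff` is a hypothetical helper name, and the file names in the usage line are placeholders.

```shell
# Print the CONFIG_ lines that differ between two kernel config files.
# Lines starting with "<" come from the first file, ">" from the second.
# "kconfig_diff" is a hypothetical helper name.
kconfig_diff() {
    old_sorted=$(mktemp) || return 1
    new_sorted=$(mktemp) || return 1
    # Keep both set options and "# CONFIG_X is not set" lines.
    grep -E '^(# )?CONFIG_' "$1" | LC_ALL=C sort > "$old_sorted"
    grep -E '^(# )?CONFIG_' "$2" | LC_ALL=C sort > "$new_sorted"
    diff "$old_sorted" "$new_sorted" | grep '^[<>]'
    rm -f "$old_sorted" "$new_sorted"
}

# Usage (placeholder file names):
#   kconfig_diff config-broken config-working
```

The output can still be long, but it limits the search to options that actually changed between the non-working and working kernels.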

Thanks once again!

Hi,

Glad to know that your issue is resolved. Thanks for sharing the solution with us. If you need any additional information, please post a new question as this thread will no longer be monitored by Intel.

Thanks & Regards,

Santosh
