```python
import time

from mpi4py import MPI

comm = MPI.COMM_WORLD

if comm.rank != 0:
    time.sleep(1)
    print('rank 1 exit...')
else:
    time.sleep(10000)
    print('rank 0 exit...')
```

In the above program, the non-zero ranks do not exit after finishing their own work. Instead, they all wait for rank 0 to finish and then exit together. Meanwhile, these idle non-zero processes keep occupying computing resources.

When using mpi4py with Intel MPI, how can I make each launched process exit normally as soon as it completes its own task, instead of waiting for all other processes to finish and exiting together?

Hi,

>>"how can I achieve that each process launched exits normally after completing its own task, and not wait for all other processes to finish before exiting together?"

All processes must call the MPI_Finalize routine before exiting. Otherwise, the result is undefined behavior, as @dalcinl said.

Implementing your scenario would result in undefined behavior, and it is not recommended because it does not follow the rules of MPI.

Thanks & Regards,

Santosh

Hi,

Thanks for posting in the Intel communities.

By default, each process will exit normally after completing its own task, and will not wait for any other processes to finish before exiting.

Please refer to the code below, in which each process performs its own task and exits normally without waiting for other processes to finish:

```python
import time

from mpi4py import MPI

comm = MPI.COMM_WORLD

if comm.rank != 0:
    time.sleep(1)
    print('rank [', comm.rank, '] started...', sep="")
else:
    time.sleep(10)
    print('rank [', comm.rank, '] started...', sep="")

print('rank [', comm.rank, '] ended...', sep="")
```

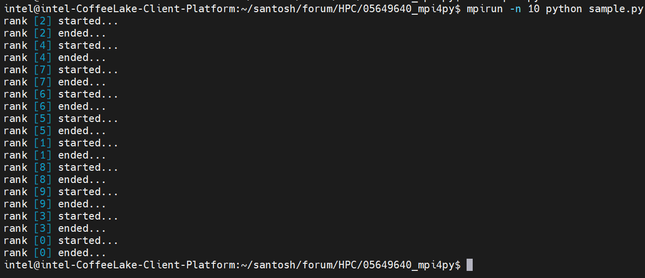

Output:

If we want all the processes to exit together, we can use Barrier() to synchronize them, as shown in the code below:

```python
import time

from mpi4py import MPI

comm = MPI.COMM_WORLD

if comm.rank != 0:
    time.sleep(1)
    print('rank [', comm.rank, '] started...', sep="")
else:
    time.sleep(10)
    print('rank [', comm.rank, '] started...', sep="")

# Every rank waits here until all ranks have arrived
comm.Barrier()

print('rank [', comm.rank, '] ended...', sep="")
```

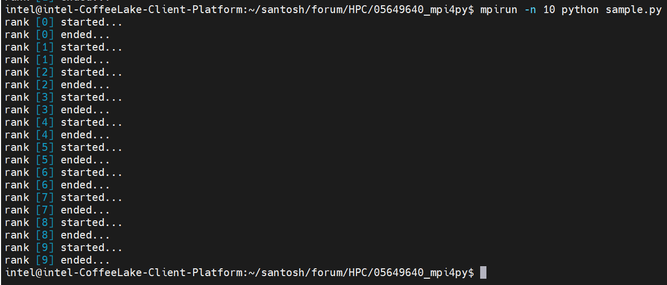

Output:

If this resolves your issue, please accept it as a solution. This helps others with similar issues. Thank you!

Best Regards,

Santosh

First of all, thank you for your reply. However, I didn't see your code. Secondly, have you tried the code I posted? Maybe mpi4py automatically calls the MPI_Finalize function when the process exits.

```python
import time

from mpi4py import MPI

comm = MPI.COMM_WORLD

if comm.rank != 0:
    time.sleep(1)
    print('rank [', comm.rank, '] started...', sep="")
else:
    # I think the sleep time of this process should be extended, and a tool
    # like "top" should be used to observe whether the other short-lived
    # processes exit after completing their sleep. If this process sleeps
    # only briefly, the result of this program is your output. However, the
    # output alone cannot tell us whether the short-lived processes are
    # waiting for this long-lived process to end.
    time.sleep(10)
    print('rank [', comm.rank, '] started...', sep="")

print('rank [', comm.rank, '] ended...', sep="")
```

Hi,

I posted the sample code along with the outputs in my previous post, but it might take some time to appear on your end. Could you please check my previous post again? If you are still unable to see the code, please let us know and we will repost it.

Thanks & Regards,

Santosh

By default, mpi4py automatically initializes and finalizes MPI. Since the call to `MPI_Finalize` is collective, your non-zero ranks, which finish their short sleep first, block in `MPI_Finalize` waiting for rank 0 to reach the call.

If you do not want mpi4py to automatically finalize MPI, you can do the following:

```python
import mpi4py

mpi4py.rc.finalize = False  # must be set before importing mpi4py.MPI

from mpi4py import MPI
```

Nonetheless, be aware that code written this way may not be portable to other MPI implementations. To the best of my understanding, MPI_Finalize() should be called for clean parallel termination of your application.
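The blocking behavior described in this post can be illustrated with a small pure-Python analogy. This is not MPI code and does not use mpi4py: each "rank" is a thread, and the collective finalize step is modeled with `threading.Barrier`, assuming an implementation whose `MPI_Finalize` does not return on any rank until every rank has called it, as observed in this thread. The `run_model` helper and the workload values are hypothetical, scaled down from the original example.

```python
import threading
import time

# Hypothetical per-rank workloads in seconds (rank 0 runs much longer).
TASK_SECONDS = {0: 0.5, 1: 0.05, 2: 0.05, 3: 0.05}


def run_model(task_seconds):
    """Model ranks whose shutdown waits for every rank, like a collective
    MPI_Finalize (an analogy, not MPI). Returns {rank: (task_done, exited)}
    as seconds elapsed since the start."""
    finalize = threading.Barrier(len(task_seconds))  # stand-in for MPI_Finalize
    start = time.monotonic()
    results = {}
    lock = threading.Lock()

    def rank_main(rank):
        time.sleep(task_seconds[rank])  # the rank's own work
        task_done = time.monotonic() - start
        finalize.wait()  # blocks until all ranks have arrived
        exited = time.monotonic() - start
        with lock:
            results[rank] = (task_done, exited)

    threads = [threading.Thread(target=rank_main, args=(r,)) for r in task_seconds]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    for rank, (done, exited) in sorted(run_model(TASK_SECONDS).items()):
        print(f"rank {rank}: task done at {done:.2f}s, exited at {exited:.2f}s")
```

In this model, ranks 1 to 3 finish their task almost immediately but only get past the barrier once rank 0 has finished, which mirrors the idle but still running processes described in the question.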

```
(py3.9) ➜ /share mpirun -n 10 python test.py
rank 1 exit...
rank 1 exit...
rank 1 exit...
rank 1 exit...
rank 1 exit...
rank 1 exit...
rank 1 exit...
rank 1 exit...
rank 1 exit... #all 10 processes exit immediately including process 0
```

Following your suggestion, I launched 10 processes. The 9 non-zero processes exited, and then all processes of the whole program exited as well (even though process 0 had not finished executing at that point!).

Sorry, but something is wrong. All your lines say `rank 1 exited`. You are most likely not running exactly the same code you posted before.

Anyway, I have to insist: you are trying to use MPI in a way it was not designed to work. All MPI processes should eventually call MPI_Finalize() before ending execution, and MPI_Finalize() is a collective and blocking operation.

Perhaps you should comment on what exactly you are trying to accomplish so that we can provide some informed advice on the best approach to tackle your needs.

```python
import mpi4py

mpi4py.rc.finalize = False

from mpi4py import MPI

import sys
import time

comm = MPI.COMM_WORLD

if comm.rank != 0:
    time.sleep(5)
    print('rank 1 exit...')
else:
    time.sleep(10000)
    print('rank 0 exit...')
```

I am using this code. What results do you get when you run it?

Right now I'm running MPICH on macOS; I don't have access to my Linux workstation. I get output from all ranks except rank 0. I'm not sure why process 0 is not printing. Perhaps it is just an I/O issue, or mpiexec is killing the rogue process 0.

In any case, that's what happens when you ignore the rules: you get undefined and weird behavior. I insist: do yourself a favor and DO NOT rely on my suggestion to avoid the blocking call to MPI_Finalize(). Otherwise, you may end up with code that works today but stops working with the next Intel MPI update, not to mention if you ever try a different MPI implementation or even a different platform or computing environment.

If that is the case, my original question is not resolved, and we are back where we started.

Hi,

Thanks for accepting our solution. If you need any additional information, please post a new question as this thread will no longer be monitored by Intel.

Thanks & Regards,

Santosh
