Hi,

Edit: The problem is solved.

I had to add the -fsycl-targets=spir64_gen-unknown-unknown-sycldevice switch, and now the program runs as expected regardless of the kernel code present in the included libraries.

I noticed that adding -fsycl-link-targets=spir64_gen-unknown-unknown-sycldevice also solves the issue, but -fsycl-link did not help. Is there any information on what these switches do?

--------------------------------------

I am porting Blender's Cycles library to oneAPI. I have two files containing around 30-40 functions that call various oneAPI functions, such as creating buffers and images, and then launch a kernel. These files are part of a library, and none of these functions is ever called.

Now I added a simple function to my test file which just adds two small buffers (barely 1K each).

Code:

void util_add(int *a, int *b, int *c, sycl::nd_item<3> item_ct1)

{

int x = item_ct1.get_local_range().get(2) * item_ct1.get_group(2) +

item_ct1.get_local_id(2);

int y = item_ct1.get_local_range().get(1) * item_ct1.get_group(1) +

item_ct1.get_local_id(1);

int i = y * item_ct1.get_global_range().get(2) + x;

c[i] = a[i] + b[i];

}

#define SIZE_X 8

#define SIZE_Y 4

#define SIZE (SIZE_X*SIZE_Y)

void util_mem_test(int *ap, int *bp, int *cp)

{

std::iota(ap, ap+SIZE, 0);

std::iota(bp, bp+SIZE, 0);

std::cout << "util_mem_test:: \n";

sycl::queue &q_ct1 = dpct::get_current_device().default_queue();

try{

// execution parameters

sycl::range<3> blocks(SIZE_X, SIZE_Y, 1);

sycl::range<3> threads(1, 1, 1);

// prepare arguments and launch thread

q_ct1.submit([=](sycl::handler &cgh) {

auto dpct_global_range = blocks * threads;

cgh.parallel_for/* <class memory_test> */(

sycl::nd_range<3>(

sycl::range<3>(dpct_global_range.get(2), dpct_global_range.get(1),

dpct_global_range.get(0)),

sycl::range<3>(threads.get(2), threads.get(1), threads.get(0))),

[=, a= ap, b = bp, c = cp](sycl::nd_item<3> item_ct1) {

util_add(a, b, c, item_ct1);

});

}).wait();

}

catch (const sycl::exception &e)

{

/* If an exception is thrown, print the error message and return. */

std::cout <<"sycl::exception: " << e.what() << "\n";

return;

}

catch (const std::exception &e)

{

std::cout << "std::exception: " << e.what() << "\n";

return;

}

for(int i=0; i< SIZE; i++)

{

std::cout <<std::hex << /* ap[i] << "+" << bp[i] << "=" << */ cp[i] << " ";

}

std::cout << "\n";

}

void util_sycl_test()

{

int *ap, *bp, *cp;

dpct::dpct_malloc(&ap, SIZE * sizeof(int));

dpct::dpct_malloc(&bp, SIZE * sizeof(int));

dpct::dpct_malloc(&cp, SIZE * sizeof(int));

util_mem_test(ap, bp, cp);

}
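As a reading aid, the index arithmetic in util_add can be replayed on the host without any SYCL runtime. The sketch below is not part of the ported code: linear_index and emulate_util_add are hypothetical names, and it assumes the launch shape from the post (global range (1, 4, 8), local range (1, 1, 1)), under which get_local_id() is always 0 and get_global_range().get(2) equals SIZE_X. It only suggests that the 32 work-items cover indices 0 to 31 exactly once, so the kernel itself is tiny.

```cpp
#include <array>
#include <cassert>
#include <numeric>

// Sizes copied from the post.
constexpr int SIZE_X = 8;
constexpr int SIZE_Y = 4;
constexpr int SIZE = SIZE_X * SIZE_Y;

// Hypothetical host-only stand-in for the index math in util_add.
// group_x and group_y play the role of item_ct1.get_group(2) and
// item_ct1.get_group(1). With one work-item per group, the local id is 0.
constexpr int linear_index(int group_x, int group_y) {
    const int local_range = 1;  // threads(1, 1, 1) in the post
    const int local_id = 0;     // single work-item per work-group
    const int x = local_range * group_x + local_id;
    const int y = local_range * group_y + local_id;
    return y * SIZE_X + x;      // assumes get_global_range().get(2) == SIZE_X
}

// Replays the kernel serially on the host and returns c = a + b.
std::array<int, SIZE> emulate_util_add() {
    std::array<int, SIZE> a{}, b{}, c{};
    std::iota(a.begin(), a.end(), 0);
    std::iota(b.begin(), b.end(), 0);
    for (int gy = 0; gy < SIZE_Y; ++gy) {
        for (int gx = 0; gx < SIZE_X; ++gx) {
            const int i = linear_index(gx, gy);
            assert(i >= 0 && i < SIZE);  // every index stays inside the buffers
            c[i] = a[i] + b[i];
        }
    }
    return c;
}
```

Calling emulate_util_add() from a small main() and printing the result gives each element as twice its index, which is what the device version is expected to produce for the same iota-filled inputs.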

When I call util_sycl_test(), execution proceeds normally until it reaches cgh.parallel_for, but then the system becomes unresponsive for around 2 minutes while memory consumption keeps increasing. When it reaches around 11 GB, the program prints this error message:

util_mem_test::

LLVM ERROR: out of memory

Aborted (core dumped)

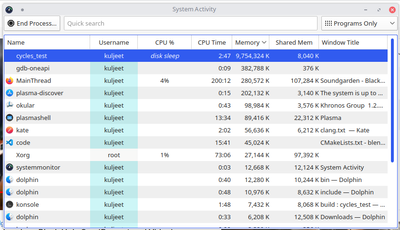

In the system activity monitor, cycles_test is already at 9.7 GB.

If I remove the two files containing oneAPI code from my library, it works fine again. I'm not sure what's happening. Any idea how I can find out what the problem is?

Edit: I tried to narrow down what might be going on. I commented out all the kernel code except for one function's kernel, and I still face the same issue. I think that when the runtime loads any kernel, it may try to compile all the kernel code it sees across all modules. My kernel code is quite large and calls many functions defined in header files, so the sheer amount of code may be what causes the system to run out of memory.

Is there any way to compile the kernel code at build time, so that at run time it only has to be pushed to the GPU when invoked?

Regards,

Gagan

Hi,

As you rightly pointed out, the device code needs to be generated at compile time in your case. This is known as ahead-of-time (AOT) compilation, and it produces a device-specific image. The default just-in-time (JIT) compilation instead embeds a generic SPIR-V image, which is compiled into device code at runtime.

The -fsycl-link flag instructs the compiler to link the device code without fully linking the host code. In other words, if you only change the host code, you don't have to compile the device code again. However, -fsycl-link does not take any targets, so it behaves like JIT, i.e. it links a generic SPIR-V image. -fsycl-link-targets, on the other hand, accepts a target triple and produces linked device images at compile time.

If -fsycl-targets is specified, the driver invokes the host compiler and the SYCL device compilers for the targets listed in the option. If -fsycl-targets is not specified, a single SPIR-V target is assumed and a single device compiler is invoked for it.

Kindly refer to the below links for more information:

Programming guide:

https://software.intel.com/content/www/us/en/develop/documentation/oneapi-programming-guide/top.html

DPC++ flags:

Thanks,

Rahul

Hi,

Any updates on this? Could you let me know if I can close this thread from my end?

Thanks,

Rahul

Hi Rahul,

Yes, you can close this thread. The issue was resolved.

Regards,

Gagan

Thanks for the confirmation. Intel will no longer monitor this thread. Any further discussion on this thread will be considered as a community discussion.
