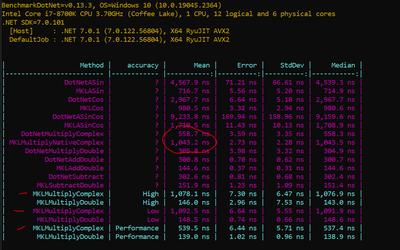

I've just discovered MKL and have created a few benchmarks in C#/.NET7 comparing vector operations in C# using a simple loop with the equivalent operation using MKL.

Setup: Intel Core i7-8700K, .NET 7, Visual Studio 17.4.2

Nuget package "intelmkl.redist.win-x64" Version="2022.2.1.19754"

400 element vector

Initially things looked good:

multiply double: vdMul 2.5x faster

Cos: 3x faster

Asin: 6x faster

but then:

multiply complex: half the speed

I then found this post: https://community.intel.com/t5/Intel-oneAPI-Math-Kernel-Library/Sequential-VML-performance/m-p/780873#M1461

and assumed that was the answer. However, testing with performance mode only achieved speed parity with a C# loop for complex multiplication.

Should I expect vzMul to run faster than the equivalent for loop in C#? If not, why not?
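For readers following along, the two variants being compared can be sketched roughly as below. This is a minimal sketch and not the code from the benchmark repository: the DLL name `mkl_rt.2.dll`, the LP64 interface (a 32-bit `n`), passing `Complex[]` directly to native code, and the class and method names are all assumptions made for illustration.

```csharp
using System;
using System.Numerics;
using System.Runtime.InteropServices;

public static class ComplexMultiplySketch
{
    // Assumption: the redist package places mkl_rt.2.dll next to the executable
    // and the default LP64 interface is in use, so MKL_INT maps to a 32-bit int.
    // Assumption: System.Numerics.Complex is laid out as two consecutive doubles
    // (real, imaginary), matching MKL_Complex16.
    [DllImport("mkl_rt.2.dll", CallingConvention = CallingConvention.Cdecl, ExactSpelling = true)]
    private static extern void vzMul(int n, [In] Complex[] a, [In] Complex[] b, [Out] Complex[] r);

    // Baseline: element-wise product using the Complex multiplication operator.
    public static void MultiplyLoop(Complex[] a, Complex[] b, Complex[] r)
    {
        for (int i = 0; i < a.Length; i++)
        {
            r[i] = a[i] * b[i];
        }
    }

    // MKL variant: a single native call for the whole vector.
    public static void MultiplyMkl(Complex[] a, Complex[] b, Complex[] r)
    {
        vzMul(a.Length, a, b, r);
    }
}
```

`MultiplyLoop` runs without MKL installed; `MultiplyMkl` only resolves the native library when it is first called.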

Hi Philip,

Thanks for posting on Intel communities.

With our current setup, we need to install extra components, which will take some time before we can try to reproduce this at our end. Once the installation is complete, we will update you on the findings.

Best Regards,

Shanmukh.SS

OK, no problem. You need VS 17.4.2, probably with just a basic workload.

I've put my benchmark code here: PhilPJL/MklBenchmark (github.com)

In general, I notice that performance is better for larger vectors.

Trig functions are faster than a C# loop, and much faster for large vectors (>10k elements).

Multiply double is faster for medium vectors (say 400 elements) and possibly slower for small ones (~40).

Multiply complex is slower unless using performance mode or large vectors.

Hi Philip,

We have set up the environment. However, we were unable to build the source code successfully, which might be due to an environment mismatch: we have .NET 6.0 with VS 17.2.2, whereas the project targets the .NET 7.0 SDK with VS 17.4.2. We will get back to you soon with an update.

Best Regards,

Shanmukh.SS

Hi Philip Lee,

A newer MKL NuGet package is now available. Could you please update to the latest NuGet package (2023.0.0.25930) and let us know if the issue persists?

Best Regards,

Shanmukh.SS

Hi, yes it does persist with a vector size of 400. I've updated the repo with the changes.

Also, high vs. low accuracy makes little difference.

I think your version numbers don't conform to semantic versioning standards and are confusing Visual Studio.

Hi Philip,

Thanks a lot for the update.

Could you please let us know whether we may merge the two requests below into this case? They share a similar code base, and merging them should make it easier for you to follow up on the issue.

- https://community.intel.com/t5/Intel-oneAPI-Math-Kernel-Library/Is-it-possible-to-use-the-embedded-Gpu-with-vml/m-p/1437768#M33989

- https://community.intel.com/t5/Intel-oneAPI-Math-Kernel-Library/Semantic-versioning/m-p/1443243#M34107

Best Regards,

Shanmukh.SS

Ok go ahead (although they are separate issues in my view).

Hi Philip,

We are discussing your issue internally and will get back to you with an update soon.

Apologies for the delay in responding.

Best Regards,

Shanmukh.SS

Hi Philip,

"Should I expect vzMul to run faster than the equivalent for loop in C#?"

The VM implementation is usually more accurate than compiler implementations of this operation (compiler implementations are often 'naive', and may cause spurious exceptions). The additional accuracy in the VM implementation comes with the price of somewhat lower performance, at least for certain vector lengths.

Best Regards,

Shanmukh.SS

Hi Philip,

A gentle reminder:

We have not heard back from you for a while. Could you please let us know if you need any other information, or whether we can close this case at our end?

Best Regards,

Shanmukh.SS

This is the implementation from disassembling C#:

    public static Complex operator *(Complex left, Complex right)
    {
        // Multiplication: (a + bi)(c + di) = (ac - bd) + (bc + ad)i
        double result_realpart = (left.m_real * right.m_real) - (left.m_imaginary * right.m_imaginary);
        double result_imaginarypart = (left.m_imaginary * right.m_real) + (left.m_real * right.m_imaginary);
        return new Complex(result_realpart, result_imaginarypart);
    }

Are you saying that your C code does something in addition to that?
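As an aside, the formula in the operator above is exact in real arithmetic, but in floating point the real part can lose every significant digit when `ac` and `bd` nearly cancel. The sketch below illustrates that with hand-picked inputs. It is not a description of what MKL does internally; the class and method names are hypothetical, and the fused multiply-add is used only to show the digits that the plain formula drops.

```csharp
using System;

public static class CancellationSketch
{
    // Real part of (a + bi)(c + di), computed as in the operator above:
    // both products are rounded to double before the subtraction.
    public static double NaiveReal(double a, double b, double c, double d)
    {
        return (a * c) - (b * d);
    }

    // Same quantity, but a*c is kept unrounded inside a fused multiply-add.
    // Only b*d is rounded beforehand (it is exact for the inputs below).
    public static double FusedReal(double a, double b, double c, double d)
    {
        return Math.FusedMultiplyAdd(a, c, -(b * d));
    }

    // Hand-picked inputs where a*c and b*d agree in all 53 bits after rounding.
    public static (double Naive, double Fused) Example()
    {
        double eps = 1.0 / (1 << 27);   // 2^-27, exactly representable
        double a = 1.0 + eps;           // a = c = 1 + 2^-27
        double c = 1.0 + eps;
        double b = 1.0;
        double d = 1.0 + 2.0 * eps;     // d = 1 + 2^-26
        return (NaiveReal(a, b, c, d), FusedReal(a, b, c, d));
    }
}
```

For these inputs the exact real part is 2^-54 (about 5.55e-17). `NaiveReal` returns 0 because `a * c` rounds to 1 + 2^-26, which equals `b * d`, while `FusedReal` returns 2^-54.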

Hi Philip,

HA/LA zmul is much more accurate than the compiler implementation, especially in cases close to cancellation. Moreover, zmul properly reports all overflow, underflow, and error domain cases. This is not a defect; it is a feature of MKL VM.

For maximum performance when full accuracy is not required, use the VM EP accuracy flavor or the compiler's complex multiplication.

At small problem sizes there can be a significant performance difference between a C# for loop and oneMKL. However, when the problem size increased to ~10^5, vzMul became the faster one. At that scale we should expect MKL vzMul to be faster, especially because vzMul uses all the cores by default.

Best Regards,

Shanmukh.SS
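The EP accuracy flavor mentioned above can be selected globally from C# through `vmlSetMode`. The following is a minimal sketch under stated assumptions: the DLL name `mkl_rt.2.dll` and the wrapper class are hypothetical choices for illustration, while the mode constants are the values defined in MKL's `mkl_vml_defines.h`.

```csharp
using System.Runtime.InteropServices;

public static class VmlModeSketch
{
    // Accuracy-mode constants from mkl_vml_defines.h.
    public const uint VML_LA = 0x00000001; // low accuracy
    public const uint VML_HA = 0x00000002; // high accuracy (the default)
    public const uint VML_EP = 0x00000003; // enhanced performance

    // Assumption: mkl_rt.2.dll is the runtime DLL shipped by the redist package.
    // vmlSetMode returns the mode that was in effect before the call.
    [DllImport("mkl_rt.2.dll", CallingConvention = CallingConvention.Cdecl, ExactSpelling = true)]
    private static extern uint vmlSetMode(uint mode);

    // Switches subsequent VM calls (such as vzMul) to the EP flavor
    // and returns the previous mode so that it can be restored later.
    public static uint UseEnhancedPerformance()
    {
        return vmlSetMode(VML_EP);
    }

    // Restores a mode value previously returned by UseEnhancedPerformance.
    public static void Restore(uint previousMode)
    {
        vmlSetMode(previousMode);
    }
}
```

Calling `UseEnhancedPerformance()` once before the benchmark loop, and `Restore(...)` afterwards, keeps the accuracy change local to the measurement.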

Hi Philip,

A gentle reminder:

Has the information provided helped? Could you please let us know if we could close this case on our end?

Best Regards,

Shanmukh.SS

Hi Philip,

Thanks for accepting our solution. If you need any additional information, please post a new question as this thread will no longer be monitored by Intel.

Best Regards,

Shanmukh.SS
