The test environment is a dual-socket Intel(R) Xeon(R) Silver 4410Y server with NUMA enabled. The configuration is as follows:

Each CPU has 12 physical cores and each physical core has 2 hyper-threads;

Clock frequency: 2.10GHz

L1 dcache: 1.1 MB

L1 icache: 768 KB

L2 cache: 48MB

L3 cache: 60MB

Local DDR Memory Type: DDR5-4800, Samsung;

Local CXL Memory Type: DDR4-3200, Micron;

Local DDR Memory Channel: 8

Local CXL Memory Channel: 2

Local DDR Memory Size: 32 GB x 8, one DIMM on each channel

Local CXL Memory Size: 16 GB, a single memory module on a single channel

The software environment configuration is as follows:

OS: Linux 6.15.4

GCC: 11.4.0

I found two issues while testing my current server with MLC:

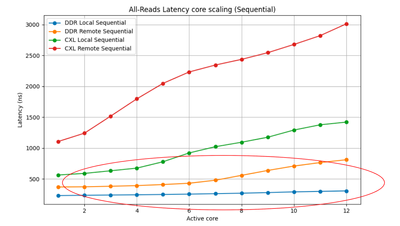

Question 1: When the CPU on the NUMA0 node accesses DDR memory on the NUMA1 node, the bandwidth gradually rises to a bottleneck as the number of parallel threads increases, and the latency rises as well. In the opposite direction, when the CPU on the NUMA1 node accesses DDR memory on the NUMA0 node, the bandwidth also rises to the bottleneck, but the latency does not change significantly and shows only a very small increase. The two scripts are identical except that the CPU and memory are bound to different NUMA nodes. What could be the reason for this?

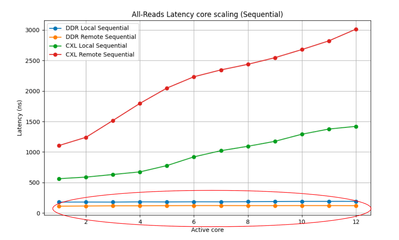

Question 2: Is it expected that the latency of the CPU on the NUMA1 node accessing local memory is higher than its latency accessing remote DDR?

The monitoring tool shows that the CPU and memory bindings are correct. As I understand it, the MLC latency test starts a timer at the beginning of the test, then continuously executes load instructions that access a memory region of the specified size, and records the number of load instructions executed during this stage and the total running time.
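To make that understanding concrete, here is a minimal sketch of the measurement idea in Python. It is not MLC's actual implementation: the use of a random pointer-chasing ring (so that each load depends on the previous one) is my assumption, and the names `build_chase_ring` and `measure_avg_latency_ns` are hypothetical. The average is simply the total running time divided by the number of loads. Interpreter overhead dominates the result, so the numbers do not reflect real DRAM latency.

```python
import random
import time


def build_chase_ring(n, seed=0):
    """Return a list where ring[i] is the index to visit after i.

    The indices form one random cycle covering all n slots, so each
    access depends on the previous one and cannot be overlapped.
    """
    order = list(range(n))
    random.Random(seed).shuffle(order)
    ring = [0] * n
    for pos, idx in enumerate(order):
        ring[idx] = order[(pos + 1) % n]
    return ring


def measure_avg_latency_ns(ring, loads):
    """Time `loads` dependent accesses; return (average ns, final index)."""
    idx = 0
    start = time.perf_counter_ns()  # timer started at the beginning of the test
    for _ in range(loads):
        idx = ring[idx]  # one dependent "load" per iteration
    elapsed = time.perf_counter_ns() - start
    # Average latency = total running time / number of loads executed.
    return elapsed / loads, idx


if __name__ == "__main__":
    ring = build_chase_ring(1 << 16)
    avg_ns, _ = measure_avg_latency_ns(ring, 1_000_000)
    print(f"average time per access: {avg_ns:.1f} ns (includes interpreter overhead)")
```

If the real tool works along these lines, anything that adds time to an individual load (for example queuing at a loaded memory controller or interconnect) shows up directly in the reported average.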

The test script for CPU binding on the NUMA1 node is as follows:

The test script for CPU binding on the NUMA0 node is as follows:

What is the reason for this behavior? Is there a problem with my test script, or have I misunderstood how the MLC tests work? Please reply as soon as possible.

- Tags:

- mlc
