To understand the advantages of the new built-in AI acceleration engines on Intel hardware, it helps to first understand two low-precision datatypes used in AI/ML workloads: INT8 and BF16. For inference, both are often a better fit than FP32, the default datatype most commonly associated with AI/ML. FP32 has a larger memory footprint and higher latency, whereas low-precision models using INT8 or BF16 compute faster. So why does FP32 remain the default? Because efficiently supporting these two datatypes requires special hardware features and instructions. Intel provides them in the form of Intel® Advanced Matrix Extensions (Intel® AMX) on 4th Gen Intel® Xeon® Scalable processors and Intel® Xᵉ Matrix Extensions (Intel® XMX) on the Intel® Data Center GPU Max Series and Intel® Data Center GPU Flex Series.
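To make the memory argument concrete, here is a minimal sketch of one common way to convert FP32 values to INT8: symmetric per-tensor quantization, where every value is divided by a single scale and rounded into [-127, 127]. The article does not prescribe a quantization scheme. `QuantizedTensor`, `quantize_int8`, and `dequantize` are hypothetical names used only for illustration, and production toolchains typically use calibrated or per-channel scales instead.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical container: INT8 codes plus one FP32 scale for the whole tensor.
struct QuantizedTensor {
    std::vector<std::int8_t> values;  // 1 byte per element instead of 4 for FP32
    float scale;                      // real value ~= scale * code
};

// Symmetric per-tensor quantization: map [-max|x|, +max|x|] onto [-127, 127].
QuantizedTensor quantize_int8(const std::vector<float>& x) {
    assert(!x.empty());
    float max_abs = 0.0f;
    for (float v : x) max_abs = std::max(max_abs, std::fabs(v));
    // Guard against an all-zero tensor, which would otherwise give scale 0.
    const float scale = (max_abs > 0.0f) ? max_abs / 127.0f : 1.0f;

    QuantizedTensor q{{}, scale};
    q.values.reserve(x.size());
    for (float v : x) {
        long code = std::lround(v / scale);            // round to nearest
        code = std::max(-127L, std::min(127L, code));  // clamp to the INT8 range
        q.values.push_back(static_cast<std::int8_t>(code));
    }
    return q;
}

// Recover an approximate FP32 value from its INT8 code.
float dequantize(const QuantizedTensor& q, std::size_t i) {
    return q.scale * static_cast<float>(q.values[i]);
}
```

Each INT8 code occupies one byte instead of four, and for values inside the calibrated range the rounding error is at most half the scale.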

Anything Goes: Datatypes Free-for-all

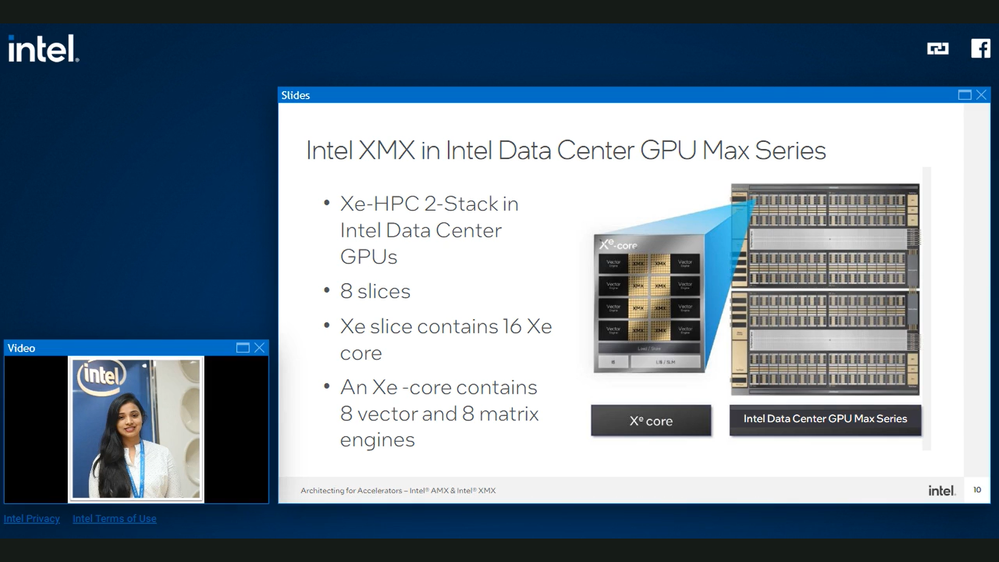

One way to enable support for these datatypes is the SYCL Joint Matrix Extension, a SYCL-based coding abstraction that invokes Intel® AMX and Intel® XMX, providing portability along with performance improvements to the relevant code. Intel® AMX consists of extensions to the x86 instruction set architecture (ISA) that use two-dimensional registers called tiles, on which accelerators can perform operations. On the GPU side, Intel® Xᵉ Matrix Extensions, also known by the name of their instruction, DPAS (dot product accumulate systolic), specialize in executing dot-product-and-accumulate operations on 2D systolic arrays. Intel® XMX supports a wide range of datatypes, including U8, S8, U4, S4, U2, S2, INT8, FP16, BF16, and TF32. Programming either engine through the joint matrix extension requires Intel® oneAPI Base Toolkit version 2023.1.0 or later.
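Both engines accelerate the same core pattern: multiply low-precision inputs and accumulate the results into a wider type. The following scalar sketch computes C += A × B with INT8 inputs and a 32-bit integer accumulator, using the plain loops that AMX tiles and XMX systolic arrays replace with hardware. It is ordinary C++ for illustration only. `dpa_int8` is a hypothetical name, and the code does not emit AMX or DPAS instructions.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Scalar reference for dot-product-and-accumulate:
// C (M x N) += A (M x K) * B (K x N), all row-major.
// INT8 inputs, INT32 accumulator.
void dpa_int8(const std::vector<std::int8_t>& A, const std::vector<std::int8_t>& B,
              std::vector<std::int32_t>& C, int M, int N, int K) {
    assert(A.size() == static_cast<std::size_t>(M) * K);
    assert(B.size() == static_cast<std::size_t>(K) * N);
    assert(C.size() == static_cast<std::size_t>(M) * N);
    for (int m = 0; m < M; ++m) {
        for (int n = 0; n < N; ++n) {
            std::int32_t acc = C[m * N + n];  // accumulate onto the existing C
            for (int k = 0; k < K; ++k) {
                // Widen before multiplying so products and sums stay exact.
                acc += static_cast<std::int32_t>(A[m * K + k]) *
                       static_cast<std::int32_t>(B[k * N + n]);
            }
            C[m * N + n] = acc;
        }
    }
}
```

The wide accumulator matters. A single INT8 × INT8 product can reach 16,384 in magnitude, so a sum of just a few such products can exceed the 16-bit range.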

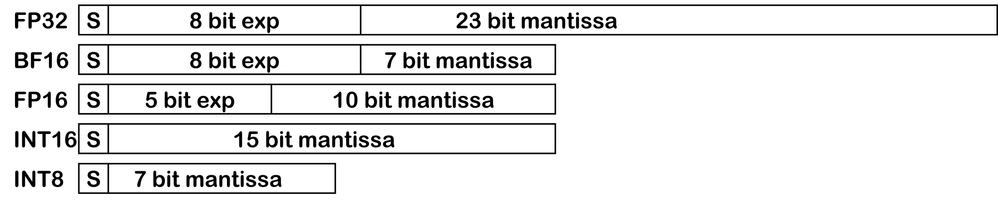

Some of the most commonly used 16-bit and 8-bit formats are 16-bit IEEE floating point (fp16), bfloat16 (bf16), 16-bit integer (int16), 8-bit integer (int8), and 8-bit Microsoft* floating point (ms-fp8). Figure 1 below visualizes the differences between some of these formats.

Figure 1. Various numerical datatype representations; s represents the sign bit. Note that FP32 and BF16 provide the same dynamic range, because both use an 8-bit exponent, but FP32 provides higher precision due to its larger mantissa (23 bits versus 7).
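The range-versus-precision point in Figure 1 follows directly from the bit layouts. BF16 keeps FP32's sign bit and 8-bit exponent and drops the low 16 mantissa bits. This sketch converts FP32 to BF16 with round-to-nearest-even on the discarded bits, then converts back. The function names are hypothetical, and real toolchains and hardware provide their own conversion routines.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <cstring>

// FP32 -> BF16: keep the upper 16 bits (sign, 8-bit exponent, 7 mantissa bits).
std::uint16_t fp32_to_bf16(float f) {
    std::uint32_t bits;
    std::memcpy(&bits, &f, sizeof bits);
    if (std::isnan(f)) {
        // Truncate and force the top mantissa bit so the result stays a quiet NaN.
        return static_cast<std::uint16_t>((bits >> 16) | 0x0040u);
    }
    // Round to nearest, ties to even, on the 16 low mantissa bits being dropped.
    const std::uint32_t lsb = (bits >> 16) & 1u;
    bits += 0x7FFFu + lsb;
    return static_cast<std::uint16_t>(bits >> 16);
}

// BF16 -> FP32 is exact: append 16 zero mantissa bits.
float bf16_to_fp32(std::uint16_t h) {
    const std::uint32_t bits = static_cast<std::uint32_t>(h) << 16;
    float f;
    std::memcpy(&f, &bits, sizeof f);
    return f;
}
```

A value like 3.0e38 survives the round trip as a finite number, whereas FP16 tops out at 65,504. By contrast, 1.001 collapses to 1.0, because near 1 BF16 only resolves steps of 2^-7.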

Invoking Intel® AMX and Intel® XMX

Intel® AMX and Intel® XMX are invoked at various levels of abstraction to create the seamless optimizations seen by the end user. Closest to the hardware is the CPU and GPU assembly layer, which provides the machine instructions. Above it are the CPU and GPU intrinsics levels, which encompass the variables and operations. At a more abstract coding level, above the intrinsics but below the libraries, is the Joint Matrix API, a SYCL extension that invokes Intel® AMX and Intel® XMX from SYCL code. This style of coding lets users scale their matrix programming across several vendors, invoking the systolic implementation of each platform. One level up are libraries such as the Intel® oneAPI Deep Neural Network Library (oneDNN) and Intel® oneAPI Math Kernel Library (oneMKL). At present, this library level is enabled only for Intel hardware, but it scales across supported CPUs and GPUs. From the Intel® AMX and Intel® XMX hardware all the way up through the libraries, Intel makes this acceleration available to end users. Application programmers can then focus on algorithmic improvements at the highest levels of abstraction, such as frameworks and AI applications.

Implementing Joint Matrix for Tensor Hardware Programming

Diving into the main innovation, the new SYCL Joint Matrix Extension for tensor hardware programming unifies targets such as Intel® Advanced Matrix Extensions (Intel® AMX) for CPUs, Intel® Xᵉ Matrix Extensions (Intel® XMX) for GPUs, and NVIDIA* Tensor Cores. The joint matrix extension consists of the following functions:

- joint_matrix_load and joint_matrix_store for explicit memory operations.
- joint_matrix_fill for matrix initialization.
- joint_matrix_mad for the multiply-and-add operation.
- get_wi_data for element-wise operations. It gives each work item access to its portion of the matrix so that the work item can operate on those elements.

Furthermore, to reach optimal performance on Intel® XMX, the GEMM kernel must be written to feed Intel® XMX continuously with the data it needs. This allows Intel® XMX to perform as many multiply-and-add operations as possible per cycle. (See the optimization guide here.)
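These functions map onto a tiled GEMM loop. First fill an accumulator tile. Then repeatedly load tiles of A and B and multiply-accumulate them, apply any element-wise operation, and finally store the result. This sketch emulates that structure in plain, single-threaded C++ so it runs anywhere. `Tile`, `tile_fill`, `tile_load`, `tile_mad`, `tile_store`, and the tile sizes are hypothetical stand-ins, not the SYCL API. The real `joint_matrix` type is parameterized by a group, element type, matrix use, shape, and layout, and its supported shapes depend on the target hardware. The ReLU epilogue illustrates the kind of fusion that get_wi_data enables.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical tile sizes; real joint_matrix shapes depend on the hardware.
constexpr int TM = 8, TN = 8, TK = 16;

// Stand-in for joint_matrix: an R x C row-major block held "in registers".
template <int R, int C>
struct Tile { std::array<float, R * C> v{}; };

// Stand-in for joint_matrix_fill.
template <int R, int C>
void tile_fill(Tile<R, C>& t, float x) { t.v.fill(x); }

// Stand-in for joint_matrix_load: copy an R x C block from memory with row stride ld.
template <int R, int C>
void tile_load(Tile<R, C>& t, const float* base, std::size_t ld) {
    for (int r = 0; r < R; ++r)
        for (int c = 0; c < C; ++c) t.v[r * C + c] = base[r * ld + c];
}

// Stand-in for joint_matrix_store: write an R x C block back to memory.
template <int R, int C>
void tile_store(const Tile<R, C>& t, float* base, std::size_t ld) {
    for (int r = 0; r < R; ++r)
        for (int c = 0; c < C; ++c) base[r * ld + c] = t.v[r * C + c];
}

// Stand-in for joint_matrix_mad: acc += a * b.
void tile_mad(Tile<TM, TN>& acc, const Tile<TM, TK>& a, const Tile<TK, TN>& b) {
    for (int m = 0; m < TM; ++m)
        for (int n = 0; n < TN; ++n) {
            float s = acc.v[m * TN + n];
            for (int k = 0; k < TK; ++k) s += a.v[m * TK + k] * b.v[k * TN + n];
            acc.v[m * TN + n] = s;
        }
}

// C = relu(A * B); A is M x K, B is K x N, row-major; dims are multiples of the tiles.
void gemm_relu(const std::vector<float>& A, const std::vector<float>& B,
               std::vector<float>& C, int M, int N, int K) {
    assert(M % TM == 0 && N % TN == 0 && K % TK == 0);
    assert(A.size() == static_cast<std::size_t>(M) * K);
    assert(B.size() == static_cast<std::size_t>(K) * N);
    C.assign(static_cast<std::size_t>(M) * N, 0.0f);
    for (int m0 = 0; m0 < M; m0 += TM)
        for (int n0 = 0; n0 < N; n0 += TN) {
            Tile<TM, TN> acc;
            tile_fill(acc, 0.0f);
            for (int k0 = 0; k0 < K; k0 += TK) {  // march along the shared K dimension
                Tile<TM, TK> a;
                Tile<TK, TN> b;
                tile_load(a, &A[m0 * K + k0], K);
                tile_load(b, &B[k0 * N + n0], N);
                tile_mad(acc, a, b);
            }
            // Fused element-wise epilogue applied before the single store.
            for (float& x : acc.v) x = std::max(x, 0.0f);
            tile_store(acc, &C[m0 * N + n0], N);
        }
}
```

Keeping the accumulator tile resident for the whole K loop and writing each C tile only once reduces memory traffic. That is one ingredient in keeping a matrix engine continuously fed with data.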

Frameworks like TensorFlow and libraries like oneDNN are often enough to handle most user needs. However, some users want to build their own neural network applications. For them, these frameworks and libraries can be too high-level and too heavy (very large libraries), and they do not offer a clear pathway for creating custom optimizations. Furthermore, large frameworks are often slow to add support for the frequent additions and changes to operations and tools in the evolving machine learning space. Such users may consider joint matrix instead. Its lower level of abstraction provides performance, productivity, and fusion capabilities while retaining portability, since a single code base can target different tensor hardware. Developers who want more control over their neural network projects and would like to try it out can find the SYCL Joint Matrix Extension specification here. We also encourage you to check out Intel’s other AI Tools and Framework optimizations and learn about the unified, open, standards-based oneAPI programming model that forms the foundation of Intel’s AI Software Portfolio.

See the video: Architecting for Accelerators

About our experts

Subarnarekha Ghosal

Technical Consulting Engineer

Intel

Subarnarekha is a Software Technology Consulting Engineer responsible for helping internal and external customers succeed on Intel platforms using Intel software development tools, specifically Intel compilers. She uses code modernization techniques to achieve optimal code performance on Intel central processing units (CPUs) and Intel graphics processing units (GPUs). Subarna has a master’s degree in Computer Science and has been working with C++ and computer architecture for more than 7 years.

Mayur Pandey

Technical Consulting Engineer

Intel

Mayur is a Software Technical Consulting Engineer at Intel with more than 12 years of experience working on various proprietary and open-source compilers and compiler tools, with a main focus on compiler front ends and optimizers. He also has experience in application benchmarking, performance evaluation, and tuning of applications on HPC clusters, as well as in enabling HPC applications on different architectures.

AI Software Marketing Engineer creating insightful content about the cutting-edge AI and ML technologies and software tools coming out of Intel

