Zhipeng Cai is a research scientist at Intel Labs. His research mainly focuses on fundamental computer vision and machine learning problems such as robust geometric perception, neural rendering, generative models and continual learning.

Highlights:

- Researchers at Intel Labs, in collaboration with Xiamen University and DJI, have introduced GIM, the first framework that can use unlabeled internet videos to train foundation AI models for zero-shot image matching.

- GIM steadily and significantly improves state-of-the-art architectures* as more video is used for training, following a scaling law.

- GIM has been accepted to ICLR 2024 as a spotlight presentation, an award given only to the top 5% of conference papers.

One of the most fundamental computer vision problems is finding corresponding pixels between two images, and image matching aims to solve this challenge. However, existing AI models cannot generalize to unseen scenarios due to the lack of labeled data. Hence, practitioners typically train separate models for different scene types.

By utilizing unlabeled videos from the internet, our Generalizable Image Matcher (GIM) is the first to enable a single image matching model to generalize to various zero-shot data, and the performance of the model keeps improving as the amount of video data grows. This technology could benefit many industrial applications, such as 3D reconstruction, neural rendering (NeRFs/Gaussian Splatting) and autonomous driving.

Existing Approaches

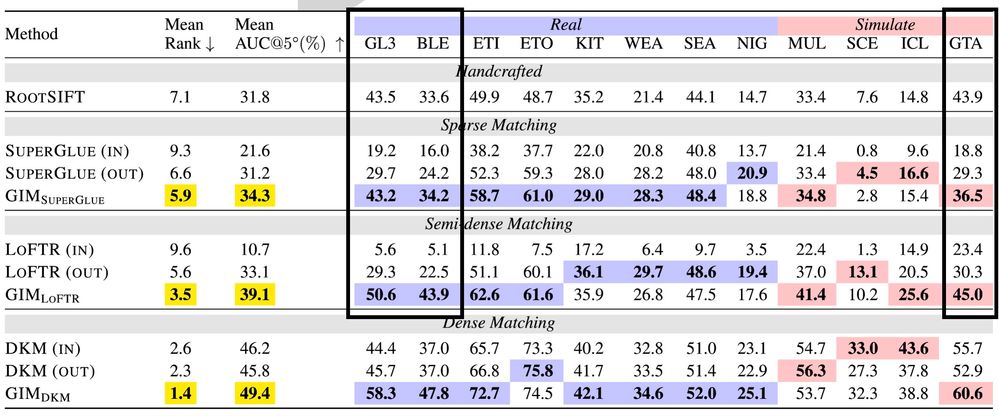

Image matching methods can be roughly categorized as 1) hand-crafted and 2) learning-based methods. Hand-crafted methods rely on heuristics, which struggle with challenging data with small overlaps, large motions or texture-less regions. They can also only generate sparse matches at key points. Learning-based methods can provide dense matching and handle challenging cases. However, the lack of diverse, large-scale labeled data makes them generalize poorly to unseen scenarios. Our experiments with GIM show that for many in-the-wild datasets, learning-based methods perform worse than hand-crafted methods (see Figure 1).

Self-training on Internet Videos

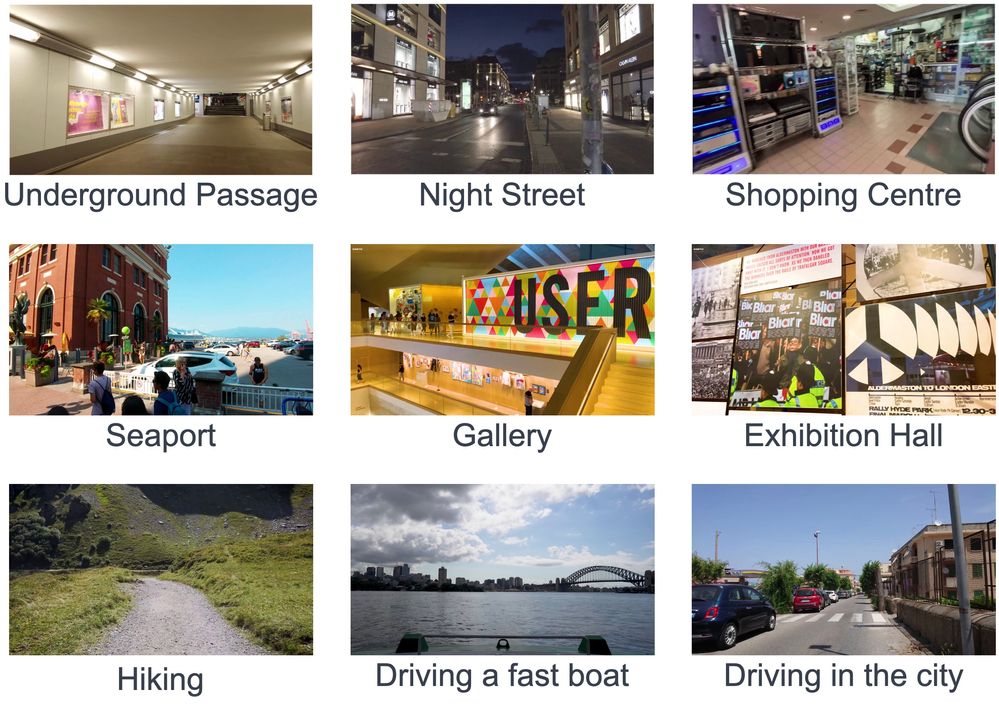

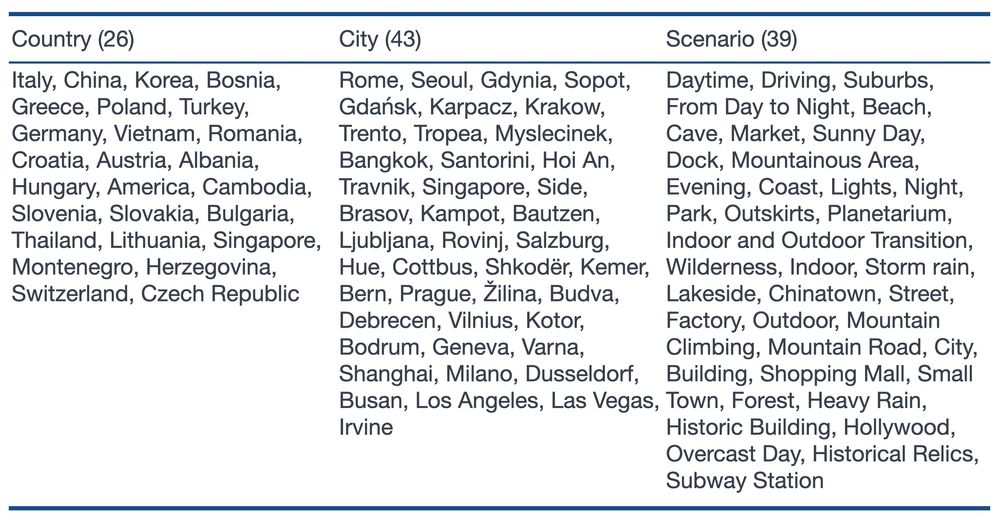

GIM aims to train a single model that can generalize to diverse zero-shot data. The key idea is to leverage internet videos, which are naturally diverse, large-scale and easy to access. GIM uses tourism videos from YouTube, which cover various countries, scene types and weather conditions (see the accompanying figures).

Given an image matching architecture and a set of downloaded videos, GIM applies self-training to generate effective supervision signals on the unlabeled video data. GIM first trains the model on standard image matching datasets (e.g., MegaDepth). Multiple complementary image matching methods are then used together with the trained model to find dense candidate correspondences between nearby video frames. Robust fitting is applied to filter outliers in the candidate correspondences. Leveraging the temporal coherence of video frames, GIM then propagates the filtered correspondences to distant video frames to enhance the supervision signal. Finally, strong data augmentations are applied to the video data during training.
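The propagation step can be illustrated with a small sketch. This is not the GIM implementation: the function name `propagate`, the `radius` parameter and the nearest-neighbour linking rule are assumptions made for illustration. The idea is that if frame A matches frame B and frame B matches frame C, two matches can be chained into an A-to-C match whenever they land on (nearly) the same pixel in the shared frame B.

```python
from math import hypot

Point = tuple[float, float]
Match = tuple[Point, Point]  # (pixel in first frame, pixel in second frame)


def propagate(matches_ab: list[Match], matches_bc: list[Match],
              radius: float = 1.0) -> list[Match]:
    """Chain A->B and B->C matches into A->C matches (hypothetical helper).

    An A->B match is linked to a B->C match when their pixels in the shared
    frame B lie within `radius` of each other; the nearest candidate wins.
    """
    chained = []
    for pa, pb in matches_ab:
        best, best_dist = None, radius
        for qb, qc in matches_bc:
            dist = hypot(pb[0] - qb[0], pb[1] - qb[1])
            if dist <= best_dist:
                best, best_dist = qc, dist
        if best is not None:
            chained.append((pa, best))
    return chained


# Toy data: only the first A->B match has a partner in B->C.
ab = [((10.0, 10.0), (12.0, 11.0)), ((50.0, 40.0), (55.0, 42.0))]
bc = [((12.4, 11.2), (15.0, 13.0)), ((90.0, 90.0), (95.0, 91.0))]
ac = propagate(ab, bc)
```

Repeating this chaining over consecutive frame pairs yields matches between frames that are far apart in time, which are harder and therefore more useful as training signal. The brute-force search is quadratic in the number of matches; a spatial index would be needed for dense matches.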

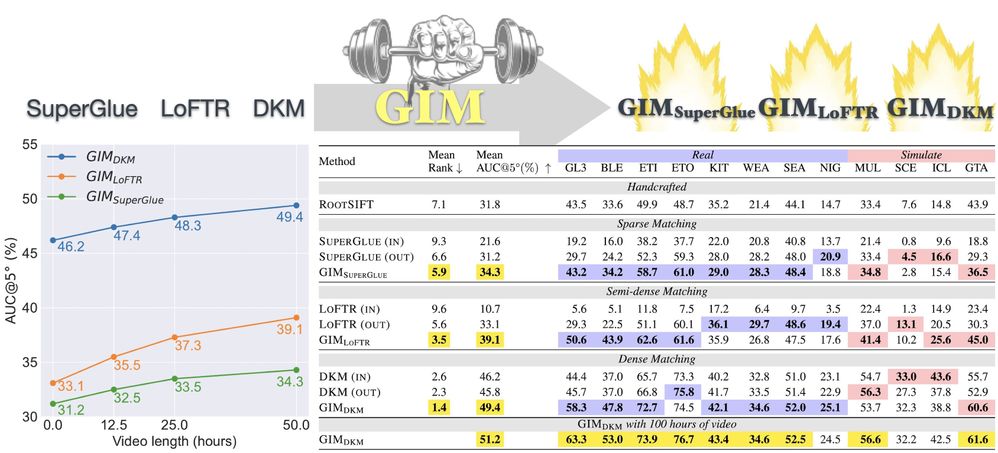

Results

Our results showed that using 50 hours of YouTube videos, GIM significantly improved the performance of three state-of-the-art architectures, namely DKM, LoFTR and SuperGlue. A scaling law is observed: performance improved steadily and significantly with the video data size and had not yet saturated at 50 hours. With 100 hours of YouTube video, GIM further improved overall performance from 49.4% AUC to 51.2%*.
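The post does not define the AUC metric. A common choice in image matching benchmarks is the area under the cumulative pose-error curve up to a fixed angular threshold, and the sketch below assumes that definition. The function name `error_auc` and the example errors are hypothetical.

```python
from bisect import bisect_left


def error_auc(errors: list[float], threshold: float) -> float:
    """Area under the cumulative error curve on [0, threshold], scaled to [0, 1].

    Assumed definition: for each error value e, the curve gives the fraction
    of image pairs whose error is at most e.
    """
    if not errors or threshold <= 0:
        raise ValueError("need at least one error and a positive threshold")
    errs = sorted(errors)
    n = len(errs)
    # Curve points, starting from (0, 0).
    xs = [0.0] + errs
    ys = [0.0] + [(i + 1) / n for i in range(n)]
    # Keep points below the threshold and close the curve at the threshold.
    last = bisect_left(xs, threshold)
    xs = xs[:last] + [threshold]
    ys = ys[:last] + [ys[last - 1]]
    # Trapezoidal integration, normalised by the threshold.
    area = sum((xs[i + 1] - xs[i]) * (ys[i] + ys[i + 1]) / 2
               for i in range(len(xs) - 1))
    return area / threshold


# Three image pairs with pose errors in degrees, evaluated at a 5 degree threshold.
auc = error_auc([1.0, 3.0, 10.0], threshold=5.0)
```

Under this definition, a model scores higher both by solving more image pairs within the threshold and by solving them more accurately.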

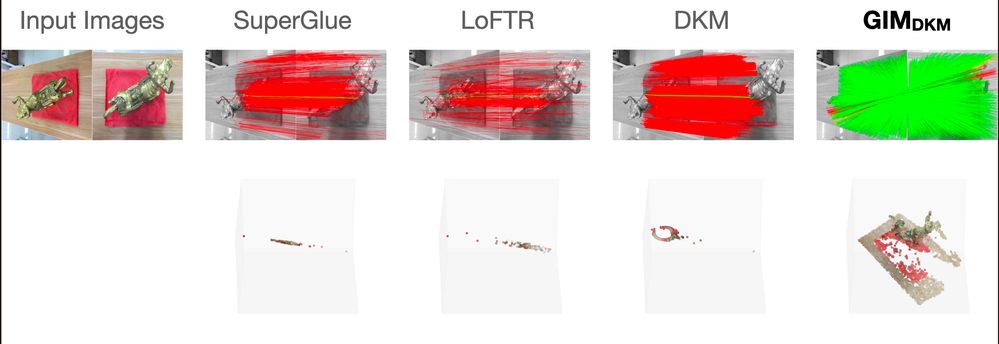

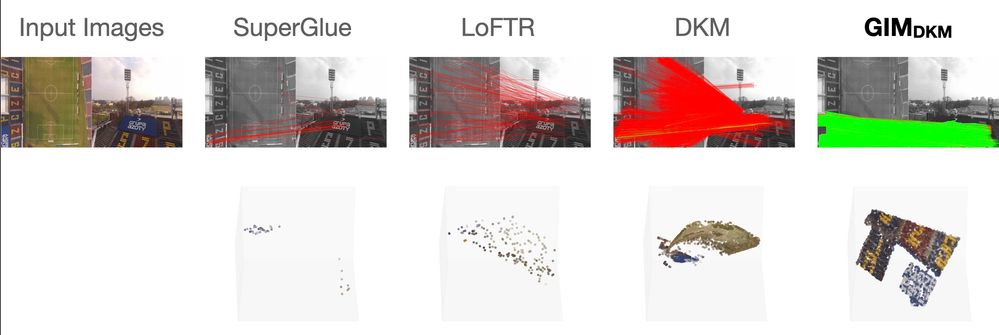

The foundation model obtained from GIM can benefit many downstream applications. We first show two-view reconstruction results. GIM is the only method that remains robust on this zero-shot data.

Figure 5. Two-view matching results without using RANSAC for outlier filtering. Green lines indicate correct matches and red lines indicate incorrect matches. GIM is the only method that can find a sufficient number of reliable matches*.

Figure 6. Matching results with small overlaps.

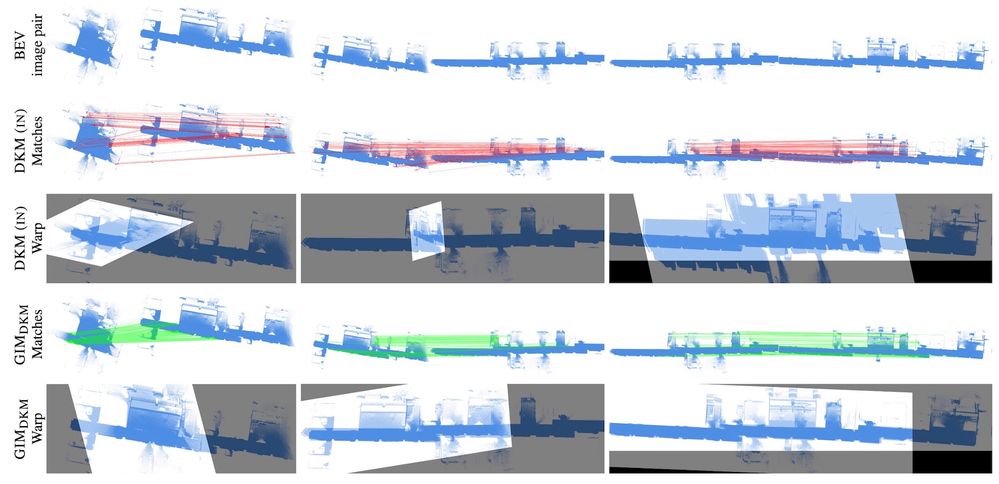

An emergent ability of GIM is that it can even be used to align bird's-eye-view (BEV) images of 3D point clouds, which has never been achieved before.

Figure 7. Point cloud BEV image matching. GIM_DKM (DKM trained with GIM) successfully matches BEV images projected from point clouds despite never being trained for it*.

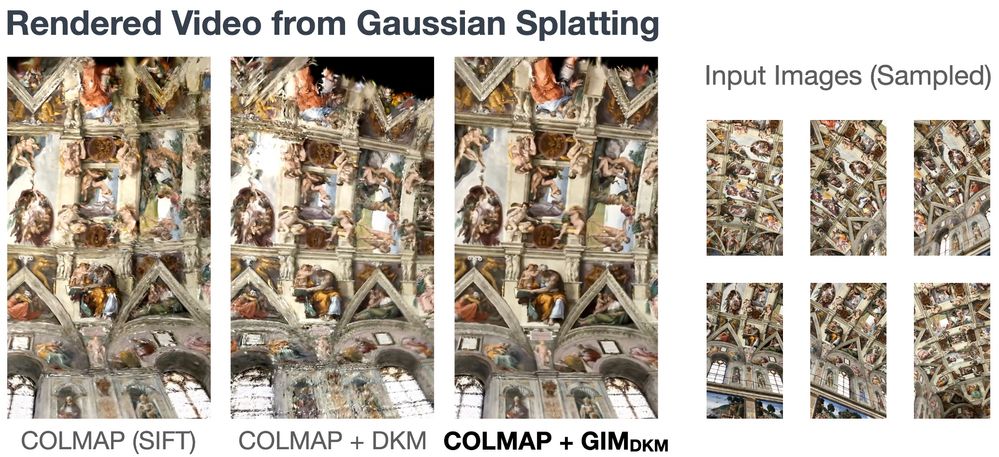

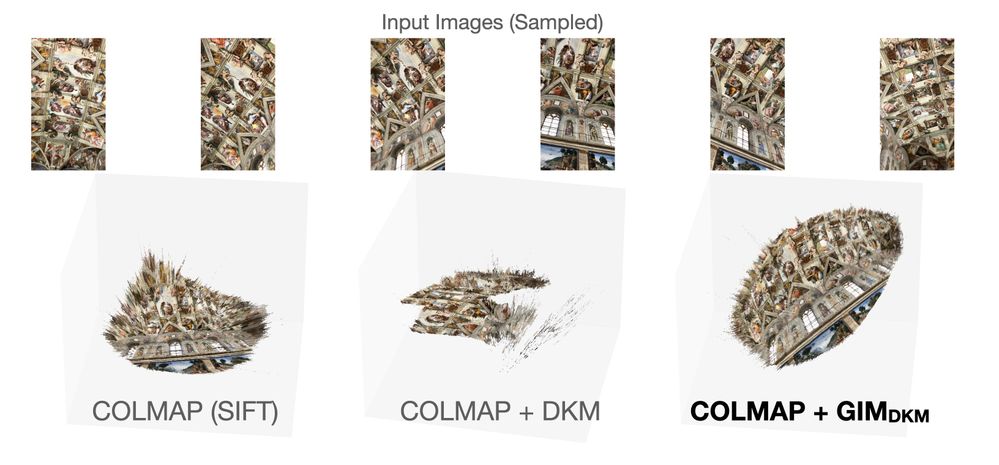

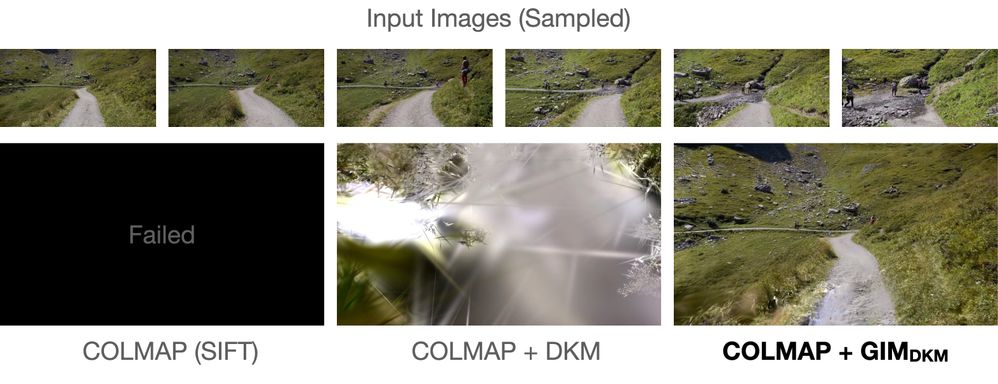

Improving COLMAP

COLMAP is the standard tool for structure-from-motion and 3D reconstruction. By replacing the SIFT matching with GIM, we can significantly enhance the output quality*.
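One way to hand externally computed matches to COLMAP is the raw match-list text format read by `colmap matches_importer --match_type raw`: each block starts with the two image names, followed by one pair of zero-based keypoint indices per line and a blank line. The sketch below is an illustration, not the integration used by the GIM authors. It converts pixel-coordinate matches for a single image pair into that format, and the helper names `index_keypoints` and `format_match_block` are hypothetical.

```python
Point = tuple[float, float]


def index_keypoints(matches: list[tuple[Point, Point]]):
    """Turn pixel matches into per-image keypoint lists plus index pairs.

    Coordinates are rounded to whole pixels so that matches hitting the same
    pixel share one keypoint (an assumption made for this sketch).
    """
    kps_a: dict[tuple[int, int], int] = {}
    kps_b: dict[tuple[int, int], int] = {}
    pairs = []
    for pa, pb in matches:
        ia = kps_a.setdefault((round(pa[0]), round(pa[1])), len(kps_a))
        ib = kps_b.setdefault((round(pb[0]), round(pb[1])), len(kps_b))
        pairs.append((ia, ib))
    # Drop duplicate index pairs while keeping their first-seen order.
    pairs = list(dict.fromkeys(pairs))
    return list(kps_a), list(kps_b), pairs


def format_match_block(name_a: str, name_b: str,
                       index_pairs: list[tuple[int, int]]) -> str:
    """Format one image pair as a raw match-list block ending in a blank line."""
    lines = [f"{name_a} {name_b}"]
    lines += [f"{i} {j}" for i, j in index_pairs]
    return "\n".join(lines) + "\n\n"


# Toy matches: the first two fall on the same pixels after rounding.
matches = [((10.2, 20.7), (30.0, 40.4)),
           ((10.4, 20.6), (30.1, 40.0)),
           ((5.0, 6.0), (7.0, 8.0))]
kps_a, kps_b, pairs = index_keypoints(matches)
block = format_match_block("a.jpg", "b.jpg", pairs)
```

The keypoint lists would also have to be imported into the COLMAP database so that the indices refer to real features. With more than two images, keypoint indices must stay consistent for each image across all pairs, which this single-pair sketch does not handle.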

Many neural rendering methods like Gaussian Splatting use the output of COLMAP as the input. Using GIM to improve the robustness of COLMAP, we can also improve the neural rendering quality on challenging data*.

Figure 10. In an example where all methods can reconstruct the scene, a lower noise level of GIM translates to sharper and cleaner renderings*.

Conclusion

Overall, our experiments demonstrate the broad significance and generalizability of GIM. With only 50 hours of YouTube videos, GIM achieves a relative zero-shot performance improvement for several state-of-the-art architectures. Performance continues to improve consistently with the amount of video data, and the resulting models generalize well to extreme cross-domain data*. GIM also has the potential to benefit numerous downstream tasks, including visual localization, homography estimation, and 3D reconstruction, even when compared with in-domain baselines on their specific domains. We are honored to receive a spotlight presentation for this work at ICLR 2024 and are excited to share more about the potential impact GIM can have on the field of computer vision.

Try our model for yourself here.

*: Performance varies by use, configuration and other factors. Learn more at www.Intel.com/PerformanceIndex.

I am interested in general machine learning and computer vision problems. During my PhD, I was interested in robust geometric perception, which estimates computer vision models (correspondences between images, poses, 3D reconstructions) from outlier-contaminated data. I was specifically interested in designing efficient algorithms that have optimality guarantees, i.e., that are guaranteed to return the best solution. After joining Intel, my interests shifted towards a mixture of learning and vision, where I study various problems such as 1) learning-based perception (feature matching, finding correspondences, pose estimation, depth estimation, etc.), 2) continual learning and 3) generative models (e.g., novel view synthesis, image/3D scene generation). My work has been selected as one of the 12 best papers at ECCV'18.
