Hi,

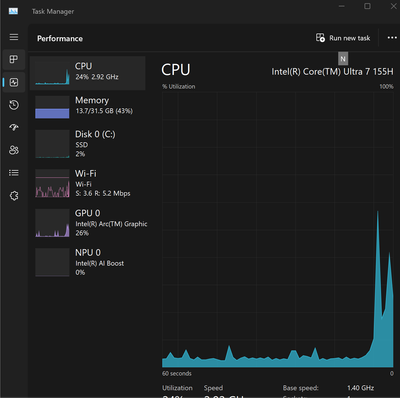

We are trying to run some models on the NPU and GPU of an Intel Core Ultra system. When we ran inference on the GPU, CPU utilization was high while GPU utilization was very low. We also checked whether any layers were falling back to the CPU, but every layer was reported as running on the GPU.

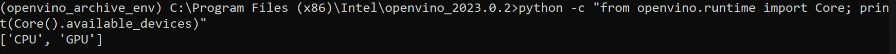

We are also unable to use the NPU for acceleration: when we list the available devices, only CPU and GPU are shown, even though the NPU driver is already installed. Could you please help us with how to accelerate inference on the NPU using Intel OpenVINO, and how to increase GPU utilization?
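
A minimal sketch of how the device check can be done from Python, assuming the OpenVINO Python package (2023.1 or later, which provides the `import openvino as ov` namespace) is installed. `core.available_devices` and the `FULL_DEVICE_NAME` property are part of the OpenVINO API; the helper names `missing_devices` and `list_openvino_devices` are hypothetical and only for illustration.

```python
from typing import Iterable, List


def missing_devices(available: Iterable[str], wanted: Iterable[str]) -> List[str]:
    """Return the device names in `wanted` that OpenVINO did not report.

    Enumerated names such as "GPU.0" are compared by the part before the dot.
    """
    reported = {name.split(".")[0] for name in available}
    return [name for name in wanted if name not in reported]


def list_openvino_devices() -> List[str]:
    """Print every device OpenVINO can see, with its full name, and return the list."""
    import openvino as ov  # requires the OpenVINO Python package (2023.1 or later)

    core = ov.Core()
    devices = list(core.available_devices)
    for device in devices:
        print(device, "->", core.get_property(device, "FULL_DEVICE_NAME"))
    return devices


# Usage on the target machine (hypothetical):
#   print("Not visible:", missing_devices(list_openvino_devices(), ["CPU", "GPU", "NPU"]))
```

If "NPU" is reported as missing while the driver is installed, the plugin itself is the next thing to check.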

Thanks,

Shravanthi J

Hi Shravanthi J,

Thanks for reaching out. May I know which OpenVINO version and system you are using? The Intel® NPU device requires a proper driver to be installed on the system, so make sure you use the most recent supported driver for your hardware setup. You may refer to the Configurations for Intel® NPU with OpenVINO™ guide.

Regards,

Aznie

Hi Aznie,

I am using OpenVINO version 2023.3.0 on an Intel(R) Core(TM) Ultra 7 155H system.

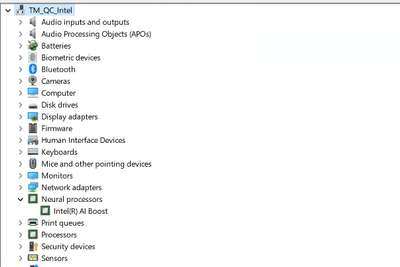

I see that the NPU driver is already installed.

Thanks

Hi Shravanthi J,

May I know what application you are running? Currently, only models with static shapes are supported on the NPU. When running your application, change the device name to "NPU".
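
A minimal sketch of pinning a model to a static shape before compiling it for the NPU, assuming a single-input model and the OpenVINO 2023.x Python API (`Core.read_model`, `Model.reshape`, `Core.compile_model`). The helper names, the model path, and the example shape are hypothetical placeholders.

```python
from typing import List, Sequence


def require_static(shape: Sequence[int]) -> List[int]:
    """Return `shape` as a list, rejecting dynamic (-1 or None) dimensions."""
    dims = list(shape)
    if any(d is None or d < 0 for d in dims):
        raise ValueError(f"NPU needs a fully static shape, got {dims}")
    return dims


def compile_for_npu(model_path: str, input_shape: Sequence[int]):
    """Read a single-input model, pin its input to a static shape, and compile it for NPU."""
    import openvino as ov  # requires the OpenVINO Python package

    core = ov.Core()
    model = core.read_model(model_path)
    # Replace any dynamic dimensions with fixed ones, e.g. [1, 3, 224, 224].
    model.reshape(require_static(input_shape))
    return core.compile_model(model, "NPU")
```

Validating the shape up front gives a clear Python error instead of a less obvious failure inside the NPU compiler.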

Meanwhile, OpenVINO supports asynchronous execution, which lets multiple inference requests be processed concurrently. This can increase GPU utilization and improve throughput. You may check the Working with GPUs in OpenVINO guide to learn how to accelerate inference with GPUs in OpenVINO.
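
A minimal sketch of the asynchronous approach, assuming a single-input, single-output model and the OpenVINO 2023.x Python API. It combines the `THROUGHPUT` performance hint with `AsyncInferQueue`, so several requests are kept in flight on the GPU instead of one at a time. The function name and its parameters are hypothetical; whether this raises utilization for a given model is not confirmed in this thread.

```python
from typing import Any, List, Sequence


def infer_async_on_gpu(model_path: str, inputs: Sequence[Any], jobs: int = 0) -> List[Any]:
    """Run one inference per input array through a pool of asynchronous GPU requests."""
    import openvino as ov  # requires the OpenVINO Python package

    core = ov.Core()
    # The THROUGHPUT hint asks the GPU plugin to favour many parallel
    # requests over single-request latency.
    compiled = core.compile_model(model_path, "GPU", {"PERFORMANCE_HINT": "THROUGHPUT"})
    results = [None] * len(inputs)

    def on_done(request, index):
        # Copy the output because the request's buffer is reused for later jobs.
        results[index] = request.get_output_tensor(0).data.copy()

    # jobs=0 lets OpenVINO choose the optimal number of parallel requests.
    queue = ov.AsyncInferQueue(compiled, jobs)
    queue.set_callback(on_done)
    for index, data in enumerate(inputs):
        queue.start_async({0: data}, userdata=index)
    queue.wait_all()
    return results
```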

Regards,

Aznie

Hi Aznie,

When I list the devices, the NPU does not appear at all.

Thanks

Hi Shravanthi J,

Can you check whether your OpenVINO installation includes the openvino_intel_npu_plugin.dll file? If not, please download the latest release via the OpenVINO Runtime archive file for Windows and try the NPU plugin again.
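
A minimal sketch for locating the plugin file, using only the Python standard library. The file name comes from the reply above; the directory to search is an assumption you supply, for example the folder where the OpenVINO archive was unpacked, or `pathlib.Path(openvino.__file__).parent` for a pip installation. The helper name is hypothetical.

```python
from pathlib import Path
from typing import List, Union

# Windows file name of the NPU plugin, as given in the reply above.
NPU_PLUGIN_DLL = "openvino_intel_npu_plugin.dll"


def find_npu_plugin(install_dir: Union[str, Path]) -> List[Path]:
    """Return every copy of the NPU plugin DLL found anywhere under `install_dir`."""
    root = Path(install_dir)
    return sorted(root.rglob(NPU_PLUGIN_DLL))
```

An empty result suggests the installed package does not ship the NPU plugin, which matches the case where only CPU and GPU are listed.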

Regards,

Aznie

Hi Aznie,

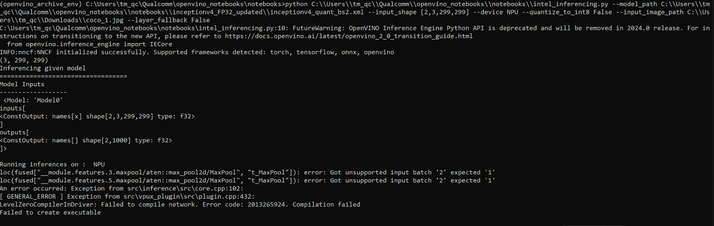

Thanks, we are able to use the NPU for acceleration now. However, running the model with batch size 2 on the NPU fails. The model is static, yet it fails for batch size 2 or any batch size other than 1. The error is shown in the screenshot.
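
The thread does not record a fix for the batch-size failure. One possible workaround, an assumption not confirmed here, is to keep the model compiled for the NPU with batch size 1 and feed the samples one at a time, then stack the outputs. The sketch assumes a single-input, single-output model and uses the fact that an OpenVINO `CompiledModel` can be called directly with an input dict; the helper names are hypothetical.

```python
from typing import Any, List

import numpy as np


def split_batch(batch: np.ndarray) -> List[np.ndarray]:
    """Split an N-sample array into N arrays that each keep a leading dimension of 1."""
    return [batch[i:i + 1] for i in range(batch.shape[0])]


def infer_per_sample(compiled_model: Any, batch: np.ndarray) -> np.ndarray:
    """Run a batch-1 compiled model once per sample and concatenate the outputs."""
    output = compiled_model.output(0)
    per_sample = [compiled_model({0: sample})[output] for sample in split_batch(batch)]
    return np.concatenate(per_sample, axis=0)
```

This trades the batched call for N batch-1 calls, so throughput may be lower than true batching on the device.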

Thanks,

Shravanthi J

Hi Shravanthi J,

Good to hear that you are able to accelerate the NPU now. I noticed you have submitted the same issue in the thread below:

I will close this ticket and we will continue providing support on that thread. This thread will no longer be monitored since this issue has been resolved. If you need any additional information from Intel, please submit a new question.

Regards,

Aznie
