I installed OpenVINO with pip3 and tried the OpenVINO Runtime API Python tutorial. The first lines of the tutorial are

from openvino.runtime import Core

ie = Core()
devices = ie.available_devices
for device in devices:
    device_name = ie.get_property(device, "FULL_DEVICE_NAME")
    print(f"{device}: {device_name}")

and they produce a

builtins.ModuleNotFoundError: No module named 'openvino.runtime'; 'openvino' is not a package

error. I'm using Ubuntu 20.04, Python 3.8.10 and OpenVINO 2023.0.0. Can you tell me what is wrong and how to make the import work? I do not use any kind of virtual environment, and I upgraded pip to the latest version before installing openvino.

pip install openvino-dev

It is usually better to activate a Python virtual environment before you do the install.

Thank you for the suggestion. I uninstalled openvino and installed openvino-dev, but I get the same error. I noticed that opencv-python is installed with openvino. I uninstalled it and tried opencv-contrib-python, but that did not work either. Now, after uninstalling opencv-python, installing opencv-contrib-python, uninstalling opencv-contrib-python and installing opencv-python, I get a

builtins.AttributeError: partially initialized module 'openvino' has no attribute '__path__' (most likely due to a circular import)

error as well in addition to the previous error.

EDIT: This also happens when I install openvino-dev in a virtual environment.

Hi jvkloc,

I am able to download OpenVINO™ from the PyPI repository on an Ubuntu 20.04 machine.

Can you check whether the OpenVINO™ package has been installed correctly in your Python site-packages by running the following command?

pip show openvino-dev

Regards,

Peh

I get the following output with the command you gave:

Name: openvino-dev

Version: 2023.0.0

Summary: OpenVINO(TM) Development Tools

Home-page: https://docs.openvino.ai/latest/index.html

Author: Intel® Corporation

Author-email: openvino_pushbot@intel.com

License: OSI Approved :: Apache Software License

Location: /home/jvkloc/.local/lib/python3.9/site-packages

Requires: texttable, jstyleson, requests, numpy, networkx, openvino-telemetry, pyyaml, addict, defusedxml, opencv-python, pillow, tqdm, openvino, opencv-python, networkx, scipy

Required-by:

Hi jvkloc,

From the output, I can see that the OpenVINO™ package is installed correctly. However, you still face an import issue that I have never encountered before.

As an alternative, I would suggest installing OpenVINO™ Runtime on Linux from an archive file.

However, OpenVINO™ Development Tools can be installed only via pypi.org. It contains the Model Optimizer components: the model conversion API, Model Downloader and other Open Model Zoo tools.

Could you please check whether Model Optimizer can be imported by running the following command:

mo -h

Regards,

Peh

I do not understand how to see if Model Optimizer can be imported or not from this mo -h output:

usage: main.py [options]

optional arguments:

-h, --help show this help message and exit

--framework FRAMEWORK

Name of the framework used to train the input model.

Framework-agnostic parameters:

--model_name MODEL_NAME, -n MODEL_NAME

Model_name parameter passed to the final create_ir transform. This parameter is used to name a network in a generated IR and output .xml/.bin files.

--output_dir OUTPUT_DIR, -o OUTPUT_DIR

Directory that stores the generated IR. By default, it is the directory from where the Model Optimizer is launched.

--freeze_placeholder_with_value FREEZE_PLACEHOLDER_WITH_VALUE

Replaces input layer with constant node with provided value, for example: "node_name->True". It will be DEPRECATED in future releases. Use --input option to specify

a value for freezing.

--static_shape Enables IR generation for fixed input shape (folding `ShapeOf` operations and shape-calculating sub-graphs to `Constant`). Changing model input shape using the

OpenVINO Runtime API in runtime may fail for such an IR.

--use_new_frontend Force the usage of new Frontend of Model Optimizer for model conversion into IR. The new Frontend is C++ based and is available for ONNX* and PaddlePaddle* models.

Model optimizer uses new Frontend for ONNX* and PaddlePaddle* by default that means `--use_new_frontend` and `--use_legacy_frontend` options are not specified.

--use_legacy_frontend

Force the usage of legacy Frontend of Model Optimizer for model conversion into IR. The legacy Frontend is Python based and is available for TensorFlow*, ONNX*,

MXNet*, Caffe*, and Kaldi* models.

--input_model INPUT_MODEL, -m INPUT_MODEL, -w INPUT_MODEL

Tensorflow*: a file with a pre-trained model (binary or text .pb file after freezing). Caffe*: a model proto file with model weights.

--input INPUT Quoted list of comma-separated input nodes names with shapes, data types, and values for freezing. The order of inputs in converted model is the same as order of

specified operation names. The shape and value are specified as comma-separated lists. The data type of input node is specified in braces and can have one of the

values: f64 (float64), f32 (float32), f16 (float16), i64 (int64), i32 (int32), u8 (uint8), boolean (bool). Data type is optional. If it's not specified explicitly

then there are two options: if input node is a parameter, data type is taken from the original node dtype, if input node is not a parameter, data type is set to f32.

Example, to set `input_1` with shape [1,100], and Parameter node `sequence_len` with scalar input with value `150`, and boolean input `is_training` with `False`

value use the following format: "input_1[1,100],sequence_len->150,is_training->False". Another example, use the following format to set input port 0 of the node

`node_name1` with the shape [3,4] as an input node and freeze output port 1 of the node "node_name2" with the value [20,15] of the int32 type and shape [2]:

"0:node_name1[3,4],node_name2:1[2]{i32}->[20,15]".

--output OUTPUT The name of the output operation of the model or list of names. For TensorFlow*, do not add :0 to this name.The order of outputs in converted model is the same as

order of specified operation names.

--input_shape INPUT_SHAPE

Input shape(s) that should be fed to an input node(s) of the model. Shape is defined as a comma-separated list of integer numbers enclosed in parentheses or square

brackets, for example [1,3,227,227] or (1,227,227,3), where the order of dimensions depends on the framework input layout of the model. For example, [N,C,H,W] is

used for ONNX* models and [N,H,W,C] for TensorFlow* models. The shape can contain undefined dimensions (? or -1) and should fit the dimensions defined in the input

operation of the graph. Boundaries of undefined dimension can be specified with ellipsis, for example [1,1..10,128,128]. One boundary can be undefined, for example

[1,..100] or [1,3,1..,1..]. If there are multiple inputs in the model, --input_shape should contain definition of shape for each input separated by a comma, for

example: [1,3,227,227],[2,4] for a model with two inputs with 4D and 2D shapes. Alternatively, specify shapes with the --input option.

--batch BATCH, -b BATCH

Set batch size. It applies to 1D or higher dimension inputs. The default dimension index for the batch is zero. Use a label 'n' in --layout or --source_layout option

to set the batch dimension. For example, "x(hwnc)" defines the third dimension to be the batch.

--mean_values MEAN_VALUES

Mean values to be used for the input image per channel. Values to be provided in the (R,G,B) or [R,G,B] format. Can be defined for desired input of the model, for

example: "--mean_values data[255,255,255],info[255,255,255]". The exact meaning and order of channels depend on how the original model was trained.

--scale_values SCALE_VALUES

Scale values to be used for the input image per channel. Values are provided in the (R,G,B) or [R,G,B] format. Can be defined for desired input of the model, for

example: "--scale_values data[255,255,255],info[255,255,255]". The exact meaning and order of channels depend on how the original model was trained. If both

--mean_values and --scale_values are specified, the mean is subtracted first and then scale is applied regardless of the order of options in command line.

--scale SCALE, -s SCALE

All input values coming from original network inputs will be divided by this value. When a list of inputs is overridden by the --input parameter, this scale is not

applied for any input that does not match with the original input of the model. If both --mean_values and --scale are specified, the mean is subtracted first and

then scale is applied regardless of the order of options in command line.

--reverse_input_channels [REVERSE_INPUT_CHANNELS]

Switch the input channels order from RGB to BGR (or vice versa). Applied to original inputs of the model if and only if a number of channels equals 3. When

--mean_values/--scale_values are also specified, reversing of channels will be applied to user's input data first, so that numbers in --mean_values and

--scale_values go in the order of channels used in the original model. In other words, if both options are specified, then the data flow in the model looks as

following: Parameter -> ReverseInputChannels -> Mean apply-> Scale apply -> the original body of the model.

--source_layout SOURCE_LAYOUT

Layout of the input or output of the model in the framework. Layout can be specified in the short form, e.g. nhwc, or in complex form, e.g. "[n,h,w,c]". Example for

many names: "in_name1([n,h,w,c]),in_name2(nc),out_name1(n),out_name2(nc)". Layout can be partially defined, "?" can be used to specify undefined layout for one

dimension, "..." can be used to specify undefined layout for multiple dimensions, for example "?c??", "nc...", "n...c", etc.

--target_layout TARGET_LAYOUT

Same as --source_layout, but specifies target layout that will be in the model after processing by ModelOptimizer.

--layout LAYOUT Combination of --source_layout and --target_layout. Can't be used with either of them. If model has one input it is sufficient to specify layout of this input, for

example --layout nhwc. To specify layouts of many tensors, names must be provided, for example: --layout "name1(nchw),name2(nc)". It is possible to instruct

ModelOptimizer to change layout, for example: --layout "name1(nhwc->nchw),name2(cn->nc)". Also "*" in long layout form can be used to fuse dimensions, for example

"[n,c,...]->[n*c,...]".

--compress_to_fp16 [COMPRESS_TO_FP16]

If the original model has FP32 weights or biases, they are compressed to FP16. All intermediate data is kept in original precision. Option can be specified alone as

"--compress_to_fp16", or explicit True/False values can be set, for example: "--compress_to_fp16=False", or "--compress_to_fp16=True"

--extensions EXTENSIONS

Paths or a comma-separated list of paths to libraries (.so or .dll) with extensions. For the legacy MO path (if `--use_legacy_frontend` is used), a directory or a

comma-separated list of directories with extensions are supported. To disable all extensions including those that are placed at the default location, pass an empty

string.

--transform TRANSFORM

Apply additional transformations. Usage: "--transform transformation_name1[args],transformation_name2..." where [args] is key=value pairs separated by semicolon.

Examples: "--transform LowLatency2" or "--transform Pruning" or "--transform LowLatency2[use_const_initializer=False]" or "--transform "MakeStateful[param_res_names=

{'input_name_1':'output_name_1','input_name_2':'output_name_2'}]" Available transformations: "LowLatency2", "MakeStateful", "Pruning"

--transformations_config TRANSFORMATIONS_CONFIG

Use the configuration file with transformations description. Transformations file can be specified as relative path from the current directory, as absolute path or

as arelative path from the mo root directory.

--silent [SILENT] Prevent any output messages except those that correspond to log level equals ERROR, that can be set with the following option: --log_level. By default, log level is

already ERROR.

--log_level {CRITICAL,ERROR,WARN,WARNING,INFO,DEBUG,NOTSET}

Logger level of logging massages from MO. Expected one of ['CRITICAL', 'ERROR', 'WARN', 'WARNING', 'INFO', 'DEBUG', 'NOTSET'].

--version Version of Model Optimizer

--progress [PROGRESS]

Enable model conversion progress display.

--stream_output [STREAM_OUTPUT]

Switch model conversion progress display to a multiline mode.

TensorFlow*-specific parameters:

--input_model_is_text [INPUT_MODEL_IS_TEXT]

TensorFlow*: treat the input model file as a text protobuf format. If not specified, the Model Optimizer treats it as a binary file by default.

--input_checkpoint INPUT_CHECKPOINT

TensorFlow*: variables file to load.

--input_meta_graph INPUT_META_GRAPH

Tensorflow*: a file with a meta-graph of the model before freezing

--saved_model_dir SAVED_MODEL_DIR

TensorFlow*: directory with a model in SavedModel format of TensorFlow 1.x or 2.x version.

--saved_model_tags SAVED_MODEL_TAGS

Group of tag(s) of the MetaGraphDef to load, in string format, separated by ','. For tag-set contains multiple tags, all tags must be passed in.

--tensorflow_custom_operations_config_update TENSORFLOW_CUSTOM_OPERATIONS_CONFIG_UPDATE

TensorFlow*: update the configuration file with node name patterns with input/output nodes information.

--tensorflow_object_detection_api_pipeline_config TENSORFLOW_OBJECT_DETECTION_API_PIPELINE_CONFIG

TensorFlow*: path to the pipeline configuration file used to generate model created with help of Object Detection API.

--tensorboard_logdir TENSORBOARD_LOGDIR

TensorFlow*: dump the input graph to a given directory that should be used with TensorBoard.

--tensorflow_custom_layer_libraries TENSORFLOW_CUSTOM_LAYER_LIBRARIES

TensorFlow*: comma separated list of shared libraries with TensorFlow* custom operations implementation.

Caffe*-specific parameters:

--input_proto INPUT_PROTO, -d INPUT_PROTO

Deploy-ready prototxt file that contains a topology structure and layer attributes

--caffe_parser_path CAFFE_PARSER_PATH

Path to Python Caffe* parser generated from caffe.proto

--k K Path to CustomLayersMapping.xml to register custom layers

--disable_omitting_optional [DISABLE_OMITTING_OPTIONAL]

Disable omitting optional attributes to be used for custom layers. Use this option if you want to transfer all attributes of a custom layer to IR. Default behavior

is to transfer the attributes with default values and the attributes defined by the user to IR.

--enable_flattening_nested_params [ENABLE_FLATTENING_NESTED_PARAMS]

Enable flattening optional params to be used for custom layers. Use this option if you want to transfer attributes of a custom layer to IR with flattened nested

parameters. Default behavior is to transfer the attributes without flattening nested parameters.

MXNet-specific parameters:

--input_symbol INPUT_SYMBOL

Symbol file (for example, model-symbol.json) that contains a topology structure and layer attributes

--nd_prefix_name ND_PREFIX_NAME

Prefix name for args.nd and argx.nd files.

--pretrained_model_name PRETRAINED_MODEL_NAME

Name of a pretrained MXNet model without extension and epoch number. This model will be merged with args.nd and argx.nd files

--save_params_from_nd [SAVE_PARAMS_FROM_ND]

Enable saving built parameters file from .nd files

--legacy_mxnet_model [LEGACY_MXNET_MODEL]

Enable MXNet loader to make a model compatible with the latest MXNet version. Use only if your model was trained with MXNet version lower than 1.0.0

--enable_ssd_gluoncv [ENABLE_SSD_GLUONCV]

Enable pattern matchers replacers for converting gluoncv ssd topologies.

Kaldi-specific parameters:

--counts COUNTS Path to the counts file

--remove_output_softmax [REMOVE_OUTPUT_SOFTMAX]

Removes the SoftMax layer that is the output layer

--remove_memory [REMOVE_MEMORY]

Removes the Memory layer and use additional inputs outputs instead

My reply including the mo -h output never seems to appear on this page. Maybe there's a length limit on posts? Anyway, the output is quite long and I don't know how to check whether Model Optimizer can be imported or not. What am I looking for in the mo -h output?

find . -name "openvino_dev-2022.3.1.dist-info"

If that does not find anything try this

sudo find / -name "openvino_dev-2022.3.1.dist-info"

One of these should find something. Go to the directory where the file is and list its contents. The directory should be under the standard Python paths or under a Python virtual environment directory.

If it is not, then Python will never find the openvino module.

Once again, I strongly recommend doing all the pip installations in an activated Python virtual environment. It is much easier to find things then. All the installations will then be under the directory that was created for the virtual environment.
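The same lookup can also be done from Python rather than with find. The following is a minimal sketch, assuming Python 3.8 or newer (where importlib.metadata is in the standard library); locate_distribution is a hypothetical helper name, not part of OpenVINO. Run it with the same interpreter that fails to import the module.

```python
from importlib import metadata


def locate_distribution(name):
    """Return (version, install_dir) for an installed distribution, or None."""
    try:
        dist = metadata.distribution(name)
    except metadata.PackageNotFoundError:
        # This interpreter does not see the distribution at all.
        return None
    # locate_file("") resolves to the directory that holds the package files,
    # normally a site-packages directory.
    return dist.version, str(dist.locate_file(""))


if __name__ == "__main__":
    # "openvino-dev" is the distribution name used in this thread.
    print(locate_distribution("openvino-dev"))
```

If the result is None, the interpreter you ran does not see the installation, which usually means pip installed into a different Python.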

The openvino_dev-2023.0.0.dist-info is found:

/home/jvkloc/.local/lib/python3.9/site-packages/openvino_dev-2023.0.0.dist-info/

The path looks OK to me; that's where the other packages are, too. When I run python -c "from openvino.runtime import Core" I get

Traceback (most recent call last):
  File "/usr/lib/command-not-found", line 28, in <module>
    from CommandNotFound import CommandNotFound
  File "/usr/lib/python3/dist-packages/CommandNotFound/CommandNotFound.py", line 19, in <module>
    from CommandNotFound.db.db import SqliteDatabase
  File "/usr/lib/python3/dist-packages/CommandNotFound/db/db.py", line 5, in <module>
    import apt_pkg
ModuleNotFoundError: No module named 'apt_pkg'

I got the same error also after

sudo apt-get install --reinstall python3-apt

The files in openvino_dev-2023.0.0.dist-info are

dev-third-party-programs.txt

entry_points.txt

INSTALLER

LICENSE

METADATA

omz-third-party-programs.txt

readme.txt

RECORD

top_level.txt

WHEEL

After some adventures I don't get any output with

python3 -c "from openvino.runtime import Core"

But when I run the code

from openvino.runtime import Core

ie = Core()
devices = ie.available_devices
for device in devices:
    device_name = ie.get_property(device, "FULL_DEVICE_NAME")
    print(f"{device}: {device_name}")

I still get

builtins.AttributeError: partially initialized module 'openvino' has no attribute '__path__' (most likely due to a circular import)

During handling of the above exception, another exception occurred:

builtins.ModuleNotFoundError: No module named 'openvino.runtime'; 'openvino' is not a package

It is the import line that causes the errors. So I didn't get anywhere from the initial situation.

pip install python-apt

Once again, I would suggest that you start again with a Python virtual environment.

python -m venv myopenvino-dev
source myopenvino-dev/bin/activate
pip install openvino-dev
python
>>> from openvino.runtime import Core

...

Thank you for your patience. Now the code works in terminal:

(openvino) jvkloc@lenovo:~$ python

Python 3.9.5 (default, Nov 23 2021, 15:27:38)

[GCC 9.3.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> from openvino.runtime import Core

>>> ie = Core()

>>> devices = ie.available_devices

>>> for device in devices:

... device_name = ie.get_property(device, "FULL_DEVICE_NAME")

... print(f"{device}: {device_name}")

...

CPU: Intel(R) Core(TM) i5-7200U CPU @ 2.50GHz

>>> quit()

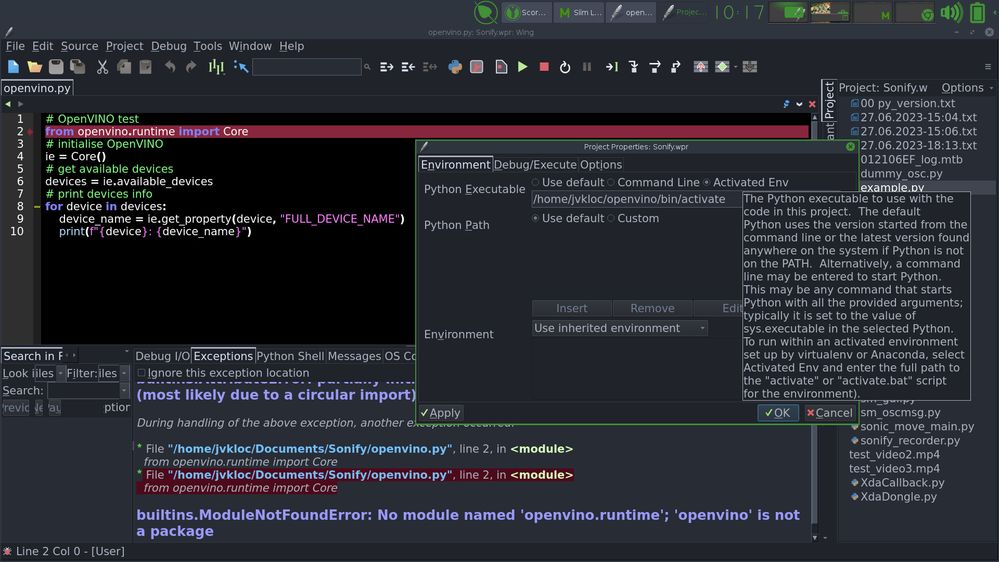

(openvino) jvkloc@lenovo:~$However, I still get the same errors in my IDE (Wing Personal 9, version 9.0.2.1), also after setting the project to use the openvino venv created fro the install:

Is there some additional setup needed for using venv in IDEs?

The answer is no, but your IDE needs to run the activate script. It looks like your IDE is telling you what to do to achieve this. The activate script needs to be run as a sequence of commands in the same shell instance that will be running Python. That's why you use the 'source' command when you do this from a shell command line. I am guessing that what is described in your screenshot is how to do that. I think it may also be a good idea to stop it from using any inherited environment.

In my experience (mostly with Eclipse), you need to add the path of the venv's Python interpreter. The IDE is attempting to import from the site-packages of the main Python install.

Program execution from within the IDE, however, needs to search for imports in the venv site-packages, typically ~/.venv/<project venv dir>/lib/<python-version>/site-packages/ (sometimes lib64 instead of lib). On Ubuntu the path may be different, but it still needs to point at the venv site-packages location. Change the path for the Python interpreter and it should work.

Thank you for the advice. I tried both lib and lib64 without success. Also, this Wing tutorial says that only the Python Executable needs to be set for a virtualenv (I also tried virtualenv instead of venv, again without success).

Hi jvkloc,

Sorry for the confusion, I was trying to explain what is going on under the hood. The tutorial is correct, you only need to specify where the interpreter is located. The site-packages will be found accordingly whether under lib, lib64, or anywhere else.

I am not familiar with your IDE, but it should have a setting for the project's Python interpreter. You need to set that to the python executable file under the virtual environment.

If you are running Linux, with the virtual environment enabled, run the command "which python". It will show you the path you need to set in the IDE.
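To confirm which interpreter the IDE actually launches, a short script run from inside the IDE can print the same information that "which python" gives in a shell. This is a minimal sketch using only the standard library; interpreter_report is a hypothetical helper name, and the venv check assumes an environment created with the venv module.

```python
import sys
import sysconfig


def interpreter_report():
    """Summarize which Python is running and where it installs packages."""
    return {
        "executable": sys.executable,
        # In a venv, sys.prefix points at the venv and differs from base_prefix.
        "in_venv": sys.prefix != sys.base_prefix,
        "site_packages": sysconfig.get_paths()["purelib"],
    }


if __name__ == "__main__":
    for key, value in interpreter_report().items():
        print(f"{key}: {value}")
```

If the executable printed from the IDE differs from the one printed in the activated terminal, the project is still configured with the system interpreter.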

Hi jvkloc,

Yes, you are able to import Model Optimizer.

Besides, you are using the existing virtualenv with Wing the right way.

However, you still keep getting the same error:

builtins.ModuleNotFoundError: No module named 'openvino.runtime'; 'openvino' is not a package

This is because you are running a Python script whose name conflicts with the package name. Python searches the script's own directory first, so the import loads your file instead of the installed package.

Please rename your Python script (home/jvkloc/Documents/Sonify/openvino.py) to any name except ‘openvino’.

Regards,

Peh
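A name clash like this can be checked from Python before renaming anything. The sketch below uses only the standard library; describe_import is a hypothetical helper name. It asks the import system where a top-level name would be loaded from and whether that target is a package. A single .py file is not a package, which is why the submodule import fails with "is not a package".

```python
import importlib.machinery


def describe_import(name, search_path=None):
    """Report where `import name` would resolve and whether it is a package.

    search_path=None means "use sys.path", as a normal import does.
    """
    spec = importlib.machinery.PathFinder.find_spec(name, search_path)
    if spec is None:
        return {"found": False, "origin": None, "is_package": False}
    return {
        "found": True,
        "origin": spec.origin,
        # A plain module file has no submodule search locations, so
        # "from name.sub import x" cannot work for it.
        "is_package": spec.submodule_search_locations is not None,
    }


if __name__ == "__main__":
    print(describe_import("openvino"))
```

Run it from the same directory and interpreter as the failing script. If origin points at a file such as openvino.py in the project directory and is_package is False, the local file is shadowing the installed package.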

Finally the correct answer to my problem. Thank you. Renaming the file did it.

Hi jvkloc,

Thank you for confirming your problem is resolved. I am glad to hear everything is going well.

This thread will no longer be monitored since this issue has been resolved. If you need any additional information from Intel, please submit a new question.

Regards,

Peh
