Hi,

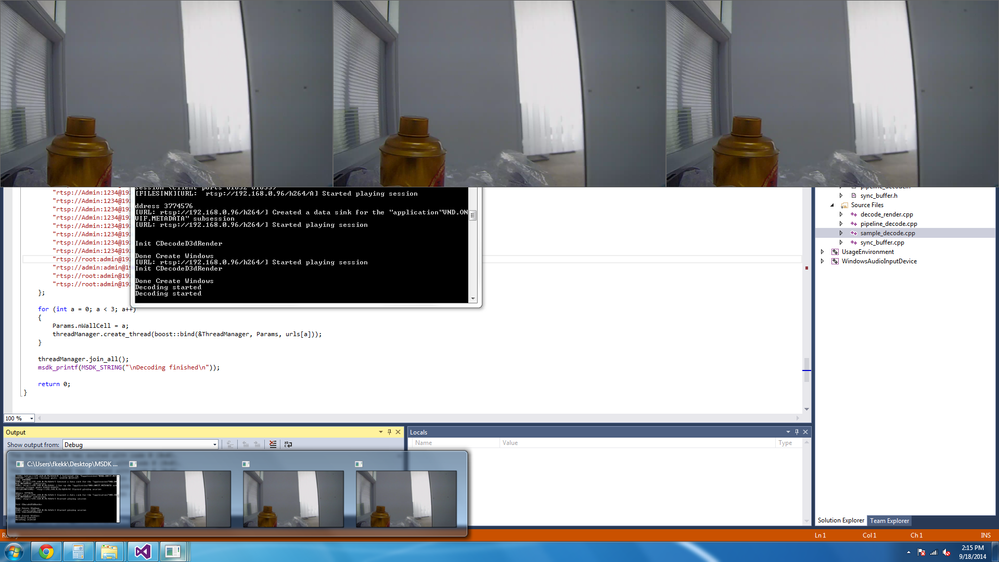

I managed to modify Intel's sample_decode to run the Media SDK pipeline in multiple threads for multi-channel video decoding. The Intel Media SDK performs well even with 16 channels of HD video (running on an Intel Xeon E3).

However, I am now at a loss as to how to combine all the decoded frames so that they render in the same window (in different regions of that window), rather than each Media SDK thread creating and rendering to its own window. I tried creating the window in the main thread, but the system crashes when Media SDK tries to render the decoded frame (I assume because the window and the video frames belong to different threads).

Therefore, I would be grateful for any suggestions on the architecture of the whole system.

Thank you, soo.

Hello there,

Thanks for your question. It looks like you are looking for a way to display multiple video streams in a single window (or in one output stream). If that is the case, composition is a useful feature that VPP provides (it is currently not supported on Windows). Composition lets you display multiple video streams on one output surface, and gives you the flexibility to choose the size of each individual stream and its location on the output. You can find information on composition in doc/mediasdk-man.pdf or the developer guide. I am hoping to publish a composition article with a code example on our website soon. If you need it more urgently, feel free to post a follow-up question and I will send you the information.
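To make "size and location of each stream" concrete, here is a minimal sketch that computes one rectangle per stream for a simple grid. `TileRect` and `makeGridLayout` are hypothetical names used only for illustration, not Media SDK types. In the SDK the per-stream placement is, as far as I know, expressed through destination fields such as `DstX`, `DstY`, `DstW` and `DstH` on each composition input, but please check mediasdk-man.pdf for the exact structures.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical placement record: where one stream lands on the output surface.
struct TileRect {
    uint32_t x, y, w, h;
};

// Lay out `streams` tiles in the smallest square grid that holds them all.
// Tile sizes are rounded down to even values so NV12 chroma stays aligned.
std::vector<TileRect> makeGridLayout(uint32_t outW, uint32_t outH, uint32_t streams) {
    assert(streams > 0);
    uint32_t cols = 1;
    while (cols * cols < streams) ++cols;          // e.g. 16 streams -> 4 columns
    const uint32_t rows = (streams + cols - 1) / cols;
    const uint32_t tileW = (outW / cols) & ~1u;
    const uint32_t tileH = (outH / rows) & ~1u;

    std::vector<TileRect> tiles;
    tiles.reserve(streams);
    for (uint32_t i = 0; i < streams; ++i) {
        tiles.push_back({(i % cols) * tileW, (i / cols) * tileH, tileW, tileH});
    }
    return tiles;
}
```

For 16 streams on a 1920x1080 output this gives a 4x4 grid of 480x270 tiles. The same rectangles can drive a hardware compositor or a software copy.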

If you are working on Windows and are looking for a way to composite, we currently recommend that developers use their own software implementation. Another option: instead of opening a window to display each stream after it is decoded, one can smartly write the individual streams to a file. By smartly I mean writing the raw frames to the file as though you were compositing them. For example, you can modify the file-write function so that the streams appear next to each other, one below the other, and so on.
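A software implementation of this idea could look roughly like the sketch below, which copies one decoded frame into a region of a larger frame held in system memory. It assumes NV12 frames with even dimensions and tightly packed rows. `Nv12Image` and `blitNv12` are hypothetical helpers for illustration, not part of Media SDK.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical system-memory NV12 image: Y plane, then interleaved UV plane.
struct Nv12Image {
    uint32_t width, height;
    std::vector<uint8_t> data;  // width*height luma + width*height/2 chroma

    Nv12Image(uint32_t w, uint32_t h, uint8_t fill = 0)
        : width(w), height(h), data(static_cast<std::size_t>(w) * h * 3 / 2, fill) {}

    uint8_t* y() { return data.data(); }
    uint8_t* uv() { return data.data() + static_cast<std::size_t>(width) * height; }
    const uint8_t* y() const { return data.data(); }
    const uint8_t* uv() const { return data.data() + static_cast<std::size_t>(width) * height; }
};

// Copy `src` into `dst` with its top-left corner at (dstX, dstY).
void blitNv12(const Nv12Image& src, Nv12Image& dst, uint32_t dstX, uint32_t dstY) {
    assert(dstX % 2 == 0 && dstY % 2 == 0);            // keep chroma aligned
    assert(src.width % 2 == 0 && src.height % 2 == 0);
    assert(dstX + src.width <= dst.width && dstY + src.height <= dst.height);

    // Luma: one byte per pixel, src.height rows.
    for (uint32_t row = 0; row < src.height; ++row) {
        std::memcpy(dst.y() + static_cast<std::size_t>(dstY + row) * dst.width + dstX,
                    src.y() + static_cast<std::size_t>(row) * src.width, src.width);
    }
    // Chroma: half as many rows, same byte width because U and V are interleaved.
    for (uint32_t row = 0; row < src.height / 2; ++row) {
        std::memcpy(dst.uv() + static_cast<std::size_t>(dstY / 2 + row) * dst.width + dstX,
                    src.uv() + static_cast<std::size_t>(row) * src.width, src.width);
    }
}
```

Calling `blitNv12` once per stream, with a different offset for each, produces a single composited frame that can then be displayed or written out. Every byte is copied on the CPU, so this costs more than a hardware path.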

If I misunderstood your question, or you need more information - feel free to post it.

I just noticed that your title says "DirectX", so unfortunately composition is not going to work. If you have the flexibility to move the implementation to Linux, you can certainly use the feature.

Anyway, let me know if you need any more details; I will certainly try to help.

SRAVANTHI K. (Intel) wrote:

one can smartly write individual stream to a file

Transfer UNCOMPRESSED video via file??? Cool! It's a great coming technology!

I should have been a little clearer, I suppose. I was suggesting implementing composition in software to get the desired result. Obviously it will not be as fast as the hardware solution, and yes, it will have to deal with copying from video memory to system memory, but depending on the use case, the input, and the available resources, it may be a decent workaround. But yes, Media SDK currently does not support composition on Windows.

SRAVANTHI K. (Intel) wrote:

I should have been a little clearer, I suppose. I was suggesting implementing composition in software to get the desired result. Obviously it will not be as fast as the hardware solution, and yes, it will have to deal with copying from video memory to system memory, but depending on the use case, the input, and the available resources, it may be a decent workaround. But yes, Media SDK currently does not support composition on Windows.

Dear Sravanthi,

Thanks for the detailed explanation. Unfortunately, we are running on Windows. Could you advise how we could copy the decoded frames from video memory back to system memory efficiently?

Thank you.

Hi Soo,

A colleague suggested this workaround to me, and it is pretty neat: you can use the DirectX composition capability to achieve what you are after. Microsoft has a lot of documentation on how to use composition; here is one such page: http://msdn.microsoft.com/en-us/library/windows/desktop/hh706320(v=vs.85).aspx . This way, your surfaces do not have to be copied from GPU to CPU and back for rendering, and you will achieve the desired effect. (Also, if you are using Media SDK for decode alone, you could look into DXVA decode/rendering on the Microsoft web pages.)

For completeness' sake, here is some information on video and system surfaces, and on software composition.

When using HW acceleration (the "-hw" option in the samples and tutorials), it is recommended to use video memory for best performance. You can specify that with mfxVideoParam.IOPattern = MFX_IOPATTERN_OUT_VIDEO_MEMORY (for decode). The output of the decoder is mfxFrameSurface1* pmfxOutSurface. Up to this point, we are using video surfaces. You can access the decoded frame through the plane pointers in pmfxOutSurface->Data (for example Data.Y). Once you read the pixels in your own code, you have requested a video-to-system memory copy (this code executes on the CPU, so the data has to move from GPU to CPU). The samples and tutorials handle this for you, but the latency will be noticeable. For example, in our tutorials we write the decoded frames to an output file using the WriteFrame() function, which is implemented in software, so we are basically copying the frame from GPU to CPU there. Hope this helps your understanding.
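As a rough illustration of the CPU-side copy described above, the sketch below packs an NV12 frame whose rows are padded to a pitch into a tightly packed system-memory buffer. `LockedNv12` and `copyToPacked` are hypothetical stand-ins. In a real application the pointers and pitch would come from the surface's `Data` member once the surface is readable from the CPU (with video memory that normally means locking it through the frame allocator first), which this sketch does not show.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical view of a CPU-readable NV12 surface (not a Media SDK type).
struct LockedNv12 {
    const uint8_t* y;   // start of the luma plane
    const uint8_t* uv;  // start of the interleaved chroma plane
    uint32_t pitch;     // bytes per row including padding
    uint32_t width;     // visible width in pixels
    uint32_t height;    // visible height in pixels
};

// Copy only the visible bytes of each row, dropping the pitch padding.
std::vector<uint8_t> copyToPacked(const LockedNv12& s) {
    assert(s.y != nullptr && s.uv != nullptr);
    assert(s.pitch >= s.width && s.height % 2 == 0);

    std::vector<uint8_t> out(static_cast<std::size_t>(s.width) * s.height * 3 / 2);
    uint8_t* dst = out.data();

    for (uint32_t row = 0; row < s.height; ++row) {       // luma rows
        std::memcpy(dst, s.y + static_cast<std::size_t>(row) * s.pitch, s.width);
        dst += s.width;
    }
    for (uint32_t row = 0; row < s.height / 2; ++row) {   // chroma rows
        std::memcpy(dst, s.uv + static_cast<std::size_t>(row) * s.pitch, s.width);
        dst += s.width;
    }
    return out;
}
```

Copying row by row matters because the pitch of a hardware surface is usually larger than the frame width. A single `memcpy` of `width * height` bytes would pull padding into the output.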

Dear Sravanthi,

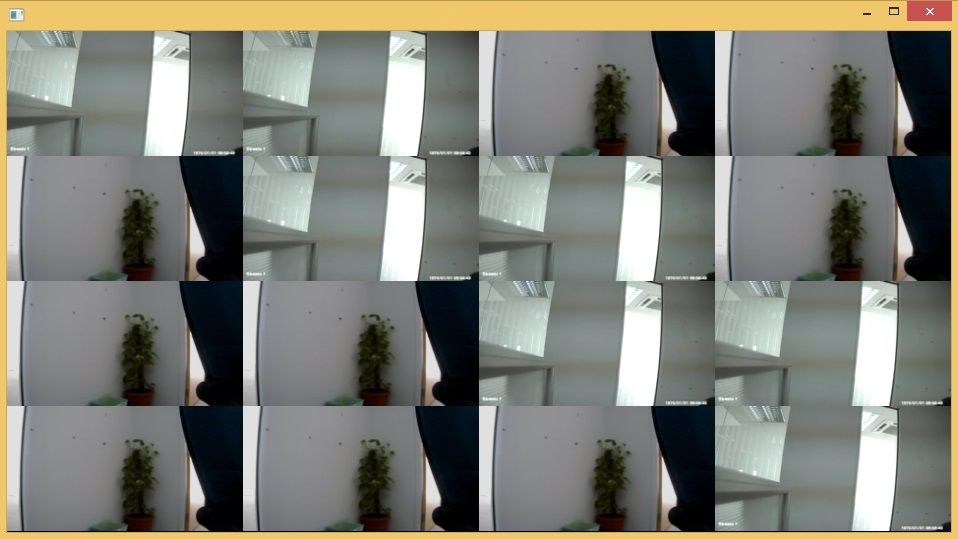

Thank you so much for the information. We finally managed to solve the issue and are very satisfied with the performance the Intel MSDK is able to deliver.

Hello Soo,

Very glad you were able to achieve the desired effect with MSDK for HD videos (and 16 streams!).

For my understanding, can you please let me know how you implemented this - did you take our suggestion of using DirectX Composition capability, or did you implement (software) composition effect in MSDK as we discussed earlier?

SRAVANTHI K. (Intel) wrote:

Hello Soo,

Very glad you were able to achieve the desired effect with MSDK for HD videos (and 16 streams!).

For my understanding, can you please let me know how you implemented this - did you take our suggestion of using DirectX Composition capability, or did you implement (software) composition effect in MSDK as we discussed earlier?

Actually, I tested both methods. Software composition consumes about 30% of the CPU, while DirectX composition consumes only 10% (for 16 x 720p H.264 streams at 25 fps).

Great, thanks for the information.

On a side note: since you have two neat implementations, I would highly encourage you to publish an article with your code if you would like it to be useful to many more people. Once you publish it and send me the link, I can verify it and point people to it on the forum as a resource. Here is the link: https://software.intel.com/en-us/node/add/article.

Thanks again.
