Along with the rise of convolutional neural networks, we are starting to see the emergence of dedicated inference accelerators. Many edge and cloud inference workloads already employ multiple accelerators. But even in more traditional consumer applications, the combination of CPU and GPU is ubiquitous (consider Intel-based platforms with integrated graphics processors), and the automatic utilization of both devices is increasingly appealing.

However, without proper support, integrating more accelerators is not a simple drop-in change: it requires changing both the code and the load-balancing logic of the application. Fortunately, the Intel® Distribution of OpenVINO™ toolkit provides a solution that’s already unified across various Intel architectures and now supports automatic multi-device inference, paving the path toward scalable accelerator integration.

Key performance benefits of the automatic multi-device inference support include:

- Improved throughput across multiple devices compared to single-device execution

- More consistent performance, since devices can now share the inference burden. If one device becomes busy with tasks other than inference (e.g. decoding), other devices automatically handle more of the load

- Support for variations in end-user systems that doesn’t require hard-coding the usage of each device. Notice that with the new InferenceEngine API it is also possible to enumerate all devices and create the multi-device on top

Configuring the Multi-Device

Multi-Device support is a part of the toolkit’s InferenceEngine core functionality. Its setup has two major steps:

- Configuration of each individual device as usual (e.g. the number of inference threads for the CPU). Notice that the new InferenceEngine “core” API allows you to create handy aliases for the devices.

- Defining the multi-device on top of the (prioritized) list of the configured devices. This is the only change that you need in your application. Notice that the priorities of the devices can later be changed at run time without stopping the inference.

Finally, the application communicates with the multi-device just like with any other device: it loads the network onto it and creates as many inference requests as needed to saturate that specific multi-device instance. See the documentation for further details and code examples.
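
As a rough illustration of the second step, the sketch below builds a multi-device name of the form "MULTI:<device>,<device>" (the same form used on the benchmark command line later in this post) from a prioritized list, skipping devices that are not present. The helper name `multi_device_name` and the hard-coded `available_devices` list are assumptions made for this example; a real application would obtain the device list from the toolkit and pass the resulting string wherever a device name is expected.

```python
from typing import Iterable, List


def multi_device_name(available: Iterable[str], priority: List[str]) -> str:
    """Return a "MULTI:<dev1>,<dev2>" name built from devices that are present.

    `priority` lists device names from most to least preferred. Devices that
    are not in `available` are skipped, so nothing is hard-coded per system.
    """
    present = set(available)
    chosen = [dev for dev in priority if dev in present]
    if not chosen:
        raise ValueError("none of the preferred devices is available")
    return "MULTI:" + ",".join(chosen)


# Hypothetical enumeration result, used here only for illustration.
available_devices = ["CPU", "GPU"]
print(multi_device_name(available_devices, ["HDDL", "GPU", "CPU"]))
```

On a system without the HDDL accelerator, this prints `MULTI:GPU,CPU`; the same preference list yields a different device set on a different machine without any code change.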

Multi-Device Execution

Internal multi-device logic automatically assigns individual inference requests to available computational devices to execute the requests in parallel.

Notice that the application execution logic is left unchanged. You don't need to explicitly load the network to each device, keep a separate per-device queue, balance the inference requests between queues, etc. From the application point of view, this is just another device that handles the actual machinery.
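
To make the idea of request-level balancing more concrete, here is a small, deterministic simulation. It is not the toolkit's actual scheduler: it assumes a simple greedy policy (each request goes to whichever device becomes idle first), one request in flight per device, and made-up per-request latencies.

```python
import heapq
from typing import Dict, Tuple


def simulate_balancing(num_requests: int,
                       latency_ms: Dict[str, float]) -> Tuple[Dict[str, int], float]:
    """Greedy request-level balancing: each request goes to the device that frees up first.

    Returns the number of requests handled per device and the time (in ms)
    at which the last request finishes.
    """
    # Heap entries are (time at which the device becomes idle, device name).
    idle_at = [(0.0, name) for name in latency_ms]
    heapq.heapify(idle_at)
    counts = {name: 0 for name in latency_ms}
    finish = 0.0
    for _ in range(num_requests):
        start, name = heapq.heappop(idle_at)
        done = start + latency_ms[name]
        counts[name] += 1
        finish = max(finish, done)
        heapq.heappush(idle_at, (done, name))
    return counts, finish


# Hypothetical latencies: the GPU is assumed to be three times faster than the CPU.
counts, total_ms = simulate_balancing(40, {"CPU": 30.0, "GPU": 10.0})
print(counts, total_ms)
```

With these assumed latencies the faster device ends up with three quarters of the requests, and the 40 requests finish in 300 ms, compared with 400 ms for the simulated GPU alone. The application-side code would be the same in both cases; only the device name changes.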

There are two major requirements to leverage performance with the multi-device:

- Providing the multi-device (and hence the underlying devices) with enough data to crunch. As the inference requests are naturally independent data pieces (like video frames or images), multi-device performs load-balancing at the “requests” (outermost) level to minimize the scheduling overhead.

- Using inference request completion callbacks, as opposed to the legacy blocking Wait calls. This is critical for performance because with multi-device execution the order in which requests complete doesn’t necessarily match the submission order (as different devices might have very different speeds).

For example, consider a scenario of processing four cameras (and four inference requests) on a single CPU or GPU. With CPU+GPU capabilities, you may want to process more cameras (by having additional requests in flight) to keep the multi-device busy, or let the CPU and GPU process fewer cameras, improving the latency.
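
The second requirement above can be illustrated without the toolkit. The sketch below uses Python's standard `concurrent.futures` as a stand-in for inference requests: two "requests" are submitted, the first to a slow worker and the second to a fast one, and a completion callback records the order in which results arrive. The function names and the `threading.Event` (used only to make the demo deterministic) are assumptions of this example, not part of the InferenceEngine API.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def run_demo():
    completion_order = []
    fast_handled = threading.Event()

    def slow_device(frame):
        # Stand-in for a slower device: it finishes only after the
        # fast device's result has already been handled.
        fast_handled.wait(timeout=5)
        return frame

    def fast_device(frame):
        # Stand-in for a faster device: returns immediately.
        return frame

    def on_done(future):
        # Completion callback: runs as soon as this particular request finishes.
        frame = future.result()
        completion_order.append(frame)
        if frame == "frame-1":
            fast_handled.set()

    with ThreadPoolExecutor(max_workers=2) as pool:
        first = pool.submit(slow_device, "frame-0")   # submitted first
        second = pool.submit(fast_device, "frame-1")  # submitted second
        first.add_done_callback(on_done)
        second.add_done_callback(on_done)
    return completion_order


print(run_demo())
```

The recorded order is `['frame-1', 'frame-0']`: the request submitted second is handled first. A loop that blocked on `first.result()` before looking at `second` would have left the fast result sitting idle until the slow one finished, which is the situation callbacks avoid.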

Multi-Device Performance

Below are multi-device performance results for a range of inference devices:

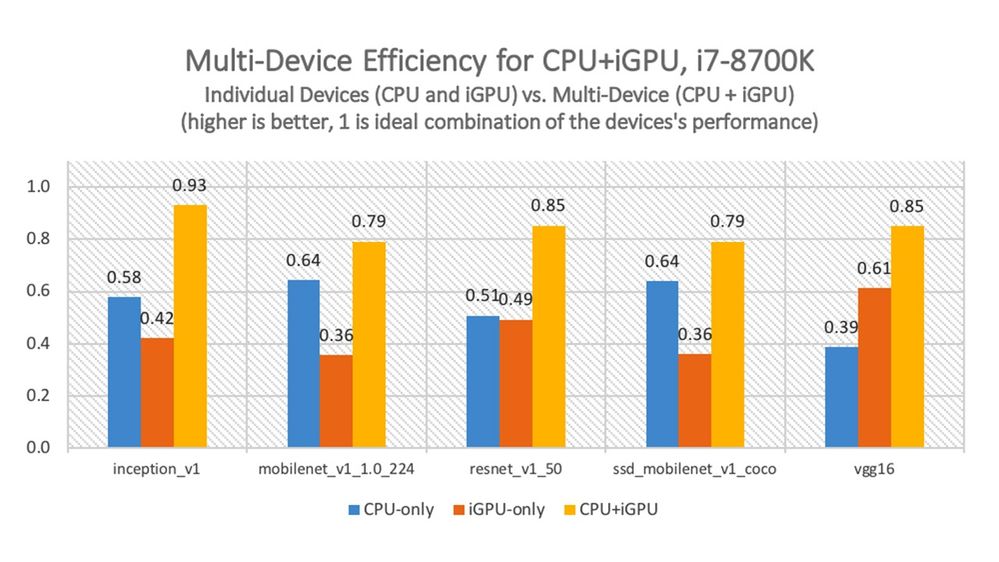

Figure 1. Multi-device execution on the CPU and integrated GPU of the Intel Core i7-8700K processor. ‘1’ represents the “ideal” performance (if the numbers from individual “CPU-only” and “GPU-only” devices were combined perfectly). Configuration: Intel® Core™ i7-8700k processor @ 3.20GHz with Gen9 Graphics, 16 GB RAM, OS: Ubuntu 16.04.3 LTS 64 bit, Kernel: 4.15.0-29-generic. Intel® Distribution of OpenVINO™ toolkit 2019 R2. See notices and disclaimers for details.

The actual multi-device performance is a fraction of the “ideal” performance, and that fraction varies from topology to topology. In this case, the CPU and GPU share the same die and actually compete for the memory bandwidth.

Also, the performance of both devices is affected by dynamic frequency scaling, potentially resulting in one or both devices falling out of turbo frequencies to manage power consumption and aggregated heat production. Even though both factors reduce overall performance, the multi-device efficiency ranges from 79% to 93%.

Finally, regardless of whether the CPU or GPU is faster for the specific model, the multi-device is always faster than the best single-device configuration.
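
For readers who want to compute the same efficiency metric on their own measurements, the following sketch divides the measured multi-device throughput by the "ideal" sum of the single-device throughputs, as described in the caption of Figure 1. The throughput values are hypothetical and chosen only to make the arithmetic easy to follow; they are not taken from the figure.

```python
from typing import Sequence


def multi_device_efficiency(multi_fps: float, single_fps: Sequence[float]) -> float:
    """Measured multi-device throughput divided by the sum of single-device throughputs."""
    ideal = sum(single_fps)
    if ideal <= 0:
        raise ValueError("single-device throughputs must sum to a positive value")
    return multi_fps / ideal


# Hypothetical numbers for illustration only (frames per second).
cpu_fps, gpu_fps, multi_fps = 100.0, 150.0, 215.0
efficiency = multi_device_efficiency(multi_fps, [cpu_fps, gpu_fps])
print(f"efficiency: {efficiency:.0%}")
print("faster than best single device:", multi_fps > max(cpu_fps, gpu_fps))
```

Here the ideal throughput is 100 + 150 = 250 FPS, so a measured 215 FPS corresponds to 215 / 250 = 86% efficiency, while still exceeding the faster of the two single devices.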

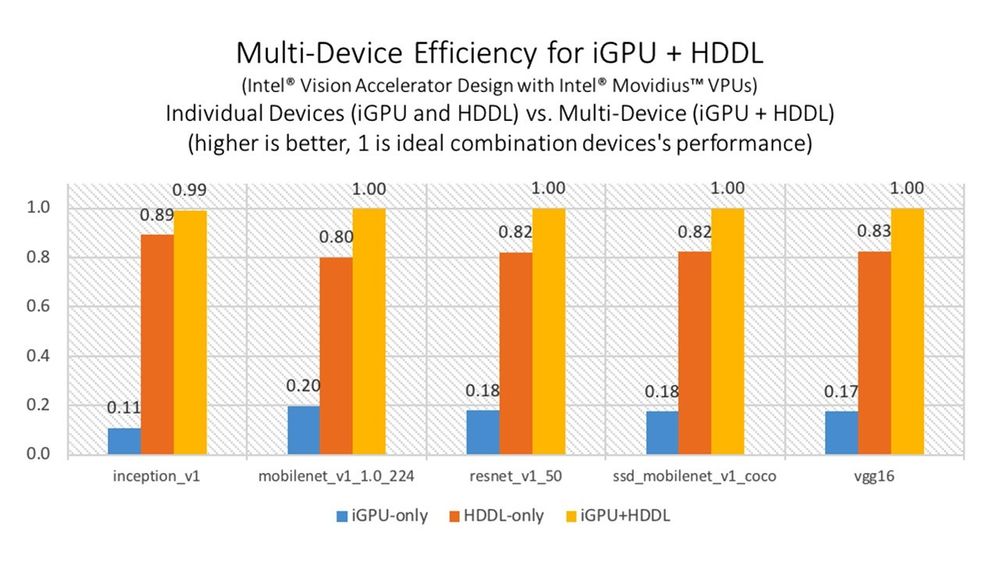

Figure 2. Multi-device execution on the integrated GPU and High-Density Deep Learning (HDDL, see Intel® Vision Accelerator Design with Intel® Movidius™ VPUs product details). ‘1’ represents the “ideal” performance (if the numbers from individual devices were combined perfectly). Configuration: Intel® Core™ i7-8700k processor @ 3.20GHz with Gen9 Graphics, 16 GB RAM, Intel® Vision Accelerator with 8 Intel® Movidius™ VPUs. OS: Ubuntu 16.04.3 LTS 64 bit, Kernel: 4.15.0-29-generic. Intel® Distribution of OpenVINO™ toolkit 2019 R2. See notices and disclaimers for details.

The iGPU and Intel® Vision Accelerator Design with Intel® Movidius™ VPUs delivered at least 99% combined performance efficiency for all example models when combined into the multi-device. Next, we’ll discuss how to reproduce numbers from this section.

Using the Multi-Device with Intel Distribution of OpenVINO Toolkit Samples and Benchmarking the Performance

Notice that every toolkit sample that supports the "-d" (which stands for "device") command-line option transparently accepts the multi-device. The Benchmark Application is the best reference for the optimal usage of the multi-device. Below is an example command line to evaluate HDDL+GPU performance:

$ ./benchmark_app -d MULTI:HDDL,GPU -m -i

Numbers from the previous section were collected this way. You can use the input model with FP16 IR to work with multi-device, since in the latest Intel Distribution of OpenVINO toolkit release, all devices support it.
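
To compare single-device and multi-device runs side by side, one option is to generate the command lines programmatically. The helper below is a sketch: it only uses the "-d", "-m" and "-i" options shown above, and the model and input paths are hypothetical placeholders to be replaced with your own files.

```python
import shlex
from typing import List


def benchmark_command(devices: List[str], model_path: str, input_path: str,
                      app: str = "./benchmark_app") -> List[str]:
    """Build a benchmark_app argument list for one device or a multi-device."""
    if not devices:
        raise ValueError("at least one device is required")
    # A single device is passed as-is; several devices form a MULTI: list.
    device_arg = devices[0] if len(devices) == 1 else "MULTI:" + ",".join(devices)
    return [app, "-d", device_arg, "-m", model_path, "-i", input_path]


# Hypothetical paths, for illustration only.
for devices in (["HDDL"], ["GPU"], ["HDDL", "GPU"]):
    print(shlex.join(benchmark_command(devices, "model.xml", "input.png")))
```

Each printed line can be run in a shell, or the argument list can be passed directly to `subprocess.run` to collect the three results in one script.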

Conclusion

Using multiple accelerators leads to higher inference throughput and other exciting performance opportunities, yet it would normally result in a diverse and complicated design, with many variables to balance in order to orchestrate the execution in the application.

The Intel Distribution of OpenVINO toolkit enables powerful inference performance across a wide set of models and application areas. Now, the new OpenVINO multi-device inference feature provides an application-transparent way to automatically leverage multiple accelerators. This brings substantial out-of-the-box performance improvements, without the need to re-architect existing applications for any explicit multi-device logic.

Additional resources:

- General introduction to the Intel Distribution of OpenVINO toolkit: https://docs.openvinotoolkit.org/latest/index.html

- OpenVINO Multi-Device documentation: https://docs.openvinotoolkit.org/latest/_docs_IE_DG_supported_plugins_MULTI.html

Notices and Disclaimers:

Software and workloads used in performance tests may have been optimized for performance only on Intel microprocessors.

Performance tests, such as SYSmark and MobileMark, are measured using specific computer systems, components, software, operations and functions. Any change to any of those factors may cause the results to vary. You should consult other information and performance tests to assist you in fully evaluating your contemplated purchases, including the performance of that product when combined with other products. For more complete information visit www.intel.com/benchmarks.

Test Configuration:

Intel® Core™ i7-8700 Processor @ 3.20GHz with 16 GB RAM, Intel® Vision Accelerator with 8 Intel Movidius VPUs, Gen9 Graphics (Intel® UHD Graphics 630), OS: Ubuntu 16.04.3 LTS 64 bit, Kernel: 4.15.0-29-generic. Intel Distribution of OpenVINO toolkit 2019 R2.

Performance results are based on testing as of July 16, 2019 by Intel Corporation and may not reflect all publicly available security updates. See configuration disclosure for details. No product or component can be absolutely secure. For more complete information about performance and benchmark results, visit www.intel.com/benchmarks.

Optimization Notice: Intel’s compilers may or may not optimize to the same degree for non-Intel microprocessors for optimizations that are not unique to Intel microprocessors. These optimizations include SSE2, SSE3, and SSSE3 instruction sets and other optimizations. Intel does not guarantee the availability, functionality, or effectiveness of any optimization on microprocessors not manufactured by Intel. Microprocessor-dependent optimizations in this product are intended for use with Intel microprocessors. Certain optimizations not specific to Intel microarchitecture are reserved for Intel microprocessors. Please refer to the applicable product User and Reference Guides for more information regarding the specific instruction sets covered by this notice.

Notice Revision #20110804

Mary is the Community Manager for this site. She likes to bike, and do college and career coaching for high school students in her spare time.
