Published October 5, 2021

Moshe Wasserblat is currently the research manager for Natural Language Processing (NLP) at Intel Labs.

Tango, x10 inference speedup vs. BERT with a cascade student-teacher model

In my previous post, Best practice for Text-Classification with Distillation – Word Order Sensitivity (WOS) (Part 3/4), I introduced a metric for estimating the complexity level of a task and described how to use that metric to estimate distillation performance. In this post, I present the Tango architecture, a simple cascade student-teacher model that exploits the simplicity of many task instances to maximize throughput for text classification.

Tango Architecture

Schwartz et al., 2020 proposed a Transformer-based architecture, which uses an early exit strategy based on the confidence of the prediction in each layer. The Transformer dynamically expands/shrinks in size during inference based on the complexity of each inference sample.

Following this logic, we propose a simple method that combines the advantages of the ‘early exit’ approach with the benefits of model distillation. In the previous posts, I made two observations. First, inference instances vary in their level of complexity (Blog 3/4), which affects the amount of computational resources needed to classify them correctly. Second, datasets with a higher proportion of ‘complex’ instances require deeper and more contextually aware models (Blog 2/4). In this work, we leverage those observations by proposing Tango, a cascaded teacher-student model in which a small and efficient student model processes ‘simple’ instances, while ‘complex’ instances are processed by a deeper and less efficient teacher model.

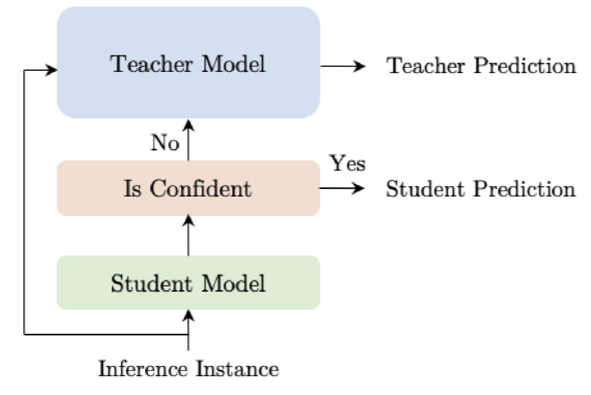

The following figure shows the Tango model: each inference instance is first processed by the efficient student model. If the student model is confident in its prediction (the instance is considered ‘simple’), the student produces the final prediction and the teacher model never processes the instance. Otherwise, the instance is considered ‘complex’, and the teacher model processes it and produces the final prediction.
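To make the routing rule concrete, here is a minimal sketch of the cascade decision. It is an illustration under stated assumptions, not our actual implementation: the `Classifier` interface, the function names, the default threshold of 0.9, and the use of the highest class probability as the confidence score are all hypothetical choices, and the two toy models simply return fixed probabilities.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical interface: a classifier maps a text to a list of class probabilities.
Classifier = Callable[[str], Sequence[float]]


def _argmax(probs: Sequence[float]) -> int:
    """Index of the largest probability."""
    return max(range(len(probs)), key=lambda i: probs[i])


def cascade_predict(text: str, student: Classifier, teacher: Classifier,
                    threshold: float = 0.9) -> Tuple[int, str]:
    """Return (predicted class, name of the model that produced the prediction)."""
    student_probs = student(text)
    # Assumption: confidence is the highest class probability from the student.
    if max(student_probs) >= threshold:
        # 'Simple' instance: the student answers and the teacher is never called.
        return _argmax(student_probs), "student"
    # 'Complex' instance: fall back to the slower teacher.
    return _argmax(teacher(text)), "teacher"


# Toy stand-ins for the two models, for illustration only.
def toy_student(text: str) -> Sequence[float]:
    return [0.97, 0.03] if "great" in text else [0.55, 0.45]


def toy_teacher(text: str) -> Sequence[float]:
    return [0.20, 0.80]


print(cascade_predict("a great movie", toy_student, toy_teacher))          # (0, 'student')
print(cascade_predict("not exactly a triumph", toy_student, toy_teacher))  # (1, 'teacher')
```

Raising `threshold` sends more instances to the teacher, and lowering it keeps more of them with the student, so this single parameter controls the balance between speed and accuracy.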

For a dataset that consists mostly of easy instances, we can assume that most of the computing cycles are spent in the teacher, since the student model’s architecture is considerably more efficient, with much higher throughput, than the teacher’s (e.g., Bi-LSTM vs. RoBERTa). For example, on a dataset with 90% ‘simple’ instances, only the remaining 10% reach the teacher, so Tango achieves roughly a x10 effective speedup over RoBERTa, assuming the speedup ratio of *Bi-LSTM vs. RoBERTa is well above x100.
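The arithmetic behind the ~x10 figure can be sketched as follows. This is a simplified cost model, assuming that every instance passes through the student, that only the ‘complex’ fraction continues to the teacher, and that overheads such as batching are ignored. The function name and parameters are illustrative; the x160 ratio is the Bi-LSTM vs. RoBERTa CPU measurement reported in this post.

```python
def effective_speedup(simple_fraction: float, student_speedup: float) -> float:
    """Speedup of the cascade over running the teacher on every instance.

    Costs are normalized so the teacher costs 1 unit per instance and the
    student costs 1 / student_speedup units per instance.
    """
    if not 0.0 <= simple_fraction <= 1.0:
        raise ValueError("simple_fraction must be between 0 and 1")
    if student_speedup <= 0.0:
        raise ValueError("student_speedup must be positive")
    student_cost = 1.0 / student_speedup   # paid for every instance
    teacher_cost = 1.0 - simple_fraction   # paid only for 'complex' instances
    return 1.0 / (student_cost + teacher_cost)


# 90% 'simple' instances with a x160 faster student.
print(round(effective_speedup(0.9, 160.0), 2))  # 9.41
```

Under this model, the speedup is capped at 1 / (1 - simple_fraction) no matter how fast the student is, which is why the proportion of ‘simple’ instances matters more than squeezing extra throughput out of the student.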

Here’s an abstract view of the Tango architecture:

* In our experiment, we use a Bi-LSTM as the student and RoBERTa as the teacher; the CPU speedup ratio of the Bi-LSTM over RoBERTa is x160 (measured on an Intel(R) Xeon(R) CLX Platinum 8280).

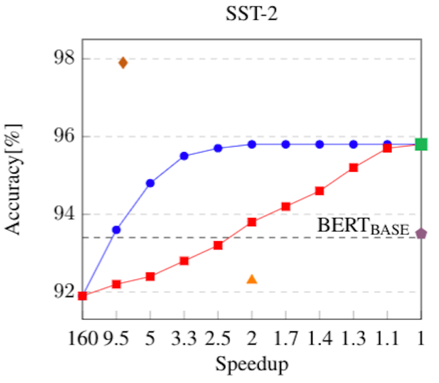

In the figure below, we show the Tango model’s accuracy as a function of CPU speedup relative to the RoBERTa model on GLUE's SST-2. Tango (blue circles, where each point represents a different confidence threshold) is compared to the random decision baseline (red squares), RoBERTa (green square), BERT (purple pentagon), DistilBERT (orange triangle) and the oracle (brown diamond). As an example, on GLUE's SST-2 Tango achieves BERT’s accuracy (93.5%) with a speedup of approximately x10, whereas DistilBERT achieves lower accuracy (92.3%) with a maximum speedup of x2.

Tango provides a better speed/accuracy tradeoff than DistilBERT while alleviating the need to retrain each point along the speed/accuracy curve.
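Because both models stay fixed, each point on the speed/accuracy curve comes from changing only the confidence threshold. The sketch below shows one way such a sweep could be computed offline from cached predictions. It is an assumption-laden illustration: the function name and inputs are hypothetical, the speedup uses the same simplified cost model as before (every instance visits the student, forwarded instances also visit the teacher), and the four-instance arrays are invented toy values, not experimental results.

```python
from typing import List, Sequence, Tuple


def sweep_thresholds(student_conf: Sequence[float],
                     student_correct: Sequence[bool],
                     teacher_correct: Sequence[bool],
                     thresholds: Sequence[float],
                     student_speedup: float = 160.0) -> List[Tuple[float, float, float]]:
    """Return one (threshold, speedup vs. teacher, accuracy) point per threshold."""
    n = len(student_conf)
    points = []
    for t in thresholds:
        # An instance stays with the student when its confidence reaches the threshold.
        use_student = [conf >= t for conf in student_conf]
        correct = sum(
            s_ok if keep else t_ok
            for keep, s_ok, t_ok in zip(use_student, student_correct, teacher_correct)
        )
        teacher_fraction = use_student.count(False) / n
        speedup = 1.0 / (1.0 / student_speedup + teacher_fraction)
        points.append((t, speedup, correct / n))
    return points


# Invented toy values for four instances (not measurements).
conf = [0.99, 0.95, 0.80, 0.60]
student_ok = [True, True, False, False]
teacher_ok = [True, True, True, False]

for threshold, speedup, accuracy in sweep_thresholds(conf, student_ok, teacher_ok, [0.5, 0.9, 1.01]):
    print(f"threshold={threshold:.2f}  speedup=x{speedup:.2f}  accuracy={accuracy:.2f}")
```

No model is retrained between thresholds; the same cached student confidences and predictions are reused for every point.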

Data-Aware Inference and Future Research

Transformer-based models like BERT learn extensive knowledge of the language during pre-training, including syntactic structures and semantic cues. It is well known that after fine-tuning, BERT uses this knowledge to solve a given task at inference time. However, the early exit work shows that during inference BERT needs only part of its network to classify some instances. Building on this, our Tango work shows that it is possible to replace BERT with a shallower and more efficient neural network that successfully handles ‘simple’ examples. So, in practice, it’s possible to dynamically adjust how much computation is spent on each instance and achieve a high speedup with a very small accuracy reduction compared to the original model.

In my blog series, I showed that efficiency is highly dependent on the task and data complexity. I hope to see the continued exploration of data-aware optimization techniques for dynamic adaptation of size and speed using Transformers in production. I also hope we’ll continue to advance our understanding of when a data instance is simple or hard for a given task and utilize this prediction for an early exit or efficient switch architecture.

Note: The work will soon be available on arXiv.

Special thanks to my collaborators Oren Pereg, Jonathan Mamou, and Roy Schwartz.

Mr. Moshe Wasserblat is currently the Natural Language Processing (NLP) and Deep Learning (DL) research group manager at Intel’s AI Product group. Previously, he was with NICE Systems for more than 17 years, where he founded NICE’s Speech Analytics Research Team. His interests are in the fields of Speech Processing and Natural Language Processing (NLP). He was the co-founder coordinator of the EXCITEMENT FP7 ICT program and served as organizer and manager of several initiatives, including many Israeli Chief Scientist programs. He has filed more than 60 patents in the field of Language Technology and has several publications in international conferences and journals. His areas of expertise include: Speech Recognition, Conversational Natural Language Processing, Emotion Detection, Speaker Separation, Speaker Recognition, Information Extraction, Data Mining, and Machine Learning.
