Deep Learning (DL) and Artificial Intelligence (AI) are quickly becoming ubiquitous. Naveen Rao, General Manager of Intel's Artificial Intelligence Products Group, recently stated that “there is a vast explosion of [AI] applications,” and Andrew Ng calls AI “the new electricity.” Deep learning applications already run in the cloud, in our homes and cars, on our mobile devices, and on various embedded IoT (Internet of Things) devices. These applications employ Deep Neural Networks (DNNs), which are notoriously time-, compute-, energy-, and memory-intensive. Applications need to strike a balance between accuracy, speed, and power consumption, taking into consideration the hardware and software constraints and the product ecosystem.

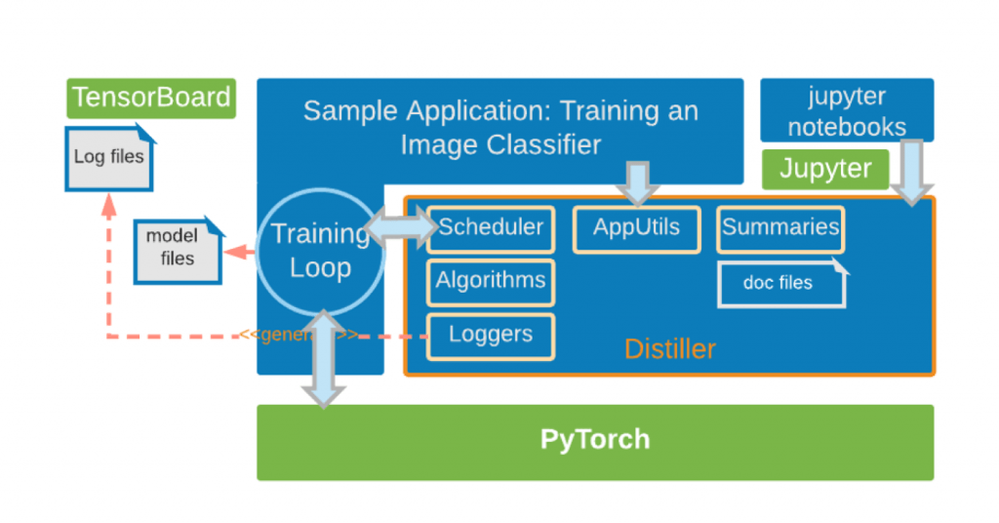

At Intel® AI Lab, we have recently open-sourced Neural Network Distiller, a Python* package for neural network compression research. Distiller provides a PyTorch* environment for prototyping and analyzing compression algorithms, such as sparsity-inducing methods and low-precision arithmetic. We think that DNN compression can be another catalyst that will help bring Deep Learning innovation to more industries and application domains, to make our lives easier, healthier, and more productive.

The Case for Compression

User-facing Deep Learning applications place a premium on the user experience, and interactive applications are especially sensitive to response time. Google’s internal research found that even small delays in service response have a significant impact on user engagement. As more applications and services are powered by DL and AI, low-latency inference becomes increasingly important, for both cloud-based services and mobile applications.

Cloud applications that process batches of inputs are more concerned with Total Cost of Ownership (TCO) than with response latency. For example, a mortgage lending firm that uses Machine Learning (ML) and DL to evaluate the risk of mortgage applications processes vast quantities of data. For such a workload, high throughput is valued more than low latency, and keeping the energy bill, a main component of TCO, under control is a concern.

IoT edge devices usually collect vast amounts of sensor data to be analyzed by DL-powered applications. Transferring all of this data directly to the cloud for processing is often prohibitive because of limited network bandwidth or battery power. Employing DL at the edge can therefore be very beneficial, for example to preprocess sensor data and reduce its size, or to offer reduced functionality when the connection to the cloud is unavailable. DL, however, often requires compute, memory, and storage resources that IoT devices either do not have or can only add at significant cost.

So, we see that whether we are deploying a deep learning application on a resource-constrained device or in the cloud, we can benefit from reducing the computation load, memory requirements, and energy consumption.

One approach is to design small network architectures from the get-go. SqueezeNet [1] and MobileNets [2] are two examples of architectures that explicitly target network size and speed. Another research effort by our colleagues at Intel AI Lab [5] presents a principled method for unsupervised structure learning of the deeper layers of DNNs, resulting in compact structures whose performance is equivalent to that of the original large structures.
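To make the "designed for size and speed" idea concrete, the MobileNets paper [2] compares the cost of a standard convolution with that of a depthwise separable convolution (a per-channel spatial filter followed by a 1×1 pointwise convolution). Using that paper's notation, which is not part of this article (a D_K×D_K kernel, M input channels, N output channels, and a D_F×D_F output feature map), the ratio of multiply-accumulate operations is:

```latex
\frac{\text{depthwise separable}}{\text{standard}}
  = \frac{D_K^2 \, M \, D_F^2 \;+\; M \, N \, D_F^2}{D_K^2 \, M \, N \, D_F^2}
  = \frac{1}{N} + \frac{1}{D_K^2}
```

With 3×3 kernels (D_K = 3) and N = 256 output channels, the ratio is 1/256 + 1/9 ≈ 0.115, roughly 8.7 times fewer operations, in line with the "8 to 9 times less computation" reported by the MobileNets authors.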

A different approach to achieving reduced network sizes is to start with a predetermined, well-performing DNN architecture and to transform it through some algorithmic steps into a smaller DNN. This is referred to as DNN compression.

Compression is a process in which a DNN’s requirements for at least one of compute, storage, power, memory, and time resources are reduced, while the accuracy of its inference function is kept within an acceptable range for a specific application. These resource requirements are often interrelated, so reducing the requirement for one resource frequently reduces another as well.

It is interesting to note that recent research has empirically demonstrated that even small-by-design networks, such as those mentioned above, can be further compressed [3].

This research also shows that large-and-sparse models perform significantly better than their small-and-dense equivalents (the pairs of compared models have the same architecture and memory footprint) [3], [4].

Introducing Distiller

We’ve built Distiller with the following features and tools, keeping both DL researchers and engineers in mind:

- A framework for integrating pruning, regularization, and quantization algorithms

- A set of tools for analyzing and evaluating compression performance

- Example implementations of state-of-the-art compression algorithms

Pruning and regularization are two methods that can be used to induce sparsity in a DNN’s parameter tensors. Sparsity measures how many elements of a tensor are exact zeros, relative to the tensor’s size. Sparse tensors can be stored more compactly in memory and can reduce the compute and energy required to carry out DNN operations. Quantization reduces the precision of the data types used in a DNN, again leading to reduced memory, energy, and compute requirements. Distiller provides a growing set of state-of-the-art methods and algorithms for quantization, pruning (structured and fine-grained), and sparsity-inducing regularization, leading the way to faster, smaller, and more energy-efficient models.
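The three ideas in the paragraph above (sparsity as a ratio, fine-grained pruning, and low-precision quantization) can be illustrated with a few lines of NumPy. This is a minimal sketch, not Distiller's API: the function names are hypothetical, pruning assumes a simple magnitude (absolute-value) criterion, and quantization assumes linear, symmetric, per-tensor 8-bit quantization, which is only one of many possible schemes.

```python
import numpy as np

def sparsity(t: np.ndarray) -> float:
    """Fraction of the tensor's elements that are exactly zero."""
    return np.count_nonzero(t == 0) / t.size

def magnitude_prune(t: np.ndarray, target: float) -> np.ndarray:
    """Fine-grained pruning (hypothetical helper): zero the `target` fraction
    of elements with the smallest absolute value."""
    k = int(round(target * t.size))      # number of elements to zero
    pruned = t.flatten()                 # flatten() returns a copy
    if k > 0:
        # Indices of the k smallest magnitudes (order among them is arbitrary).
        pruned[np.argpartition(np.abs(pruned), k - 1)[:k]] = 0.0
    return pruned.reshape(t.shape)

def quantize_int8(t: np.ndarray):
    """Linear, symmetric, per-tensor quantization to signed 8-bit integers."""
    max_abs = float(np.max(np.abs(t)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # float value of one integer step
    q = np.clip(np.round(t / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Map the 8-bit integers back to approximate float32 values."""
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
w = rng.standard_normal((64, 128)).astype(np.float32)  # stand-in weight tensor

w_pruned = magnitude_prune(w, 0.8)
print(round(sparsity(w_pruned), 3))   # 0.8

q, scale = quantize_int8(w)
err = np.max(np.abs(dequantize(q, scale) - w))  # worst-case rounding error
print(w.nbytes, q.nbytes)             # 32768 8192: 4x smaller storage
```

Because rounding is to the nearest integer step and nothing is clipped, the worst-case quantization error here is at most half of `scale`; schemes that clip outliers trade a larger error on a few values for finer resolution on the rest.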

To help you concentrate on your research, we’ve tried to provide the generic functionality, both high- and low-level, that we think most people will need for compression research. Some examples:

- Certain compression methods dynamically remove filters and channels from convolutional layers while a DNN is being trained. Distiller will change the configuration of the targeted layers as well as their parameter tensors. In addition, it will analyze the data dependencies in the model and modify dependent layers as needed

- Distiller will automatically transform a model for quantization by replacing specific layer types with their quantized counterparts. This saves you the hassle of manually converting each floating-point model you use to its lower-precision form, and allows you to focus on developing the quantization method and on scaling and testing your algorithm across many models
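The filter-removal case in the first item can be sketched in NumPy to show why dependent layers must change too: when a convolution loses output filters, the layer that consumes its output must lose the matching input channels. This is a hypothetical illustration, not Distiller's implementation. It assumes an L1-norm ranking criterion, a simple chain of two layers, no biases, and 1×1 kernels so that each convolution reduces to an `einsum`.

```python
import numpy as np

def prune_filters(conv_w, next_w, n_keep):
    """Keep the n_keep output filters of conv_w with the largest L1 norm, and
    drop the matching input channels of the layer that consumes its output.

    conv_w: (out_channels, in_channels, kh, kw)
    next_w: (next_out_channels, out_channels, kh, kw)
    Biases are omitted; a real model would slice conv's bias as well.
    """
    l1 = np.abs(conv_w).sum(axis=(1, 2, 3))         # one L1 norm per filter
    keep = np.sort(np.argsort(l1)[::-1][:n_keep])   # strongest filters, original order
    return conv_w[keep], next_w[:, keep], keep

def conv1x1(w, x):
    """1x1 convolution as an einsum: w (out, in, 1, 1), x (in, H, W) -> (out, H, W)."""
    return np.einsum("oi,ihw->ohw", w[:, :, 0, 0], x)

rng = np.random.default_rng(0)
x = rng.standard_normal((3, 8, 8))
w1 = rng.standard_normal((16, 3, 1, 1))    # first conv: 3 -> 16 channels
w2 = rng.standard_normal((10, 16, 1, 1))   # dependent conv: 16 -> 10 channels

w1_p, w2_p, keep = prune_filters(w1, w2, n_keep=12)
print(w1_p.shape, w2_p.shape)              # (12, 3, 1, 1) (10, 12, 1, 1)

# Reference: the dense model with the removed filters zeroed out.
mask = np.zeros(16)
mask[keep] = 1.0
y_masked = conv1x1(w2, np.maximum(conv1x1(w1 * mask[:, None, None, None], x), 0))
y_pruned = conv1x1(w2_p, np.maximum(conv1x1(w1_p, x), 0))
print(np.allclose(y_masked, y_pruned))     # True
```

The final check shows that physically shrinking both tensors computes the same function as zeroing the removed filters, while the pruned version stores and multiplies fewer weights.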

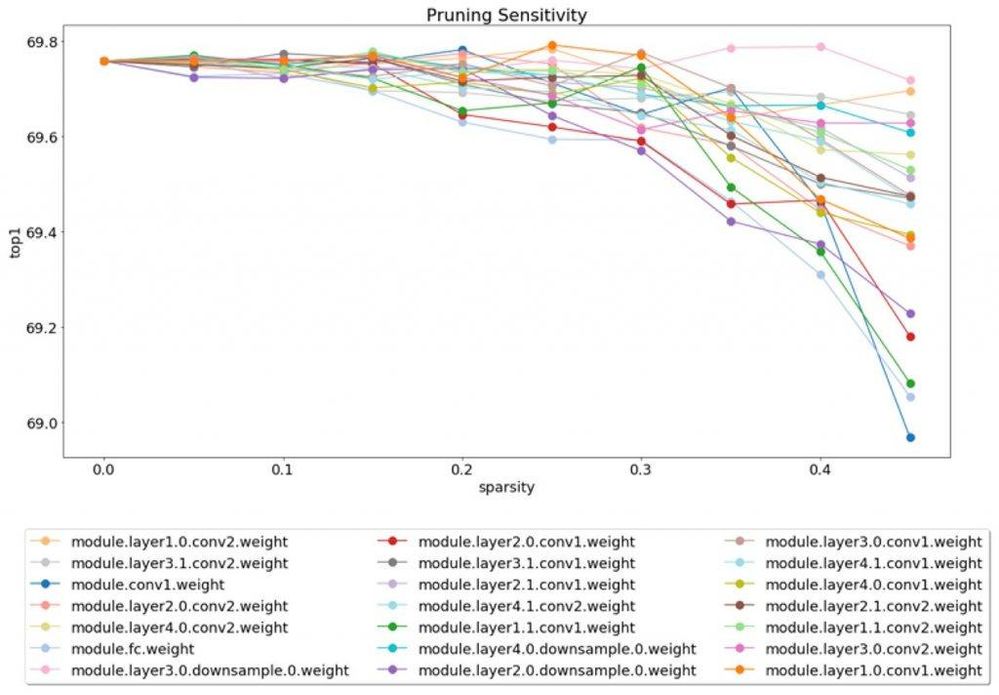

We’ve included Jupyter notebooks that demonstrate how to access statistics from the network model and compression process. For example, if you are planning to remove filters from your DNN, you might want to run a filter-pruning sensitivity analysis and view the results in a Jupyter notebook:

Distiller statistics are exported as Pandas DataFrames, which are amenable to data selection (indexing, slicing, etc.) and visualization.
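As a rough picture of what a pruning sensitivity analysis computes, and why a DataFrame is a convenient container for it: prune one layer at a time at increasing sparsity levels and record how accuracy degrades. The sketch below shows the general idea only, not Distiller's API. For brevity it prunes individual weights rather than whole filters, uses a toy two-layer NumPy network with random weights, and measures top-1 agreement with the unpruned network's predictions as a stand-in for validation accuracy; all names are hypothetical.

```python
import numpy as np
import pandas as pd

def magnitude_prune(w, sparsity):
    """Zero the smallest-magnitude `sparsity` fraction of w (fine-grained)."""
    k = int(round(sparsity * w.size))
    out = w.flatten()
    if k > 0:
        out[np.argpartition(np.abs(out), k - 1)[:k]] = 0.0
    return out.reshape(w.shape)

def forward(weights, x):
    """Toy two-layer MLP with a ReLU; returns the predicted class per row."""
    h = np.maximum(x @ weights["fc1"], 0)
    return np.argmax(h @ weights["fc2"], axis=1)

rng = np.random.default_rng(0)
weights = {"fc1": rng.standard_normal((20, 64)), "fc2": rng.standard_normal((64, 5))}
x = rng.standard_normal((500, 20))
reference = forward(weights, x)            # stand-in for ground-truth labels

rows = []
for name in weights:                       # prune one layer at a time
    for level in [0.0, 0.25, 0.5, 0.75, 0.9]:
        trial = dict(weights)              # other layers stay dense
        trial[name] = magnitude_prune(weights[name], level)
        agreement = float(np.mean(forward(trial, x) == reference))
        rows.append({"layer": name, "sparsity": level, "top1_agreement": agreement})

sensitivity = pd.DataFrame(rows)
# One column per layer, one row per sparsity level.
table = sensitivity.pivot(index="sparsity", columns="layer", values="top1_agreement")
print(table)
```

Once the results are in a DataFrame, selection and plotting are ordinary pandas operations; for example, `table.plot()` draws one sensitivity curve per layer (this requires matplotlib).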

Distiller comes with sample applications that apply several of these methods to compress image-classification DNNs and language models. We’ve also implemented a few compression research papers, which can serve as templates for starting your own work. The samples are based on a couple of PyTorch’s example projects and show how simple it is to add compression to pre-existing training applications.
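To give a feel for what "adding compression to a pre-existing training application" involves, the sketch below takes a toy NumPy training loop (least-squares regression by gradient descent) and adds a few lines that periodically recompute a magnitude-pruning mask and re-apply it after every update, so pruned weights stay at exactly zero. It assumes the cubic gradual-pruning schedule proposed by Zhu and Gupta [3], s_t = s_f + (s_i − s_f)(1 − (t − t_0)/(t_end − t_0))^3; the function names, hyperparameters, and toy problem are illustrative and are not taken from Distiller's sample applications.

```python
import numpy as np

def agp_sparsity(step, s_init, s_final, start, end):
    """Cubic gradual-pruning schedule (Zhu & Gupta [3]), clamped outside [start, end]."""
    if step <= start:
        return s_init
    if step >= end:
        return s_final
    progress = (step - start) / (end - start)
    return s_final + (s_init - s_final) * (1.0 - progress) ** 3

def magnitude_mask(w, sparsity):
    """Boolean mask that keeps the largest-magnitude (1 - sparsity) fraction of w."""
    k = int(round(sparsity * w.size))
    mask = np.ones(w.size, dtype=bool)
    if k > 0:
        mask[np.argpartition(np.abs(w).ravel(), k - 1)[:k]] = False
    return mask.reshape(w.shape)

# The pre-existing "training application": toy least-squares regression.
rng = np.random.default_rng(0)
X = rng.standard_normal((256, 32))
true_w = rng.standard_normal(32) * (rng.random(32) < 0.25)  # mostly-zero target
y = X @ true_w
w = np.zeros(32)
lr, steps = 0.05, 400
mask = np.ones_like(w, dtype=bool)

for step in range(steps):
    grad = X.T @ (X @ w - y) / len(X)
    w -= lr * grad
    # --- compression hooks added to the existing loop ---
    if step % 20 == 0:                     # recompute the mask on a fixed interval
        target = agp_sparsity(step, 0.0, 0.75, start=0, end=300)
        mask = magnitude_mask(w, target)
    w *= mask                              # keep pruned weights at exactly zero

print(f"final sparsity: {np.mean(w == 0):.2f}")
```

Ramping sparsity up gradually, rather than pruning everything at once, gives the remaining weights training steps in which to compensate for those that were removed.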

Only the Beginning

Distiller is a research library for the community at large and is part of Intel AI Lab’s effort to help scientists and engineers train and deploy DL solutions, publish research, and reproduce the latest innovative algorithms from the AI community. We are currently working on adding more algorithms, more features, and more application domains.

If you are actively researching or implementing DNN compression, we hope that you will find Distiller useful and fork it to implement your own research; we also encourage you to send us pull requests of your work. You will be able to share your ideas, implementations, and bug fixes with other like-minded engineers and researchers, a benefit to all! We take research reproducibility and transparency seriously, and we think that Distiller can be the virtual hub where researchers from across the globe share their implementations.

For more information about Distiller, you can refer to the documentation and code.

Geek On.

[1] Forrest N. Iandola, Song Han, Matthew W. Moskewicz, Khalid Ashraf, William J. Dally, and Kurt Keutzer. “SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size.” arXiv:1602.07360 [cs.CV], 2016 (https://arxiv.org/abs/1602.07360).

[2] Andrew G. Howard, Menglong Zhu, Bo Chen, Dmitry Kalenichenko, Weijun Wang, Tobias Weyand, Marco Andreetto, and Hartwig Adam. “MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications.” arXiv:1704.04861, 2017 (https://arxiv.org/abs/1704.04861).

[3] Michael Zhu and Suyog Gupta. “To prune, or not to prune: exploring the efficacy of pruning for model compression.” 2017 NIPS Workshop on Machine Learning on the Phone and other Consumer Devices (https://arxiv.org/pdf/1710.01878.pdf).

[4] Sharan Narang, Gregory Diamos, Shubho Sengupta, and Erich Elsen. “Exploring Sparsity in Recurrent Neural Networks.” 2017 (https://arxiv.org/abs/1704.05119).

[5] Raanan Y. Yehezkel Rohekar, Guy Koren, Shami Nisimov, and Gal Novik. “Unsupervised Deep Structure Learning by Recursive Independence Testing.” 2017 NIPS Workshop on Bayesian Deep Learning (http://bayesiandeeplearning.org/2017/papers/18.pdf).

Intel and the Intel logo are trademarks of Intel Corporation or its subsidiaries in the U.S. and/or other countries.

© Intel Corporation

*Other names and brands may be claimed as the property of others.

Mary is the Community Manager for this site. She likes to bike, and do college and career coaching for high school students in her spare time.
