Authors:

- Siddharth Mehta is an AI Algorithm Engineer within the IOTG Industrial Solution Division with a primary focus on robotics

- Mariano Phielipp is a Principal Engineer who leads a Deep Reinforcement Learning Research Team within Intel Labs

By speeding up inference with the Intel® OpenVINO™ toolkit, you can save time when training a robotics simulation

From the depths of the oceans to the blackest outposts of space, robots go where we can’t. They do the work that’s too dangerous or impossible for people, including maintaining infrastructure in hard-to-reach places. In factories, robots help to increase quality and safety on the assembly line.

Robots, especially industrial robotic arms, are great candidates for deep reinforcement learning (DRL). DRL uses experimentation to train a deep learning solution: successful experiments lead to rewards, so the system is guided toward the desired behavior. One of the attractions of DRL is that it enables the machine learning solution to train itself.

In this blog, we’ll show you how we accelerated the training of a robot arm simulation using the Intel® Distribution of OpenVINO™ toolkit. The secret was to accelerate inference in one of the neural networks that is used intensively during the training process.

What’s our goal?

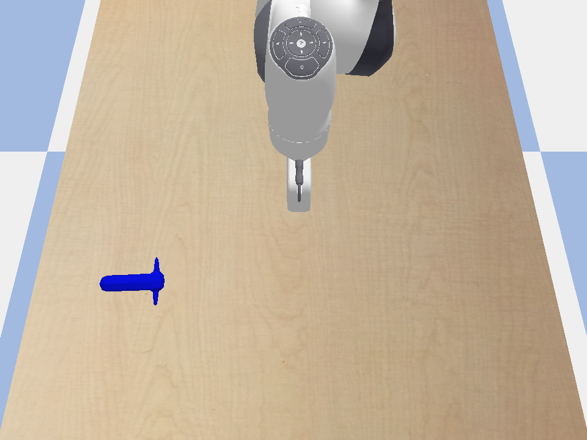

Robots can be trained using a simulation, which is more cost-effective and faster than using a real robot arm. For our research, we created a simulated robot environment with PyBullet.

Our goal is for the robot arm to learn to move towards the object on its workspace and then to hover over it (see Figure 2). This is the kind of task a robot arm might need to perform when carrying out repairs or assembly work.

The approach we describe here could also be used for deep reinforcement learning of other control problems, including video games.

How to accelerate deep reinforcement training with OpenVINO

Two neural networks make up our deep reinforcement learning solution:

- The Policy Network. Our robot is trying to learn a “policy,” which is a neural network it can use to decide how to move. The input is the x-y position of the robot arm, and the output is the robot’s movement action. The Policy Network is the neural network that the reinforcement learning algorithm tries to learn. Soft Actor-Critic (SAC), Proximal Policy Optimization (PPO), and Deep Q-Network (DQN) are some of the algorithms that can be used to learn the Policy Network.

- The Reward Classifier Network. This network generates the reward signal for the deep reinforcement learning training process, and it is built before the deep reinforcement learning begins. To train the Reward Classifier Network, we capture images in which the robot has achieved its task and images in which it has not. We then use them to train a classifier network that can tell visually whether the robot has completed the task. This approach is especially useful in industrial robotics, where the robot aims to manipulate an object using vision-based control.

During the training process for the Policy Network, the Reward Classifier Network is used for inference many thousands of times. It follows that speeding up the inference of the Reward Classifier Network can significantly reduce the training time for the Policy Network. The Intel Distribution of OpenVINO toolkit can be used to speed up neural network inference on Intel® architecture.
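To see why the classifier's share of each training step matters, a rough back-of-the-envelope model helps. The sketch below assumes total training time is simply classifier inference time plus everything else (simulation, policy updates). The function name and the numbers are illustrative placeholders, not measurements from this project.

```python
def training_time_saving(inference_share: float, inference_speedup: float) -> float:
    """Fraction of total training time saved when only inference gets faster.

    inference_share: fraction of baseline training time spent in the
        reward classifier (between 0 and 1).
    inference_speedup: how many times faster inference becomes (>= 1).
    """
    if not 0.0 <= inference_share <= 1.0:
        raise ValueError("inference_share must be between 0 and 1")
    if inference_speedup < 1.0:
        raise ValueError("inference_speedup must be at least 1")
    # Time not spent on inference is unchanged; only inference time shrinks.
    new_total = (1.0 - inference_share) + inference_share / inference_speedup
    return 1.0 - new_total


# Hypothetical example: inference is 30% of training time and becomes 2x faster.
saving = training_time_saving(inference_share=0.30, inference_speedup=2.0)
print(f"Training time saved: {saving:.1%}")
```

The larger the share of each step spent in the classifier, the more of the inference speedup carries over to the whole training run.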

We used OpenAI’s Gym toolkit, which is designed for developing and comparing reinforcement learning algorithms. We used the gym.Env abstract base class to programmatically represent our environment. The step() function of this class defines the relationship between the state, the action, and the reward. The Reward Classifier Network is called in the step() function.

Below is the code for our step() function. The state of our problem is the global x-y position of the robot arm. The action is the dx, dy amount to move the robot arm.

    # Assumes `import time` and `import pybullet as p` at module level.
    def step(self, action):
        p.configureDebugVisualizer(p.COV_ENABLE_SINGLE_STEP_RENDERING)
        dx = action[0]
        dy = action[1]
        curr_pose = p.getLinkState(self.pandaUid, 11)
        curr_position = curr_pose[0]
        new_position = [curr_position[0] + dx, curr_position[1] + dy, self.hover_z]
        # Only move if the target position stays inside the workspace bounds.
        action_taken = False
        if (self.obs_low[0] <= new_position[0] <= self.obs_high[0]) and (self.obs_low[1] <= new_position[1] <= self.obs_high[1]):
            self._move_robot(new_position)
            action_taken = True
        # Time the reward classifier so total inference time can be reported.
        time_1 = time.time()
        classifier_result, classifier_result_probabilities = self._reward_classifier()
        time_2 = time.time()
        self.inference_time += (time_2 - time_1)
        observation = self._get_robot_position()[0:2]
        info = {}
        self.horizon = self.horizon + 1
        done = False
        # A falsy classifier result is treated as "goal reached".
        if not classifier_result:
            done = True
            print("Goal Achieved")
            reward = 100
        else:
            if action_taken:
                reward = -1
            else:
                reward = -5
        # End the episode once the step budget is used up.
        if self.horizon == self.max_episode_length:
            done = True
        return observation, reward, done, info
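The reward rules inside step() can be isolated into a small pure function, which makes them easy to check without starting PyBullet. This is a sketch for illustration, not code from the repository: `compute_reward` and its parameters are hypothetical names, and `goal_reached` stands for the `not classifier_result` test in step().

```python
from typing import Tuple


def compute_reward(goal_reached: bool, action_taken: bool,
                   horizon: int, max_episode_length: int) -> Tuple[int, bool]:
    """Return (reward, done) using the same rules as step().

    Hypothetical helper for illustration; not part of the original repo.
    """
    if goal_reached:
        # Reaching the goal ends the episode with a large reward.
        return 100, True
    # A valid move costs -1; a move rejected for leaving the workspace costs -5.
    reward = -1 if action_taken else -5
    # The episode also ends when the step budget is used up.
    done = horizon == max_episode_length
    return reward, done


print(compute_reward(goal_reached=False, action_taken=True, horizon=3, max_episode_length=50))
print(compute_reward(goal_reached=True, action_taken=True, horizon=3, max_episode_length=50))
```

The small per-step penalty encourages the policy to reach the goal in as few moves as possible, and the larger penalty discourages actions that would leave the workspace.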

Here’s how to accelerate the Reward Classifier Network using the Intel Distribution of OpenVINO toolkit:

- Capture training images. We captured hundreds of images of the robot achieving the goal (hovering right over the object) and hundreds of images where it wasn’t.

- Train the Reward Classifier Network. The Intel Distribution of OpenVINO toolkit works with TensorFlow and PyTorch. We used PyTorch. Using Torchvision's Finetuning, we trained a SqueezeNet model to serve as our visual reward classifier network.

- Convert to ONNX. When using PyTorch, we need to convert the Reward Classifier Network model to ONNX representation for use with the Intel Distribution of OpenVINO toolkit. Previously, the ONNX model then had to be converted to an Intermediate Representation (IR) using the OpenVINO Model Optimizer, but this step is no longer necessary.

- Add the OpenVINO call in the code. The Intel Distribution of OpenVINO toolkit has an easy-to-learn Python API for interfacing with your custom gym.Env class. We modified our _reward_classifier() function to use the Intel Distribution of OpenVINO toolkit for inference, where available. Here’s our function:

      def _reward_classifier(self):
          image = self._get_image()
          if self.vino:
              classifier_result = vino_inference(self.model, image)
          else:
              classifier_result = inference(self.model, image)
          return classifier_result

- Run the deep reinforcement learning training. Regardless of which reinforcement learning algorithm is used, the step() function will be called thousands of times during training, which means our newly optimized _reward_classifier() function will also be called thousands of times. Any speed increase from using the Intel Distribution of OpenVINO toolkit is amplified during the deep reinforcement learning training.
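The conversion and inference steps in this list might look roughly like the following. This is a sketch under assumptions, not the repository's code. It uses the Inference Engine Python API from the OpenVINO 2021.4 release listed in the configuration notes at the end of this post (newer releases expose a different API). `export_to_onnx` and `make_vino_inference` are hypothetical names, and the 224 x 224 input size is assumed. Heavy imports are deferred so the module loads even where PyTorch or OpenVINO is not installed.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Turn raw classifier scores into probabilities (numerically stable)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def export_to_onnx(model, onnx_path: str, image_size: int = 224) -> None:
    """Export a trained PyTorch classifier to ONNX (image_size is an assumption)."""
    import torch  # deferred: only needed when exporting

    model.eval()
    dummy_input = torch.randn(1, 3, image_size, image_size)
    torch.onnx.export(model, dummy_input, onnx_path)


def make_vino_inference(onnx_path: str, device: str = "CPU") -> Callable:
    """Load an ONNX model with OpenVINO 2021.4 and return an inference function."""
    from openvino.inference_engine import IECore  # deferred: OpenVINO 2021.x API

    ie = IECore()
    net = ie.read_network(model=onnx_path)  # ONNX is read directly, no IR step
    input_name = next(iter(net.input_info))
    output_name = next(iter(net.outputs))
    exec_net = ie.load_network(network=net, device_name=device)

    def vino_inference(batch) -> Tuple[int, List[float]]:
        # batch: float32 array shaped (1, 3, H, W), already preprocessed
        result = exec_net.infer(inputs={input_name: batch})
        probabilities = softmax(result[output_name][0].tolist())
        predicted_class = probabilities.index(max(probabilities))
        return predicted_class, probabilities

    return vino_inference
```

Returning a (class, probabilities) pair matches how step() unpacks the classifier result. Loading the network once and reusing the returned function avoids paying the model-loading cost on every call.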

The same approach can be used to optimize reinforcement learning problems that use pretrained autoencoders for state-space reduction.

Results

We used the Stable-Baselines3 library of PyTorch reinforcement learning algorithms to train our robot arm simulation. Using SAC as our algorithm, we can solve our task in under 8,000 steps. Figure 3 shows the training in action.

When training, the library prints out some metrics to let the user know how the training is progressing. The most important metric is ep_rew_mean, the mean episode reward, which quantifies how much reward the robot arm is accumulating. If this number continues to increase, then the robot is learning, and the algorithm is working.
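As a rough illustration of what ep_rew_mean reports, the sketch below keeps a rolling window of recent episode rewards and averages them. It is a simplified stand-in, not Stable-Baselines3 code: the class name is hypothetical, and the window of 100 episodes is an assumption based on the library's default.

```python
from collections import deque


class EpisodeRewardTracker:
    """Rolling mean of total reward per episode (hypothetical helper)."""

    def __init__(self, window: int = 100):
        # Only the most recent `window` episodes contribute to the mean.
        self.episode_rewards = deque(maxlen=window)
        self._current = 0.0

    def record_step(self, reward: float, done: bool) -> None:
        """Add one step's reward; close the episode when done is True."""
        self._current += reward
        if done:
            self.episode_rewards.append(self._current)
            self._current = 0.0

    @property
    def ep_rew_mean(self) -> float:
        if not self.episode_rewards:
            return float("nan")
        return sum(self.episode_rewards) / len(self.episode_rewards)


tracker = EpisodeRewardTracker()
# Episode 1: three valid moves, then the goal (-1 -1 -1 +100 = 97).
for reward, done in [(-1, False), (-1, False), (-1, False), (100, True)]:
    tracker.record_step(reward, done)
# Episode 2: one valid move, then the goal (-1 +100 = 99).
for reward, done in [(-1, False), (100, True)]:
    tracker.record_step(reward, done)
print(tracker.ep_rew_mean)  # 98.0
```

With the reward scheme from step(), a rising mean indicates that the arm is reaching the goal more often and in fewer moves.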

To see the impact of using the Intel Distribution of OpenVINO toolkit, we ran the workload in two configurations: first using native PyTorch for our reward classifier inference, and then using OpenVINO.

The Intel Distribution of OpenVINO toolkit accelerated our deep reinforcement learning workload by approximately 12 percent on average.
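The comparison behind a figure like this can be sketched in a few lines: average the wall-clock times of repeated runs for each configuration, then express the difference as a percentage of the baseline. The function name and the timings below are made-up placeholders for illustration only, not the measurements from our tests.

```python
from statistics import mean
from typing import Sequence


def percent_time_saving(baseline_runs: Sequence[float],
                        optimized_runs: Sequence[float]) -> float:
    """Percentage reduction in mean run time relative to the baseline."""
    baseline = mean(baseline_runs)
    optimized = mean(optimized_runs)
    return 100.0 * (baseline - optimized) / baseline


# Placeholder timings in seconds (NOT real measurements).
pytorch_runs = [400.0, 420.0, 380.0]
openvino_runs = [350.0, 370.0, 360.0]
print(f"{percent_time_saving(pytorch_runs, openvino_runs):.1f}% less training time")
```

Averaging over several runs matters here because the randomness in DRL training makes any single run time noisy.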

Try it yourself

This code repo shows how you can use the Intel Distribution of OpenVINO toolkit to accelerate deep reinforcement learning training. Specifically, this example is for reinforcement learning problems that use pretrained goal classifiers for their reward function.

This repository was validated using Python 3.8 on Ubuntu 20.04 and macOS Catalina 10.15.7 on a 2019 MacBook Pro with a 2.6 GHz 6-core Intel Core i7 and 16 GB of 2667 MHz DDR4 RAM.

- Clone the repository. Use git to clone the demo repository:

git clone https://github.com/intel/openvino-drl-training-demo

- Install the required software. We recommend using a Python virtual environment. Install all the Python packages using:

pip install -r requirements.txt

- Train the robot. Run the training using the following command, and note the total time printed at the end. The -g flag is optional; use it if you want to see the robot during its training.

python sac_training.py -g

- Run using OpenVINO. Run the same training, but now using the Intel Distribution of OpenVINO toolkit as the inference engine for the Reward Classifier Network. The total time printed at the end should be lower than in the previous step.

python sac_training.py -ov -g

- See the trained robot. Run inference.py to see the final robot simulation.

python inference.py -ov -g

Conclusion

In this article we’ve shown how using the OpenVINO inference engine can reduce deep reinforcement learning training time. This improvement comes without the need to invest heavily in computational resources or custom code for accelerators.

Beyond robotics, the Intel Distribution of OpenVINO toolkit can also accelerate deep reinforcement learning training in other applications. In robotics, the time spent waiting for the robot to complete each move (even in a simulation) is often the bottleneck for the AI training system, which limits how much faster inference can shorten the overall run. In non-robotics use cases without that wait, the OpenVINO toolkit can have a much larger impact on your workload.

In some applications, a variational autoencoder (VAE) network is used to reduce the size of the state space. In robotics, the state will most likely be captured as the output of a camera sensor (RGB, point cloud, or depth). The drawback is that images have huge state spaces. A 256 x 256 pixel image with 3 color channels has an enormous number of possible states: a number with more than 473,400 digits. Exploration of the state space is a key aspect of deep reinforcement learning algorithms, so large state spaces require more training time. That’s why using an autoencoder to compress an image is becoming a more popular practice in visual reinforcement learning. The same approach we used in this article can be used to accelerate the VAE inference and so speed up deep reinforcement learning training.
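The size of that number can be checked with a short calculation. The sketch assumes 8 bits (256 levels) per color channel, which the text does not state explicitly. Under that assumption the number of distinct images is 256 raised to the power 256 x 256 x 3, and its digit count follows from a base-10 logarithm.

```python
import math

WIDTH, HEIGHT, CHANNELS = 256, 256, 3
LEVELS_PER_CHANNEL = 256  # assumption: 8-bit color channels

# Each of the WIDTH * HEIGHT * CHANNELS values can take any of 256 levels.
num_values = WIDTH * HEIGHT * CHANNELS

# Digits of LEVELS ** num_values = floor(num_values * log10(LEVELS)) + 1.
num_digits = math.floor(num_values * math.log10(LEVELS_PER_CHANNEL)) + 1

print(f"Values per image: {num_values:,}")
print(f"Decimal digits in the state count: {num_digits:,}")
```

Using the logarithm avoids building the full integer, which would be far too large to print conveniently.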

Download the Intel Distribution of OpenVINO toolkit here.

__________________

Because of the randomness in the DRL algorithm, there is variance in the time taken for the simulated robot motion. We ran the workload three times without OpenVINO and three times with OpenVINO. We averaged the results for each configuration and used those averages to calculate the average time saving, which was 11.96 percent.

Performance varies by use, configuration and other factors. Learn more at www.Intel.com/PerformanceIndex.

Testing by Intel September 2021.

Configurations: Mac OS X laptop based on Intel® Core™ i7-9750H Processor (16GB 2667 MHz DDR4 RAM and 500GB storage). gym==0.18.0; openvino==2021.4.0; pybullet==3.1.9; stable-baselines3==1.0; torch==1.8.1

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See configuration disclosure for details. No product or component can be absolutely secure.

Intel technologies may require enabled hardware, software or service activation.
