At Innovation 2022, there was one session in the AI track that really highlighted the maturation of end-to-end workflows: “Achieving efficient, productive and good quality End-to-End AI Pipelines” featuring Vrushabh Sanghavi from Intel, Mouli Narayanan from Zeblok Computational, and Anupam Datta from TruEra. A summary of the session follows, but you can view the full session recording here.

The AI Pipeline “Triathlon”

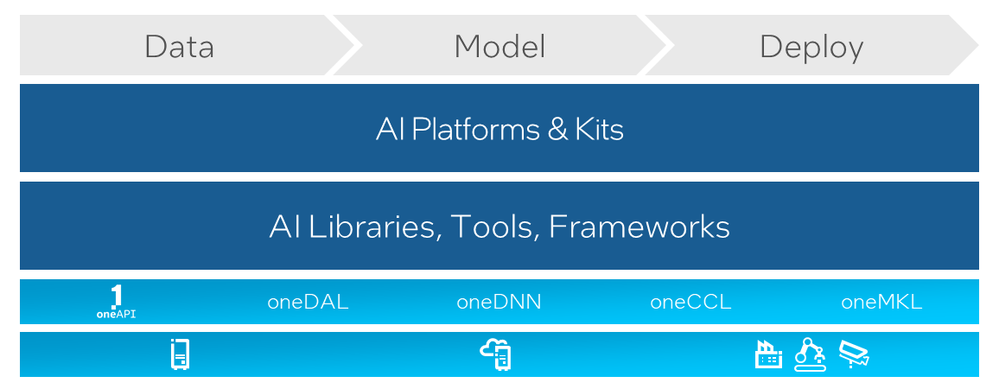

Vrushabh Sanghavi from Intel started off by likening the end-to-end AI pipeline to a triathlon – you need to be fast in all three phases. In the case of an AI pipeline, those phases are:

- Data: data ingestion, cleanup, transformation, manipulation

- Model: training the machine learning or deep learning model, evaluating results, iterating, then using it for inference

- Deploy: model packaging, serving, and endpoint deployment
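The triathlon analogy follows the same arithmetic as Amdahl's law. When the phases run one after another, speeding up only one of them limits the end-to-end gain. Here is a minimal Python sketch with made-up stage timings. The numbers and function names are hypothetical and only illustrate the point.

```python
# Hypothetical stage timings (seconds) for an end-to-end pipeline; not measured data.
def end_to_end_time(stage_times, speedups=None):
    """Total wall-clock time when stages run sequentially, each optionally sped up."""
    speedups = speedups or {}
    return sum(t / speedups.get(stage, 1.0) for stage, t in stage_times.items())

def overall_speedup(stage_times, speedups):
    """Ratio of the baseline end-to-end time to the accelerated end-to-end time."""
    return end_to_end_time(stage_times) / end_to_end_time(stage_times, speedups)

stages = {"data": 40.0, "model": 50.0, "deploy": 10.0}

# Accelerating only model training 10x leaves the data and deploy phases untouched.
print(round(overall_speedup(stages, {"model": 10.0}), 2))  # 1.82
# Accelerating every phase 10x gives the full 10x end to end.
print(round(overall_speedup(stages, {s: 10.0 for s in stages}), 2))  # 10.0
```

With these assumed timings, a training step that runs 10x faster produces a pipeline that is less than 2x faster. Optimizing every phase is what delivers the full gain.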

Intel’s AI software suite spans this end-to-end pipeline and optimizes it from top to bottom.

Libraries such as the oneAPI Deep Neural Network Library (oneDNN) and the oneAPI Data Analytics Library (oneDAL) unlock your hardware’s potential by applying software optimizations to popular open source tools and frameworks. Other techniques can further improve model or workflow efficiency: model compression, for which you can use Intel® Neural Compressor; hyperparameter tuning and instance optimization, which SigOpt automates; and transfer learning.
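Model compression covers several techniques. One of the most common is post-training quantization, which maps 32-bit floating-point weights to 8-bit integers. Each int8 code takes a quarter of the storage of a float32 value. The sketch below shows the idea in plain Python, assuming a single symmetric scale per tensor. It is a generic illustration and does not reproduce Intel® Neural Compressor's API or its calibration algorithm. The weights are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor post-training quantization of floats to int8 codes."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round each value to the nearest quantization step and clamp to the signed 8-bit range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map int8 codes back to approximate floating-point values."""
    return [v * scale for v in q]

# Hypothetical layer weights, used only for illustration.
weights = [0.42, -1.27, 0.05, 0.9, -0.33]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# Rounding keeps every reconstruction error within half a quantization step.
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q)
print(f"scale={scale:.4f}, max error={max_err:.4f}")
```

Production tools add calibration data, per-channel scales and accuracy-aware tuning on top of this basic mapping.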

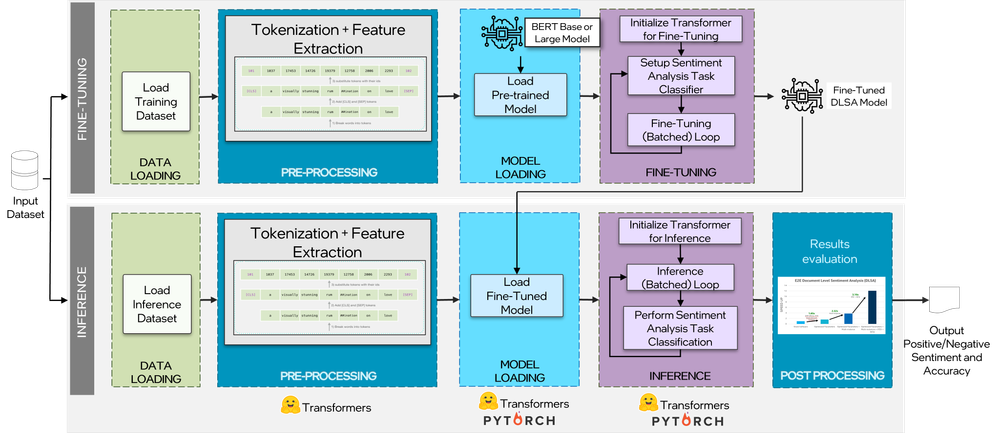

Vrushabh showed the effect of these optimizations on two use cases: machine learning with XGBoost, and deep learning for document-level sentiment analysis (DLSA), a natural language processing task, using the Hugging Face* Transformers API.

The use of Hugging Face Transformers highlights the need for a robust ecosystem. No single vendor can provide everything needed for production usage across industry segments that have different requirements. Different vendors offer unique specialties that need to plug into existing pipelines and containers. The rest of this session featured two interesting solutions in the AI ecosystem.

AI Optimization-as-a-Service

Zeblok Computational helps AI pipeline tools and assets work together. Mouli Narayanan, Zeblok founder and CEO, introduced Ai-Optimization-as-a-Service*, a new solution launching in early 2023. Built on the company’s Ai-MicroCloud* technology, this enterprise offering executes AI pipelines across public and private cloud platforms, regardless of the underlying hardware. This lets enterprises adapt to the needs of each project. It also makes the end-to-end suite of AI software easier to deploy in containers on whatever compute or data platform is required. He demonstrated the approach with the DLSA example that Vrushabh presented, dynamically composing containers and deploying them to both AWS* and Intel® Developer Cloud. Given the number and variety of tools across the end-to-end pipeline, this can save teams a lot of time and frustration.

AI Quality

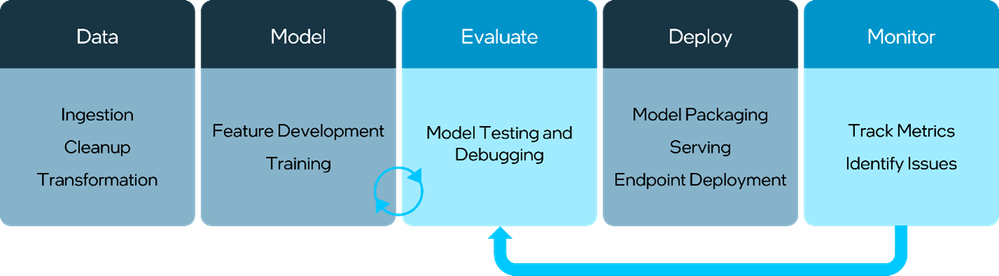

TruEra is a company built by AI industry veterans. Its mission is to ensure that AI models in production continue to perform well, deliver business value, and remain fair and non-discriminatory. This requires adding a couple of important legs to the “triathlon”:

- Model Evaluation: test models’ quality right after they’re built, before deployment

- Production Monitoring: check that models continue to perform well after deployment

TruEra offers products for each task, and the products work hand in hand. For instance, TruEra Diagnostics* is used for testing and debugging during development, and it can also help identify which types of issues to track once the model is in production. TruEra Monitoring* can help with root cause analysis by identifying the features that cause model drift or quality issues, so you can quickly retrain and redeploy.
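This summary does not describe TruEra's own drift metrics. To illustrate the general idea of tracing drift to individual features, the sketch below scores each feature with the Population Stability Index (PSI), a widely used drift statistic. It compares training data to production data and ranks the features by how far they have shifted. A commonly cited rule of thumb treats a PSI above 0.25 as a significant shift. The feature names, data and binning choices here are all hypothetical.

```python
import math

def psi(expected, actual, bins=5, eps=1e-6):
    """Population Stability Index between a reference sample and a production sample.

    Bins are equal-width over the reference (training) range; empty bins are
    floored at `eps` so the logarithm stays finite.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the training range are clamped into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

def rank_drift(train, prod):
    """Score every feature with PSI and return (name, score) pairs, largest drift first."""
    scores = {name: psi(train[name], prod[name]) for name in train}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

# Hypothetical feature samples: "age" is stable, "hours_per_week" has shifted upward.
train = {"age": list(range(20, 70)), "hours_per_week": list(range(20, 70))}
prod = {"age": list(range(20, 70)), "hours_per_week": list(range(45, 95))}

for name, score in rank_drift(train, prod):
    print(f"{name}: PSI={score:.3f}")
```

A per-feature ranking like this points to where to look first. Deciding whether the shift actually hurts model quality still requires evaluating the model itself.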

These steps are crucial for production deployment, especially in industries such as healthcare, banking, insurance, and manufacturing. Anupam Datta, co-founder, President, and Chief Scientist at TruEra, walked through an example that predicts income from a census dataset. In this case, the model failed the tool’s “fairness test”, and the tool showed which features contributed most to the failure.
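The session summary does not explain how the tool computes its fairness test. One common check for a binary classifier like this income model is the disparate impact ratio: the positive-prediction rate of one group divided by that of a reference group. This ratio is often compared against the "four-fifths" (0.8) threshold. The minimal sketch below uses hypothetical predictions and group labels. It shows a generic metric, not necessarily the test TruEra applies.

```python
def selection_rate(preds, groups, group):
    """Fraction of members of `group` that received a positive prediction."""
    members = [p for p, g in zip(preds, groups) if g == group]
    return sum(members) / len(members)

def disparate_impact(preds, groups, protected, reference):
    """Ratio of the protected group's selection rate to the reference group's."""
    return selection_rate(preds, groups, protected) / selection_rate(preds, groups, reference)

def passes_four_fifths(preds, groups, protected, reference, threshold=0.8):
    """True if the disparate impact ratio meets the (assumed) 0.8 threshold."""
    return disparate_impact(preds, groups, protected, reference) >= threshold

# Hypothetical predictions of ">50K income" (1) vs "<=50K" (0) for two groups.
preds = [1, 1, 0, 1, 0, 1, 0, 0, 0, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

ratio = disparate_impact(preds, groups, protected="B", reference="A")
print(f"disparate impact = {ratio:.2f}, passes = {passes_four_fifths(preds, groups, 'B', 'A')}")
```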

In the census dataset, it’s easy to overlook an innocuous-sounding feature like PERNUM (the number of the person interviewed within the household), but once you see its effect, you can understand how it drives biased results. Anupam gave other real-world examples of potential bias in models and showed how the tool can help identify them. But he stressed the importance of human decision-making: is the model truly biased, and if so, how do you fix it? Tools like this are key to broader deployment of AI because they surface potential issues and their impacts. Human judgment is still required, but the tool uses computational resources to perform the complex analysis so that people can focus where they are needed.
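The attribution methods behind the tool are not described here. For intuition only, the sketch below uses a deliberately simple stand-in. With a linear scoring model, the gap in average score between two groups decomposes exactly into one term per feature, so a proxy feature such as PERNUM shows up directly. The weights, rows and group labels are hypothetical. Nonlinear models need more general attribution methods, such as those based on Shapley values.

```python
def mean(xs):
    return sum(xs) / len(xs)

def gap_contributions(weights, rows, groups, group_a, group_b):
    """Split the mean-score gap between two groups into per-feature terms.

    For a linear model score = bias + sum(w_j * x_j), the gap in mean score is
    exactly sum_j w_j * (mean_a(x_j) - mean_b(x_j)); the bias cancels out.
    """
    contributions = {}
    for name, w in weights.items():
        a = [r[name] for r, g in zip(rows, groups) if g == group_a]
        b = [r[name] for r, g in zip(rows, groups) if g == group_b]
        contributions[name] = w * (mean(a) - mean(b))
    # Largest absolute contribution first.
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)

# Hypothetical linear model weights and census-style rows for two groups.
weights = {"education_years": 0.3, "hours_per_week": 0.05, "PERNUM": -0.8}
rows = [
    {"education_years": 12, "hours_per_week": 40, "PERNUM": 1},
    {"education_years": 14, "hours_per_week": 45, "PERNUM": 1},
    {"education_years": 13, "hours_per_week": 40, "PERNUM": 3},
    {"education_years": 13, "hours_per_week": 45, "PERNUM": 2},
]
groups = ["A", "A", "B", "B"]

for name, c in gap_contributions(weights, rows, groups, "A", "B"):
    print(f"{name}: {c:+.3f}")
```

In this toy data the two groups have identical education and hours, so the entire score gap traces to PERNUM. Whether that reflects unfair bias is still a human decision.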

Ready for Production

This session nicely represented a broader theme across the AI sessions at Innovation 2022: a production ecosystem for AI is starting to emerge. Intel software powered by oneAPI accelerates the core tools and frameworks, providing the flexibility to run on the best hardware architecture for the job. Zeblok Ai-Optimization-as-a-Service makes it easy to deploy containerized workflows on the platforms a project requires. And TruEra Diagnostics and Monitoring round out the workflow with the tools needed to build trust in AI and maintain its quality throughout its production lifecycle.

To learn more about how to optimize your AI pipelines, see Intel’s AI and machine learning development tools and resources.

Technical marketing manager for Intel AI/ML products and solutions. Before joining Intel, I spent 7.5 years at MathWorks in technical marketing for the HDL product line, and 20 years at Cadence Design Systems in various technical and marketing roles for synthesis, simulation, and other verification technologies.