The Intel Innovation 2022 conference “Innovation for the Future” track featured a significant amount of AI content. One of the most interesting presentations was by Jason Martin, Principal Engineer leading the Secure Intelligence Team at Intel Labs.

Responsible AI: The Future of Security and Privacy in AI/ML

When AI development was mostly in the realm of research, practices such as sharing open datasets, publishing models publicly, and using whatever compute resources were available all helped advance the state of the art. AI is now increasingly deployed in production environments in the commercial, healthcare, government, and defense sectors, and Intel provides developers with AI software tools, framework optimizations, and industry-specific reference kits to enable this move from pilot to production. However, security practices that are commonplace in software development have yet to be fully applied to AI, and in many cases AI introduces new opportunities for vulnerabilities.

Thankfully, Jason and his team research how AI systems can be attacked, so they can develop techniques to test for and mitigate these vulnerabilities in practice. Throughout his presentation, Jason used the term “machine learning supply chain”, which is a helpful way to think about all the areas to monitor. The assets in this system that need to be protected are:

- Training data: samples and labels

- Model architecture

- Model parameters

- Inference samples

- Output of the model: the prediction or decision

Any of these can be targeted, for a variety of reasons and through a variety of techniques. Jason’s presentation outlined a sampling of the types of attacks his team has researched, illustrated with examples. Here is a brief summary:

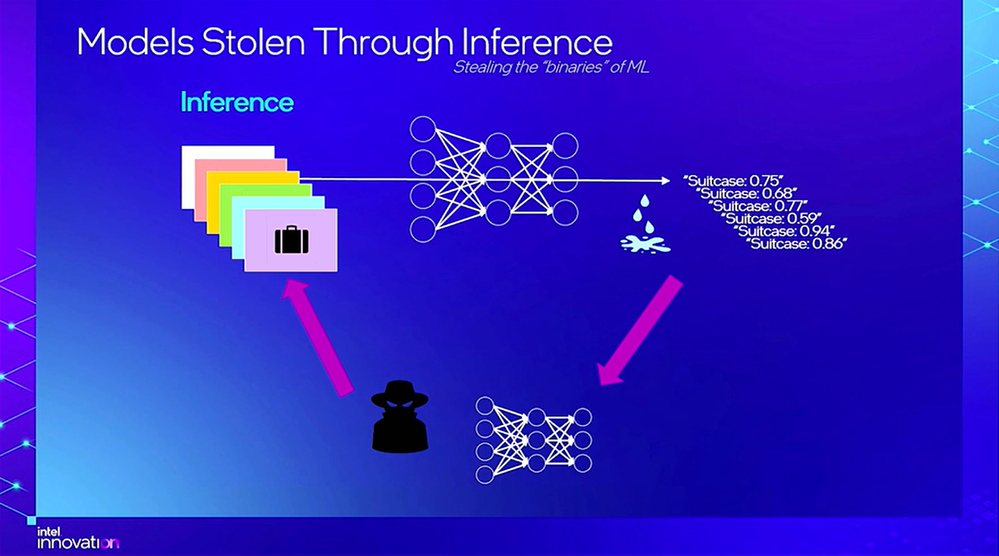

- Model theft: Models are expensive to develop and train, and you don’t need a bitwise copy of a model to extract its value. An attacker can instead send API queries that probe the model’s decision boundaries and use the responses to train a copy. A benign example of this approach is knowledge distillation, a model compression technique in which a smaller model is trained to reproduce a larger model’s outputs. When the approach is used to steal a model, it’s called model extraction.

- Data theft: Data has tremendous value, so it is a target for outright theft. Beyond that, AI models built from the data present new ways to access specific information just from the output of the model:

o Model inversion, where an adversary reconstructs training data that leaked into the model because the model memorized information about the data it was trained on.

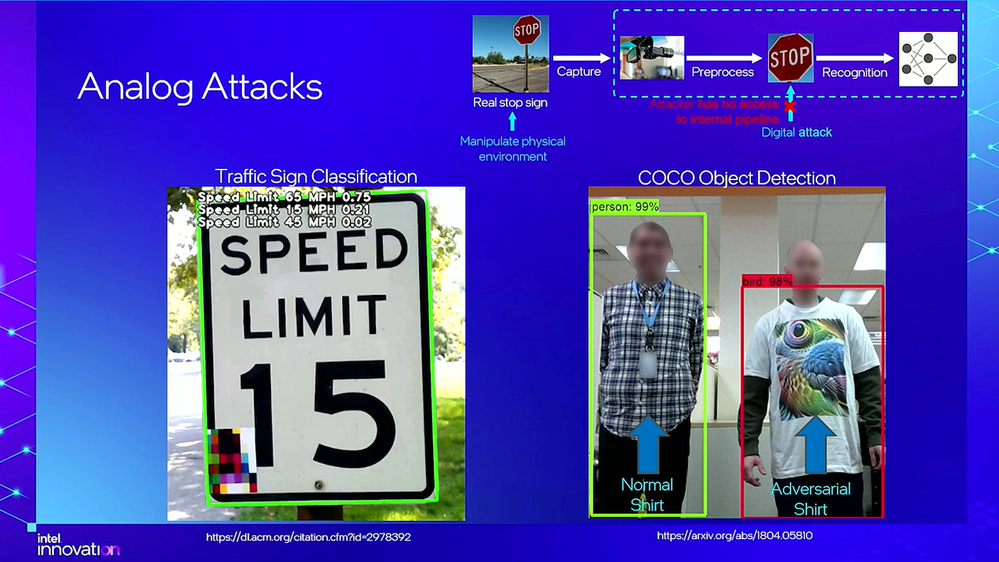

o Membership inference attack, where an adversary tries to determine whether a data point was part of the dataset the model was trained on.

- Tampering: changing the behavior of the model. This can happen during training, during inference, or even afterward, by manipulating the model’s output as it is used by the larger system. Jason illustrated types of tampering with examples ranging from a t-shirt that can cause you to be classified as a bird to more nefarious attacks such as malware and signal jamming.
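To make the idea of “probing the boundary” in the model theft bullet concrete, here is a toy sketch. It assumes a hypothetical one-dimensional classifier that exposes only a 0/1 label; `extract_threshold` and `victim` are illustrative names, not code from Jason’s presentation. Real models have high-dimensional boundaries, and extraction in practice usually trains a surrogate model on many query results, but the principle is the same: every answered query leaks a little information about the model.

```python
from typing import Callable, Tuple


def extract_threshold(
    predict_label: Callable[[float], int],
    low: float,
    high: float,
    tol: float = 1e-6,
) -> Tuple[float, int]:
    """Estimate the decision threshold of a 1-D black-box classifier.

    Assumes the label changes exactly once between `low` and `high`.
    Returns the estimated threshold and the number of queries spent.
    """
    label_low = predict_label(low)
    if predict_label(high) == label_low:
        raise ValueError("labels at low and high must differ")
    queries = 2
    # Binary search: each query halves the interval containing the boundary.
    while high - low > tol:
        mid = (low + high) / 2
        queries += 1
        if predict_label(mid) == label_low:
            low = mid   # boundary lies above mid
        else:
            high = mid  # boundary lies at or below mid
    return (low + high) / 2, queries


def victim(x: float) -> int:
    """Hypothetical deployed model; the attacker cannot see the 3.7."""
    return 1 if x >= 3.7 else 0


estimate, n_queries = extract_threshold(victim, 0.0, 10.0)
print(round(estimate, 4), n_queries)  # 3.7 26
```

Recovering the hidden parameter to six decimal places takes only 26 label-only queries here, which is why limiting query volume and output detail matters even when confidence scores are hidden.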
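The t-shirt in the tampering bullet is a physical adversarial example. The digital version of the same idea can be sketched with the Fast Gradient Sign Method (FGSM), a well-known evasion technique swapped in here for illustration; it is not necessarily one of the attacks Jason’s team studied. The sketch assumes a tiny hypothetical logistic-regression model with hand-picked weights, and shows how a small, bounded change to each input feature flips the prediction.

```python
import math
from typing import List, Sequence


def predict_proba(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Probability of class 1 under a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w: Sequence[float], b: float, x: Sequence[float], y: int,
         epsilon: float) -> List[float]:
    """FGSM against logistic regression.

    With binary cross-entropy loss, the gradient of the loss with respect
    to the input is (p - y) * w. Each feature moves by epsilon in the sign
    of that gradient, increasing the loss while changing no feature by
    more than epsilon.
    """
    p = predict_proba(w, b, x)
    return [xi + epsilon * sign((p - y) * wi) for xi, wi in zip(x, w)]


w, b = [2.0, -1.0], 0.0   # hypothetical trained weights
x, y = [0.5, 0.2], 1      # clean input with true label 1
x_adv = fgsm(w, b, x, y, epsilon=0.4)
print(predict_proba(w, b, x) > 0.5)      # True: classified as 1
print(predict_proba(w, b, x_adv) > 0.5)  # False: prediction flipped
```

Against deep networks the gradient comes from backpropagation rather than a closed form, but the attack has the same structure.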

At one point, Jason read the audience and paused to assure them, “don’t worry, we’re going to get to defenses.”

Given the large surface area of potential vulnerabilities and the constant evolution of attack techniques, there is no single solution. But you can get started securing your AI today.

- Protect your data. Use encryption and limit access to protect data at rest. For data in use, Intel® Software Guard Extensions (Intel® SGX), initially developed by Intel Labs, protects confidentiality and integrity. It isolates applications and enables remote attestation of the workload, so only approved models and training procedures are executed. You can build your own security solution using SGX technology, or access it through tools, frameworks, or an OS that has it built in.

- Some data must remain isolated due to confidentiality requirements such as HIPAA or GDPR, or because it is too proprietary or too large to move. Such data can still contribute to high-quality models through federated learning. OpenFL moves the computation to where the data resides and aggregates the resulting partial models into a new global model. The Federated Tumor Segmentation (FeTS) project is an example of this: aggregating models from multiple institutions improved model accuracy by 33%.

- Prevent model theft with the Intel® Distribution of OpenVINO™ Toolkit Security Add-On. This tool helps you control access to deployed OpenVINO models through secure packaging and secure model execution.

- Prevent model extraction attacks by reducing the amount of model output information, such as confidence scores. Other techniques for preventing model extraction involve rate-limiting queries and detecting out-of-distribution queries. Work is underway to enable model watermarking.
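The aggregation step of federated learning described in the OpenFL bullet can be sketched with federated averaging (FedAvg), one common aggregation rule. This is a minimal illustration under that assumption, not OpenFL’s API and not necessarily the rule FeTS used; `federated_average` is a hypothetical name, and each site’s model is reduced to a flat list of parameters.

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """Combine locally trained parameter vectors into one global vector.

    Each site's parameters are weighted by its number of local training
    samples, as in FedAvg. Only parameters reach the aggregator; the raw
    data never leaves the site that holds it.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    global_w = [0.0] * dim
    for w, n in zip(client_weights, client_sizes):
        if len(w) != dim:
            raise ValueError("all clients must share one model architecture")
        for i, wi in enumerate(w):
            global_w[i] += wi * n / total
    return global_w


# Hypothetical round: two sites with 1 and 3 local samples.
print(federated_average([[1.0, 2.0], [3.0, 6.0]], [1, 3]))  # [2.5, 5.0]
```

In a full system the global vector is sent back to every site for another round of local training, and the loop repeats until the model converges.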
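Two of the extraction defenses listed above, reducing output information and rate-limiting queries, can be combined in a thin wrapper around a model. The sketch below is an assumption-laden illustration: `HardenedEndpoint` and its parameters are hypothetical, the token bucket is just one common rate-limiting algorithm, and this is not the OpenVINO Security Add-On or any other Intel API. Out-of-distribution detection and watermarking are not shown.

```python
import time
from typing import Callable, Dict, Optional, Sequence, Tuple


class HardenedEndpoint:
    """Hypothetical wrapper that limits what each API caller can learn.

    * Returns only the top-1 label, never the confidence scores.
    * Applies a per-client token-bucket rate limit: a client may burst up
      to `burst` queries, then regains `rate_per_sec` queries per second.
    """

    def __init__(
        self,
        score_fn: Callable[[object], Sequence[float]],
        labels: Sequence[str],
        rate_per_sec: float,
        burst: int,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._score_fn = score_fn
        self._labels = labels
        self._rate = rate_per_sec
        self._burst = float(burst)
        self._clock = clock
        # client_id -> (tokens remaining, time of last update)
        self._buckets: Dict[str, Tuple[float, float]] = {}

    def _allow(self, client_id: str) -> bool:
        now = self._clock()
        tokens, last = self._buckets.get(client_id, (self._burst, now))
        # Refill in proportion to elapsed time, capped at the burst size.
        tokens = min(self._burst, tokens + (now - last) * self._rate)
        allowed = tokens >= 1.0
        if allowed:
            tokens -= 1.0
        self._buckets[client_id] = (tokens, now)
        return allowed

    def predict(self, client_id: str, x: object) -> Optional[str]:
        if not self._allow(client_id):
            return None  # over budget; a web API might answer HTTP 429
        scores = self._score_fn(x)
        best = max(range(len(scores)), key=lambda i: scores[i])
        return self._labels[best]  # confidence scores are deliberately dropped


endpoint = HardenedEndpoint(
    score_fn=lambda x: [0.1, 0.7, 0.2],  # stand-in for a real model
    labels=["cat", "dog", "bird"],
    rate_per_sec=1.0,
    burst=2,
)
print([endpoint.predict("client-a", None) for _ in range(3)])  # ['dog', 'dog', None]
```

Passing a custom `clock` makes the limiter easy to test deterministically; in production the budget would typically be keyed on an authenticated client identity rather than a caller-supplied string.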

Adversarial attacks remain challenging. Intel is part of the Coalition for Content Provenance and Authenticity (C2PA), which works to document data provenance so that the origins of data can be traced. Intel is also part of the DARPA Guaranteeing AI Robustness Against Deception (GARD) initiative, which aims to reduce model poisoning attacks by training models to learn more robust features of the data that map to human visual systems. Jason also outlined other techniques his team is experimenting with.

Perhaps the key takeaway from this session is to adopt a security mindset. Whereas most AI development has taken place in very open research settings, developing production AI systems with proprietary or confidential data requires security built in from the beginning of the lifecycle. Achieving complete security while innovating is quite difficult, but today’s tools can prevent many attacks. And if you design your systems to fail safely, you can also minimize the negative consequences when an attack does succeed. As the AI industry continues to mature, more tools and best practices will become available.
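One way to read “fail safely” is that a system should fall back to a conservative action whenever the model errors out, returns malformed output, or is not confident. The sketch below assumes that interpretation; `fail_safe_predict`, the `SAFE_DEFAULT` action, and the 0.9 threshold are all hypothetical choices for illustration. Note that a confidence threshold alone does not stop an adversarial example crafted to be classified with high confidence, so it complements the defenses above rather than replacing them.

```python
import math
from typing import Callable, Sequence

SAFE_DEFAULT = "refer_to_human"  # hypothetical conservative action


def fail_safe_predict(
    model: Callable[[object], Sequence[float]],
    x: object,
    labels: Sequence[str],
    min_confidence: float = 0.9,  # assumed threshold; tune per application
) -> str:
    """Act on the model's top label only when it is trustworthy enough."""
    try:
        scores = list(model(x))
    except Exception:
        return SAFE_DEFAULT  # any model failure falls back to the safe action
    if not scores or len(scores) != len(labels):
        return SAFE_DEFAULT  # malformed output
    if any(not math.isfinite(s) for s in scores):
        return SAFE_DEFAULT  # NaN or infinite scores suggest a fault or tampering
    best = max(range(len(scores)), key=lambda i: scores[i])
    if scores[best] < min_confidence:
        return SAFE_DEFAULT  # low confidence: do not act automatically
    return labels[best]


labels = ["approve", "deny"]
print(fail_safe_predict(lambda x: [0.97, 0.03], None, labels))  # approve
print(fail_safe_predict(lambda x: [0.55, 0.45], None, labels))  # refer_to_human
```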

We also encourage you to check out all of the cutting-edge security and privacy research happening at Intel Labs and to learn more about Intel’s AI developer tools and resources.

Technical marketing manager for Intel AI/ML product and solutions. Previous to Intel, I spent 7.5 years at MathWorks in technical marketing for the HDL product line, and 20 years at Cadence Design Systems in various technical and marketing roles for synthesis, simulation, and other verification technologies.
