Key Takeaways

- Intel’s software engineers optimize and enable new hardware features in leading open source AI frameworks.

- Intel-optimized AI software releases accelerate applications running on Intel Xeon Scalable processors.

- Extend your ROI with the AI enabled Intel hardware you already own as software releases further optimize performance.

Intel® Xeon® Scalable platforms provide the foundation for an evolutionary leap forward in data-centric innovation. Intel® Deep Learning Boost is a technology with built-in AI acceleration that enhances artificial intelligence inference and training performance. Specifically, the 3rd Generation Intel Xeon Scalable processors (Cooper Lake), announced in June 2020, brought the industry’s first x86 support for Brain Floating Point 16-bit (bfloat16) alongside Vector Neural Network Instructions (VNNI). These hardware enhancements, coupled with software optimizations, deliver up to 1.93x more performance in AI training and up to 1.9x more performance in AI inference compared to 2nd Generation Intel Xeon Scalable processors (Cascade Lake).
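To make the bfloat16 format more concrete, here is a minimal sketch in pure Python. It assumes the standard bfloat16 layout (1 sign bit, 8 exponent bits, 7 mantissa bits, which is the upper half of an IEEE 754 float32). The function names are hypothetical, NaN inputs are not handled, and the code only mimics the number format; it does not use any Intel Deep Learning Boost instruction.

```python
import struct


def float32_to_bfloat16_bits(x: float) -> int:
    """Return the 16-bit bfloat16 pattern for x (round to nearest even).

    Illustrative only: NaN is not treated specially here.
    """
    # Reinterpret the float32 encoding of x as an unsigned 32-bit integer.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Round to nearest even on the 16 low bits that will be dropped.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def bfloat16_bits_to_float32(b: int) -> float:
    """Expand a bfloat16 bit pattern back to a float by zero-filling the low bits."""
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]


if __name__ == "__main__":
    for value in (1.0, 3.14159, -0.1):
        b = float32_to_bfloat16_bits(value)
        print(f"{value!r} -> 0x{b:04X} -> {bfloat16_bits_to_float32(b)!r}")
```

Because bfloat16 keeps the full 8-bit exponent of float32, it covers the same dynamic range and gives up only mantissa precision, which is why it is attractive for deep learning training.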

The focus of this blog is to show that continued software optimizations can boost performance not only for the latest platforms, but also for the current install base from prior generations. For each magnitude of performance increase achieved by new hardware, software optimizations have the potential to multiply those results. New hardware platforms, however, come to market only about every 12-18 months. Between those platform introductions, we continue to innovate on the software stack to unlock even more performance for the current install base. In addition, open source software stacks such as TensorFlow and PyTorch continuously drive innovations and changes into their implementations. Our software work is aligned with these rapid releases so that we can deliver our Intel-optimized versions quickly. We are also at the forefront of enabling new models and use cases published by the AI research community in industry and academia. This means customers can continue to extract value from their current platform investments.

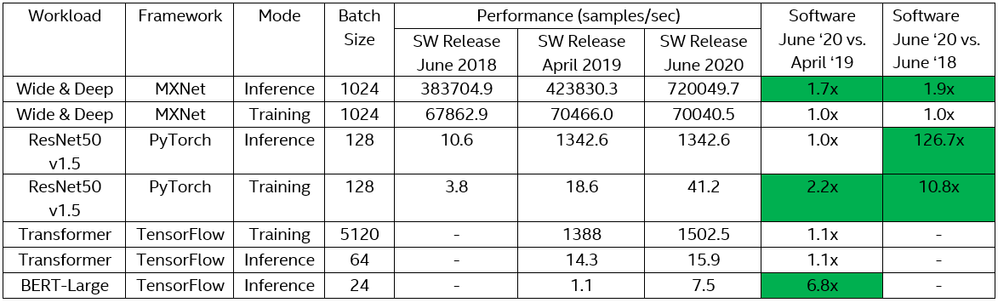

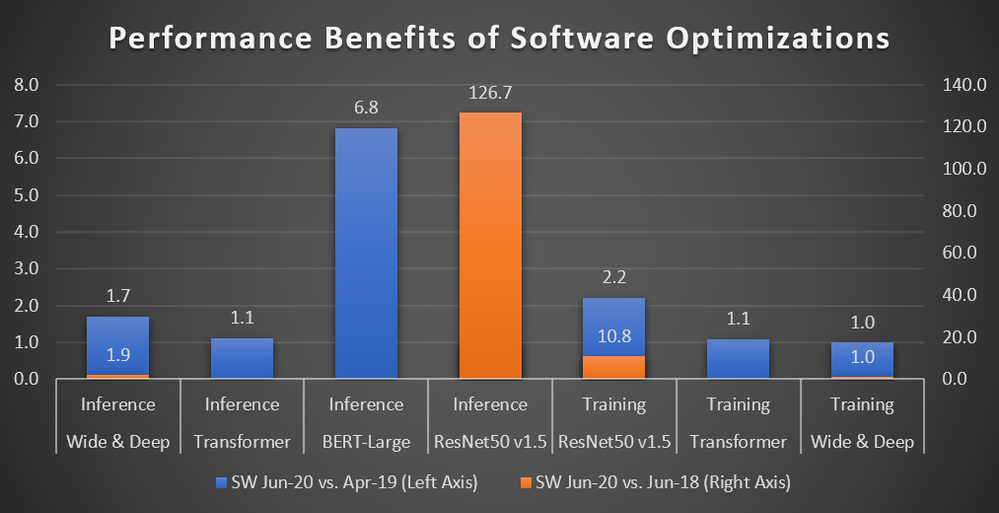

To highlight this point, we measured the performance on popular deep learning workloads. The table below includes image classification (ResNet50 v1.5), natural language processing (Transformer/BERT), and recommendation systems (Wide & Deep). It is important to note that the workloads were all run on 2nd generation Intel Xeon Scalable processors. The only variables are the software releases. Therefore, the performance gains in the resulting table are purely attributed to software optimizations.

Results

Deep Learning Workload Performance

These astounding performance gains are due to software optimizations used in Intel-optimized deep learning frameworks (e.g., TensorFlow, PyTorch, and MXNet) as well as the Intel® Distribution of OpenVINO™ toolkit for deep learning inference. Some of our approaches to software optimization are listed below:

- The oneAPI Deep Neural Network Library (oneDNN) is used in all popular deep learning frameworks, and its primitives accelerate the execution of typical DNN operators such as Convolution, GEMM, and BatchNorm.

- Graph fusion is used to reduce memory footprint and save memory bandwidth. In addition to graph fusion, constant folding (Convolution and BatchNorm) and common subexpression elimination are applied as pre-computation steps before graph execution.

- Runtime optimization is achieved through better memory and thread management. Memory management improves cache utilization by reusing memory as much as possible. Thread management allocates thread resources more effectively for workload execution.
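The Convolution and BatchNorm folding mentioned above can be sketched as follows. This is a simplified illustration, not the frameworks' actual implementation: it treats the convolution as a per-output-channel dot product (as in a 1x1 convolution on a single pixel), uses hypothetical function names, and assumes the usual inference-time BatchNorm formula with fixed statistics.

```python
import math


def linear(weights, bias, x):
    """Per-output-channel dot product, standing in for a 1x1 convolution."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def batchnorm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-time BatchNorm using fixed per-channel statistics."""
    return [g * (yi - mu) / math.sqrt(v + eps) + bt
            for yi, g, bt, mu, v in zip(y, gamma, beta, mean, var)]


def fold_batchnorm(weights, bias, gamma, beta, mean, var, eps=1e-5):
    """Pre-compute weights and bias so that one layer replaces conv + BatchNorm."""
    folded_w, folded_b = [], []
    for row, b, g, bt, mu, v in zip(weights, bias, gamma, beta, mean, var):
        scale = g / math.sqrt(v + eps)
        folded_w.append([w * scale for w in row])
        folded_b.append((b - mu) * scale + bt)
    return folded_w, folded_b


if __name__ == "__main__":
    w = [[0.5, -1.0], [2.0, 0.25]]
    b = [0.1, -0.2]
    gamma, beta = [1.5, 0.8], [0.0, 0.3]
    mean, var = [0.2, -0.1], [0.9, 1.6]
    x = [1.0, 2.0]

    two_steps = batchnorm(linear(w, b, x), gamma, beta, mean, var)
    fw, fb = fold_batchnorm(w, b, gamma, beta, mean, var)
    one_step = linear(fw, fb, x)
    print(two_steps, one_step)  # the two results agree up to rounding
```

Since the folded weights are computed once ahead of time, the BatchNorm operator and its intermediate tensor disappear from the inference graph.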

In addition to single node software optimizations, multi-node software optimizations are also explored to show better scale-up/scale-out efficiency. Data/model/hybrid parallelism is a well-known technique for multi-node training, together with computation/communication overlap (or pipeline overlap) and some novel communication collective algorithms (e.g., ring-based all-reduce).
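As an illustration of the ring-based all-reduce mentioned above, the following sketch simulates the algorithm in a single Python process. It is an assumption-laden toy: the workers are plain lists, "sending" is a list copy, the function name is hypothetical, and the vector length must be divisible by the number of workers. Real multi-node training would rely on a communication library instead.

```python
def ring_all_reduce(worker_data):
    """Simulate a ring all-reduce (sum) over a list of equally sized vectors.

    Returns one fully reduced vector per simulated worker.
    """
    n = len(worker_data)
    length = len(worker_data[0])
    if length % n != 0:
        raise ValueError("vector length must be divisible by the worker count")
    size = length // n
    # chunks[r][i] is chunk i of the vector held by worker r.
    chunks = [[list(vec[i * size:(i + 1) * size]) for i in range(n)]
              for vec in worker_data]

    # Phase 1, reduce-scatter: after n - 1 steps, worker r holds the
    # complete sum for chunk (r + 1) % n.
    for step in range(n - 1):
        messages = [(r, (r - step) % n, list(chunks[r][(r - step) % n]))
                    for r in range(n)]
        for sender, idx, payload in messages:
            receiver = (sender + 1) % n
            chunks[receiver][idx] = [a + b for a, b in
                                     zip(chunks[receiver][idx], payload)]

    # Phase 2, all-gather: circulate the completed chunks around the ring.
    for step in range(n - 1):
        messages = [(r, (r + 1 - step) % n, list(chunks[r][(r + 1 - step) % n]))
                    for r in range(n)]
        for sender, idx, payload in messages:
            receiver = (sender + 1) % n
            chunks[receiver][idx] = payload

    # Flatten each worker's chunks back into a single vector.
    return [[v for chunk in worker for v in chunk] for worker in chunks]


if __name__ == "__main__":
    gradients = [[1, 2, 3], [10, 20, 30], [100, 200, 300]]
    print(ring_all_reduce(gradients))
```

Each worker only ever exchanges one chunk per step with its ring neighbor, so the data volume sent per worker stays roughly constant as the number of workers grows.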

How You Can Access Intel’s Software Optimizations

Through our work in software, we continuously make performance improvements for deep learning frameworks. There are multiple ways to gain access to Intel’s software optimizations that enhance the current install base and benefit future platforms.

The Intel® AI Analytics Toolkit Powered by oneAPI gives developers, researchers, and data scientists familiar Python* tools to accelerate each step in the pipeline—training deep neural networks, integrating trained models into applications for inference, and executing functions for data analytics and machine learning workloads. The toolkit includes:

PyTorch Optimized for Intel® Technology. This includes Intel optimizations upstreamed to mainline PyTorch as well as the Intel extension for PyTorch, which is intended to improve the out-of-box experience for our customers.

Intel® Optimization for TensorFlow. In collaboration with Google*, TensorFlow has been directly optimized for Intel® architecture to achieve high performance on Intel® Xeon® Scalable processors. Intel also offers AI containers. We publish the Docker image of Intel® Optimization for TensorFlow on Docker Hub. The following tags are used:

- 3rd Gen Intel Xeon Scalable Processors image: intel/intel-optimized-tensorflow:tensorflow-2.2-bf16-nightly

- 2nd Gen Intel Xeon Scalable Processors image: intelaipg/intel-optimized-tensorflow:latest-prs-b5d67b7-avx2-devel-mkl-py3

Model Zoo for Intel® Architecture. This repository contains links to pre-trained models, sample scripts, best practices, and step-by-step tutorials for more than 40 popular open-source machine learning models optimized by Intel to run on Intel® Xeon® Scalable processors. We are also contributing to Google Model Garden by adding Intel-optimized models.

The Intel® Low Precision Inference Toolkit accelerates deep learning inference workloads. It converts FP32 models to INT8 precision so that customers can deploy low-precision inference solutions rapidly.
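To give a feel for what an FP32-to-INT8 conversion involves, here is a generic sketch of symmetric per-tensor quantization. It is not the toolkit's algorithm or API: the function names are hypothetical, and the calibration is reduced to taking the largest absolute value of the input.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    # Assumed calibration: the largest magnitude maps to 127.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the INT8 representation."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [-2.0, 0.0, 0.5, 1.5]
    q, scale = quantize_int8(weights)
    print(q, scale)
    print(dequantize(q, scale))  # close to the original values
```

The rounding error per value is bounded by half the scale factor, which is why choosing a good scale (calibration) matters for preserving accuracy.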

The Intel® Distribution for Python enables customers to speed up computational packages without code changes.

Intel’s oneAPI Deep Neural Network Library (oneDNN) is an open-source performance library that contains basic building blocks for neural networks optimized for Intel Architecture Processors and Intel Processor Graphics. oneDNN is the default for CPU in PyTorch and MXNet binaries and is in the process of being added to TensorFlow.

Conclusion

Intel® Xeon® Scalable processors make it easy to run complex AI workloads on the same hardware as general-purpose compute workloads. In addition to innovating and releasing AI features in each generation of Intel Xeon Scalable processors, Intel delivers software optimizations that take advantage of those hardware features and continue to bring significant speedups to popular AI workloads. Developers and end customers should stay up to date and take advantage of the Intel-optimized frameworks and software toolkits that are designed to unleash the performance of Intel platforms.

About the Authors

Huma Abidi is a Senior Director of AI Software Products at Intel, responsible for strategy, roadmaps, requirements, validation, and benchmarking of DL, ML, and analytics software products. She leads a globally diverse team of engineers and technologists responsible for delivering AI products that enable customers to create AI solutions.

Haihao Shen is a senior deep learning engineer in Machine Learning Performance (MLP) within the Intel Architecture, Graphics and Software Group (IAGS). He leads the benchmarking of deep learning frameworks and the development of low-precision optimization tools. He has more than 10 years of experience working on software optimization and verification at Intel. Prior to joining Intel, he received his master's degree from Shanghai Jiao Tong University.

References

- 3rd Generation Intel Xeon Scalable Processors Product Brief

- https://www.intel.com/content/dam/www/public/us/en/documents/product-overviews/dl-boost-product-overview.pdf

- TensorFlow Blog

- MXNet Blog

- https://www.intel.com/content/www/us/en/artificial-intelligence/posts/intel-facebook-boost-bfloat16.html

- Google: https://blog.tensorflow.org/2020/06/accelerating-ai-performance-on-3rd-gen-processors-with-tensorflow-bfloat16.html

- Tencent: https://www.intel.com/content/www/us/en/artificial-intelligence/posts/intel-xeon-text-to-speech.html

- Alibaba: https://www.intel.com/content/www/us/en/artificial-intelligence/posts/alibaba-blog.html

- Matroid: https://builders.intel.com/ai/testimonial

Configurations:

ResNet50 v1.5

- Jun-18: training, torch v0.4, KMP_AFFINITY=granularity=fine,noduplicates,compact,1,0 OMP_NUM_THREADS=28 python benchmark_.py --arch resnet50 --num-iters=20; inference, torch v0.4, KMP_AFFINITY=granularity=fine,noduplicates,compact,1,0 OMP_NUM_THREADS=28 python benchmark_.py --arch resnet50 --num-iters=20 --inference

- Apr-19: training, torch v1.01, KMP_AFFINITY=granularity=fine,noduplicates,compact,1,0 OMP_NUM_THREADS=28 python benchmark_.py --arch resnet50 --num-iters=20; inference: MLPerf v0.5 submission + patch: https://github.com/pytorch/pytorch/pull/25235, ./inferencer --net_conf resnet50 --log_level 0 --w 20 --batch_size 128 --iterations 1000 --device_type ideep --dummy_data true --random_multibatch false --numa_id 0 --init_net_path resnet50/init_net_int8.pbtxt --predict_net_path resnet50/predict_net_int8.pbtxt --shared_memory_option USE_LOCAL --shared_weight USE_LOCAL --data_order NHWC --quantized true

- Jun-20: training, https://github.com/pytorch/pytorch/tree/gh/xiaobingsuper/18/orig, KMP_AFFINITY=granularity=fine,noduplicates,compact,1,0 OMP_NUM_THREADS=28 python benchmark_.py --arch resnet50 --num-iters=20; inference, same as Apr-19

Transformer

- Apr-19: training, docker intelaipg/intel-optimized-tensorflow:latest-prs-b5d67b7-avx2-devel-mkl-py3, OMP_NUM_THREADS=28 python ./benchmarks/launch_benchmark.py --framework tensorflow --precision fp32 --mode training --model-name transformer_mlperf --num-intra-threads 28 --num-inter-threads 1 --data-location transformer_data --random_seed=11 train_steps=100 steps_between_eval=100 params=big save_checkpoints="No" do_eval="No" print_iter=10; inference, docker intelaipg/intel-optimized-tensorflow:latest-prs-b5d67b7-avx2-devel-mkl-py3, OMP_NUM_THREADS=28 python benchmarks/launch_benchmark.py --model-name transformer_lt_official --precision fp32 --mode inference --framework tensorflow --batch-size 64 --num-intra-threads 28 --num-inter-threads 1 --in-graph fp32_graphdef.pb --data-location transformer_lt_official_fp32_pretrained_model/data -- file=newstest2014.en file_out=translate.txt reference=newstest2014.de vocab_file=vocab.txt NOINSTALL=True

- Jun-20: training, docker i intel/intel-optimized-tensorflow:tensorflow-2.2-bf16-nightly, OMP_NUM_THREADS=28 python ./benchmarks/launch_benchmark.py --framework tensorflow --precision fp32 --mode training --model-name transformer_mlperf --num-intra-threads 28 --num-inter-threads 1 --data-location transformer_data --random_seed=11 train_steps=100 steps_between_eval=100 params=big save_checkpoints="No" do_eval="No" print_iter=10; inference, docker intelaipg/intel-optimized-tensorflow:latest-prs-b5d67b7-avx2-devel-mkl-py3, OMP_NUM_THREADS=28 python benchmarks/launch_benchmark.py --model-name transformer_lt_official --precision fp32 --mode inference --framework tensorflow --batch-size 64 --num-intra-threads 28 --num-inter-threads 1 --in-graph fp32_graphdef.pb --data-location transformer_lt_official_fp32_pretrained_model/data -- file=newstest2014.en file_out=translate.txt reference=newstest2014.de vocab_file=vocab.txt NOINSTALL=True

BERT

- Apr-19: inference, docker intelaipg/intel-optimized-tensorflow:latest-prs-b5d67b7-avx2-devel-mkl-py3, OMP_NUM_THREADS=28 python run_squad.py --init_checkpoint=/tf_dataset/dataset/data-bert-squad/squad-ckpts/model.ckpt-3649 --vocab_file=/tf_dataset/dataset/data-bert-squad/uncased_L-24_H-1024_A-16/vocab.txt --bert_config_file=/tf_dataset/dataset/data-bert-squad/uncased_L-24_H-1024_A-16/bert_config.json --predict_file=/tf_dataset/dataset/data-bert-squad/uncased_L-24_H-1024_A-16/dev-v1.1.json --precision=fp32 --output_dir=/root/logs --predict_batch_size=32 --do_predict=True --mode=benchmark

- Jun-20: inference, docker i intel/intel-optimized-tensorflow:tensorflow-2.2-bf16-nightly, OMP_NUM_THREADS=28 python launch_benchmark.py --model-name bert_large --precision fp32 --mode inference --framework tensorflow --batch-size 32 --socket-id 0 --docker-image intel/intel-optimized-tensorflow:tensorflow-2.2-bf16-nightly --data-location dataset/bert_large_wwm/wwm_uncased_L-24_H-1024_A-16 --checkpoint /tf_dataset/dataset/data-bert-squad/squad-ckpts --benchmark-only --verbose -- https_proxy=http://proxy.ra.intel.com:912 http_proxy=http://proxy.ra.intel.com:911 DEBIAN_FRONTEND=noninteractive init_checkpoint=model.ckpt-3649 infer_option=SQuAD

Wide & Deep

- Jun-18: training, mxnet 1.3, KMP_AFFINITY=granularity=fine,noduplicates,compact,1,0 OMP_NUM_THREADS=28 python train.py, inference, mxnet 1.3, KMP_AFFINITY=granularity=fine,noduplicates,compact,1,0 OMP_NUM_THREADS=28 numactl --physcpubind=0-27 --membind=0 python inference.py --accuracy True

- Apr-19: training, mxnet 1.4, KMP_AFFINITY=granularity=fine,noduplicates,compact,1,0 OMP_NUM_THREADS=28 python train.py, inference, mxnet 1.4, KMP_AFFINITY=granularity=fine,noduplicates,compact,1,0 OMP_NUM_THREADS=28 numactl --physcpubind=0-27 --membind=0 python inference.py --accuracy True

- Jun-20: training, mxnet 1.7, KMP_AFFINITY=granularity=fine,noduplicates,compact,1,0 OMP_NUM_THREADS=28 python train.py, inference, mxnet 1.7, KMP_AFFINITY=granularity=fine,noduplicates,compact,1,0 OMP_NUM_THREADS=28 numactl --physcpubind=0-27 --membind=0 python inference.py --accuracy True

# training

python train.py

# inference

# fp32

numactl --physcpubind=0-23 --membind=0 python inference.py --accuracy True

# Int8

numactl --physcpubind=0-23 --membind=0 python inference.py --symbol-file=WD-quantized-162batches-naive-symbol.json --param-file=WD-quantized-0000.params --accuracy True

Mary is the Community Manager for this site. She likes to bike, and do college and career coaching for high school students in her spare time.
