Two key aspects of autonomous systems are perception and decision making. The first, perception, has benefited tremendously from advances in deep learning in areas such as computer vision. The second, decision making, falls largely under the umbrella of Reinforcement Learning (RL).

The main premise of RL is that an agent learns to shape its actions to maximize a reward simply by interacting with its environment, which is similar to how humans learn. The rapid development of deep learning techniques in recent years has improved the perceptive capabilities of autonomous agents, enabling them to process raw sights and sounds from their environment. Modern RL methods often leverage these capabilities so that agents can interact with their environment more efficiently and make better decisions.

Earlier this year, Intel AI researchers presented CERL, a novel framework that enabled agents to learn challenging continuous control tasks, such as training a 3D humanoid model to walk from scratch. We demonstrated that combining gradient-based learning with evolutionary approaches could solve these problems in a data-efficient manner.

MERL: From Single Agents to Multiagent Systems

Multiagent problems add another layer of complexity, as agents need to strike a balance between acting in their own self-interest (local reward) and acting in the interest of the collective (team reward). For example, in soccer, we want our forward agents to dribble, shoot, and score goals. However, the team as a whole needs to learn to win games. In some circumstances, such as protecting a lead in the final stages of a game, a forward agent may need to give up its goal-scoring prerogative and instead drop back to help defend the lead.

Local rewards are usually dense, providing quick feedback. Team rewards, in contrast, are usually much sparser. So how does one get agents to use their dense local rewards to learn useful skills while preventing “greedy,” self-interested strategies and nudging them toward working as a team?

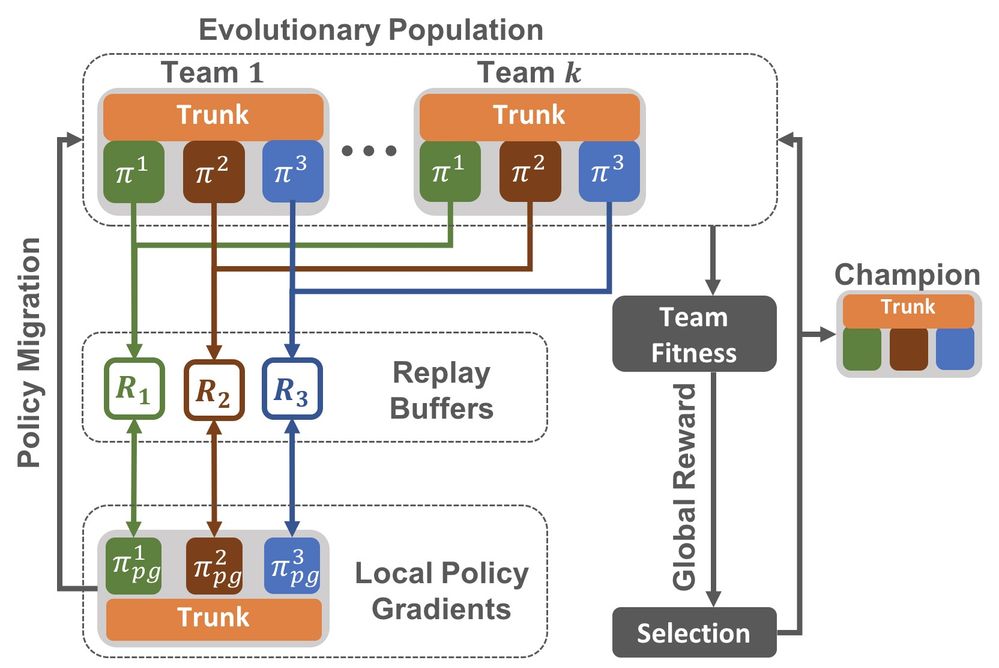

That’s why we’ve developed MERL, a scalable, data-efficient method for training a team of agents to jointly solve a coordination task. Figure 1 shows the basic strategy. A set of agents is represented as a multi-headed neural network with a common trunk. We split the learning objective into two optimization processes that run simultaneously. For each agent, we use a policy gradient method to optimize its dense local rewards. For the sparser team objective, we use an evolutionary method similar to our approach in CERL. This lets us optimize both objectives at the same time without explicitly mixing them. We construct a population of teams, and each team is evaluated on its performance on the actual task. After each evaluation, strong teams are retained, weak teams are eliminated, and new teams are formed by applying genetic operators such as mutation and crossover to the elite survivors.
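To make the evolutionary half of this loop concrete, here is a minimal Python sketch of one generation over a population of teams. It is an illustrative assumption, not MERL’s implementation: each agent is a flat list of floats standing in for a policy head, `team_fitness` is a placeholder for running a team on the task, and `evolve_generation`, `mutate`, and `crossover` are hypothetical helpers. Crossover here swaps whole agents per team position, which is one simple choice among many.

```python
import random
from typing import Callable, List

Agent = List[float]  # hypothetical: flat weights standing in for one policy head
Team = List[Agent]   # one agent per team position


def mutate(team: Team, sigma: float, rng: random.Random) -> Team:
    """Return a copy of `team` with Gaussian noise added to every weight."""
    return [[w + rng.gauss(0.0, sigma) for w in agent] for agent in team]


def crossover(a: Team, b: Team, rng: random.Random) -> Team:
    """Build a child team by taking each position's agent from either parent."""
    return [list(rng.choice((x, y))) for x, y in zip(a, b)]


def evolve_generation(population: List[Team],
                      team_fitness: Callable[[Team], float],
                      num_elites: int,
                      sigma: float,
                      rng: random.Random) -> List[Team]:
    """One generation: rank teams on the sparse team objective, keep the
    elites unchanged, and refill the population with mutated crossovers.
    Assumes num_elites >= 1."""
    ranked = sorted(population, key=team_fitness, reverse=True)
    elites = [[list(agent) for agent in team] for team in ranked[:num_elites]]
    children: List[Team] = []
    while len(elites) + len(children) < len(population):
        if len(elites) > 1:
            p1, p2 = rng.sample(elites, 2)
        else:
            p1 = p2 = elites[0]
        children.append(mutate(crossover(p1, p2, rng), sigma, rng))
    return elites + children
```

With a deterministic fitness function, copying the elites into the next generation unchanged guarantees that the best team score never decreases from one generation to the next.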

Periodically, agents that are trained using policy gradients are inserted into the evolutionary population to provide building blocks for the evolutionary search process. At any given time, the team with the highest score for the task is considered the champion team.

Figure 1: MERL Basic Strategy.
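The migration of gradient-trained agents and the choice of champion team can be sketched as follows. This is again an assumption-laden illustration: `migrate_pg_team` and `champion` are hypothetical names, and overwriting the lowest-scoring team with a copy of the gradient-trained team is one simple migration rule, not necessarily the one MERL uses.

```python
import copy
from typing import Callable, List, Tuple

Team = List[List[float]]  # hypothetical: one flat weight list per team position


def migrate_pg_team(population: List[Team], pg_team: Team,
                    team_fitness: Callable[[Team], float]) -> List[Team]:
    """Overwrite the weakest team with a deep copy of the gradient-trained
    team so the evolutionary search can reuse its agents as building blocks."""
    scores = [team_fitness(team) for team in population]
    weakest = min(range(len(population)), key=scores.__getitem__)
    new_population = list(population)
    new_population[weakest] = copy.deepcopy(pg_team)
    return new_population


def champion(population: List[Team],
             team_fitness: Callable[[Team], float]) -> Tuple[int, Team]:
    """Return (index, team) of the highest-scoring team on the task."""
    scores = [team_fitness(team) for team in population]
    best = max(range(len(population)), key=scores.__getitem__)
    return best, population[best]
```

The deep copy keeps the population independent of the gradient learner, which keeps updating its own weights after migration. In this sketch the migrated team gets no special protection: whether it persists depends on how it ranks under the team objective in later selection rounds.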

Additionally, each team member is allocated a shared “replay buffer”: a data repository where it stores its experiences as it explores. We construct one shared buffer per team position, so a team member can learn from the experiences of its counterparts in that position across all of the teams. Using this split-level approach, we demonstrate state-of-the-art performance on a number of difficult benchmarks.
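A minimal sketch of the per-position shared buffers, assuming a fixed-capacity FIFO buffer and uniform sampling (both our assumptions; `SharedReplayBuffers` is a hypothetical class, and a transition is whatever tuple your learner stores):

```python
import random
from collections import deque
from typing import Any, Deque, List


class SharedReplayBuffers:
    """One FIFO replay buffer per team position, shared by every team."""

    def __init__(self, num_positions: int, capacity: int):
        # deque(maxlen=...) silently drops the oldest transition when full.
        self.buffers: List[Deque[Any]] = [
            deque(maxlen=capacity) for _ in range(num_positions)
        ]

    def add(self, position: int, transition: Any) -> None:
        # The agent at `position` in ANY team writes into the same buffer.
        self.buffers[position].append(transition)

    def sample(self, position: int, batch_size: int,
               rng: random.Random) -> List[Any]:
        """Uniformly sample up to `batch_size` transitions without replacement."""
        buf = self.buffers[position]
        return rng.sample(list(buf), min(batch_size, len(buf)))
```

Because buffers are indexed by position rather than by team, the policy-gradient learner for position 0 trains on experience gathered by every team’s position-0 agent.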

Benchmarking Performance

Figure 2: POI Navigation using MERL.

One example of such a task is shown in Figure 2. Four points of interest (POIs) are distributed in a square field, and a set of agents must jointly “observe” them. A POI is “observed” if at least one agent reaches it within a set time. Here, the individual objective is to learn skills like navigating to a POI, while the team objective is to maximize the number of POIs observed. This implies that agents may have to sacrifice their own performance in the interest of the team (e.g., by passing up the closest POI when a teammate is better placed to reach it). The red agent shown above, trained using MERL, initially heads toward the closest POI. However, after realizing that a teammate (yellow) is heading toward the same POI, it switches targets and heads toward another POI, sacrificing its local reward for the good of the team.
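The tension between the two signals can be illustrated with a toy version of this task. In the sketch below, the dense local reward is assumed to be the negative distance from an agent to its nearest POI, and the team reward is the fraction of POIs with at least one agent within an assumed observation `radius`; both are illustrative choices, not the reward definitions from our paper. `math.dist` requires Python 3.8 or later.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def local_reward(agent: Point, pois: Sequence[Point]) -> float:
    """Dense per-agent signal (assumed shaping): closer to any POI is better."""
    return -min(math.dist(agent, p) for p in pois)


def team_coverage(agents: Sequence[Point], pois: Sequence[Point],
                  radius: float) -> float:
    """Sparse team signal: fraction of POIs with at least one agent in range."""
    observed = sum(any(math.dist(a, p) <= radius for a in agents) for p in pois)
    return observed / len(pois)


pois = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
greedy = [(0.0, 0.0), (0.0, 0.0)]        # both agents on the same POI
cooperative = [(0.0, 0.0), (10.0, 0.0)]  # agents split across two POIs
```

In both configurations every agent sits on a POI, so each local reward is zero (the best possible), yet `team_coverage(greedy, pois, 1.0)` is 0.25 while `team_coverage(cooperative, pois, 1.0)` is 0.5. Only the team signal distinguishes the two outcomes.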

A more complex coordination problem arises when multiple agents must reach a POI simultaneously. We call the number of agents required the coupling factor. Figure 3 shows an example. The number within each POI denotes how many agents are next to it. Once the required number of agents reach it at the same time, the POI lights up to indicate that it has been “observed.”
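The coupling requirement changes the team reward from “someone got there” to “enough agents got there at the same time.” A sketch of that check, assuming positions are sampled at discrete timesteps and that an agent counts as being at a POI when it is within an assumed `radius` (`coupled_coverage` is a hypothetical helper):

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def coupled_coverage(trajectories: Sequence[Sequence[Point]],
                     pois: Sequence[Point],
                     radius: float,
                     coupling: int) -> float:
    """trajectories[i][t] is agent i's position at step t. A POI counts as
    observed if, at some single step t, at least `coupling` agents are
    within `radius` of it. Returns the fraction of POIs observed."""
    horizon = min(len(traj) for traj in trajectories)
    observed = 0
    for poi in pois:
        for t in range(horizon):
            nearby = sum(math.dist(traj[t], poi) <= radius for traj in trajectories)
            if nearby >= coupling:
                observed += 1
                break  # each POI is counted at most once
    return observed / len(pois)
```

Two agents arriving at the same POI at different timesteps do not satisfy a coupling of 2, which is one reason rewarding trajectories become rarer as the coupling grows.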

Figure 3: MADDPG vs. MERL for Coupling 3.

In real life, this could be a search-and-rescue requirement. For example, lifting a heavy rock might require at least 3 robots, with any fewer earning zero reward. Additionally, the agents do not know the coupling factor ahead of time. This makes the problem especially difficult, since successful trajectories, and hence learnable team rewards, become even sparser.

Here, we see that MADDPG, a state-of-the-art multiagent RL algorithm, fails to solve the coordination problem. The individual agents successfully learn to navigate but are unable to turn that skill toward the global objective. In contrast, MERL shows clear team formation and collaborative pursuit of POIs: two teams of three agents form, and each team pursues different POIs, together achieving 75% coverage. In our paper, we show that MERL scales to a coupling of seven, achieving a 50% observation rate, while other baselines fail completely beyond a coupling of two. We also show that these results hold consistently across a number of other multiagent RL coordination benchmarks.

Next steps

Our team at Intel AI continues to push the state of the art in decision-making systems. We are exploring similar problems involving multi-task learning in scenarios that have no well-defined reward feedback. We are also exploring the role of communication in solving such tasks. All of these represent a class of problems that is a step up from simple perception, and a step toward cognitive systems that can perceive and act on their own.

Our paper will be presented at the Deep RL Workshop at NeurIPS 2019. It was authored by Intel AI researchers Shauharda Khadka, Somdeb Majumdar, and Santiago Miret, along with our collaborators Stephen McAleer of the University of California, Irvine and Kagan Tumer of Oregon State University.

Tests document performance of components on a particular test, in specific systems. Differences in hardware, software, or configuration will affect actual performance.

© Intel Corporation. Intel, the Intel logo, and Xeon are trademarks of Intel Corporation or its subsidiaries in the U.S. and/or other countries. Other names and brands may be claimed as the property of others.