On the surface, the AWS DeepLens allows those new to deep learning to easily create and deploy vision models accelerated by the OpenVINO toolkit and Model Optimizer. However, for more advanced users, there’s a lot more to be found under the hood. The DeepLens features an Intel Atom® x5 processor and utilizes the Compute Library for Deep Neural Networks (clDNN) with the OpenVINO toolkit for vision processing through AWS’s DeepLens packages. A great way to utilize the Model Optimizer is by treating the DeepLens as an Ubuntu* 16.04 Intel® NUC with a pre-attached camera and an Intel Atom processor.

Instead of demonstrating cloud deployment with Amazon Web Services (AWS), this tutorial approaches the DeepLens as an independent system and demonstrates how to seamlessly integrate it into a TensorFlow* image classification workflow with AWS’s wrapper for the Model Optimizer. As a demo, I built a complete application to sort waste into different categories to bolster sustainability at Intel offices. The code you’ll see in the following sections is more of a template; the base is correct but you’ll need to edit it to get it to run properly.

First, you’ll need a keyboard, mouse, and monitor to interface with the DeepLens. Hook it up directly like you would any regular computer and connect it to a network. The local account password will be the same as the default or the SSH password set during AWS configuration.

Creating a Virtual Environment with the Model Optimizer and awscam

The AWS DeepLens software package is only installed in Python* 2 on the DeepLens. While it most likely could be easily ported to Python 3, we’re going to stick with Python 2.

Before we begin, it’s good practice to update the AWS software to make sure that all the required files are in the correct place. Run the following:

sudo apt-get update

sudo apt-get upgrade awscam

To contain our environment and make sure other aspects of the DeepLens aren’t altered, we’ll create a Python virtual environment using the virtualenv package from the home directory.

pip2 install virtualenv

python -m virtualenv ~/venv

source ~/venv/bin/activate

We should now be in the new virtual environment. With a few commands, we can add the Model Optimizer packages to it. First, add the awscam package by creating a path file that points to its location in /opt/awscam.

echo '/opt/awscam/lib' >> ~/venv/lib/python2.7/site-packages/awscam.pth
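The path file works because Python reads every .pth file in a site-packages directory at startup and appends each existing directory listed in it to sys.path. The following sketch reproduces that behavior in throwaway directories; the temporary directories are made up for illustration and stand in for ~/venv/lib/python2.7/site-packages and /opt/awscam/lib.

```python
import os
import site
import sys
import tempfile

# Stand-ins for the real locations (hypothetical temp directories).
site_packages = os.path.realpath(tempfile.mkdtemp())  # plays the role of site-packages
package_dir = os.path.realpath(tempfile.mkdtemp())    # plays the role of /opt/awscam/lib

# Equivalent of: echo '<package_dir>' >> <site_packages>/awscam.pth
with open(os.path.join(site_packages, "awscam.pth"), "a") as pth_file:
    pth_file.write(package_dir + "\n")

# Python does this automatically for real site-packages directories at startup.
site.addsitedir(site_packages)

print(package_dir in sys.path)
```

Note that only directories that actually exist are added, so a typo in the path file fails silently.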

Next, copy the AWS Model Optimizer wrapper to the Python packages folder.

cp /usr/local/lib/python2.7/dist-packages/mo.py ~/venv/lib/python2.7/site-packages/mo.py

With the DeepLens we can install TensorFlow up to version 1.5, along with some other packages used in this tutorial.

pip install tensorflow==1.5.0

pip install opencv-python # Provides the cv2 module

pip install pillow

sudo apt-get install python-tk # Not usually necessary, most python installs have tkinter already!

Your virtual environment should now have all of the necessary packages. This local environment will allow for custom TensorFlow code, like CNN visualizations, to run easily on the device.

The Model Optimizer

The AWS packages we just added interface with the Model Optimizer included within the OpenVINO toolkit. The awscam package and its getLastFrame function simply pull frames from the video camera and convert them to an OpenCV representation. The mo package is more interesting. It essentially executes commands to feed specified models through the OpenVINO toolkit.

The Model Optimizer takes frozen models from a variety of supported platforms and converts them to an intermediate representation after analysis. It internally adjusts the model for optimal execution and performs operations like a conversion from FP32 to FP16 for faster execution. This intermediate representation can then take advantage of specialized Intel hardware-level acceleration. You can read more about the Model Optimizer here.
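To get a feel for what the FP32 to FP16 conversion trades away, the sketch below round-trips a few made-up weight values through half precision using only Python's standard library. It illustrates the number format, not the Model Optimizer's own code, and it assumes Python 3.6 or newer for the struct module's "e" format, so it will not run in the Python 2 environment on the DeepLens.

```python
import struct

def to_fp16_and_back(value):
    """Round-trip a Python float through IEEE 754 half precision."""
    return struct.unpack("<e", struct.pack("<e", value))[0]

weights = [0.1, -1.2345678, 3.14159265]  # hypothetical weight values

for w in weights:
    w16 = to_fp16_and_back(w)
    print("original %.8f -> fp16 %.8f (abs error %.2e)" % (w, w16, abs(w - w16)))

# Half the storage per weight: 4 bytes for FP32, 2 bytes for FP16.
fp32_bytes = struct.calcsize("<f")
fp16_bytes = struct.calcsize("<e")
print(fp32_bytes, fp16_bytes)
```

The small rounding errors are usually an acceptable price for halving the memory traffic per weight.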

Using the AWS Wrappers

The DeepLens AWS wrappers we added to the virtual environment in the previous section make using the Model Optimizer for vision processing really easy. The mo.optimize function takes in a pre-existing model of a supported topology and converts it to the intermediate representation. Then the awscam.Model function will take that intermediate representation and prepare it for inference with the returned object's doInference function. In the following five-line template we can optimize a model and run accelerated inference on the Intel Atom® x5 processor with Intel® Iris® graphics:

error, model_path = mo.optimize(model_name, input_width, input_height, 'tf', aux_inputs=aux_inputs) # Creates IR

model = awscam.Model(model_path, {"GPU" : 1}) # Initializes optimized model on GPU

ret, frame = awscam.getLastFrame() # Gets a video frame from the camera

processed_frame = preprocess_image(frame) # Perform standard image preprocessing

inferOutput = model.doInference(processed_frame) # Runs the frame on the optimized model

The above is simplified, but as you will see the same principles can be easily applied to any project.

Custom Image Classification Workflow

Now that we’ve seen the basics of the Model Optimizer, we can use it and the DeepLens in conjunction to create a custom image classification workflow. Though these same principles can be applied to any image classification problem, I specifically looked at waste classification to get the DeepLens to determine whether or not waste was recyclable, compostable, or had to be thrown away. The initial network was trained using a transfer learning algorithm in Keras and was deployed to a simple Python app on the DeepLens that was used for inference and collecting more data.

Data Collection

The waste dataset I compiled was taken from a few sources. I wrote a Python script to download images from Google* and Bing* that satisfied specific search criteria. I wrote a different script to download images from the ImageNet database. I took some images from the trashnet dataset. I also used images of my own. Each image was fed to a processing function that performed resizing, padding, and rotation for uniformity. I ended up with a little over three thousand images total. For training, I uploaded the formatted dataset to AWS S3.
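The geometry behind the resize-and-pad step can be sketched without any imaging library. The helper below is hypothetical: its name and the 224 by 224 target are assumptions, chosen to match the ResNet50 input size used later in this post. It only computes the scaled size and the padding needed to center an image on a square canvas, leaving the pixel work to whichever library you prefer.

```python
def fit_with_padding(width, height, target=224):
    """Scale (width, height) to fit inside a target x target square, keeping
    the aspect ratio, and return the new size plus the padding on each side."""
    scale = float(target) / max(width, height)
    new_w = max(1, int(round(width * scale)))
    new_h = max(1, int(round(height * scale)))
    pad_left = (target - new_w) // 2
    pad_top = (target - new_h) // 2
    pad_right = target - new_w - pad_left
    pad_bottom = target - new_h - pad_top
    return (new_w, new_h), (pad_left, pad_top, pad_right, pad_bottom)

# A 640x480 (4:3) image scaled and centered on a 224x224 canvas.
size, padding = fit_with_padding(640, 480)
print(size, padding)
```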

Network Tuning for the Model Optimizer

As the Model Optimizer supports the largest topologies in deep learning, you have a broad selection of frameworks to choose from. I chose Keras with a TensorFlow backend. The Keras framework on top of TensorFlow makes it really easy to quickly train models that can be deployed anywhere. All popular image models have pre-trained, easily instantiated versions within the Keras Applications package, including Inception, ResNet, VGG, MobileNet, and Inception-ResNet. I opted to use ResNet50 as it strikes a good balance between accuracy and inference speed (it’s also what DeepLens’ default image classification algorithm uses).

The Model Optimizer works with most models, but there are a few caveats you should keep in mind when training. Here are two that I found:

1. Models must be in a “frozen” state where weights cannot be trained. For example, the moving mean and variance values of batch norm layers have to be frozen.

2. Though most graph operations are supported, a few aren’t. When defining a custom model in Keras, I used a Flatten layer, which utilizes the tf.stack TensorFlow operation which isn’t supported. However, a quick switch to a Reshape Layer based off of tf.reshape fixed the issue.

See this page for a list of supported TensorFlow layers and operations.

We can easily instantiate a model for transfer learning with gradient descent as follows:

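import keras
from keras.layers import Dense, Reshape
from keras.models import Model

# Pre-trained ResNet50 without its ImageNet classification head
base_model = keras.applications.resnet50.ResNet50(weights='imagenet', include_top=False, input_shape=(224, 224, 3))
x = base_model.output
x = Reshape((2048,))(x) # Reshape instead of Flatten, which the Model Optimizer doesn't support
predictions = Dense(num_classes, activation='softmax')(x) # num_classes: number of categories in your dataset
model = Model(inputs=base_model.input, outputs=predictions)
sgd = keras.optimizers.SGD(lr=1e-3, decay=1e-6, momentum=0.9, nesterov=True)
model.compile(optimizer=sgd, loss='categorical_crossentropy', metrics=['accuracy'])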

The greatest part of doing image classification with Keras is the built-in ImageDataGenerator class that automatically augments images. Its documentation can be found here. I used these data generators for both the training and validation images in conjunction with their flow_from_directory function. It’s important to also apply the preprocessing function for the model you’re using to each image. Then, use a fit generator to train. Here’s an example.

from keras.applications.resnet50 import preprocess_input
from keras.preprocessing.image import ImageDataGenerator

train_data_gen = ImageDataGenerator(preprocessing_function=preprocess_input) # ... plus params for image distortion
train_batch_generator = train_data_gen.flow_from_directory(train_dir, target_size=(height, width), batch_size=batch_size) # Keras expects (height, width)
# ...
model.fit_generator(train_batch_generator) # ... plus more training params, like epoch steps, validation gen, etc.

I ran this on a training set of around 2600 images divided among 21 different types of waste and tuned hyperparameters using a separate validation set until I was satisfied with the model’s accuracy on a held-out test set. I trained with CPU on an AWS EC2 instance with a Deep Learning AMI running Intel MKL-DNN optimized TensorFlow. However, you can use any tool you like. SageMaker, another AWS tool a bit closer to the DeepLens stack (with TensorFlow support), allows experts to train on custom containers through a Jupyter Notebook interface. As transfer learning jobs are usually smaller, they can be done locally on most modern laptops with a bit of patience.
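A train, validation, and test split like the one described can be made with a few lines of standard-library Python. This is a sketch under assumptions: the 80/10/10 proportions, the fixed seed, and the file names are illustrative and not the exact values used for the waste dataset.

```python
import random

def split_dataset(paths, val_fraction=0.1, test_fraction=0.1, seed=0):
    """Shuffle file paths reproducibly and split them into train/val/test lists."""
    shuffled = list(paths)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    n_val = int(len(shuffled) * val_fraction)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

# Hypothetical file names standing in for the real images.
all_images = ["img_%04d.jpg" % i for i in range(3000)]
train, val, test = split_dataset(all_images)
print(len(train), len(val), len(test))
```

In practice you would likely run this once per class folder, so that every category shows up in each split.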

We can then use the Keras model save and model load functions to convert the Keras model to a frozen TensorFlow graph that can be fed to the Model Optimizer. It’s important to first save the Keras model as doing so automatically takes care of some freezing, especially for normalization layers.

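import keras
from keras import backend as K
from tensorflow.python.framework import graph_util
from tensorflow.python.framework import graph_io

model.save("model.h5")
K.clear_session()
K.set_learning_phase(0) # Inference mode, so layers like batch norm use their stored statistics
model = keras.models.load_model("model.h5")
sess = K.get_session()
output_names = [model.output.op.name] # Name of the graph's output node (the final softmax)
constant_graph = graph_util.convert_variables_to_constants(sess, sess.graph.as_graph_def(), output_names)
graph_io.write_graph(constant_graph, "output", "model.pb", as_text=False) # Writes output/model.pb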

This final “model.pb” file will be sent to the Model Optimizer in the inference application.

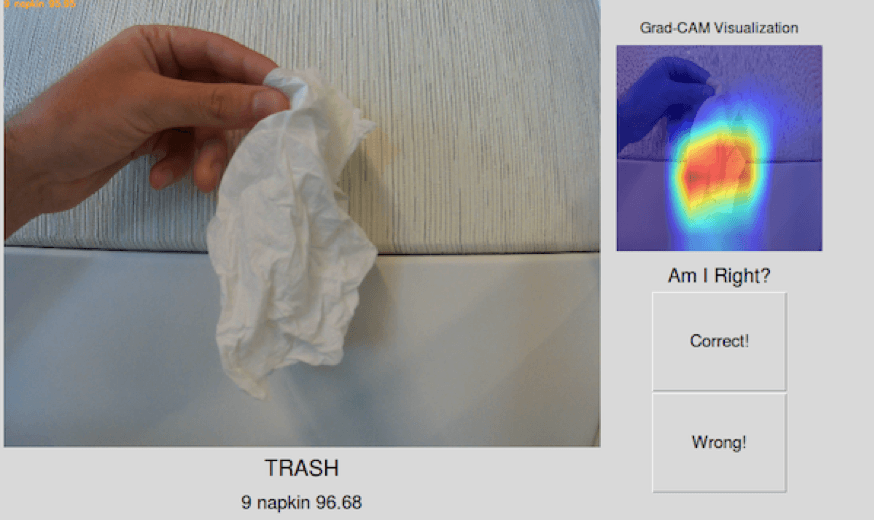

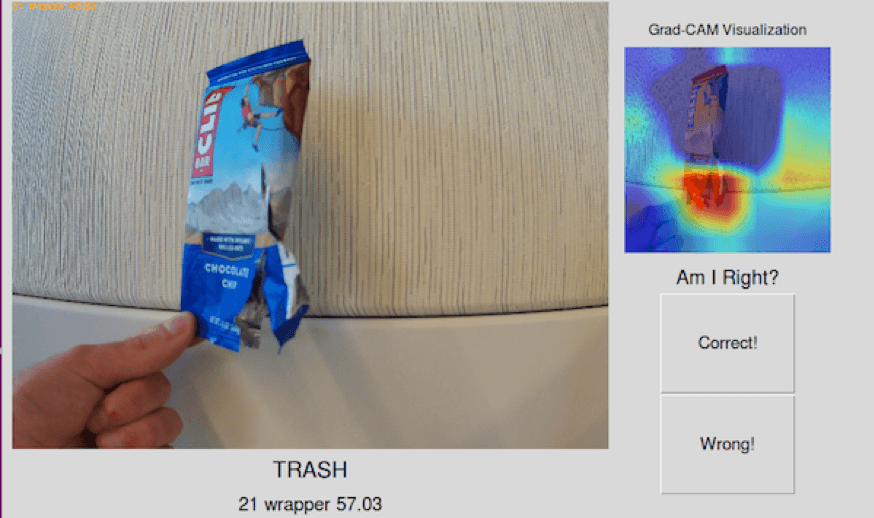

Creating a Native Threaded Application for Inference

Ultimately, I wanted my DeepLens to accomplish the eco-friendly goal of further improving recycling habits at the Intel office. Using a simple UI system, whose backend takes a nod from the default DeepLens image-classification lambda function, I could display to users which bin to place their waste in and allow them to provide corrections to the model for additional data collection. To do this, I used the Tkinter Python package along with Python’s built-in threading package.

Threads can be made easily in Python by extending the Thread class with the following format that overrides the constructor and defines the run function:

from threading import Thread

class MyThread(Thread):
    def __init__(self): # more params?
        Thread.__init__(self)
        # Finish initialization ...

    def run(self):
        pass # Do the thread's action.
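A slightly fuller, self-contained sketch of this pattern is shown below, including one way to stop a looping thread from the outside. It uses a threading.Event as the stop signal, which is one common option among several (a shared flag works too); the class name and the iteration counter are placeholders.

```python
import time
from threading import Event, Thread

class LoopingThread(Thread):
    def __init__(self, stop_event):
        Thread.__init__(self)
        self.stop_event = stop_event
        self.iterations = 0

    def run(self):
        # Keep working until someone outside the thread sets the event.
        while not self.stop_event.is_set():
            self.iterations += 1
            time.sleep(0.01)  # stand-in for real work, e.g. one inference pass

stop_event = Event()
worker = LoopingThread(stop_event)
worker.start()

time.sleep(0.1)      # let the loop run for a moment
stop_event.set()     # ask the thread to finish its current pass and exit
worker.join()        # wait until it has actually stopped
print(worker.iterations, worker.is_alive())
```

Calling join after setting the event makes sure the thread has really finished before the application exits.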

It’s important to use threading to speed up the execution of the program as it allows for asynchronous actions. Remember that if you use an infinite loop within a thread, you need a way to stop it externally to get the application to quit properly. There were two principal threads outside of the main application: one handled inference via the Model Optimizer, and the other saved images. The thread for inference borrows heavily from the Model Optimizer code we looked at before.

class InferenceThread(Thread):
    def __init__(self, config):
        Thread.__init__(self)
        self.config = config

    def run(self):
        global RUN_THREADS, inference_package
        # Get params (model_name, input_width, etc.) from the config dictionary
        error, model_path = mo.optimize(model_name, input_width, input_height, 'tf', aux_inputs=aux_inputs)
        model = awscam.Model(model_path, {"GPU" : 1}) # Load the model to GPU
        # TODO: load the labels for your classes here. Mine are saved in a text file.
        while RUN_THREADS:
            ret, frame = awscam.getLastFrame() # Get a video frame
            if not ret:
                raise Exception("Failed to get frame from the stream")
            # Crop / Resize frame to fit model input requirement
            frame = center_crop_to_ratio(frame) # Depends on your training data, mine was 4:3 aspect ratio
            frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB) # AWSCAM outputs in BGR, Keras uses RGB input
            processed_frame = preprocess_image(frame) # Same function as in training
            # Run inference on the frame
            inferOutput = model.doInference(processed_frame)
            top_result = model.parseResult("classification", inferOutput)["classification"][0]
            # Use the inference results to make a global inference package with the image, label, probability etc.
            # This is one place where I have a lot more code. You can also setup autocapture for high probability images.
            inference_package = {"frame": frame} # ... plus label, probability, etc.
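Turning the parsed output into a readable label might look like the sketch below. The structure of the parsed result (a list of dictionaries with label and prob keys) is an assumption here and should be checked against the DeepLens documentation; the label names, the sample values, and the helper name are made up. The helper picks the maximum explicitly rather than relying on the order of the results.

```python
def top_prediction(parsed_results, labels):
    """Return (label name, probability) for the most likely class."""
    best = max(parsed_results, key=lambda result: result["prob"])
    return labels[best["label"]], best["prob"]

# Hypothetical labels, e.g. read from a text file with one class per line.
labels = ["compost", "recycle", "trash"]

# Hand-written stand-in for model.parseResult("classification", ...)["classification"].
parsed = [
    {"label": 0, "prob": 0.10},
    {"label": 1, "prob": 0.85},
    {"label": 2, "prob": 0.05},
]

name, prob = top_prediction(parsed, labels)
print(name, prob)
```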

This thread will first create an optimized model with the Model Optimizer, and then will constantly pull frames from the DeepLens camera, run inference, and publish the frames / output to a globally scoped variable which the other threads will be able to pull from. The image saving thread is a lot simpler. It simply accepts an image path and saves the most recent image from the global inference package.

class ImageWriter(Thread):
    def __init__(self, file_path):
        Thread.__init__(self)
        self.file_path = file_path

    def run(self):
        # The package holds RGB frames, while OpenCV writes BGR
        cv2.imwrite(self.file_path, cv2.cvtColor(inference_package["frame"], cv2.COLOR_RGB2BGR))
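Since one thread replaces the global package while others read it, it can help to guard the hand-off with a lock. The sketch below shows one option rather than the app's actual code: publish and latest are hypothetical helpers, and a string stands in for the camera frame.

```python
import threading

_package_lock = threading.Lock()
inference_package = None

def publish(frame, label, prob):
    """Called by the inference thread to replace the shared package."""
    global inference_package
    package = {"frame": frame, "label": label, "prob": prob}
    with _package_lock:
        inference_package = package

def latest():
    """Called by the UI or writer threads to read the current package."""
    with _package_lock:
        return inference_package

producer = threading.Thread(target=publish, args=("frame-1", "recycle", 0.9))
producer.start()
producer.join()
print(latest())
```

Building the whole dictionary first and swapping it in one step means readers never see a half-updated package.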

Both of these threads are dispatched and controlled by a basic Tkinter app running in the root thread that contains all of the UI. The app had the following template:

from PIL import Image
from PIL import ImageTk
import Tkinter as tk

class Application:
    def __init__(self, config):
        self.config = config
        self.root = tk.Tk() # Initialize tk
        self.panel = tk.Label(self.root) # Create the panel for the image to be displayed
        button_frame = tk.Frame(self.root) # Container for the feedback buttons
        btn_correct = tk.Button(button_frame, text="Correct!", command=self.save_correct) # ... more options
        btn_incorrect = tk.Button(button_frame, text="Incorrect!", command=self.save_incorrect) # ... more options
        # More UI setup in here... I have a lot more stuff!
        self.root.wm_protocol("WM_DELETE_WINDOW", self.on_close)
        self.inference_thread = InferenceThread(self.config) # Create Inference Thread
        self.inference_thread.start()
        self.video_loop()

    def video_loop(self):
        # This function runs constantly on the main thread after initialization
        global inference_package # Get information from the inference thread to display
        if inference_package is not None:
            img = Image.fromarray(inference_package["frame"])
            imgtk = ImageTk.PhotoImage(image=img)
            # Update UI with other information from inference package
            self.panel.imgtk = imgtk # anchor the image so it isn't garbage collected
            self.panel.config(image=imgtk)
        self.root.after(50, self.video_loop) # Schedule the next update in 50 ms

    def save_correct(self):
        writer_thread = ImageWriter(correct_file_path)
        writer_thread.start()

    def save_incorrect(self):
        writer_thread = ImageWriter(incorrect_file_path)
        writer_thread.start()

    def on_close(self):
        global RUN_THREADS
        RUN_THREADS = False # Stop thread loops
        self.root.quit()

Following the template code, you should be able to create a basic GUI that achieves a fairly high framerate (~12-15 FPS), made possible by the DeepLens’ special acceleration using the OpenVINO toolkit. Though you could stop here, I decided to implement a few extra features. For example, my app has an autocapture feature that automatically saves high-probability images to the appropriate folder on the included DeepLens SD card.
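The autocapture decision itself is simple to sketch. Everything specific here is an assumption for illustration: the 0.9 threshold, the folder layout, and the function name are not taken from the app.

```python
import os
import time

def autocapture_path(label, prob, base_dir, threshold=0.9, now=None):
    """Return a file path for a high-probability frame, or None to skip it."""
    if prob < threshold:
        return None
    timestamp = int(time.time() if now is None else now)
    return os.path.join(base_dir, label, "%s_%d.jpg" % (label, timestamp))

print(autocapture_path("recycle", 0.97, "captures", now=1500000000))
print(autocapture_path("recycle", 0.42, "captures", now=1500000000))
```

A non-None path could then be handed to the image writer thread, which keeps the file I/O off the inference loop.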

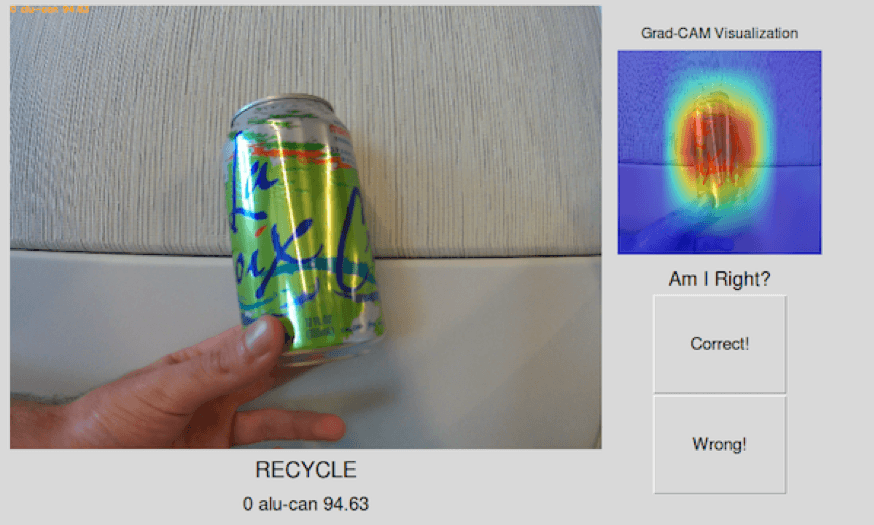

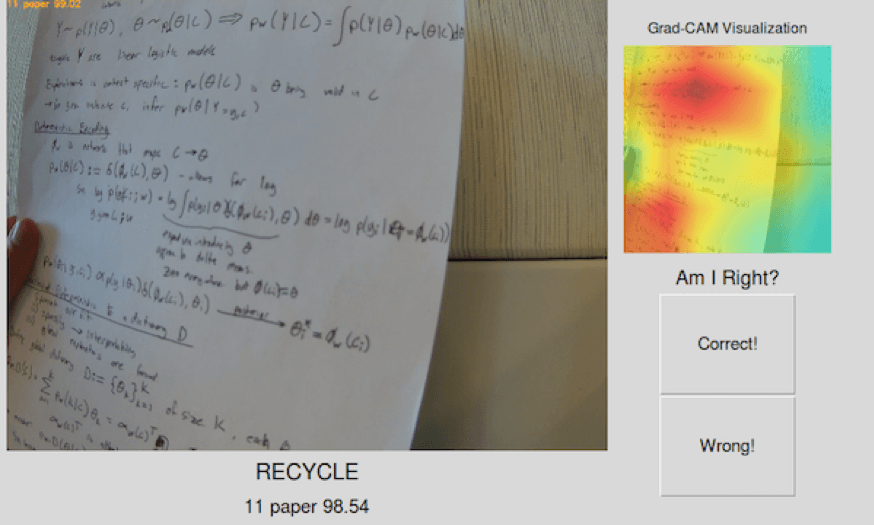

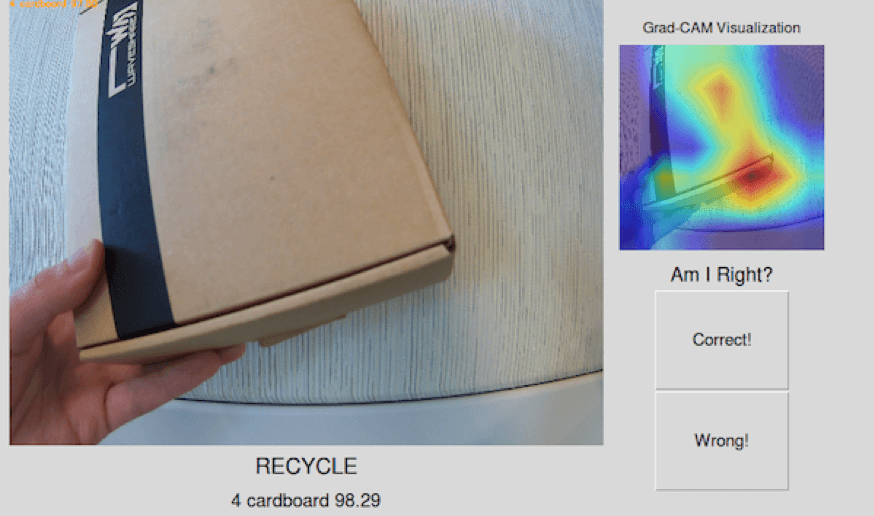

Fun with CNN Visualizations

In order to make sure the model was classifying objects from correct or reasonable image features, I decided to add a GradCam visualization to the app. GradCam takes the gradient of the logits of a target class (in our case the one with the highest probability) with respect to the network’s final convolution layer. This essentially highlights which network activations, and thus which regions of the original image, cause the final classification.
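In the notation of the GradCam paper, where A^k is the k-th feature map of the final convolutional layer, y^c is the score for class c before the softmax, and Z is the number of spatial positions in a feature map, the computation can be written as:

```latex
\alpha_k^c = \frac{1}{Z} \sum_i \sum_j \frac{\partial y^c}{\partial A_{ij}^k},
\qquad
L^c_{\text{Grad-CAM}} = \mathrm{ReLU}\left( \sum_k \alpha_k^c A^k \right)
```

The weights \alpha_k^c are the spatially averaged gradients, and the ReLU keeps only the regions that push the score for class c up.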

The code for GradCam is a little beyond the scope of this tutorial, but you can read more about it in the paper on arXiv. My implementation, which ran in a separate thread in the application, used TensorFlow and graph extensions to add an input for the desired class. Even though I was running both inference and visualization at the same time, the Intel Atom processor was able to handle it. This is the true power of the TensorFlow environment we previously made. It allows us to run custom graph operations locally. With the visualizations, I was able to confirm the model’s accuracy.

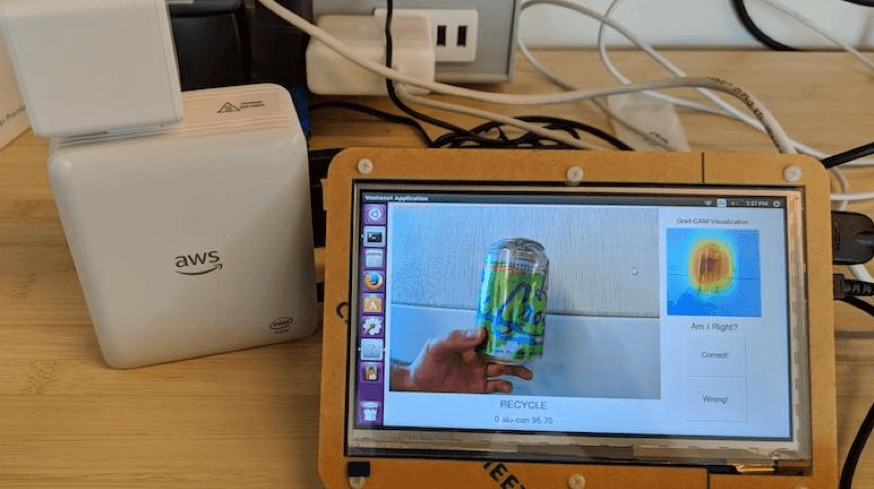

The Final Product

With the model trained and the app coded, the DeepLens becomes a local inference machine. I connected the DeepLens HDMI to a Raspberry Pi HID compliant touch screen monitor that would allow users to provide feedback to the model. If you wish to do something similar, make sure to pick up a powered USB hub, as the DeepLens’ USB ports can’t provide enough current to power most screens. With this setup, the DeepLens becomes the gift that keeps on giving: the inference with the model helps collect more data, which in turn leads to an even better model.

Here are a few more examples of the application in action:

As you can see, with an understanding of the Model Optimizer, you can really unlock the potential of the AWS DeepLens. Models trained anywhere with nearly any framework can combine with GUI programming and custom graph code to make a full-fledged (and optimized) image classification workflow.

Resources for More Depth

This tutorial covered a lot of topics in broad strokes. If you’re interested in learning more about how to create a deep learning workflow, here are more resources I used for the project.

* Intel Model Optimizer Dev Guide
* DeepLens: Custom Image Classification with SageMaker
* DeepLens Developer Guide
* Keras: Transfer Learning
* GradCam Github Repo
* TrashNet Dataset
* Video Streams with CV and Tkinter
* SageMaker with TensorFlow

Notices and Disclaimers

Intel, Intel Atom, Iris, and OpenVINO are trademarks of Intel Corporation or its subsidiaries in the U.S. and/or other countries.

*Other names and brands may be claimed as the property of others.

© Intel Corporation

Mary is the Community Manager for this site. She likes to bike, and do college and career coaching for high school students in her spare time.
