Vasudev Lal is an AI Research Scientist at Intel Labs, where he leads the Multimodal Cognitive AI team. His team develops AI systems that can synthesize concept-level understanding from multiple modalities.

The emergence of the Transformer architecture has disrupted deep learning and AI over the past five years. Transformers were originally developed for natural language processing but are now state-of-the-art across other modalities: vision, audio, video, and multimodal systems. The Multimodal Cognitive AI team at Intel Labs is especially interested in developing multimodal Transformer architectures that synthesize conceptual understanding through self-supervised learning across multiple modalities. Along with collaborators at Microsoft Research, we have previously developed architectures like KD-VLP and BridgeTower that excel on tasks and benchmarks including Visual Question Answering (VQA2.0), Visual Commonsense Reasoning (VCR), and Image/Text Retrieval, among others. Multimodal systems developed by our team were among the winners of the NeurIPS 2022 WebQA competition and currently hold the #1 spot on the public leaderboard for the VisualCOMET task.

It is critical to develop tools and methodologies that help us understand the inner workings of multimodal Transformers and how they bring about concept-level alignment across vision and language modalities. How does the attention mechanism in these Transformers bring about multimodal fusion? How does high-level objectness emerge in these Transformers from low-level perception? How are similar concepts in different modalities mapped to proximate representations? A thorough understanding of these and other phenomena is vital for understanding the limitations of current architectures and for guiding the development of better multimodal architecture designs in the future. To contribute to this quest, we developed VL-InterpreT, an interactive tool that provides novel visualizations and analysis for interpreting the attentions and hidden representations in multimodal Transformers. Our paper on VL-InterpreT won the Best Demo Award at CVPR 2022. A screencast of the system, the paper, and our open-sourced implementation are available at the Intel Labs GitHub.

VL-InterpreT helps visualize how the image-to-text component of the Transformer’s attention varies as the Transformer considers individual words. For example, in the gif below, the visual attention in the image is on the horses at the beginning of the sentence, but it then shifts to follow the subject of the text: for the word “someone”, it moves to the person standing on top of the golden ball, and so on. This shows the impressive ability of the multimodal Transformer to ground the word “someone” in the text to the person in the image standing on top of the ball.

In the example below, for an image taken at the CVPR venue, the image regions covering the column attend heavily to the phrase “a large column” in the text. This shows how the attention components capture conceptual alignment across modalities: the image representation of the column contributes heavily to the updated representations of the textual phrase. This is how multimodal attention in Transformers carries out multimodal fusion.
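
To make the notion of attention "components" concrete, here is a minimal sketch of how a single joint attention matrix could be split into cross-modal and within-modality blocks. It is not taken from the VL-InterpreT code base. It assumes a hypothetical layout in which rows are query tokens, columns are key tokens, and the text tokens come first, followed by the image patches. The function names and the block names are illustrative only.

```python
from typing import Dict, List

Matrix = List[List[float]]


def split_attention(attn: Matrix, num_text: int) -> Dict[str, Matrix]:
    """Split a (T+I) x (T+I) attention matrix into four modality blocks.

    Assumed layout (hypothetical): rows are query tokens, columns are key
    tokens, and the first `num_text` positions are text tokens, followed
    by image patches.
    """
    n = len(attn)
    if any(len(row) != n for row in attn):
        raise ValueError("attention matrix must be square")
    if not 0 < num_text < n:
        raise ValueError("num_text must leave at least one token per modality")
    text_rows, image_rows = attn[:num_text], attn[num_text:]
    return {
        "text_query_text_key": [row[:num_text] for row in text_rows],
        "text_query_image_key": [row[num_text:] for row in text_rows],
        "image_query_text_key": [row[:num_text] for row in image_rows],
        "image_query_image_key": [row[num_text:] for row in image_rows],
    }


def cross_modal_mass(attn: Matrix, num_text: int) -> List[float]:
    """Fraction of each text query's attention that lands on image patches."""
    blocks = split_attention(attn, num_text)
    return [sum(row) for row in blocks["text_query_image_key"]]


# Toy example: 2 text tokens followed by 3 image patches; each row sums to 1.
toy_attn = [
    [0.6, 0.2, 0.1, 0.05, 0.05],
    [0.1, 0.1, 0.6, 0.1, 0.1],
    [0.2, 0.2, 0.2, 0.2, 0.2],
    [0.0, 0.5, 0.25, 0.25, 0.0],
    [0.1, 0.0, 0.3, 0.3, 0.3],
]
blocks = split_attention(toy_attn, num_text=2)
mass = cross_modal_mass(toy_attn, num_text=2)
```

In this toy matrix the second text token places most of its attention on image patches, which is the kind of pattern that indicates the image is contributing strongly to that token's updated representation.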

We can also see how the concept of objectness emerges within deeper layers. We can monitor the average image-to-text component of the attention to the word ‘ball’ at each layer. In the early layers of the model, the attention is diffused across the image. However, as the tokens are propagated to the deeper layers of the Transformer, the attention starts focusing on the region of the image containing the ball. This shows the emergence of high-level objectness in the deeper layers.
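
One way to put a number on "diffuse" versus "focused" attention is to average the heads in each layer and compute the entropy of the resulting distribution over image patches. The sketch below is an illustration under stated assumptions, not the metric used by VL-InterpreT: the per-layer, per-head attention values are made-up toy data, and the helper names are hypothetical. Lower entropy means the attention is concentrated on fewer patches.

```python
import math
from typing import List


def attention_entropy(weights: List[float]) -> float:
    """Shannon entropy (in nats) of one attention distribution over patches."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must have a positive total")
    probs = [w / total for w in weights]
    return -sum(p * math.log(p) for p in probs if p > 0)


def mean_over_heads(per_head: List[List[float]]) -> List[float]:
    """Average the attention of several heads, patch by patch."""
    num_heads = len(per_head)
    return [sum(col) / num_heads for col in zip(*per_head)]


# Toy data (not real model output): attention from the token "ball"
# to 4 image patches, with 2 heads per layer.
layers = [
    [[0.25, 0.25, 0.25, 0.25], [0.25, 0.25, 0.25, 0.25]],  # early: diffuse
    [[0.10, 0.60, 0.20, 0.10], [0.20, 0.40, 0.20, 0.20]],  # middle
    [[0.00, 1.00, 0.00, 0.00], [0.02, 0.94, 0.02, 0.02]],  # deep: focused
]
entropies = [attention_entropy(mean_over_heads(heads)) for heads in layers]
```

With this toy data the entropy falls from layer to layer, which mirrors the qualitative pattern described above: attention spreads over the whole image early on and concentrates on one region in deeper layers.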

VL-InterpreT can also be used to study pure vision-to-vision attention. In this example, patches belonging to the same object class (the two dogs, the background grass) attend to a single patch of the same object.

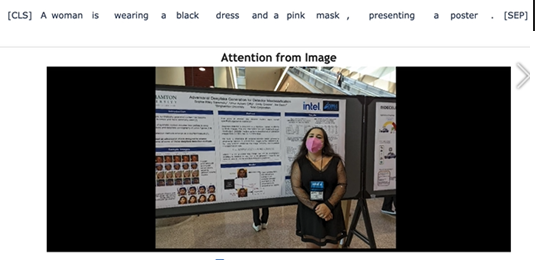

Apart from visualizations and summary statistics on the four components of attention in multimodal Transformers, VL-InterpreT also allows probing of the hidden state representations of image and text tokens as they propagate through all layers of the Transformer. The following figure shows how the representations of image patches and text tokens corresponding to the same concept are brought into proximity in deeper layers of the Transformer. The high-dimensional hidden state representations are projected to 2D using t-SNE for this visualization. Hovering over a text token (red points) displays the corresponding word. Hovering over an image token (blue points) displays the image with that image patch highlighted in blue. The gif shows how the representations of the text tokens (describing the researcher standing next to the poster) are proximate to the representations of the image patches of the researcher. The representations of other parts of the image are further away from these text tokens.
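
The proximity that the t-SNE plot shows visually can also be checked numerically in the original high-dimensional space. The sketch below does not use t-SNE and is not part of VL-InterpreT. It swaps in plain cosine similarity as a simple stand-in, and the three-dimensional vectors are made-up toy values standing in for real hidden states. The function names are hypothetical.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two hidden-state vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def nearest_patch(text_vec: Sequence[float],
                  patch_vecs: List[Sequence[float]]) -> int:
    """Index of the image patch whose representation is closest to the text token."""
    sims = [cosine_similarity(text_vec, patch) for patch in patch_vecs]
    return max(range(len(sims)), key=sims.__getitem__)


# Toy hidden states (illustrative values only).
text_vec = [1.0, 0.0, 0.0]      # stands in for a text token's hidden state
patch_vecs = [
    [0.0, 1.0, 0.0],            # unrelated image patch
    [0.9, 0.1, 0.0],            # patch depicting the same concept
    [0.0, 0.0, 1.0],            # another unrelated patch
]
best = nearest_patch(text_vec, patch_vecs)
```

Repeating such a check with hidden states taken from each layer would indicate at which depth a text token and its matching image patches start to move close together, complementing the 2D view.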

VL-InterpreT allows for interactive analysis of attention and hidden representations in each layer of any multimodal Transformer. It can be used to freely explore the interactions between and within different modalities, to better understand the inner mechanisms of a Transformer model, and to obtain insight into why certain predictions are made. We hope that this kind of analysis helps us, and other researchers, gain greater insight into the workings of Transformer models and helps drive further improvements to these architectures.

Vasudev is an AI Research Scientist at Intel Labs, where he leads the Multimodal Cognitive AI team. His team develops AI systems that can synthesize concept-level understanding from multiple modalities: vision, language, video, and audio. His current research interests include equipping deep learning with mechanisms to inject external knowledge; self-supervised training at scale for continuous and high-dimensional modalities like images, video, and audio; and mechanisms to combine deep learning with symbolic compute. Prior to joining Intel, Vasudev obtained his PhD in Electrical and Computer Engineering from the University of Michigan, Ann Arbor.
