Introduction

Machine learning has enabled groundbreaking advances in medical research. This paper discusses how the latest generation of Intel processors can be used in the Amazon Web Services (AWS) public cloud and in on-premises private environments to make new discoveries with advanced machine learning. We demonstrate how to use publicly available bone marrow data to efficiently train machine learning models using the latest technologies from Amazon and Intel.

Solution Overview

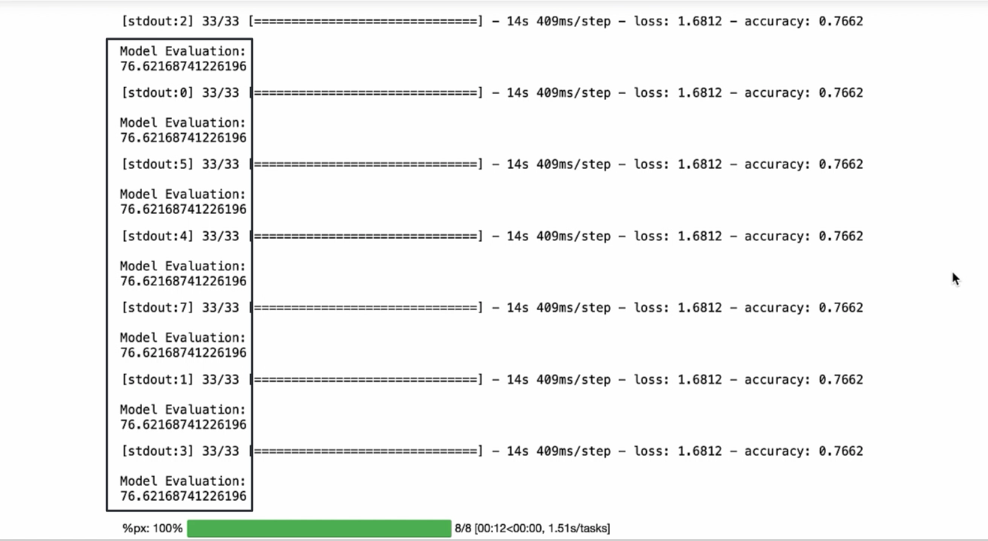

This paper discusses a real-world use case in which we use advanced artificial intelligence technologies to train a bone marrow cell classification model on publicly available data. The trained model is then optimized for inference with Intel OpenVINO. Next, inference performance is evaluated by comparing the previous-generation Intel Cascade Lake processor with the latest-generation Intel Ice Lake processor microarchitecture. Finally, we review the flexibility of Amazon Elastic Kubernetes Service (EKS) and EKS Anywhere as Kubernetes-based container orchestration platforms for the artificial intelligence software components discussed herein.

Cloud Context

Amazon Web Services (AWS) is the world’s most comprehensive and broadly adopted cloud platform. AWS helps builders create highly functional, secure, resilient, cost-efficient, and flexible application environments. Customers do not need to concern themselves with non-differentiating details of the infrastructure. Although the cloud abstracts the hardware and exposes the customizable software features that are important for your business, an understanding of the physical components is important in choosing optimal design patterns.

Hardware engineers want their products to deliver maximal performance to software. Likewise, software engineers seek to optimize their code to extract maximum efficiency from the hardware. When consuming cloud services in a hybrid model, cloud infrastructure architects need to consider the features of both hardware and software. An efficient IT infrastructure can realize the full potential of both. The hybrid application solution discussed in this white paper is a real-world example of combining Intel technologies with AWS services to accelerate the training and inference of bone marrow cell classification models: training runs on Intel Habana Gaudi accelerators, and inference runs on Intel’s next-generation Ice Lake Xeon processors.

Solution Components:

Intel Ice Lake Processors:

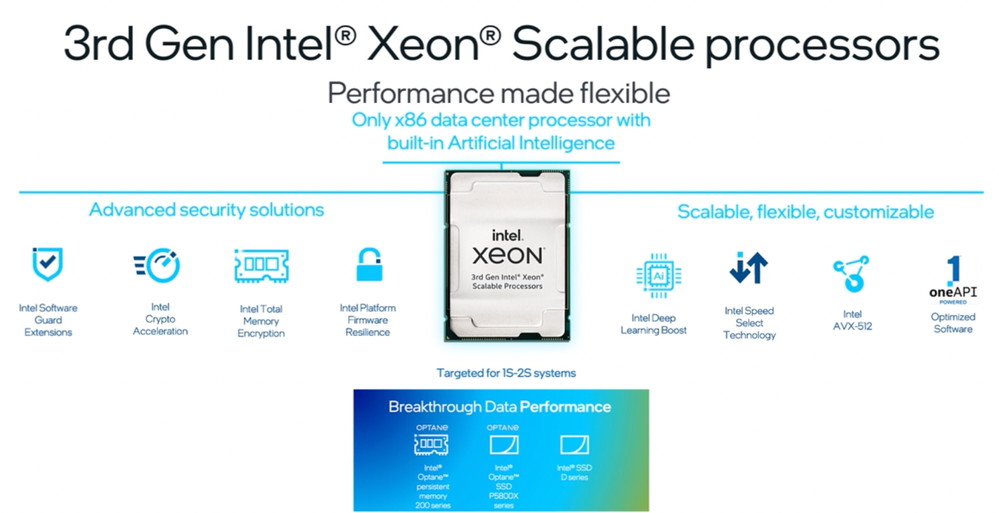

3rd Generation Intel Xeon Scalable processors (code-named Ice Lake) are optimized for cloud, enterprise, HPC, network, security, and IoT workloads, with 8 to 40 powerful cores and a wide range of frequency, feature, and power levels. Intel positions them as the only data center CPUs with built-in AI acceleration, end-to-end data science tools, and an ecosystem of smart solutions. Intel’s ubiquity in both public clouds and on-premises data centers makes these processors well suited to hybrid cloud environments.

Intel Ice Lake processors have Intel Deep Learning Boost (Intel DL Boost) acceleration built in, which provides the flexibility to run complex AI workloads on the same hardware as your existing workloads. In addition, these processors support INT8 inference using Vector Neural Network Instructions (VNNI), which enhances inference workloads through improved cache utilization and reduced memory-bandwidth bottlenecks.

Figure 1: Intel 3rd generation Ice Lake instances provide flexible performance with many added capabilities
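To make the INT8 idea concrete, here is a minimal pure-Python sketch of symmetric per-tensor INT8 quantization followed by an integer dot product that accumulates into a wide integer. This multiply-accumulate pattern is what the AVX-512 VNNI instruction `VPDPBUSD` fuses in hardware. The scale choice (largest absolute value divided by 127) is one common scheme and an assumption here, not necessarily what OpenVINO or the processor applies. Real VNNI also multiplies unsigned by signed bytes in vector registers, which this signed scalar loop only imitates.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: q = round(x / scale), clipped to [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def int8_dot(qa: List[int], qb: List[int]) -> int:
    """Multiply 8-bit values pairwise and accumulate into a wide integer sum.

    Each product fits in 16 bits; hardware keeps the running sum in 32-bit
    lanes, which is the multiply-accumulate pattern VNNI fuses into one instruction.
    """
    acc = 0
    for a, b in zip(qa, qb):
        acc += a * b
    return acc


activations = [0.5, -1.2, 3.3, 0.0]
weights = [0.25, 0.75, -0.5, 1.0]

qa, sa = quantize_int8(activations)
qw, sw = quantize_int8(weights)

approx = int8_dot(qa, qw) * sa * sw   # rescale the integer result back to real units
exact = sum(a * w for a, w in zip(activations, weights))
print(f"float result: {exact:.4f}, INT8 result: {approx:.4f}")
```

The INT8 result differs slightly from the floating-point one; that small accuracy cost is traded for moving a quarter of the data of FP32 and doing more operations per instruction.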

Amazon Elastic Kubernetes Service

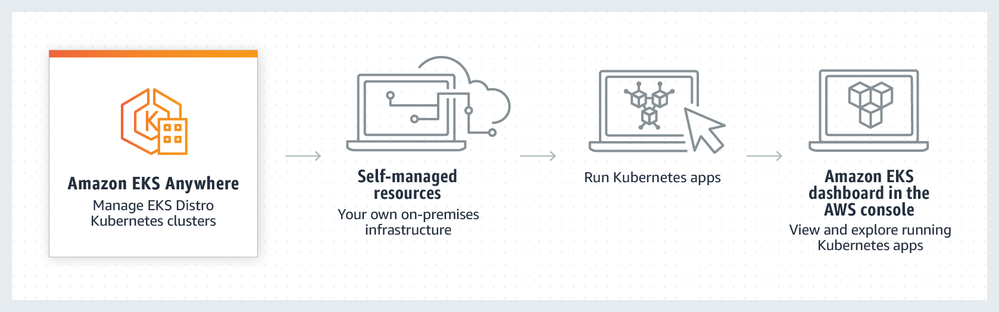

Amazon Elastic Kubernetes Service (Amazon EKS) is a managed container service to run and scale Kubernetes applications in the cloud. EKS Anywhere is an open-source deployment option for Amazon EKS that allows customers to create and operate Kubernetes clusters on-premises, with optional support offered by AWS. EKS Anywhere supports deployment on bare metal hardware as well as on VMware vSphere.

Amazon EKS Anywhere simplifies the creation and operation of on-premises Kubernetes clusters with default component configurations while providing tools for automating cluster management.

Figure 2: EKS Anywhere brings the Amazon EKS service on-premises

Amazon EKS Anywhere gives you on-premises Kubernetes operational tooling consistent with that available in Amazon EKS. EKS Anywhere builds on the strengths of Amazon EKS Distro, Amazon’s open-source distribution of Kubernetes. This lets you quickly deploy clusters while AWS manages the testing and tracking of updates, dependencies, and patches for Kubernetes. You can create Amazon EKS clusters in AWS and on your own on-premises hardware using the tooling of your choice.

Hybrid Cloud

Hybrid cloud is a relatively recent model in which applications run across a combination of cloud environments. Hybrid cloud computing is often a necessity because some companies are averse to relying entirely on the public cloud, and many organizations have significant capital investments in their on-premises infrastructure. The most common example of hybrid cloud is deploying a workload across both a public and a private cloud environment; for example, an on-premises data center using VMware vSphere that is integrated with other components hosted in AWS.

AWS DL1 EC2 Instances

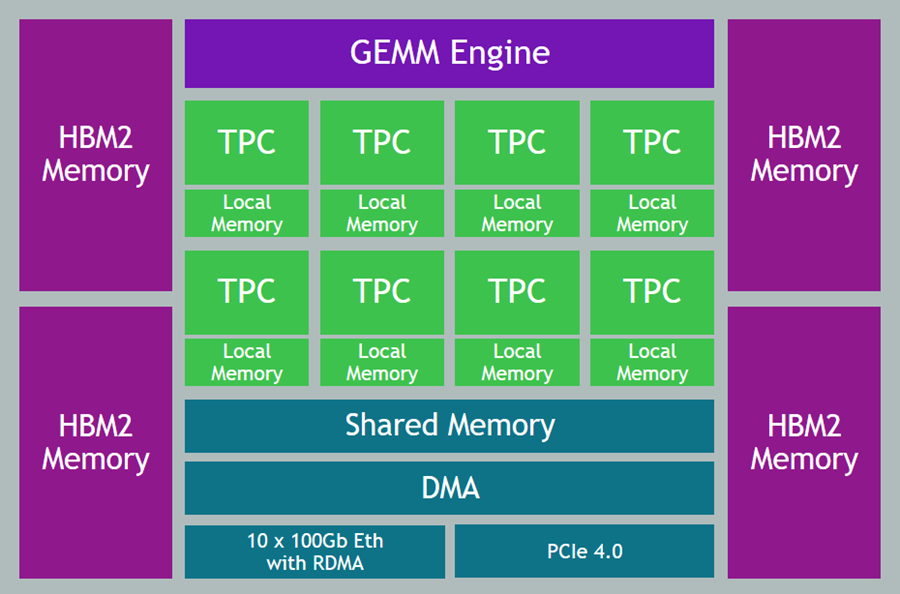

Amazon Elastic Compute Cloud (EC2) DL1 instances, powered by Gaudi accelerators from Habana Labs (an Intel company), deliver a low cost to train deep learning models for natural language processing, object detection, and image recognition use cases. According to AWS, DL1 instances provide up to 40% better price performance for training deep learning models than current-generation GPU-based EC2 instances.

Amazon EC2 DL1 instances feature eight Gaudi accelerators with 32 GiB of high bandwidth memory (HBM) per accelerator, 768 GiB of system memory, custom 2nd generation Intel Xeon Scalable processors, 400 Gbps of networking throughput, and 4 TB of local NVMe storage. DL1 instances include the Habana SynapseAI® SDK, which is integrated with leading machine learning frameworks such as TensorFlow and PyTorch. (Amazon EC2 DL1 Instances, n.d.)

Figure 3: AWS DL1 instances, with eight Gaudi accelerators, are well suited for deep learning training

Benefits of DL1 Instances

- Cost: AWS DL1 compute instances deliver up to 40% better price performance for training deep learning models than current-generation GPU-based EC2 instances. These instances feature Gaudi accelerators that are purpose-built for training deep learning models. You can further reduce training costs by using an EC2 Savings Plan or a Reserved Instance.

- Ease of Use: Developers at all levels of expertise can get started easily on AWS DL1 deep learning compute instances. They can continue to use their own workflow management services by using AWS Deep Learning Amazon Machine Images (AMIs) and Deep Learning Containers to get started on DL1 instances. Advanced users can also build custom kernels to optimize model performance using Gaudi’s programmable Tensor Processing Cores (TPCs). Using Habana SynapseAI® tools, customers can migrate existing models running on GPU- or CPU-based instances to DL1 compute instances with minimal code changes.

- Leading ML frameworks and models supported: DL1 compute instances support leading machine learning (ML) frameworks such as TensorFlow and PyTorch, enabling you to continue using your preferred ML workflows. You can access optimized models such as Mask R-CNN for object detection and BERT for natural language processing on Habana’s GitHub repository to quickly build, train, and deploy your models. SynapseAI’s rich Tensor Processing Core (TPC) kernel library supports a wide variety of operators and multiple data types for a range of model and performance needs.

Features

- Powered by Gaudi Accelerators: DL1 instances are powered by Gaudi accelerators from Habana Labs (an Intel company), each featuring eight fully programmable TPCs and 32 GiB of high bandwidth memory. The accelerators have a heterogeneous compute architecture to maximize training efficiency and a configurable centralized engine for matrix-math operations. Each Gaudi accelerator also natively integrates ten 100 Gigabit Ethernet ports, which Habana describes as unique in the industry, for low-latency communication between accelerators.

- High Performance Networking and Storage: DL1 instances offer 400 Gbps of networking throughput and connectivity to Amazon Elastic Fabric Adapter (EFA) and Amazon Elastic Network Adapter (ENA) for applications that need access to high-speed networking. For fast access to large datasets, DL1 instances also include 4 TB of local NVMe storage and deliver 8 GB/sec read throughput.

- Habana SynapseAI® SDK: The SynapseAI® SDK is composed of a graph compiler and runtime, TPC kernel library, firmware, drivers, and tools. The SDK is integrated with leading frameworks such as TensorFlow and PyTorch. Using SynapseAI® tools, you can seamlessly migrate and run your existing models onto DL1 instances with minimal code changes.

…

Phase 1: Training with DL1 instances:

AWS Deep Learning AMIs (DLAMI) and AWS Deep Learning Containers (DLC) provide data scientists, ML practitioners, and researchers with machine and container images that are pre-installed with deep learning frameworks to make it easy to get started. This lets you skip the complicated process of building and optimizing your software environments from scratch. The SynapseAI SDK for the Gaudi accelerators is integrated into the AWS DL AMIs and DLCs enabling you to quickly get started with DL1 instances. This solution leverages these AMIs for training computer vision models.

Training Infrastructure:

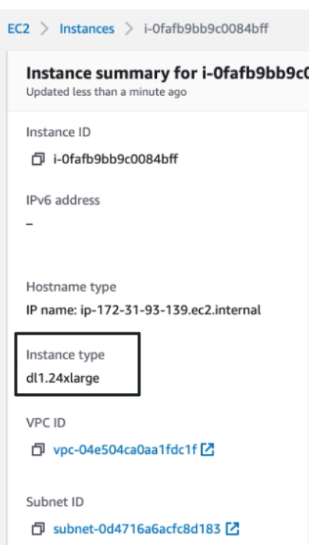

We trained the bone marrow classification model on an Amazon EC2 DL1 compute instance, shown below.

Figure 4: AWS DL1 training compute instance

The instance has eight Intel Habana Gaudi accelerators, each with 32 GiB of high bandwidth memory.

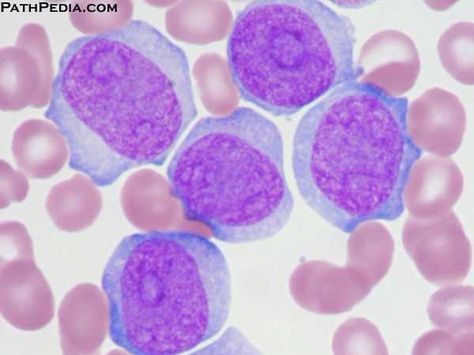

The Problem: Bone Marrow Cell Classification

A bone marrow biopsy is a procedure to collect and examine bone marrow, the spongy tissue inside some larger bones. The biopsy can determine whether the bone marrow is healthy and producing normal amounts of blood cells. Doctors use these procedures to diagnose and monitor blood and marrow diseases, different types of cancer, and fevers of unknown origin. We used the publicly available Kaggle bone marrow cell classification dataset to train machine learning models to reproduce the expert cell classifications recorded in the dataset.

Figure 5: Acute Monoblastic Leukemia cells (AML-M5a)

The Kaggle bone marrow cell classification dataset contains a collection of over 170,000 de-identified, expert-annotated cells from the bone marrow smears of 945 patients stained using the May-Grünwald-Giemsa/Pappenheim stain. The diagnosis distribution in the cohort included a variety of hematological diseases reflective of the sample entry of a large laboratory specialized in leukemia diagnostics. (Matek, Krappe, Münzenmayer, Haferlach, & Marr, An Expert-Annotated Dataset of Bone Marrow Cytology in Hematologic Malignancies, 2021) (Matek, Krappe, Münzenmayer, Haferlach, & Marr, Highly accurate differentiation of bone marrow cell morphologies using deep neural networks on a large image dataset, 2021) (Bone Marrow Cell Classification [Data Set], 2022)

All samples were processed at the Munich Leukemia Laboratory (MLL) using equipment developed at Fraunhofer IIS, and post-processed using software developed at Helmholtz Munich. The dataset contains 21 cell classes, and we trained the model to classify each image into one of these categories.

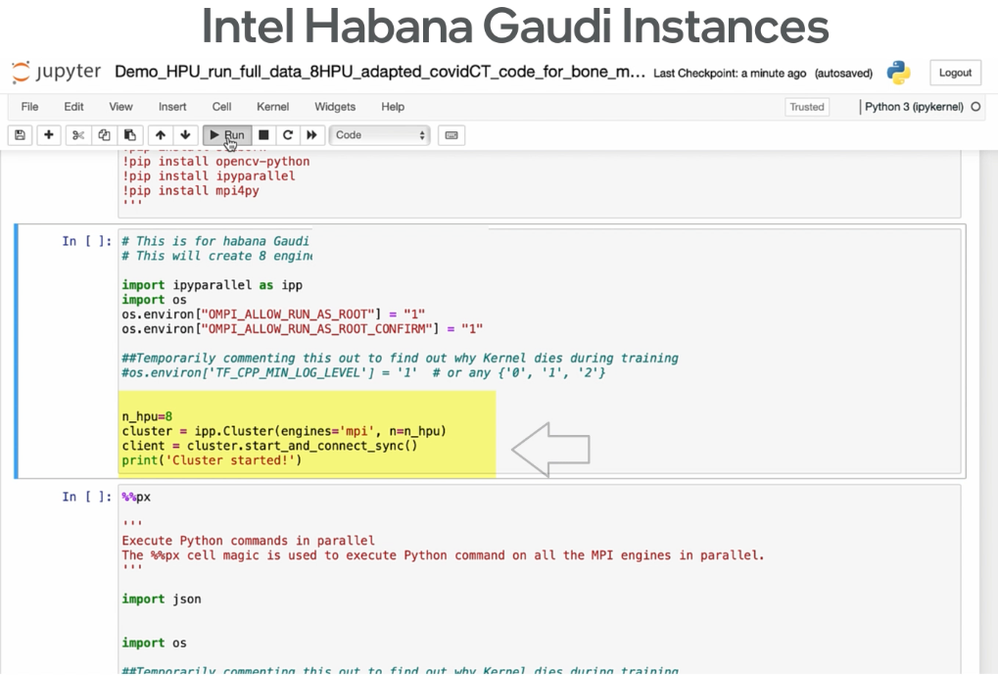

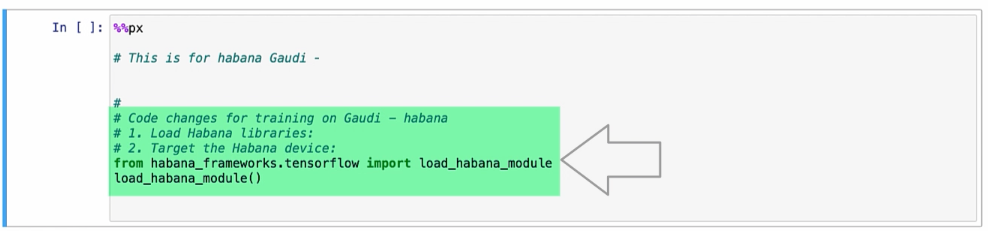

Changes needed to use Intel Habana Gaudi Instances:

Publicly available models can be easily adapted for training on Amazon DL1 compute instances. The AWS Deep Learning GPU AMI with TensorFlow 2.7 is available in the AWS AMI library (Deep Learning AMI Developer Guide, n.d.), and the Docker image associated with the AMI is also readily available (aws / deep-learning-containers, 2022). Only a few lines of code are needed to enable training on Habana Gaudi accelerators. An example from a Jupyter notebook in Amazon SageMaker is shown below:

Figure 6: Minor code changes needed to train on DL1 instances

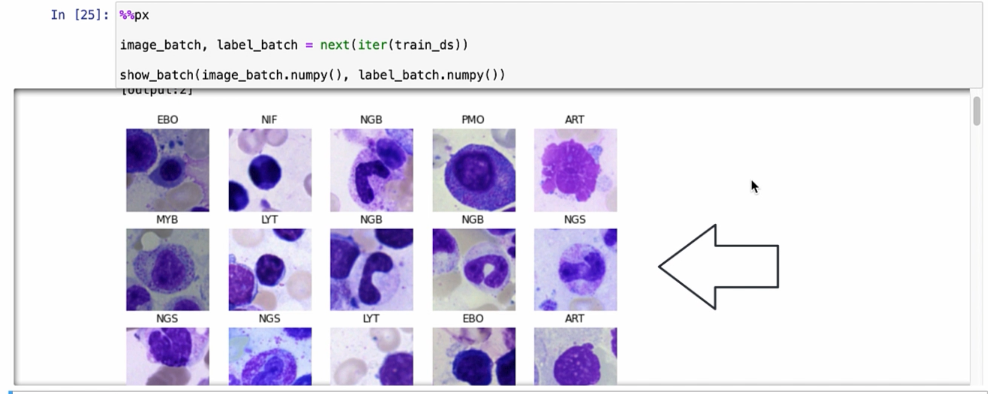
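The exact notebook changes are in the figure. As a hedged sketch of the pattern shown in Habana’s TensorFlow documentation, the main addition is importing `load_habana_module` from the `habana_frameworks.tensorflow` package (installed with SynapseAI on the DL1 AMIs and containers) and calling it before building the model. The availability guard, which falls back to TensorFlow’s default CPU/GPU device when the package is absent, is our own addition so the same notebook also runs elsewhere; the helper name `enable_gaudi_if_available` is hypothetical.

```python
import importlib.util


def enable_gaudi_if_available() -> str:
    """Register Gaudi (HPU) devices with TensorFlow when SynapseAI is installed.

    Hypothetical helper: returns "HPU" on a DL1 instance with the Habana
    TensorFlow integration, otherwise "default" (TensorFlow's usual CPU/GPU).
    """
    if importlib.util.find_spec("habana_frameworks") is None:
        # SynapseAI is not installed, e.g. on a laptop or a non-DL1 instance.
        return "default"
    # Habana's documented entry point: makes the HPU device visible to TensorFlow.
    from habana_frameworks.tensorflow import load_habana_module
    load_habana_module()
    return "HPU"


device = enable_gaudi_if_available()
print(f"TensorFlow will train on: {device}")
# The rest of the training code (tf.keras model definition, model.fit, ...)
# stays the same as in the CPU/GPU version.
```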

Some of the sample bone marrow images used in the training are shown in the Jupyter notebook.

Figure 7: Some of the bone marrow images used in the training

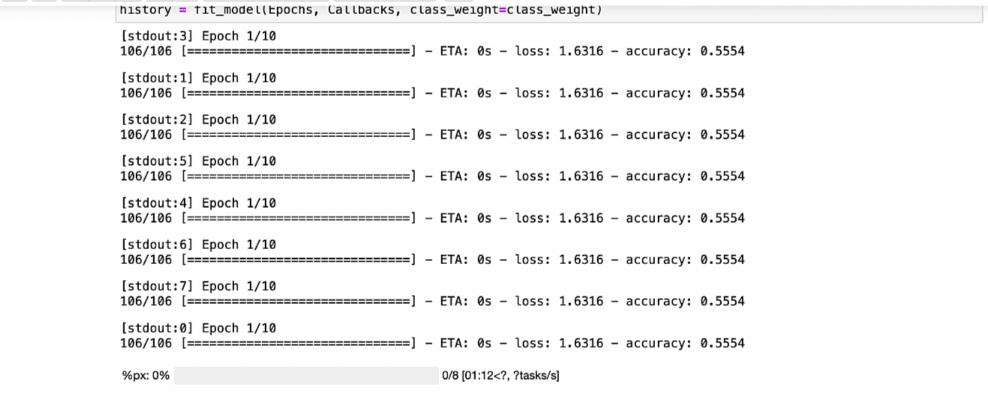

The model is trained in a distributed fashion across the eight Gaudi processors as shown below.

Figure 8: The bone marrow classification model is trained across the eight Intel Habana Gaudi1 processors
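Habana’s TensorFlow integration supports multi-card training (for example through Horovod); the paper does not show that code, and we do not reproduce it here. The underlying idea, synchronous data parallelism, is sketched below in plain Python under simplifying assumptions: each of eight hypothetical workers computes the gradient of a mean-squared-error loss for a one-parameter linear model on its own equal-sized shard of the global batch, and an all-reduce averages those gradients so every replica applies the same update. With equal shard sizes, the averaged gradient equals the full-batch gradient.

```python
from typing import List, Tuple

NUM_WORKERS = 8  # one replica per Gaudi accelerator on a DL1 instance
Sample = Tuple[float, float]


def shard_batch(batch: List[Sample], num_workers: int) -> List[List[Sample]]:
    """Split a global batch into equal, contiguous per-worker shards."""
    size = len(batch) // num_workers
    return [batch[i * size:(i + 1) * size] for i in range(num_workers)]


def local_gradient(w: float, shard: List[Sample]) -> float:
    """Gradient of mean((w*x - y)**2) with respect to w on one worker's shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(grads: List[float]) -> float:
    """Stand-in for an all-reduce: every replica receives the average gradient."""
    return sum(grads) / len(grads)


# Toy data following y = 3x; 32 samples split evenly across 8 workers.
global_batch = [(float(x), 3.0 * x) for x in range(1, 33)]
w, lr = 0.0, 0.001

for _ in range(200):
    shards = shard_batch(global_batch, NUM_WORKERS)
    grads = [local_gradient(w, s) for s in shards]  # runs in parallel on real hardware
    w -= lr * all_reduce_mean(grads)                # same update applied on every replica

print(f"learned w = {w:.4f}")
```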

The model’s initial accuracy was close to 70%. The model was then fine-tuned by adjusting its hyperparameters, which improved accuracy to 76%, as shown.

Figure 9: Accuracy of fine-tuned model
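The paper does not say which hyperparameters were tuned or how. One common approach is a grid search that trains one candidate per combination and keeps the combination with the best validation accuracy (the fraction of images whose predicted class matches the expert label). The sketch below assumes a caller-supplied training-and-evaluation function; the learning rates, batch sizes, and the toy scoring function are hypothetical placeholders, not the settings behind the reported result.

```python
import itertools
import math
from typing import Callable, Dict, List, Sequence, Tuple

Params = Dict[str, float]


def accuracy(predicted: Sequence[int], labels: Sequence[int]) -> float:
    """Top-1 accuracy: share of images whose predicted class index matches the label."""
    return sum(p == y for p, y in zip(predicted, labels)) / len(labels)


def grid_search(train_and_evaluate: Callable[[Params], float],
                grid: Dict[str, List[float]]) -> Tuple[Params, float]:
    """Evaluate every combination in `grid` and return the best one with its score."""
    keys = list(grid)
    best_params: Params = {}
    best_score = float("-inf")
    for values in itertools.product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        score = train_and_evaluate(params)  # e.g. validation accuracy after training
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_train_and_evaluate(params: Params) -> float:
    """Hypothetical stand-in for a real training run; best at lr=1e-3, batch 64."""
    return (-abs(math.log10(params["learning_rate"]) + 3)
            - abs(params["batch_size"] - 64) / 64)


search_space = {"learning_rate": [1e-2, 1e-3, 1e-4], "batch_size": [32, 64, 128]}
best, best_score = grid_search(toy_train_and_evaluate, search_space)
print(best)
print(accuracy([3, 1, 4, 1], [3, 1, 5, 1]))
```

In practice `toy_train_and_evaluate` would be replaced by a function that trains on the DL1 instance and returns `accuracy` on a held-out validation split.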

After the fine-tuning phase is complete, the model can be saved for use in the inference phase. The model files can be packaged and distributed for inference across different edge locations.

Figure 10: Steps leveraged in the proof of concept for the solution

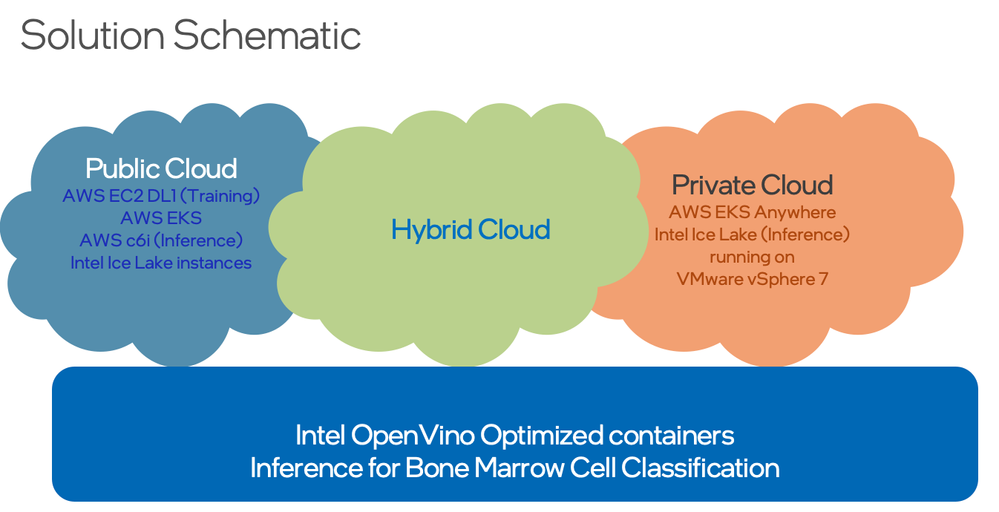
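How the trained model files were packaged for distribution is not described. A minimal sketch, assuming the model has already been exported to a local directory (for example a TensorFlow SavedModel), is to bundle that directory into a compressed archive and publish a SHA-256 checksum so each edge location can verify what it downloaded. The directory, file, and archive names below are hypothetical.

```python
import hashlib
import tarfile
import tempfile
from pathlib import Path


def sha256_of(path: str) -> str:
    """Stream a file through SHA-256 so large model archives are not loaded into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def package_model(model_dir: str, archive_path: str) -> str:
    """Bundle an exported model directory into a .tar.gz and return its checksum."""
    src = Path(model_dir)
    with tarfile.open(archive_path, "w:gz") as tar:
        tar.add(str(src), arcname=src.name)  # keep the top-level folder name in the archive
    return sha256_of(archive_path)


def verify_and_extract(archive_path: str, expected_sha256: str, dest: str) -> None:
    """At an edge site: reject archives whose checksum does not match, then unpack."""
    if sha256_of(archive_path) != expected_sha256:
        raise ValueError("checksum mismatch: archive is corrupted or was modified")
    with tarfile.open(archive_path, "r:gz") as tar:
        if hasattr(tarfile, "data_filter"):
            tar.extractall(dest, filter="data")  # safer extraction on newer Pythons
        else:
            tar.extractall(dest)


# Demo with a placeholder file standing in for real model files (hypothetical names).
with tempfile.TemporaryDirectory() as tmp:
    model_dir = Path(tmp, "bone_marrow_model")
    model_dir.mkdir()
    (model_dir / "saved_model.pb").write_bytes(b"placeholder")
    archive = str(Path(tmp, "bone_marrow_model.tar.gz"))
    checksum = package_model(str(model_dir), archive)
    verify_and_extract(archive, checksum, str(Path(tmp, "edge_site")))
    extracted_ok = Path(tmp, "edge_site", "bone_marrow_model", "saved_model.pb").exists()

print(checksum, extracted_ok)
```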

Intel OpenVINO

The Intel OpenVINO toolkit provides a Model Optimizer that converts models from popular frameworks such as Caffe and TensorFlow. It also provides an Inference Engine that supports heterogeneous execution across Intel computer vision accelerators, including CPUs, GPUs, and FPGAs.

The basic OpenVINO workflow starts with a framework, such as TensorFlow, to create and train a CNN model. In the previous phase, we used AWS DL1 instances with Intel Habana Gaudi accelerators to train the model.

The trained model is then run through the Model Optimizer to produce an optimized Intermediate Representation (IR), stored in .xml and .bin files, for use with the Inference Engine. The user application then loads the IR files and runs the optimized model on the targeted devices using the Inference Engine.

Figure 11: The Intel OpenVINO toolkit
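A hedged sketch of the last step of this workflow with the OpenVINO Python API (the `openvino.runtime` module of the 2022 releases): read the IR, compile it for the CPU, run one preprocessed image, and turn the 21 output scores into a class index. The IR path, the input layout, and the assumption that output 0 holds one score per class are ours, not taken from the paper. The OpenVINO import is kept inside the function so the post-processing helpers can be tried without OpenVINO installed.

```python
import math
from typing import List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    """Convert raw class scores (logits) into probabilities."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_class(scores: Sequence[float]) -> int:
    """Index of the most likely of the 21 cell classes."""
    return max(range(len(scores)), key=lambda i: scores[i])


def classify_with_openvino(xml_path: str, image_batch) -> int:
    """Run one preprocessed image batch through an OpenVINO IR model on the CPU.

    Assumes `image_batch` is a NumPy array already shaped as the model input
    and that output 0 holds one score per class.
    """
    from openvino.runtime import Core  # imported lazily; requires OpenVINO

    core = Core()
    model = core.read_model(xml_path)            # the matching .bin is found next to the .xml
    compiled = core.compile_model(model, "CPU")  # e.g. on an Ice Lake node
    result = compiled([image_batch])[compiled.output(0)]
    return top_class(result[0].tolist())


# Post-processing works without OpenVINO: toy scores for 21 classes.
toy_scores = [0.0] * 21
toy_scores[7] = 2.5
probs = softmax(toy_scores)
print(top_class(toy_scores), round(sum(probs), 6))
```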

Computer Vision Solution with Intel and EKS Anywhere:

Intel’s approach allows developers to do more with existing architectures and familiar tools. Intel CPUs offer a strong foundation for computer vision with built-in AI acceleration technologies. The Intel ecosystem provides the resources to meet application requirements while helping to streamline and simplify work both on-premises and in AWS. Intel CPU-based environments provide the power, performance, and flexibility to run computer vision AI inference consistently across different edge locations. This solution uses the model trained on Amazon DL1 instances in a computer vision application for bone marrow cell classification, combining Intel technology with Amazon EKS and EKS Anywhere to run in a hybrid cloud environment.

Deployment of Amazon EKS in the cloud and EKS Anywhere on-premises

Kubernetes clusters were deployed both in AWS and on-premises.

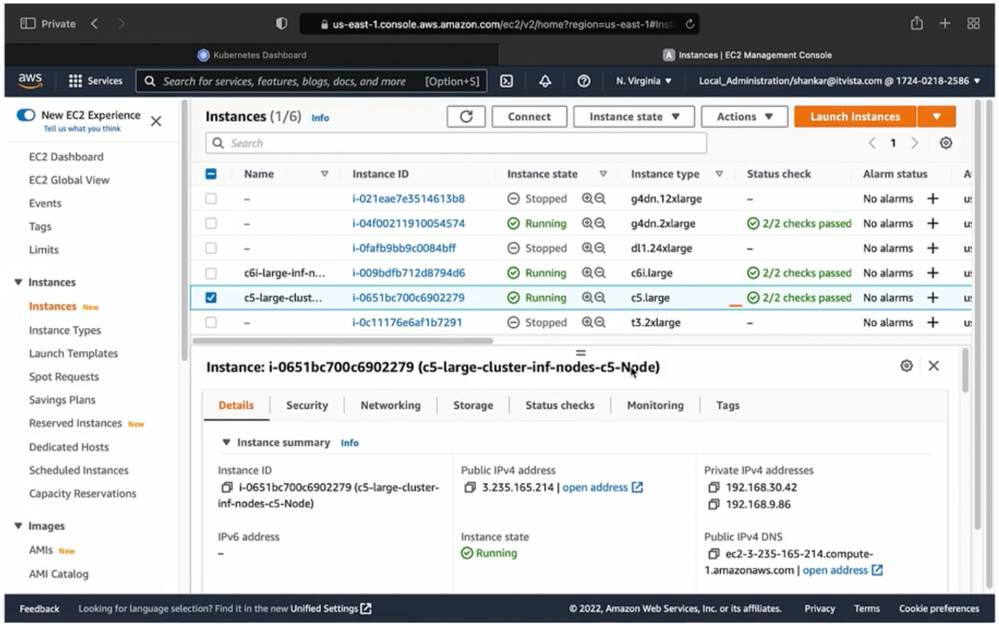

- Public Cloud EKS Cluster Creation: The AWS console was used to create and launch two EKS clusters, one with the latest Ice Lake-based C6i instances and one with previous-generation C5 instances based on Cascade Lake processors. The Amazon SageMaker service was used to deploy endpoints for inference on these EKS clusters.

Figure 12: Amazon EKS cluster as seen in the AWS console

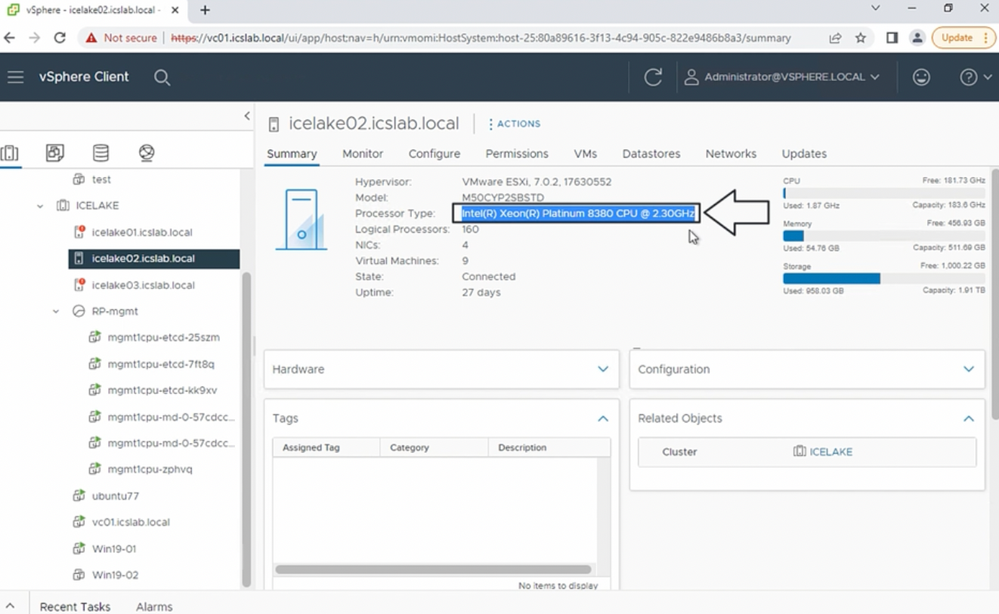

- On-premises EKS Anywhere Cluster: An EKS Anywhere cluster was created on-premises and deployed on a VMware vSphere 7 environment running on Intel Ice Lake processor-based server nodes. Deployment details of an EKS Anywhere cluster are beyond the scope of this paper. Further documentation is available online. (vSphere Configuration, 2022)

Figure 13: EKS Anywhere deployed on VMware vSphere 7 in an Intel Ice Lake-based on-premises environment

The trained model is optimized for inference on Intel-based instances using the OpenVINO Model Optimizer.

Figure 14: OpenVINO model optimization
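The conversion itself can be run with the Model Optimizer command-line tool, `mo`, which ships in the `openvino-dev` package (newer OpenVINO releases deprecate it in favor of `ovc`). The sketch below only assembles and, optionally, runs the command from Python. `--saved_model_dir`, `--output_dir`, and `--input_shape` are standard Model Optimizer options for TensorFlow SavedModels; the directory names and the 224x224 RGB input shape are hypothetical, not values from the paper.

```python
import shutil
import subprocess
from typing import List, Optional, Sequence


def build_mo_command(saved_model_dir: str, output_dir: str,
                     input_shape: Optional[Sequence[int]] = None) -> List[str]:
    """Assemble a Model Optimizer call that converts a TensorFlow SavedModel to IR."""
    cmd = ["mo", "--saved_model_dir", saved_model_dir, "--output_dir", output_dir]
    if input_shape is not None:
        # Pin a dynamic shape, e.g. [1,224,224,3] for a single NHWC image.
        cmd += ["--input_shape", "[" + ",".join(str(d) for d in input_shape) + "]"]
    return cmd


def convert(cmd: List[str]) -> None:
    """Run the conversion; the .xml/.bin IR pair is written to the output directory."""
    if shutil.which(cmd[0]) is None:
        raise RuntimeError("Model Optimizer ('mo') not found; install openvino-dev")
    subprocess.run(cmd, check=True)


# Hypothetical paths and input shape.
cmd = build_mo_command("bone_marrow_savedmodel", "ir", input_shape=[1, 224, 224, 3])
print(" ".join(cmd))
```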

Inference Testing:

The optimized inference model was then deployed across the two public cloud EKS clusters and the on-premises EKS Anywhere cluster. The infrastructure was validated with six use cases, batch and real-time inference on each of the three clusters, all using the OpenVINO-optimized model:

| Cluster | Instance or node type | Batch inference | Real-time inference |
|---|---|---|---|
| Amazon EKS on AWS | Intel Cascade Lake c5.large | OpenVINO-optimized model | OpenVINO-optimized model |
| Amazon EKS on AWS | Intel Ice Lake c6i.large | OpenVINO-optimized model | OpenVINO-optimized model |
| EKS Anywhere on-premises | Intel Ice Lake processor-based VM | OpenVINO-optimized model | OpenVINO-optimized model |

Figure 15: Validation testing use cases

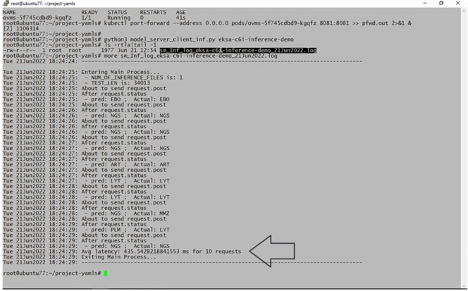

The OpenVINO-optimized model is served through OpenVINO Model Server running in Kubernetes. Tests were then run to measure inference latency and throughput on each cluster.

Figure 16: Latency and throughput measurement for Inference
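The measurement harness used for these tests is not published. As a sketch of how such numbers can be collected, the code below (a) builds a request in the KServe v2 REST format, which OpenVINO Model Server accepts at `/v2/models/<name>/infer`, and (b) times an arbitrary inference callable to report images per second for one batch and the average latency over ten single-image requests. The host, model name, input name, and the stub used in the example are hypothetical; point `infer_fn` at a real client call to measure a cluster.

```python
import json
import time
import urllib.request
from typing import Callable, List, Sequence, Tuple


def kserve_v2_request(host: str, model: str, input_name: str,
                      shape: Sequence[int], data: List[float]) -> Tuple[str, bytes]:
    """Build the URL and JSON body for a KServe v2 REST inference call."""
    url = f"http://{host}/v2/models/{model}/infer"
    body = {"inputs": [{"name": input_name, "shape": list(shape),
                        "datatype": "FP32", "data": data}]}
    return url, json.dumps(body).encode("utf-8")


def post(url: str, payload: bytes) -> dict:
    """Send the request (needs a reachable model server)."""
    req = urllib.request.Request(url, data=payload,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def batch_throughput(infer_fn: Callable[[list], object], images: list) -> float:
    """Images processed per second for one batch request (higher is better)."""
    start = time.perf_counter()
    infer_fn(images)
    return len(images) / (time.perf_counter() - start)


def average_latency_ms(infer_fn: Callable[[list], object], images: list, n: int = 10) -> float:
    """Mean wall-clock time of n single-image requests in milliseconds (lower is better)."""
    timings = []
    for img in images[:n]:
        start = time.perf_counter()
        infer_fn([img])
        timings.append((time.perf_counter() - start) * 1000.0)
    return sum(timings) / len(timings)


# Example with a stub standing in for a real call such as post(*kserve_v2_request(...)).
def stub_infer(batch: list) -> None:
    time.sleep(0.002 * len(batch))  # pretend each image takes about 2 ms


images = [[0.0] * 4 for _ in range(20)]
url, payload = kserve_v2_request("ovms.example.local:8000", "bone_marrow", "input_1",
                                 [1, 2, 2, 1], images[0])
print(url)
print(f"{batch_throughput(stub_infer, images):.1f} images/s, "
      f"{average_latency_ms(stub_infer, images):.2f} ms average latency")
```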

The results of the validation runs are shown in the following table. The batch inference column reports the number of images processed per second; higher numbers indicate better performance. The real-time inference column lists the average latency across ten images; lower numbers are better because they indicate faster responses.

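| Cluster | Instance or node type | Batch inference (images/s, higher is better) | Real-time inference (average latency over ten images, lower is better) |
|---|---|---|---|
| Amazon EKS on AWS | Intel Cascade Lake c5.large | 8.7766 | 527.349 |
| Amazon EKS on AWS | Intel Ice Lake c6i.large | 9.4056 | 514.5784 |
| EKS Anywhere on-premises | Intel Ice Lake processor-based VM | 19.3395 | 416.7342 |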

Figure 17: images processed per second and average latency across different Kubernetes clusters

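The results show improvements for the Ice Lake-based c6i.large instance over the previous-generation c5.large: batch throughput was about 7% higher and average real-time latency about 2.4% lower. The on-premises cluster with Ice Lake-based virtual machines performed best, with roughly 2.2 times the batch throughput of c5.large and about 21% lower latency. Because the size of the on-premises VMs is not stated, this is not a like-for-like comparison with the 2-vCPU .large instances.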
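The relative differences between the clusters follow directly from the table. A short calculation with c5.large as the baseline, using the values from Figure 17 (the latency unit is whatever the test harness reported):

```python
# Values from the results table (Figure 17).
results = {
    "c5.large (Cascade Lake)": {"images_per_s": 8.7766, "latency": 527.349},
    "c6i.large (Ice Lake)": {"images_per_s": 9.4056, "latency": 514.5784},
    "EKS Anywhere VM (Ice Lake)": {"images_per_s": 19.3395, "latency": 416.7342},
}
baseline = results["c5.large (Cascade Lake)"]


def relative_to_baseline(row):
    """Throughput speedup (x) and latency reduction (%) versus the c5.large baseline."""
    speedup = row["images_per_s"] / baseline["images_per_s"]
    latency_cut = (baseline["latency"] - row["latency"]) / baseline["latency"] * 100.0
    return speedup, latency_cut


for name, row in results.items():
    speedup, cut = relative_to_baseline(row)
    print(f"{name:28s} throughput x{speedup:.2f}  latency -{cut:.1f}%")
```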

Summary & Call to Action:

AWS services such as Amazon EKS and EKS Anywhere running on Intel technology provide an effective combination for meeting customer requirements across hybrid cloud environments. Customers and developers can use Intel Ice Lake processor-based instances with Amazon EKS across hybrid cloud environments. Through this solution, we have seen that:

- Amazon DL1 instances based on the Intel Habana Gaudi platform can be effectively leveraged for deep learning training

- Common ML frameworks such as TensorFlow and PyTorch are supported on Amazon DL1 instances

- Intel Ice Lake-based instances are excellent building blocks for Amazon EKS and EKS Anywhere clusters

- Amazon EKS and EKS Anywhere provide the ability to consistently deploy and manage Kubernetes across a hybrid cloud environment

- Intel OpenVINO can help optimize inference workloads running on Intel-based instances in AWS and on-premises

- Intel hardware and software optimizations with Ice Lake processors can provide significant performance improvements and can help lower cost

Bibliography

Amazon EC2 DL1 Instances. (n.d.). Retrieved from https://aws.amazon.com/ec2/instance-types/dl1/

Aria, M. (2021, July 17). COVID-19 Lung CT Scans. Retrieved from Kaggle: https://www.kaggle.com/datasets/98953605ed45c4107bfa57bc1be54238e1113f732b820c6f485a975196c05c8d

Aria, M. (2021, June 7). COVID-19-CAD. Retrieved from GitHub: https://github.com/MehradAria/COVID-19-CAD

aws / deep-learning-containers. (2022). Retrieved from GitHub: https://github.com/aws/deep-learning-containers

Bone Marrow Cell Classification [Data Set]. (2022, August). Retrieved from Kaggle: https://www.kaggle.com/datasets/andrewmvd/bone-marrow-cell-classification

Deep Learning AMI Developer Guide. (n.d.). Retrieved from AWS Documentation: https://docs.aws.amazon.com/dlami/latest/devguide/appendix-ami-release-notes.html

Ghaderzadeh, M., Asadi, F., Jafari, R., Bashash, D., Abolghasemi, H., & Aria, M. (2021, April 26). Deep Convolutional Neural Network-Based Computer-Aided Detection System for COVID-19 Using Multiple Lung Scans: Design and Implementation Study. Retrieved from J Med Internet Res: https://pubmed.ncbi.nlm.nih.gov/33848973/

Matek, C., Krappe, S., Münzenmayer, C., Haferlach, T., & Marr, C. (2021). An Expert-Annotated Dataset of Bone Marrow Cytology in Hematologic Malignancies. Retrieved from The Cancer Imaging Archive: https://doi.org/10.7937/TCIA.AXH3-T579

Matek, C., Krappe, S., Münzenmayer, C., Haferlach, T., & Marr, C. (2021). Highly accurate differentiation of bone marrow cell morphologies using deep neural networks on a large image dataset. Retrieved from Blood: https://doi.org/10.1182/blood.2020010568

vSphere Configuration. (2022). Retrieved from Amazon EKS Anywhere Documentation: https://anywhere.eks.amazonaws.com/docs/reference/clusterspec/vsphere/

Collaborators:

This was a collaborative project with Nathan Walker, a Senior Solutions Architect with Amazon Web Services. He has more than 20 years of experience in infrastructure architecture, storage solutions, and datacenter planning. Nathan currently works with AWS startup customers to provide differentiating solutions that help accelerate their go-to-market activities.

Mohan Potheri is a Cloud Solutions Architect with more than 20 years in IT infrastructure and in-depth experience in cloud architecture. He currently focuses on educating customers and partners on the Intel capabilities and optimizations available on AWS. He is actively engaged with the Intel and AWS partner communities to develop compelling solutions with Intel and AWS. He is a VMware vExpert (VCDX#98) with extensive knowledge of on-premises and hybrid cloud. He also has extensive experience with business-critical applications such as SAP, Oracle, SQL, and Java across UNIX, Linux, and Windows environments. Mohan is an expert in AI/ML and HPC and has spoken at multiple conferences, including VMworld, GTC, ISC, and other partner events.

