Authors:

- Nikita Sanjay Shiledarbaxi

- Rob Mueller-Albrecht

Neural networks are the keystone of deep learning algorithms. Training them is compute-intensive, so the computation must be distributed efficiently across processors to keep training times reasonable. This requires the processors to communicate during the computation. Because this communication is generally collective (several processors participate simultaneously), optimizing the primitives and patterns used in collective communication (Allreduce, Reduce-Scatter, etc.) is critical to achieving good performance in Deep Learning and Machine Learning workloads.

This blog introduces the Intel® oneAPI Collective Communications Library (oneCCL), which provides optimized implementations of these communication patterns and so enables scalable, efficient training of deep neural networks in a distributed environment.

What is oneCCL?

oneCCL is a flexible, performant library for Machine Learning and Deep Learning workloads and one of several performance libraries in the oneAPI programming model. It provides a set of optimized implementations of collective communication primitives, exposed through an API that deep learning framework developers can easily consume.

It implements communication operations efficiently across multiple nodes, which helps distributed deep learning workloads scale. It allows asynchronous and out-of-order execution of operations and can use one or more cores, as required, to make optimal use of the network. It also supports collectives on low-precision data types.

oneCCL Feature Highlights

Following are the core features of oneCCL:

- Built on top of the Intel® MPI and libfabric communication interfaces.

- Supports various interconnects, such as Ethernet, Cornelis Networks Omni-Path, and InfiniBand.

- Provides a collective API that interoperates with SYCL and efficiently implements collective operations crucial to deep learning, such as all-reduce, all-gather, and reduce-scatter.

- Integrates easily with distributed training frameworks such as Horovod and deep learning frameworks such as PyTorch.

- Supports the bfloat16 floating-point format and allows creating custom datatypes of the desired size. Explore all the supported datatypes here.
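For readers new to bfloat16: it keeps float32's sign bit and 8-bit exponent but only 7 explicit mantissa bits, so it covers the same numeric range as float32 at lower precision and half the storage, which halves the bytes a collective has to move. The plain C++ sketch below is not oneCCL code; the function names float_to_bf16 and bf16_to_float are hypothetical, and it assumes the common round-to-nearest-even conversion rule.

```cpp
#include <cstdint>
#include <cstring>

static_assert(sizeof(float) == sizeof(std::uint32_t), "float must be 32 bits");

// Hypothetical helper: float32 -> bfloat16, rounding the 16 dropped
// mantissa bits to nearest, ties to even.
std::uint16_t float_to_bf16(float value) {
    std::uint32_t bits;
    std::memcpy(&bits, &value, sizeof bits);  // reinterpret the float's bit pattern
    if ((bits & 0x7FFFFFFFu) > 0x7F800000u) {
        // NaN: truncate and set a quiet-NaN bit so rounding cannot turn it into infinity
        return static_cast<std::uint16_t>((bits >> 16) | 0x0040u);
    }
    const std::uint32_t lsb = (bits >> 16) & 1u;  // lowest bit that survives truncation
    return static_cast<std::uint16_t>((bits + 0x7FFFu + lsb) >> 16);
}

// Hypothetical helper: bfloat16 -> float32 is exact, because the 16 bits
// simply become the upper half of a float32.
float bf16_to_float(std::uint16_t half) {
    const std::uint32_t bits = static_cast<std::uint32_t>(half) << 16;
    float value;
    std::memcpy(&value, &bits, sizeof value);
    return value;
}
```

Converting back is exact, so precision is lost only on the way in: for example, 3.14159265f comes back as 3.140625 after a round trip.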

oneCCL API Concepts and Parameters

In this section, you will familiarize yourself with some of the abstract concepts used by oneCCL for optimized collective operations.

- Rank: An addressable entity in a communication operation.

- Device: An abstraction of a participant device such as a CPU or a GPU involved in the communication operation.

- Communicator: Defines a collection of communicating ranks. Communication operations between homogeneous oneCCL devices are defined by the communicator class.

- Key-Value Store: An interface that sets up communication between the constituent ranks while grouping them to form a communicator.

- Stream: An abstraction that captures the execution context for communication operations.

- Event: An abstraction that captures the synchronization context for communication operations.

Each oneCCL device corresponds to a rank. Ranks exchange the information they need to communicate through a key-value store and are grouped into a communicator. When you run a communication operation across a communicator's ranks, you pass it a stream object that provides the execution context. The operation returns an event object that tracks its progress. You can also pass a vector of event objects to express the input dependencies that must be satisfied before the operation runs.
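The event and input-dependency flow described above can be modeled with standard C++ futures. In this sketch, Event, submit, and sum_after_fill are hypothetical names, not oneCCL API: an operation first waits on its vector of input events, then runs, and returns an event the caller can wait on. Key-value store and stream setup are not modeled.

```cpp
#include <future>
#include <numeric>
#include <vector>

// Hypothetical stand-in for an event: a shareable handle to an operation's completion.
using Event = std::shared_future<void>;

// Hypothetical submit(): waits for every input dependency, then runs the work
// asynchronously; the returned event tracks its completion.
template <typename Work>
Event submit(Work work, std::vector<Event> deps = {}) {
    return std::async(std::launch::async,
                      [work, deps]() {
                          for (const Event& dep : deps) dep.wait();  // dependencies first
                          work();
                      })
        .share();
}

// Example: a "reduction" that must not start before its input buffer is filled.
int sum_after_fill(std::vector<int>& buf) {
    int sum = 0;
    Event filled = submit([&buf] { std::iota(buf.begin(), buf.end(), 1); });
    Event summed = submit([&buf, &sum] { sum = std::accumulate(buf.begin(), buf.end(), 0); },
                          {filled});
    summed.wait();  // block until the dependent operation has finished
    return sum;
}
```

Calling sum_after_fill on a four-element buffer fills it with 1 through 4 and returns 10; because the summing operation lists the fill event as a dependency, it never reads a partially filled buffer.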

oneCCL Collective Communication Operations

oneCCL supports the following collective communication operations among the ranks of a communicator. They are "collective" in the sense that every participating rank must call the operation.

- Allgatherv: This operation gathers data from all the ranks within a communicator into a common buffer. Each rank may contribute a different amount of data; at the end of the operation, every rank holds the same data in its output buffer.

- Allreduce: This operation performs a global reduction (sum, product, min, max, etc.) across all the ranks of a communicator. The result is returned to every rank at the end of the operation.

- Reduce: Like Allreduce, this operation performs a global reduction across all the ranks of a communicator, but it returns the result only to the root rank.

- ReduceScatter: This operation also performs a reduction across all the ranks of a communicator, but each rank receives only its own block (a sub-portion) of the result.

- Alltoallv: Through this operation, every rank sends a separate data block to every other rank within the communicator. The jth data block in the send buffer of the ith rank is received as the ith data block of the jth rank.

- Broadcast: Through this operation, one rank, known as the root, distributes its data to all other ranks within the communicator.

- Barrier synchronization: This operation involves a reduction followed by a broadcast and is executed across all the ranks of a communicator. Any rank that calls the barrier() method is blocked until every rank within the communicator has called it; only then does the operation complete.
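To make the data movement of these collectives concrete, the sketch below simulates them inside a single process: element r of each outer vector stands for the buffer held by rank r. It illustrates the semantics only, assuming sum reductions and (for ReduceScatter) equal-sized result blocks; the function names are hypothetical rather than oneCCL calls, and no networking or parallelism is modeled.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Buffers[r] is the buffer held by (simulated) rank r.
using Buffers = std::vector<std::vector<int>>;

// Allreduce (sum): every rank ends up with the element-wise sum.
Buffers allreduce_sum(const Buffers& send) {
    std::vector<int> total(send.at(0).size(), 0);
    for (const auto& buf : send) {
        assert(buf.size() == total.size());  // all ranks contribute equal-length buffers
        for (std::size_t i = 0; i < buf.size(); ++i) total[i] += buf[i];
    }
    return Buffers(send.size(), total);
}

// Reduce (sum): only the root rank receives the result; others get nothing.
Buffers reduce_sum(const Buffers& send, std::size_t root) {
    Buffers recv(send.size());
    recv.at(root) = allreduce_sum(send)[root];
    return recv;
}

// ReduceScatter (sum): rank r receives block r of the reduced vector.
Buffers reduce_scatter_sum(const Buffers& send) {
    const std::vector<int> total = allreduce_sum(send)[0];
    assert(total.size() % send.size() == 0);  // assumes equal-sized blocks
    const std::size_t block = total.size() / send.size();
    Buffers recv(send.size());
    for (std::size_t r = 0; r < send.size(); ++r) {
        const auto first = total.begin() + static_cast<std::ptrdiff_t>(r * block);
        recv[r].assign(first, first + static_cast<std::ptrdiff_t>(block));
    }
    return recv;
}

// Broadcast: the root's buffer is copied to every rank.
Buffers broadcast(const Buffers& bufs, std::size_t root) {
    return Buffers(bufs.size(), bufs.at(root));
}

// Allgatherv: contributions of possibly different sizes are concatenated
// in rank order, and every rank receives the same concatenation.
Buffers allgatherv(const Buffers& send) {
    std::vector<int> gathered;
    for (const auto& buf : send) gathered.insert(gathered.end(), buf.begin(), buf.end());
    return Buffers(send.size(), gathered);
}

// Alltoallv: send[i][j] is the block rank i sends to rank j; it arrives as
// block i of rank j's receive buffer. Blocks may differ in size.
std::vector<Buffers> alltoallv(const std::vector<Buffers>& send) {
    const std::size_t n = send.size();
    std::vector<Buffers> recv(n, Buffers(n));
    for (std::size_t i = 0; i < n; ++i) {
        assert(send[i].size() == n);  // one outgoing block per destination rank
        for (std::size_t j = 0; j < n; ++j) recv[j][i] = send[i][j];
    }
    return recv;
}
```

For two ranks holding {1, 2, 3, 4} and {10, 20, 30, 40}, allreduce_sum gives both ranks {11, 22, 33, 44}, while reduce_scatter_sum gives rank 0 {11, 22} and rank 1 {33, 44}.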

Visit this link to dive deeper into communication operations.

For each supported communication operation, oneCCL specifies an operation attribute class: allgatherv_attr, allreduce_attr, reduce_attr, reduce_scatter_attr, alltoallv_attr, broadcast_attr, and barrier_attr. Using these classes, you can customize the behavior of the corresponding communication operation. Detailed information on the attribute identifiers of these classes is available here.

The event class of oneCCL lets you track the completion of each communication operation. For instance, after launching an all-reduce, calling event::wait() blocks the calling thread until the all-reduce has finished. Calling event::test() instead returns immediately with a Boolean value: true if the all-reduce has completed, false if it is still in progress. You can therefore wait for an operation to complete in either a blocking or a non-blocking way. Check out this page for more details on operation progress tracking.
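The blocking and non-blocking styles can be illustrated with a small stand-in built on std::future. Event and launch below are hypothetical and only mirror the wait()/test() pair described above; they are not the oneCCL ccl::event class.

```cpp
#include <chrono>
#include <future>
#include <utility>

// Hypothetical event modeled on the wait()/test() pair; wraps a std::future.
class Event {
public:
    explicit Event(std::future<void> f) : fut_(std::move(f)) {}

    // Blocking: returns only once the tracked operation has finished.
    void wait() const { fut_.wait(); }

    // Non-blocking: reports whether the operation has finished, without waiting.
    bool test() const {
        return fut_.wait_for(std::chrono::seconds(0)) == std::future_status::ready;
    }

private:
    std::future<void> fut_;
};

// Hypothetical launcher: runs a stand-in "operation" on another thread
// and hands back the event that tracks it.
template <typename Work>
Event launch(Work work) {
    return Event(std::async(std::launch::async, std::move(work)));
}
```

Polling test() lets a caller keep doing other work, such as preparing the next buffer, while the operation is in flight; wait() fits the point where the result is actually needed.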

Error Handling by oneCCL

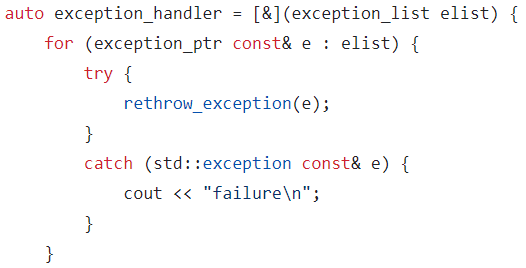

When an error occurs, oneCCL throws an exception that the calling code can catch using standard C++ exception handling. The accompanying code snippet shows how a oneCCL error is ultimately caught through the C++ std::exception class.

oneCCL has a hierarchy of exception classes in which ccl::exception is the base class, inherited from the standard C++ std::exception class. ccl::exception itself reports an unspecified error. The exception classes derived from it report errors encountered in specific scenarios:

- ccl::invalid_argument: when arguments given to an operation are unacceptable

- ccl::host_bad_alloc: when an error occurs while allocating memory on the host

- ccl::unimplemented: when the requested operation is not implemented

- ccl::unsupported: when the requested operation is not supported
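Because every class in the hierarchy derives from std::exception, ordinary try/catch blocks are enough to handle oneCCL errors. The sketch below mirrors the class names in a hypothetical mock_ccl namespace so it compiles without oneCCL; checked_op and run_and_report are illustrative helpers, not library functions.

```cpp
#include <exception>
#include <string>
#include <utility>

// Hypothetical mirror of the hierarchy (not the real ccl classes).
namespace mock_ccl {

class exception : public std::exception {
public:
    explicit exception(std::string message) : message_(std::move(message)) {}
    const char* what() const noexcept override { return message_.c_str(); }

private:
    std::string message_;
};

// Derived classes reuse the base constructor; each names a failure category.
class invalid_argument : public exception { public: using exception::exception; };
class host_bad_alloc : public exception { public: using exception::exception; };
class unimplemented : public exception { public: using exception::exception; };
class unsupported : public exception { public: using exception::exception; };

// Hypothetical operation that rejects a negative element count.
void checked_op(long count) {
    if (count < 0) throw invalid_argument("count must be non-negative");
}

}  // namespace mock_ccl

// Caller: the most specific handler is tried first; std::exception catches the rest.
std::string run_and_report(long count) {
    try {
        mock_ccl::checked_op(count);
        return "ok";
    } catch (const mock_ccl::invalid_argument& e) {
        return std::string("invalid argument: ") + e.what();
    } catch (const std::exception& e) {
        return std::string("other error: ") + e.what();
    }
}
```

Ordering the handlers from most to least specific lets a caller treat a bad argument specially, while the final std::exception handler still catches every other error in the hierarchy.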

Wrap-up

In this blog, we introduced the oneCCL library as a means of performing scalable and efficient communication operations. Get started with oneCCL today and use it to improve your Machine Learning workloads in multi-node environments. We also encourage you to explore the full suite of Intel developer tools for building your AI, HPC, and rendering applications and learn about the unified, open, standards-based oneAPI programming model that forms their foundation.

Please also let us know of any topic you would like us to dig into.

Stay tuned for more in this series of interesting blogs on oneAPI Base Toolkit components!

Get the software

You can download a stand-alone version of oneCCL or get it as part of the Intel® oneAPI Base Toolkit. Want to install oneCCL using the command-line interface (CLI)? Follow these simple steps!

Useful resources

- oneCCL official page

- oneCCL documentation

- oneAPI Base Toolkit

- oneCCL GitHub repo

- oneAPI specification guide

Acknowledgements

We would like to thank Chandan Damannagari and Navin Samuel for their contributions to the blog.
