Validating Redis Enterprise Operator on Bare-Metal with Red Hat OpenShift

Background

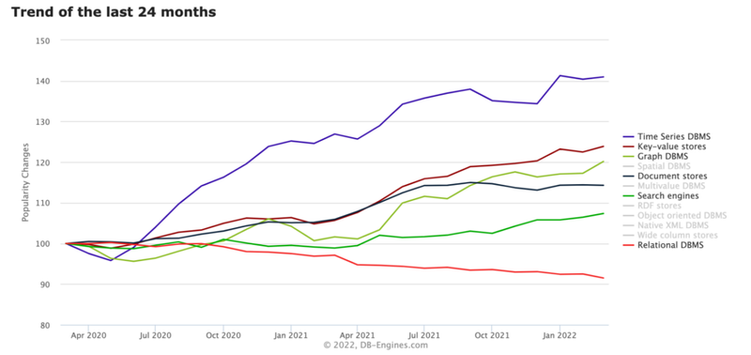

Over the last 24 months, DB-Engines rankings have shown that the fastest-growing data models are key-value, search, document, graph and time series, and that fewer developers are choosing to model their applications with relational databases. Popular interactive applications make many calls to the database. The resulting overhead and round-trip latency between the end user and the application server can degrade the user experience and may cost the service provider revenue.

Figure 1 - Fastest Growing Data Models

Red Hat OpenShift is designed for automation, but scaling stateful applications can be challenging and typically requires manual intervention, because removing a Pod means losing its data and disrupting the system. This is one of the key reasons the Operator framework was developed: Operators handle stateful applications that the default Kubernetes controllers cannot manage. With in-memory databases rising in popularity, it makes sense for Redis Labs to release a Redis Enterprise Operator.

In This Blog You Will Learn How To

- Deploy a Red Hat OpenShift 3-node compact bare-metal cluster

- Install Red Hat OpenShift Data Foundation & configure a Backing Store

- Install Redis Enterprise Operator

- Optimize the Redis cluster & create a simple database

- Set up memtier_benchmark and test functionality/performance

Deploy Red Hat OpenShift on Bare-Metal Servers

The following hardware was provided by Supermicro to set up the remote demo lab:

- 1G Switch, SSE-G3648B

- 100G Switch Supermicro, SSE-C3632S(R)

- 2U 4-Node BigTwin, SYS-220BT-HNTR

Note: Nodes A, B and C were used for a 3-node compact cluster (each subsystem runs as a master + worker node)

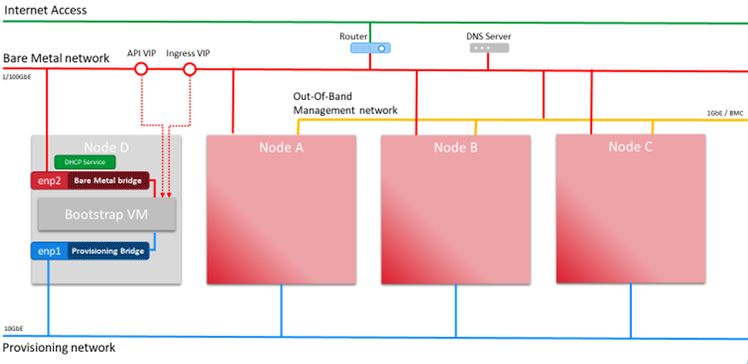

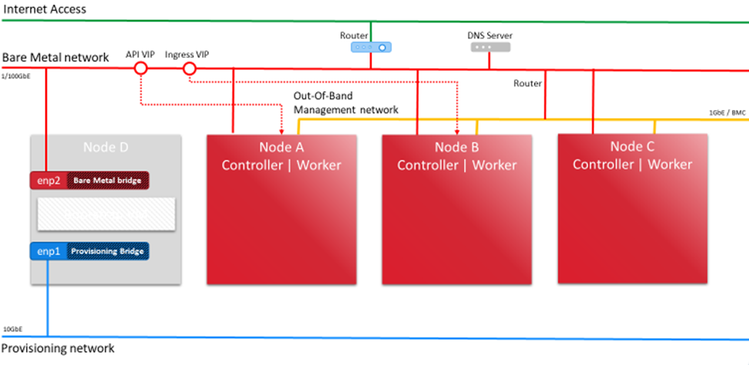

Figure 2 - OCP 4.8 IPI Network Overview

1. Assign the network switches to each network:

- BMC network (1G switch 172.24.121.0/24, Internet access)

- 100G network (100G Supermicro SSE-C3632S(R) switch, 172.24.121.0/24, Internet access)

2. Assign IP addresses to the BMC LAN ports on an X12 BigTwin®:

- Node A 172.24.121.51

- Node B 172.24.121.52

- Node C 172.24.121.53

- Node D 172.24.121.54

3. Set up the DNS records for the Red Hat OpenShift API VIP, the Ingress VIP, and the nodes.

| Domain name | IP address |
|---|---|
| bt01.mwc.openshift.smc | 172.24.121.51 |
| bt02.mwc.openshift.smc | 172.24.121.52 |
| bt03.mwc.openshift.smc | 172.24.121.53 |
| bt04.mwc.openshift.smc | 172.24.121.54 |
| *.apps.mwc.openshift.smc | 172.24.121.66 |
| api.mwc.openshift.smc | 172.24.121.65 |

Table 1-1. DNS Records

4. Reserve the DHCP IP addresses for the node BMC and OS LAN ports.

| Node | OS IP address | BMC IP address | Note |
|---|---|---|---|
| Node A | 172.24.121.61 | 172.24.121.51 | |
| Node B | 172.24.121.62 | 172.24.121.52 | |
| Node C | 172.24.121.63 | 172.24.121.53 | |
| Node D | 172.24.121.64 | 172.24.121.54 | Bastion node |

Table 1-2. DHCP IP Addresses
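Before running the installer, it can be worth confirming from the bastion node that the records in Table 1-1 resolve as expected. The following is a minimal sketch, not part of the original procedure: the function name `check_dns` and its input format (one `name expected_ip` pair per line) are illustrative, and it assumes `getent` (part of glibc on RHEL 8) queries the same DNS servers the cluster will use. Because `*.apps` is a wildcard, any concrete name under it, such as the console host, can stand in for that record.

```shell
#!/usr/bin/env bash
# check_dns: read "hostname expected_ip" pairs from stdin, resolve each name
# with getent, and print OK/FAIL per record. Returns 1 if any record is wrong.
check_dns() {
  local name expected actual rc=0
  while read -r name expected; do
    [ -z "$name" ] && continue                # skip blank lines
    case "$name" in \#*) continue ;; esac     # skip comment lines
    # getent prints "IP  name [aliases]"; keep the first address only
    actual=$(getent hosts "$name" | awk 'NR == 1 { print $1 }')
    if [ "$actual" = "$expected" ]; then
      printf 'OK   %s -> %s\n' "$name" "$actual"
    else
      printf 'FAIL %s -> %s (expected %s)\n' "$name" "${actual:-<none>}" "$expected"
      rc=1
    fi
  done
  return "$rc"
}

# Usage on the bastion node (values from Table 1-1):
#   check_dns <<'EOF'
#   bt01.mwc.openshift.smc 172.24.121.51
#   bt02.mwc.openshift.smc 172.24.121.52
#   bt03.mwc.openshift.smc 172.24.121.53
#   bt04.mwc.openshift.smc 172.24.121.54
#   api.mwc.openshift.smc 172.24.121.65
#   console-openshift-console.apps.mwc.openshift.smc 172.24.121.66
#   EOF
```

Each correct record prints one `OK` line; a `FAIL` line points to a missing or mistyped record that would otherwise surface later as an installer timeout.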

Prepare Bastion Node with Red Hat Enterprise Linux 8

1. Install RHEL 8.3 on the M.2 NVMe RAID virtual drive (VD) of node D, mounting the ISO image through BMC Virtual Media from the iKVM console

2. Set up the network: IP address 172.24.121.64, netmask 255.255.255.0, gateway 172.24.121.1, DNS servers 172.24.88.10, 8.8.8.8 and 1.1.1.1

3. Use Red Hat Subscription Manager to register the provisioner node:

$ sudo subscription-manager register --username=<username> --password=<pass> --auto-attach

4. Add the OCP 4.8 and Ansible 2.9 repositories to the repo list, then check it:

$ sudo subscription-manager repos \
--disable='*' \
--enable=rhel-8-for-x86_64-appstream-rpms \
--enable=rhel-8-for-x86_64-baseos-rpms \
--enable=rhel-8-for-x86_64-highavailability-rpms \
--enable=ansible-2.9-for-rhel-8-x86_64-rpms \
--enable=rhocp-4.8-for-rhel-8-x86_64-rpms

$ sudo dnf repolist

Output:

Updating Subscription Management repositories.

repo id repo name

ansible-2.9-for-rhel-8-x86_64-rpms Red Hat Ansible Engine 2.9 for RHEL 8 x86_64 (RPMs)

rhel-8-for-x86_64-appstream-rpms Red Hat Enterprise Linux 8 for x86_64 - AppStream (RPMs)

rhel-8-for-x86_64-baseos-rpms Red Hat Enterprise Linux 8 for x86_64 - BaseOS (RPMs)

rhel-8-for-x86_64-highavailability-rpms Red Hat Enterprise Linux 8 for x86_64 - High Availability (RPMs)

rhocp-4.8-for-rhel-8-x86_64-rpms Red Hat OpenShift Container Platform 4.8 for RHEL 8 x86_64 (RPMs)

5. Log in to the Bastion node (node D) via ssh

6. Run yum update -y for latest patches and update repos

$ sudo yum update -y

7. Enable Cockpit on RHEL8

$ sudo systemctl enable cockpit.socket

$ sudo systemctl start cockpit.socket

8. Add the Virtual Machines and Containers applications in the RHEL Cockpit web UI

9. Upload the CentOS 8.3 LiveCD ISO image for the next step

10. Generate an SSH key pair on your workstation and copy the public key to node D

11. Create a non-root user (kni) and provide that user with sudo privileges:

$ sudo su -

# useradd kni

# passwd kni

# echo "kni ALL=(root) NOPASSWD:ALL" | tee -a /etc/sudoers.d/kni

# chmod 0440 /etc/sudoers.d/kni

12. Create an SSH key for the new user and log in as kni:

# su - kni -c "ssh-keygen -t ed25519 -f /home/kni/.ssh/id_rsa -N ''"

# su - kni

13. Install the following packages:

$ sudo dnf install -y libvirt qemu-kvm mkisofs python3-devel jq ipmitool

14. Add the new kni user (and the existing twinuser account) to the libvirt group:

$ sudo usermod --append --groups libvirt kni

$ sudo usermod --append --groups libvirt twinuser

15. Start firewalld and enable the http service:

$ sudo systemctl start firewalld

$ sudo firewall-cmd --zone=public --add-service=http --permanent

$ sudo firewall-cmd --reload

16. Start and enable the libvirtd service:

$ sudo systemctl enable libvirtd --now

$ sudo systemctl status libvirtd

Output:

libvirtd.service - Virtualization daemon

Loaded: loaded (/usr/lib/systemd/system/libvirtd.service; enabled; vendor preset: enabled)

Active: active (running) since Mon 2021-11-08 13:56:42 PST; 15s ago

Docs: man:libvirtd(8)

https://libvirt.org

Main PID: 18184 (libvirtd)

Tasks: 19 (limit: 32768)

Memory: 61.1M

CGroup: /system.slice/libvirtd.service

├─ 4169 /usr/sbin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/default.conf --leasefile-ro --dhcp-script=/usr/libexec/libvirt_leaseshelper

├─ 4170 /usr/sbin/dnsmasq --conf-file=/var/lib/libvirt/dnsmasq/default.conf --leasefile-ro --dhcp-script=/usr/libexec/libvirt_leaseshelper

└─18184 /usr/sbin/libvirtd --timeout 120

Nov 08 13:56:42 bastion1.openshift.smc systemd[1]: Starting Virtualization daemon...

Nov 08 13:56:42 bastion1.openshift.smc systemd[1]: Started Virtualization daemon.

Nov 08 13:56:42 bastion1.openshift.smc dnsmasq[4169]: read /etc/hosts - 2 addresses

Nov 08 13:56:42 bastion1.openshift.smc dnsmasq[4169]: read /var/lib/libvirt/dnsmasq/default.addnhosts - 0 addresses

Nov 08 13:56:42 bastion1.openshift.smc dnsmasq-dhcp[4169]: read /var/lib/libvirt/dnsmasq/default.hostsfile

17. Create the default storage pool and start it:

$ sudo virsh pool-define-as --name default --type dir --target /var/lib/libvirt/images

$ sudo virsh pool-start default

$ sudo virsh pool-autostart default

18. Install and configure a DHCP server:

$ sudo yum install dhcp-server

$ sudo vi /etc/dhcp/dhcpd.conf   # copy the contents of etc-dhcp-dhcpd.conf into /etc/dhcp/dhcpd.conf

$ sudo systemctl enable --now dhcpd

19. If firewalld is running, allow the DHCP service through the firewall so it is reachable from your network:

$ sudo firewall-cmd --add-service=dhcp --permanent

$ sudo firewall-cmd --reload
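Step 18 copies a prepared etc-dhcp-dhcpd.conf that is not reproduced in this post. As a rough sketch of what the reservations in Table 1-2 could look like in ISC dhcpd syntax, the hypothetical helpers below print a `subnet` declaration (router and DNS server taken from the bastion network settings in step 2) and one `host` block per `name mac ip` input line. The MAC addresses in the usage comment are placeholders; use the values collected from each node's OS and BMC ports.

```shell
#!/usr/bin/env bash
# dhcpd_subnet: print a subnet declaration for the lab network.
# Router and DNS values mirror the bastion settings; adjust as needed.
dhcpd_subnet() {
  cat <<'EOF'
subnet 172.24.121.0 netmask 255.255.255.0 {
  option routers 172.24.121.1;
  option domain-name-servers 172.24.88.10;
}
EOF
}

# dhcpd_hosts: read "name mac ip" triples from stdin and print one ISC dhcpd
# "host" declaration per line, pinning each MAC address to a fixed IP.
dhcpd_hosts() {
  local name mac ip
  while read -r name mac ip; do
    [ -z "$name" ] && continue
    printf 'host %s {\n  hardware ethernet %s;\n  fixed-address %s;\n}\n' \
      "$name" "$mac" "$ip"
  done
}

# Usage (placeholder MACs; OS and BMC ports each need their own entry):
#   { dhcpd_subnet; dhcpd_hosts <<'EOF'
#   bt01-os  aa:bb:cc:00:00:01 172.24.121.61
#   bt01-bmc aa:bb:cc:00:01:01 172.24.121.51
#   EOF
#   } | sudo tee /etc/dhcp/dhcpd.conf
```

Running `sudo dhcpd -t -cf /etc/dhcp/dhcpd.conf` checks the syntax of the generated file before the service is restarted.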

Prepare Target Hardware

1. Update SYS-220BT-HNTR BMC and BIOS firmware

- BMC version 1.02.04 (built on 10/27/2021)

- BIOS version 1.2 (built on 2/12/2022)

2. Boot the target node from the CentOS 8.3 LiveCD through BMC Virtual Media and wipe its drives:

$ sudo wipefs -a /dev/nvme0n1

/dev/nvme0n1

$ sudo wipefs -a /dev/nvme1n1

/dev/nvme1n1

$ sudo wipefs -a /dev/nvme2n1

/dev/nvme2n1

3. Collect the MAC addresses of NIC1 (connected to the 100G switch) and NIC2 (connected to the 25G switch):

$ ip a

4. Reboot the system and enter BIOS

5. Set Virtual Media ("UEFI USB CD/DVD:UEFI: ATEN Virtual CDROM YS0J") as the second boot option

6. Set the boot mode to Dual (prefer UEFI)

7. Clean up PXE list
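Step 3 reads the MAC addresses out of `ip a` by eye. A listing with one interface per line is easier to copy into bootMACAddress and the DHCP reservations. The helper below is a sketch that assumes the single-line `ip -o link show` format of iproute2, where each Ethernet interface carries a `link/ether <mac>` field; the function name `list_macs` is illustrative.

```shell
#!/usr/bin/env bash
# list_macs: print "interface mac" for every Ethernet interface found in
# "ip -o link show" output read from stdin (loopback has no link/ether).
list_macs() {
  awk '{
    ifname = $2
    sub(/:$/, "", ifname)        # "eno1:" -> "eno1"
    sub(/@.*/, "", ifname)       # drop "@parent" suffix on VLAN/veth links
    for (i = 3; i < NF; i++)
      if ($i == "link/ether") { print ifname, $(i + 1); break }
  }'
}

# Usage on a target node booted from the LiveCD:
#   ip -o link show | list_macs
```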

Prepare the Provisioning Environment

1. Configure network bridges for OpenShift Bootstrap VM.

2. Export the baremetal network NIC name:

$ export PUB_CONN=baremetal

3. Configure the baremetal network:

$ sudo nohup bash -c "
nmcli con down \"$PUB_CONN\"
nmcli con delete \"$PUB_CONN\"
# RHEL 8.1 appends the word \"System\" in front of the connection name; delete it in case it exists
nmcli con down \"System $PUB_CONN\"
nmcli con delete \"System $PUB_CONN\"
nmcli connection add ifname baremetal type bridge con-name baremetal
nmcli con add type bridge-slave ifname \"$PUB_CONN\" master baremetal
pkill dhclient;dhclient baremetal
"

4. Export the provisioning network:

$ export PROV_CONN=provisioning

5. Configure the provisioning network:

$ sudo nohup bash -c "
nmcli con down \"$PROV_CONN\"
nmcli con delete \"$PROV_CONN\"
nmcli connection add ifname provisioning type bridge con-name provisioning
nmcli con add type bridge-slave ifname \"$PROV_CONN\" master provisioning
nmcli connection modify provisioning ipv6.addresses fd00:1101::1/64 ipv6.method manual
nmcli con down provisioning
nmcli con up provisioning
"

6. Verify the connection bridges have been properly created.

$ sudo nmcli con show

NAME UUID TYPE DEVICE

baremetal

7. Download the pull secret from the Red Hat Cloud console and save it as ~/pull-secret.txt

8. Retrieve the Red Hat OpenShift Container Platform installer using the following steps:

$ export VERSION=4.8.27

$ export RELEASE_IMAGE=$(curl -s https://mirror.openshift.com/pub/openshift-v4/clients/ocp/$VERSION/release.txt | grep 'Pull From: quay.io' | awk -F ' ' '{print $3}')

9. Extract the Red Hat OpenShift Container Platform installer

10. With the release image identified, extract the installer from it using the following steps.

11. Set the environment variables:

$ export cmd=openshift-baremetal-install

$ export pullsecret_file=~/pull-secret.txt

$ export extract_dir=$(pwd)

12. Get the oc binary

$ curl -s https://mirror.openshift.com/pub/openshift-v4/clients/ocp/$VERSION/openshift-client-linux.tar.gz | tar zxvf - oc

$ sudo cp oc /usr/local/bin

13. Get the installer

$ oc adm release extract --registry-config "${pullsecret_file}" --command=$cmd --to "${extract_dir}" ${RELEASE_IMAGE}

$ sudo cp openshift-baremetal-install /usr/local/bin

14. Create a Red Hat Enterprise Linux CoreOS (RHCOS) image cache

a. Install Podman

$ sudo dnf install -y podman

b. Open firewall port 8080 to be used for RHCOS image caching

$ sudo firewall-cmd --add-port=8080/tcp --zone=public --permanent

$ sudo firewall-cmd --reload

c. Create a directory to store the bootstrapOSImage and clusterOSImage:

$ mkdir /home/kni/rhcos_image_cache

d. Check the storage devices’ capacity

$ df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 87G 0 87G 0% /dev

tmpfs 87G 0 87G 0% /dev/shm

tmpfs 87G 19M 87G 1% /run

tmpfs 87G 0 87G 0% /sys/fs/cgroup

/dev/mapper/rhel-root 70G 7.3G 63G 11% /

/dev/nvme1n1p1 3.1T 22G 3.1T 1% /var/lib/libvirt/images

/dev/sda1 1014M 326M 689M 33% /boot

/dev/mapper/rhel-home 149G 1.9G 147G 2% /home

tmpfs 18G 20K 18G 1% /run/user/42

tmpfs 18G 4.0K 18G 1% /run/user/1000

e. Set the appropriate SELinux context for the newly created directory:

$ sudo semanage fcontext -a -t httpd_sys_content_t "/home/kni/rhcos_image_cache(/.*)?"

$ sudo restorecon -Rv /home/kni/rhcos_image_cache/

f. Confirm that restorecon relabels /home/kni/rhcos_image_cache from unconfined_u:object_r:user_home_t:s0 to unconfined_u:object_r:httpd_sys_content_t:s0

g. Get the commit ID from the installer:

$ export COMMIT_ID=$(/usr/local/bin/openshift-baremetal-install version | grep '^built from commit' | awk '{print $4}')

The ID determines which images the installer needs to download.

h. Get the URI for the RHCOS image that the installer will deploy on the nodes:

$ export RHCOS_OPENSTACK_URI=$(curl -s -S https://raw.githubusercontent.com/openshift/installer/$COMMIT_ID/data/data/rhcos.json | jq .images.openstack.path | sed 's/"//g')

i. Get the URI for the RHCOS image that the installer will deploy on the bootstrap VM:

$ export RHCOS_QEMU_URI=$(curl -s -S https://raw.githubusercontent.com/openshift/installer/$COMMIT_ID/data/data/rhcos.json | jq .images.qemu.path | sed 's/"//g')

j. Get the path where the images are published:

$ export RHCOS_PATH=$(curl -s -S https://raw.githubusercontent.com/openshift/installer/$COMMIT_ID/data/data/rhcos.json | jq .baseURI | sed 's/"//g')

k. Get the SHA hash for the RHCOS image that will be deployed on the bootstrap VM:

$ export RHCOS_QEMU_SHA_UNCOMPRESSED=$(curl -s -S https://raw.githubusercontent.com/openshift/installer/$COMMIT_ID/data/data/rhcos.json | jq -r '.images.qemu["uncompressed-sha256"]')

l. Get the SHA hash for the RHCOS image that will be deployed on the nodes

$ export RHCOS_OPENSTACK_SHA_COMPRESSED=$(curl -s -S https://raw.githubusercontent.com/openshift/installer/$COMMIT_ID/data/data/rhcos.json | jq -r '.images.openstack.sha256')

m. Download the images and place them in the /home/kni/rhcos_image_cache directory:

$ curl -L ${RHCOS_PATH}${RHCOS_QEMU_URI} -o /home/kni/rhcos_image_cache/${RHCOS_QEMU_URI}

$ curl -L ${RHCOS_PATH}${RHCOS_OPENSTACK_URI} -o /home/kni/rhcos_image_cache/${RHCOS_OPENSTACK_URI}

n. Confirm that the SELinux type of the newly created files is httpd_sys_content_t:

$ ls -Z /home/kni/rhcos_image_cache

o. Create the pod

$ podman run -d --name rhcos_image_cache -v /home/kni/rhcos_image_cache:/var/www/html -p 8080:8080/tcp registry.centos.org/centos/httpd-24-centos7:latest

Note: The above command creates a caching web server named rhcos_image_cache, which serves the images for deployment. The first image, ${RHCOS_PATH}${RHCOS_QEMU_URI}?sha256=${RHCOS_QEMU_SHA_UNCOMPRESSED}, is the bootstrapOSImage, and the second image, ${RHCOS_PATH}${RHCOS_OPENSTACK_URI}?sha256=${RHCOS_OPENSTACK_SHA_COMPRESSED}, is the clusterOSImage in the install-config.yaml file.

p. Generate the bootstrapOSImage and clusterOSImage configuration:

$ export BAREMETAL_IP=$(ip addr show dev baremetal | awk '/inet /{print $2}' | cut -d"/" -f1)

$ export RHCOS_OPENSTACK_SHA256=$(zcat /home/kni/rhcos_image_cache/${RHCOS_OPENSTACK_URI} | sha256sum | awk '{print $1}')

$ export RHCOS_QEMU_SHA256=$(zcat /home/kni/rhcos_image_cache/${RHCOS_QEMU_URI} | sha256sum | awk '{print $1}')

$ export CLUSTER_OS_IMAGE="http://${BAREMETAL_IP}:8080/${RHCOS_OPENSTACK_URI}?sha256=${RHCOS_OPENSTACK_SHA256}"

$ export BOOTSTRAP_OS_IMAGE="http://${BAREMETAL_IP}:8080/${RHCOS_QEMU_URI}?sha256=${RHCOS_QEMU_SHA256}"

$ echo "${RHCOS_OPENSTACK_SHA256} ${RHCOS_OPENSTACK_URI}" > /home/kni/rhcos_image_cache/rhcos-ootpa-latest.qcow2.md5sum

$ echo " bootstrapOSImage: ${BOOTSTRAP_OS_IMAGE}"

bootstrapOSImage: http://172.24.121.64:8080/rhcos-48.84.202109241901-0-qemu.x86_64.qcow2.gz?sha256=50377ba9c5cb92c649c7d9e31b508185241a3c204b34dd991fcb3cf0adc53983

$ echo " clusterOSImage: ${CLUSTER_OS_IMAGE}"

clusterOSImage: http://172.24.121.64:8080/rhcos-48.84.202109241901-0-openstack.x86_64.qcow2.gz?sha256=e0a1d8a99c5869150a56b8de475ea7952ca2fa3aacad7ca48533d1176df503ab
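Step p hashes the uncompressed images with `zcat | sha256sum`, and that value is what the `?sha256=` parameter of bootstrapOSImage and clusterOSImage carries. Before pasting the URLs into install-config.yaml, it may be worth checking the downloads against the hashes published in rhcos.json (steps k and l) so that a truncated download is caught early. The helpers below are a sketch and their names are hypothetical. Note that rhcos.json provides an uncompressed hash for the QEMU image but a compressed one for the OpenStack image, so the mode has to match the hash being compared.

```shell
#!/usr/bin/env bash
# uncompressed_sha256 FILE: SHA-256 of the decompressed contents of a .gz
# file (the same pipeline as step p).
uncompressed_sha256() {
  zcat "$1" | sha256sum | awk '{ print $1 }'
}

# compressed_sha256 FILE: SHA-256 of the file exactly as downloaded.
compressed_sha256() {
  sha256sum "$1" | awk '{ print $1 }'
}

# verify_image MODE FILE EXPECTED
#   MODE is "compressed" or "uncompressed"; returns 0 when the hashes match.
verify_image() {
  local mode=$1 file=$2 expected=$3 actual
  case "$mode" in
    compressed)   actual=$(compressed_sha256 "$file") ;;
    uncompressed) actual=$(uncompressed_sha256 "$file") ;;
    *) echo "unknown mode: $mode" >&2; return 2 ;;
  esac
  if [ "$actual" = "$expected" ]; then
    echo "OK   $file"
  else
    echo "FAIL $file: got $actual, expected $expected" >&2
    return 1
  fi
}

# Usage, from /home/kni/rhcos_image_cache after steps k to m:
#   verify_image uncompressed "${RHCOS_QEMU_URI}" "${RHCOS_QEMU_SHA_UNCOMPRESSED}"
#   verify_image compressed "${RHCOS_OPENSTACK_URI}" "${RHCOS_OPENSTACK_SHA_COMPRESSED}"
```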

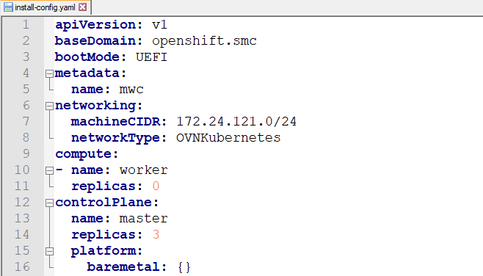

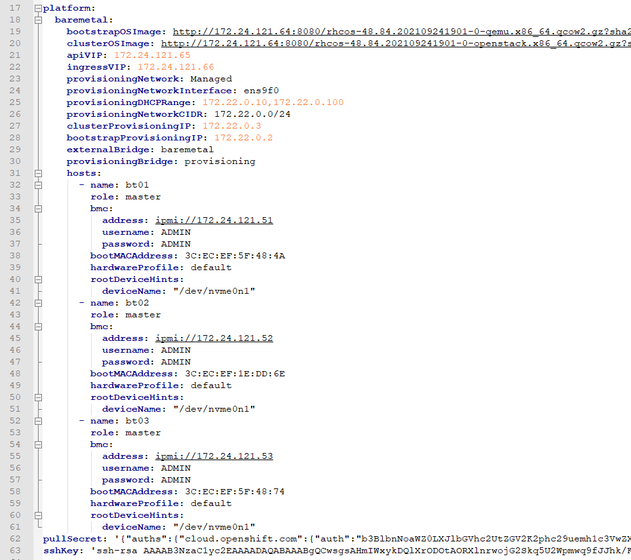

15. Prepare the configuration files

a. Prepare the config yaml file as install-config.yaml. In this deployment, Redfish Virtual Media was used on the baremetal network, so the “ipmi” prefix of the BMC address is replaced by “redfish-virtualmedia”.

b. Add provisioningNetwork: "Disabled" below the ingressVIP attribute

c. Add “disableCertificateVerification: True” below the password attribute, since our BMCs use self-signed SSL certificates

d. Set the bootMACAddress attribute to the MAC address of NIC1 (connected to the baremetal network)

e. Set the worker replicas attribute to 0 for the compact cluster, as shown in Figure 3.1

compute:
- name: worker
  replicas: 0

Figure 3.1 – Worker Replica Values = 0

Figure 3.2 – 3-Node Configuration in yaml File

f. Create a directory to store the cluster configurations.

$ mkdir ~/clusterconfigs

$ cp install-config.yaml ~/clusterconfigs
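Figures 3.1 and 3.2 are not reproduced here. To illustrate how steps a to e fit together, the hypothetical helper below writes an install-config.yaml containing only the fields this guide touches, with one `platform.baremetal.hosts` entry per `name bmc_ip mac` input line. The cluster name `mwc` and base domain `openshift.smc` are inferred from Table 1-1, the VIPs come from the same table, and the image URLs come from step 14p. The Redfish system path `/redfish/v1/Systems/1`, the BMC credentials and the MAC addresses are assumptions to check against your hardware. `pullSecret`, `sshKey` and `networking` are deliberately left out and must be added before running the installer.

```shell
#!/usr/bin/env bash
# host_entry NAME BMC_IP MAC: one platform.baremetal.hosts item for a master
# node managed through Redfish virtual media. BMC_USER/BMC_PASS must be set.
host_entry() {
  cat <<EOF
      - name: $1
        role: master
        bmc:
          address: redfish-virtualmedia://$2/redfish/v1/Systems/1
          username: ${BMC_USER}
          password: ${BMC_PASS}
          disableCertificateVerification: True
        bootMACAddress: $3
EOF
}

# write_install_config OUTFILE: hosts are read as "name bmc_ip mac" lines on
# stdin; BOOTSTRAP_OS_IMAGE and CLUSTER_OS_IMAGE come from step 14p.
write_install_config() {
  local out=$1 name bmc mac
  {
    cat <<EOF
apiVersion: v1
baseDomain: openshift.smc
metadata:
  name: mwc
compute:
- name: worker
  replicas: 0
controlPlane:
  name: master
  replicas: 3
  platform:
    baremetal: {}
platform:
  baremetal:
    apiVIP: 172.24.121.65
    ingressVIP: 172.24.121.66
    provisioningNetwork: "Disabled"
    bootstrapOSImage: ${BOOTSTRAP_OS_IMAGE}
    clusterOSImage: ${CLUSTER_OS_IMAGE}
    hosts:
EOF
    while read -r name bmc mac; do
      if [ -n "$name" ]; then host_entry "$name" "$bmc" "$mac"; fi
    done
  } > "$out"
}

# Usage (BMC IPs from Table 1-2; MACs are placeholders):
#   export BMC_USER=... BMC_PASS=...
#   write_install_config install-config.yaml <<'EOF'
#   bt01 172.24.121.51 aa:bb:cc:00:00:01
#   bt02 172.24.121.52 aa:bb:cc:00:00:02
#   bt03 172.24.121.53 aa:bb:cc:00:00:03
#   EOF
```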

16. Ensure all bare metal nodes are powered off prior to installing the OpenShift Container Platform cluster.

$ ipmitool -I lanplus -U <user> -P <password> -H <management-server-ip> power off

17. Remove old bootstrap resources if any are left over from a previous deployment attempt.

$ for i in $(sudo virsh list | tail -n +3 | grep bootstrap | awk '{print $2}'); do
sudo virsh destroy $i;
sudo virsh undefine $i;
sudo virsh vol-delete $i --pool $i;
sudo virsh vol-delete $i.ign --pool $i;
sudo virsh pool-destroy $i;
sudo virsh pool-undefine $i;
done

18. Clean up any previous deployment and prepare the environment:

$ openshift-baremetal-install --dir ~/clusterconfigs --log-level debug destroy cluster

$ cp install-config.yaml ~/clusterconfigs/install-config.yaml

19. Create a cluster with openshift-baremetal-install

$ openshift-baremetal-install --dir ~/clusterconfigs --log-level debug create cluster

20. If the installer times out, use the following command to wait for the bootstrap VM to complete its jobs. Run it from the iKVM console to avoid SSH session timeouts.

$ openshift-baremetal-install --dir ~/clusterconfigs wait-for bootstrap-complete

21. Once the installer completes, it shows a message like the following:

DEBUG Cluster is initialized

INFO Waiting up to 10m0s for the openshift-console route to be created...

DEBUG Route found in openshift-console namespace: console

DEBUG OpenShift console route is admitted

INFO Install complete!

INFO To access the cluster as the system:admin user when using 'oc', run 'export KUBECONFIG=/home/kni/clusterconfigs/auth/kubeconfig'

INFO Access the OpenShift web-console here: https://console-openshift-console.apps.compact.openshift.smc

INFO Login to the console with user: "kubeadmin", and password: "XXXXX-yyyyy-ZZZZZ-aaaaa"

DEBUG Time elapsed per stage:

DEBUG Cluster Operators: 18m0s

INFO Time elapsed: 18m0s

Figure 3.3 – Red Hat OpenShift 4.8 IPI Network Overview

Post-Installation Configuration

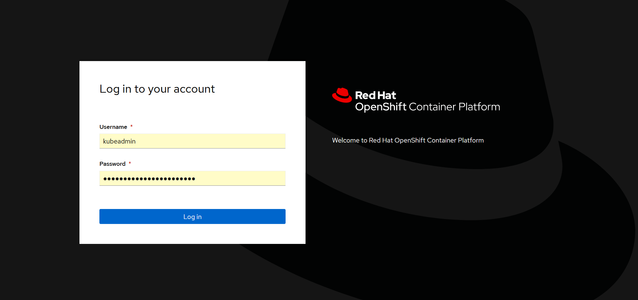

1. Log in to the Red Hat OpenShift web console with the kubeadmin credentials

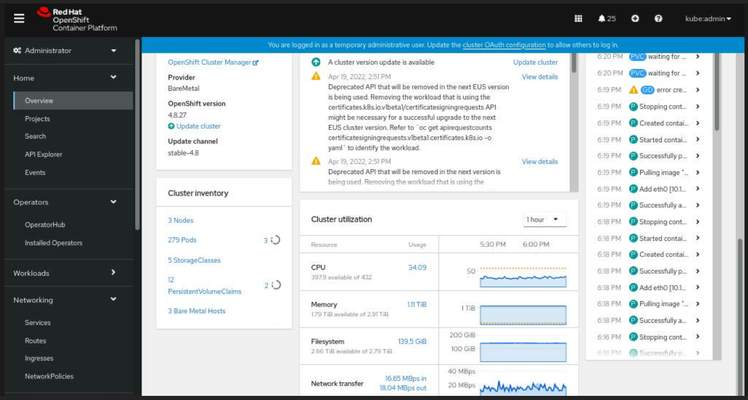

Figure 4.1 - Red Hat OpenShift Web Console View

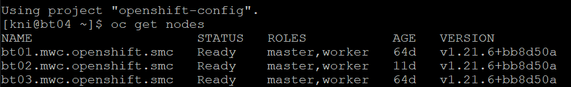

Figure 4.2 - Node Roles of Red Hat OpenShift Cluster

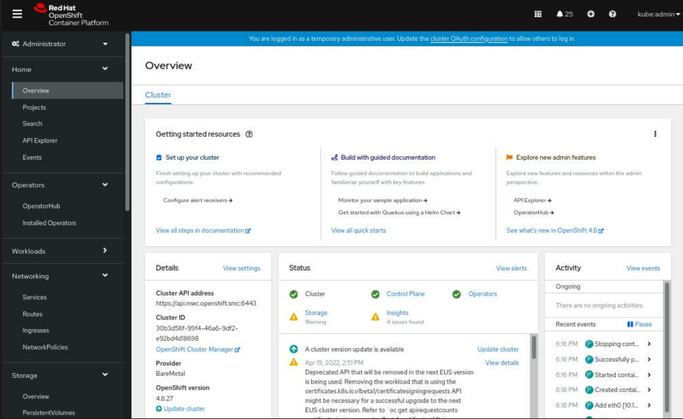

Figure 4.3 - Red Hat OpenShift Administrator Overview

Figure 4.4 - Red Hat OpenShift Cluster Inventory

2. Install httpd-tools and create an htpasswd-ocp file to use as the HTPasswd identity provider in the cluster OAuth configuration:

$ sudo dnf -y install httpd-tools

$ htpasswd -c ~/installer/htpasswd-ocp twinuser

3. Add Identity Provider – HTPasswd

Figure 4.5 - Add Identity Provider

4. Upload htpasswd-ocp

Figure 4.6 - Upload Password File

5. Grant the cluster-admin role to the users:

$ oc adm policy add-cluster-role-to-user cluster-admin twinuser

$ oc adm policy add-cluster-role-to-user cluster-admin redisuser

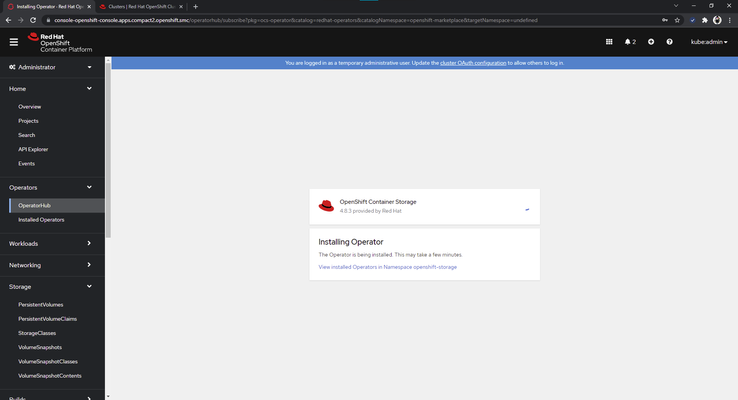

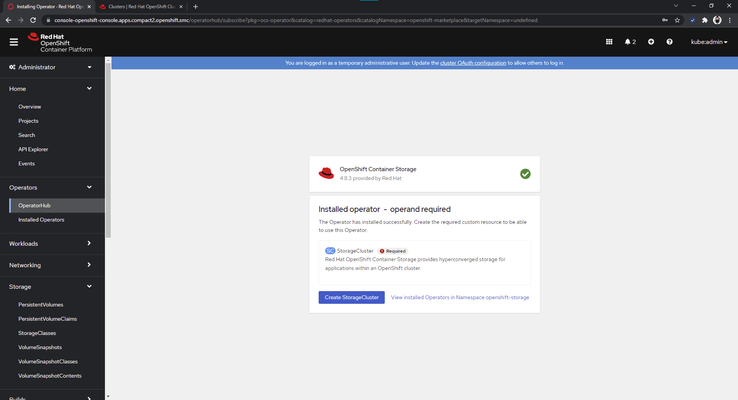

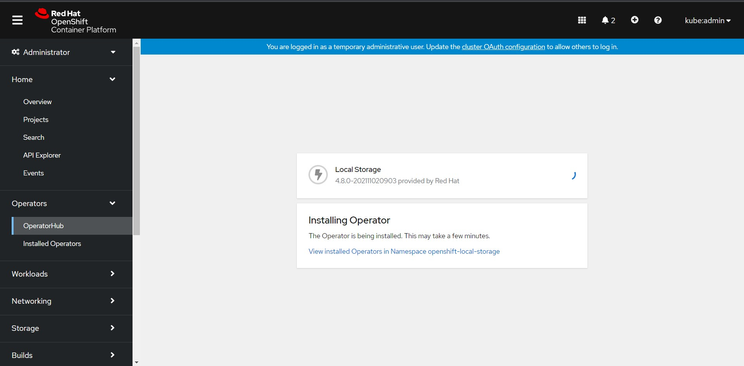

6. Install Red Hat OpenShift Container Storage Operator*:

*Note: Test configuration used OpenShift Container Storage version 4.8.9

Figure 4.7 - Installing Container Storage Operator

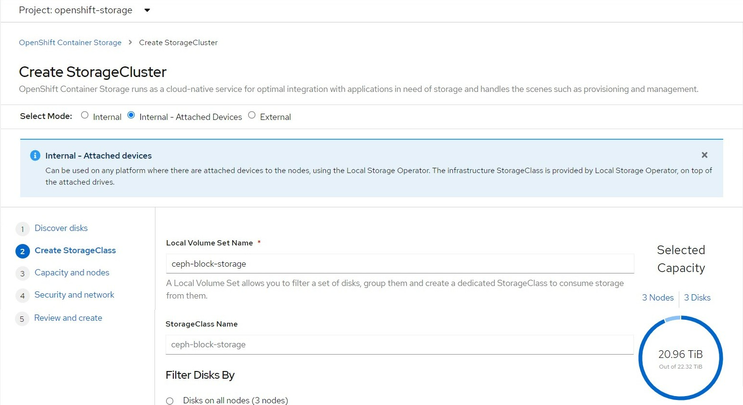

Figure 4.8 - Installing Hyperconverged Storage

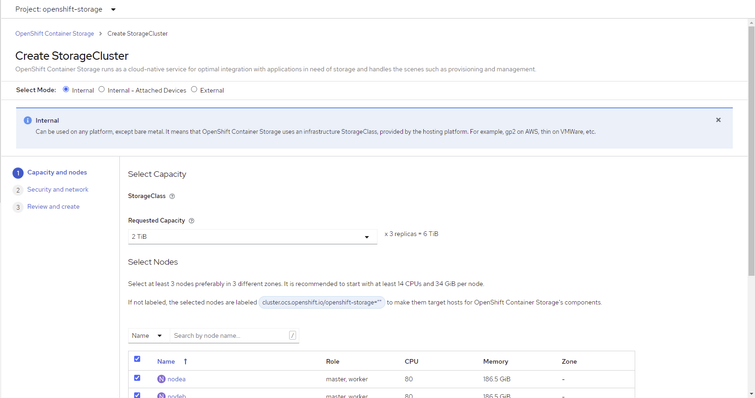

Figure 4.9 - Create Storage Cluster

7. Install LocalStorage Operator for Local Storage

Figure 4.10 - Create Storage Cluster

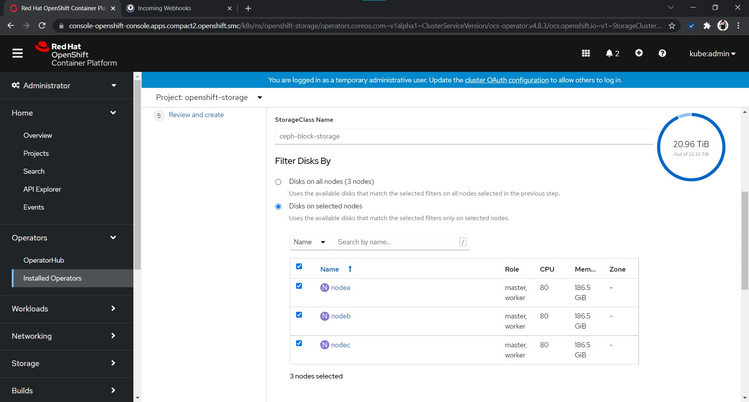

8. Create a StorageCluster with the “Internal – Attached Devices” option

Figure 4.11 - Choosing Internal – Attached Devices

Figure 4.12 - Choosing Disks on Selected Nodes

Figure 4.13 - Choosing Disks on Selected Nodes Continued

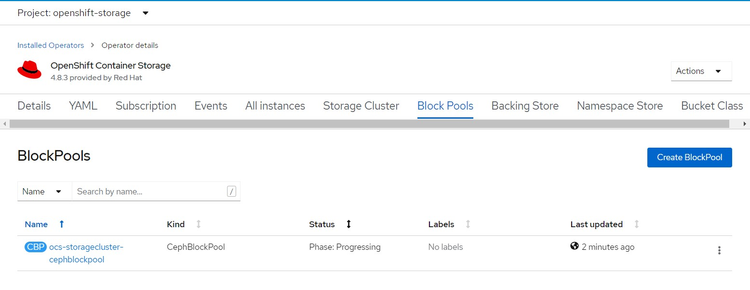

9. The Red Hat OpenShift Data Foundation 4.8.3 operator creates the Block Pools

Figure 4.14 - Block Pools Creation

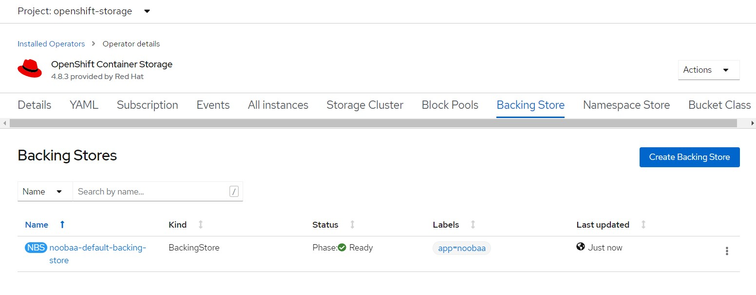

10. The Red Hat OpenShift Data Foundation 4.8.3 operator creates the Backing Store for the Object Storage service

Figure 4.15 - Backing Store Creation

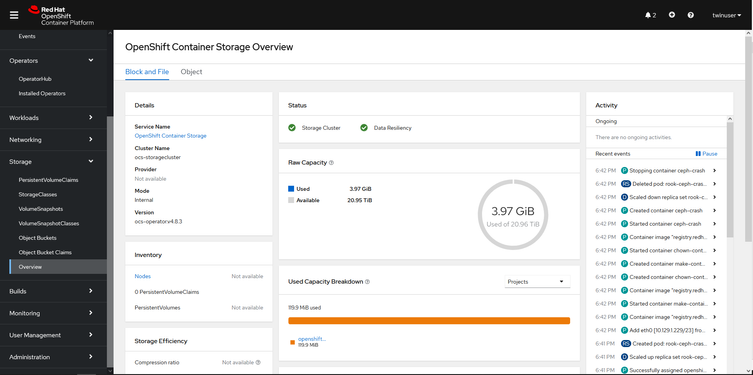

11. Check Red Hat OpenShift Data Foundation Overview

Figure 4.16 - Red Hat OpenShift Data Foundation: Block and File View

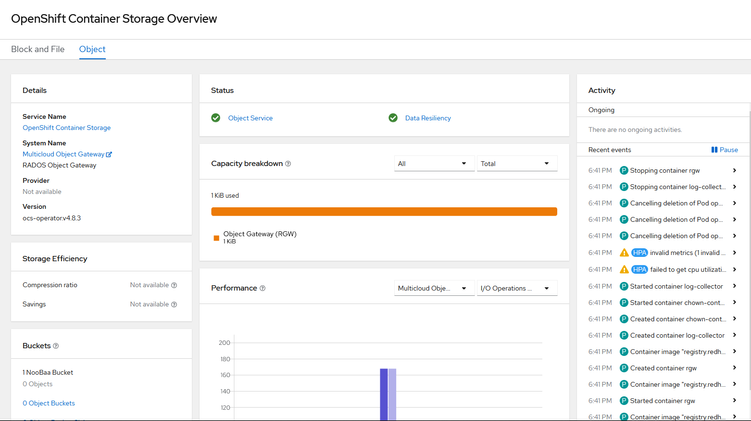

12. Check Object Service

Figure 4.17 - Red Hat OpenShift Data Foundation: Object View
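Steps 2 to 4 configure the HTPasswd identity provider through the web console. The same result can be reached from the CLI by storing the htpasswd file in a secret in the `openshift-config` namespace and referencing it from the cluster-wide `OAuth` resource named `cluster`. The sketch below prints such a manifest; the secret name `htpass-secret` and provider name `htpasswd_provider` are illustrative choices. Because step 5 also grants a role to redisuser, that user has to be added to the same file, without `-c`, which would recreate the file.

```shell
#!/usr/bin/env bash
# oauth_htpasswd_manifest [SECRET_NAME]: print the cluster OAuth resource
# with one HTPasswd identity provider backed by SECRET_NAME, which must live
# in the openshift-config namespace and hold the file under the key "htpasswd".
oauth_htpasswd_manifest() {
  local secret=${1:-htpass-secret}
  cat <<EOF
apiVersion: config.openshift.io/v1
kind: OAuth
metadata:
  name: cluster
spec:
  identityProviders:
  - name: htpasswd_provider
    mappingMethod: claim
    type: HTPasswd
    htpasswd:
      fileData:
        name: ${secret}
EOF
}

# Usage (as kubeadmin):
#   htpasswd ~/installer/htpasswd-ocp redisuser   # append a user; no -c
#   oc create secret generic htpass-secret \
#     --from-file=htpasswd="$HOME/installer/htpasswd-ocp" -n openshift-config
#   oauth_htpasswd_manifest htpass-secret | oc apply -f -
```

Applying the manifest replaces the `identityProviders` list of the `cluster` OAuth object, so any providers already configured there need to be included as well.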

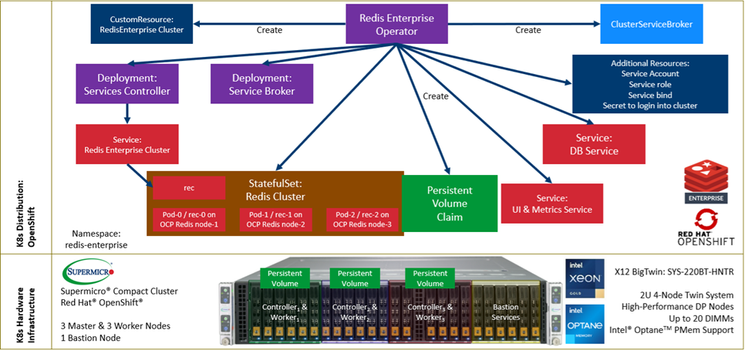

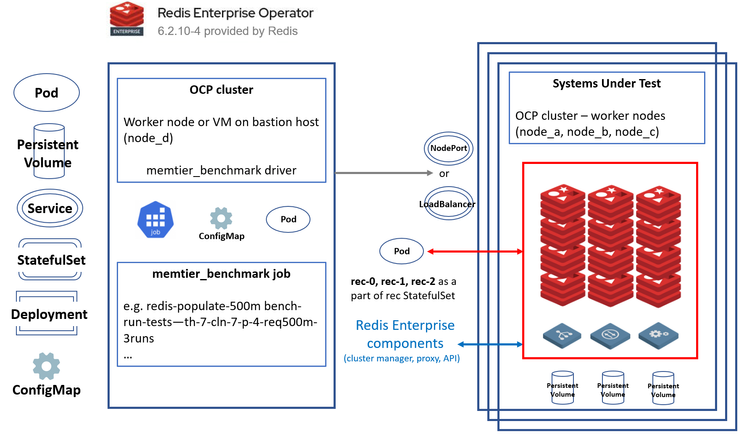

Redis Cluster Topology

1. Red Hat OpenShift Compact Cluster Topology

Figure 5.1 - Redis Cluster Topology on Supermicro Compact Cluster

Figure 5.2 - Kubernetes Abstraction for Test Cluster

2. Redis Enterprise Operator and Redis Enterprise Cluster objects/entities:

[redisuser@redisbench ~]$ oc get all -l name=redis-enterprise-operator

NAME READY STATUS RESTARTS AGE

pod/redis-enterprise-operator-7c4fdb7987-4drvv 2/2 Running 0 43h

NAME DESIRED CURRENT READY AGE

replicaset.apps/redis-enterprise-operator-7c4fdb7987 1 1 1 2d1h

[redisuser@redisbench ~]$ oc get all -l app=redis-enterprise

NAME READY STATUS RESTARTS AGE

pod/rec-0 2/2 Running 0 21h

pod/rec-1 2/2 Running 0 21h

pod/rec-2 2/2 Running 0 21h

pod/rec-services-rigger-7bfc6bb7f8-szqd5 1/1 Running 0 21h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/rec ClusterIP None <none> 9443/TCP,8001/TCP,8070/TCP 21h

service/rec-ui ClusterIP 172.30.190.185 <none> 8443/TCP 21h

service/redb-headless ClusterIP None <none> 13062/TCP 21h

service/redb-load-balancer LoadBalancer 172.30.54.186 <pending> 13062:30056/TCP 21h

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/rec-services-rigger 1/1 1 1 21h

NAME DESIRED CURRENT READY AGE

replicaset.apps/rec-services-rigger-7bfc6bb7f8 1 1 1 21h

NAME READY AGE

statefulset.apps/rec 3/3 21h

NAME HOST/PORT PATH SERVICES PORT TERMINATION WILDCARD

route.route.openshift.io/rec-ui rec-ui-redis-ent3.apps.mwc.openshift.smc rec-ui ui passthrough/Redirect None

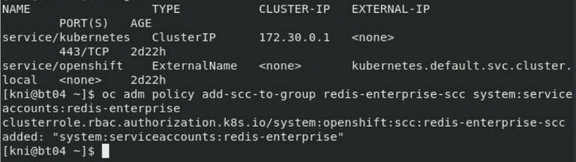

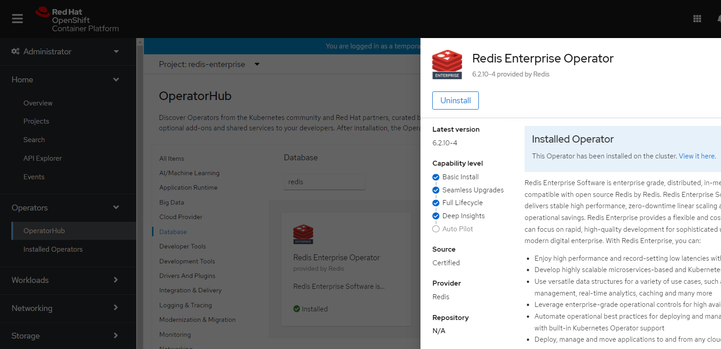

Install Redis Enterprise Operator and Configure Redis Cluster

1. Install Redis Enterprise Operator from OperatorHub Marketplace

a. Select or create a new project (namespace), e.g.:

$ oc create namespace redis-enterprise

b. If the name of the Redis Enterprise Cluster in the spec will be changed from the default one, run:

$ oc adm policy add-scc-to-group redis-enterprise-scc system:serviceaccounts:redis-enterprise

Figure 6.1 - Install Redis Enterprise Operator

NOTE: <redis-enterprise> here is the actual name of the project/namespace.

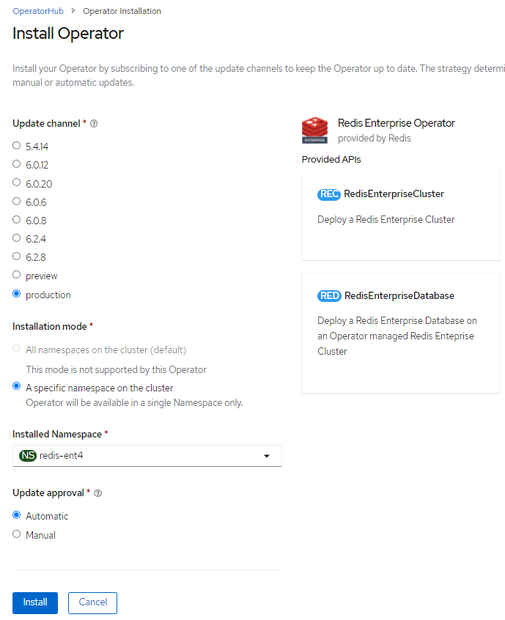

c. In the Red Hat OpenShift web console, go to Operators → OperatorHub, search for “Redis Enterprise”, select Redis Enterprise Operator and click the “Install” button

Figure 6.2 - OperatorHub

d. Select the installation mode and the update-related options:

Figure 6.3 - Install Operator

2. Set up the Redis Enterprise Cluster

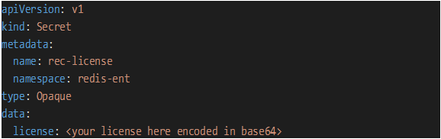

a) Prepare a Red Hat OpenShift secret containing the Redis Enterprise license

license.yml:

Figure 6.4 - Redis Enterprise License

b) Apply the secret:

$ oc apply -f license.yml

c) Prepare the YAML file for the RedisEnterpriseCluster (cluster.yml)

For example, the RedisEnterpriseCluster manifest may look like this:

Figure 6.5 - RedisEnterpriseCluster yaml file

Note: the value of redisEnterpriseNodeResources.limits.memory contributes to the calculation of the total possible dataset size (the maximum size of a single Redis database).

d) Create RedisEnterpriseCluster

$ oc apply -f cluster.yml

e) Create the rec-ui route to get browser access to the Redis Enterprise UI

$ oc create route passthrough <name e.g. rec-ui> --service=rec-ui --insecure-policy=redirect --

Output:

route.route.openshift.io/rec-ui created

To get the URL, run ‘oc get routes’ and look for the rec-ui entry.

Make sure to prepend https:// to the address in the HOST/PORT column.

Note: Credentials – user and password are kept within ‘rec’ secret
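Figures 6.4 and 6.5 are not reproduced here. The sketch below prints a RedisEnterpriseCluster manifest for step c and wraps the URL and credential lookups from step e. Field names follow the operator's `app.redislabs.com` API as we understand it, but treat them as assumptions and compare them with `oc explain redisenterprisecluster.spec`: in particular `licenseSecretName` with the hypothetical secret name `redis-enterprise-license`, and the ODF storage class `ocs-storagecluster-ceph-rbd`. The `username` and `password` keys of the `rec` secret are likewise assumed.

```shell
#!/usr/bin/env bash
# rec_manifest NAME NODES MEMORY CPU: print a RedisEnterpriseCluster manifest.
# MEMORY (e.g. 100Gi) is the per-pod limit that bounds possible database size.
# Assumed: the license secret name and the ODF storage class below.
rec_manifest() {
  local name=$1 nodes=$2 mem=$3 cpu=$4
  cat <<EOF
apiVersion: app.redislabs.com/v1
kind: RedisEnterpriseCluster
metadata:
  name: ${name}
spec:
  nodes: ${nodes}
  licenseSecretName: redis-enterprise-license
  persistentSpec:
    enabled: true
    storageClassName: ocs-storagecluster-ceph-rbd
  redisEnterpriseNodeResources:
    limits:
      cpu: "${cpu}"
      memory: ${mem}
    requests:
      cpu: "${cpu}"
      memory: ${mem}
EOF
}

# rec_ui_url NAMESPACE: https URL of the rec-ui route created in step e.
rec_ui_url() {
  local host
  host=$(oc get route rec-ui -n "$1" -o jsonpath='{.spec.host}') || return 1
  printf 'https://%s\n' "$host"
}

# rec_credentials NAMESPACE [CLUSTER]: print "user password" decoded from the
# secret that carries the cluster's name (rec by default).
rec_credentials() {
  local ns=$1 rec=${2:-rec} user pass
  user=$(oc get secret "$rec" -n "$ns" -o jsonpath='{.data.username}' | base64 -d)
  pass=$(oc get secret "$rec" -n "$ns" -o jsonpath='{.data.password}' | base64 -d)
  printf '%s %s\n' "$user" "$pass"
}

# Usage:
#   rec_manifest rec 3 100Gi 16 > cluster.yml && oc apply -f cluster.yml
#   rec_ui_url redis-enterprise
#   rec_credentials redis-enterprise rec
```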

Optimize Redis Enterprise Cluster and Create Database

- Tune the cluster using rladmin commands from one of the pods, e.g. rec-0 (oc rsh po/rec-0):

a. Increase the proxy thread count to better handle the expected load

$ rladmin tune proxy all max_threads 32

Configuring proxies:

- proxy:1,2,3: ok

$ rladmin tune proxy all threads 32

Configuring proxies:

- proxy:1,2,3: ok

b. Change default shard placement policy from dense to sparse

$ rladmin tune cluster default_shards_placement sparse

Finished successfully

c. Set default Redis version to 6.2

$ rladmin tune cluster redis_upgrade_policy latest; rladmin tune cluster default_redis_version 6.2

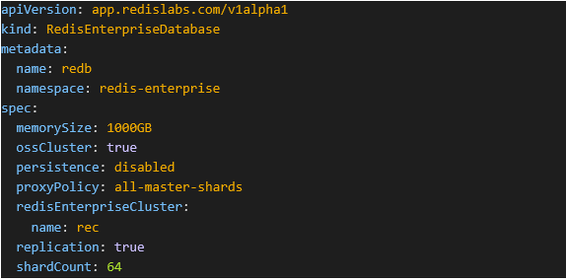

- Set up the Redis Enterprise Database (REDB)

a) Create db.yml

Figure 7 - Setup Redis Enterprise Database yaml file

Note: when replication is set to true, memorySize is the limit for the sum of primary and replica shards, so the total size across all primary shards is memorySize/2 (the maximum dataset you can store in the database is 50% of memorySize).

b) Apply created manifest for the RedisEnterpriseDatabase

$ oc apply -f db.yml

Note: You can create Redis Enterprise resources through the Redis Enterprise Operator in the OpenShift web console, or use oc with the YAML files provided here as templates.

c) If db.yml does not contain the entry “ossCluster: true”, tune the database to set OSS mode. To enable the OSS Cluster API for the database, run:

$ rladmin tune db <database name or ID> oss_cluster enabled

Tuning database: BDB oss_cluster set to enabled.

Finished successfully
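Figure 7 is not reproduced here. The sketch below pairs a RedisEnterpriseDatabase manifest that sets `ossCluster: true` up front (which makes step c unnecessary) with the sizing rule from the note above. The database name, shard count and the `app.redislabs.com/v1alpha1` API version are assumptions; `oc explain redisenterprisedatabase.spec` shows the fields your operator version accepts. With replication enabled, every primary shard has a replica of the same size, so a `memorySize` of 100GB leaves room for about 50GB of data.

```shell
#!/usr/bin/env bash
# max_dataset_gb MEMORY_GB REPLICATION: largest dataset (GB) that fits in
# memorySize. With replication, primary and replica shards share memorySize,
# so only half of it holds unique data.
max_dataset_gb() {
  awk -v m="$1" -v r="$2" 'BEGIN { printf "%g\n", (r == "true") ? m / 2 : m }'
}

# redb_manifest NAME MEMORY_GB SHARDS CLUSTER: print a RedisEnterpriseDatabase
# with replication and the OSS Cluster API enabled.
redb_manifest() {
  local name=$1 mem=$2 shards=$3 cluster=$4
  cat <<EOF
apiVersion: app.redislabs.com/v1alpha1
kind: RedisEnterpriseDatabase
metadata:
  name: ${name}
spec:
  redisEnterpriseCluster:
    name: ${cluster}
  memorySize: ${mem}GB
  shardCount: ${shards}
  replication: true
  ossCluster: true
EOF
}

# Usage:
#   max_dataset_gb 100 true        # prints 50
#   redb_manifest redb 100 12 rec > db.yml && oc apply -f db.yml
```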

Set Up memtier_benchmark Jobs

1. Set up the environment for tests

Redis database population and load tests are executed from one of the OCP cluster nodes as Kubernetes Jobs using the container image redislabs/memtier_benchmark:edge.

The Jobs for database population and benchmark tests use YAML templates processed by kustomize (oc create -k <directory with templates>).

To pin a benchmark job to a given node, the node needs to be labeled:

$ oc label node bt03.mwc.openshift.smc node=redis-client

node/bt03.mwc.openshift.smc labeled

2. Set up Ansible and Docker on the bastion host to run the playbooks and parse the memtier_benchmark results

Make sure Ansible and Docker are available on the bastion host, and install them if they are not there yet.

Before running the provided Ansible playbooks:

- Prepare the inventory file

To create an `inventory` file, copy the content of the `inventory.example` file and replace the example hostnames for the `[computes]`, `[utility]` and `[clients]` groups with your own hostnames or IPs. The utility host corresponds to the bastion host/Ansible controller VM.

* The `[computes]` group specifies k8s nodes, where redis-cluster(s) instances are deployed.

* The `[utility]` group specifies a node, which runs the playbooks (Ansible controller). The node can reside outside of the k8s cluster, but it needs to be able to communicate with the cluster.

* The `[clients]` group specifies k8s nodes, which run memtier_benchmark instances.

- Change default values of environment variables

There are two ways to change default values of environment variables:

* by passing new values as command-line arguments when invoking playbooks:

$ <variable1>=<value1> <variable2>=<value2> ansible-playbook -i inventory <playbook_name>.yml

* by modifying the content of the `vars/*` files. To change a value, find the corresponding variable name (named according to the convention `<variable name>_default`) in `vars/vars.yml` and overwrite its value. A selection of the environment variables is described in the [Environment variables (selection)](#environment-variables-selection) section.

For example, below is the section with the memtier_benchmark-specific parameters:

threads_default: 7

clients_default: 7

datasize_default: 100

pipeline_default: 4

requests_default: 500000000

run_count_default: 3

test_time_default: 300

ratio_default: "1:4"

key_pattern_default: "G:G"

- Prepare the bastion host (Install required python packages & required Ansible collections)

$ ansible-playbook -i inventory setup.yml

Run it from the directory containing the Ansible playbooks.

3. Populate Redis database

$ run_id=<run_identifier> ansible-playbook -i inventory populate_ocp.yml

4. Run benchmarks

$ run_id=<run_identifier> ansible-playbook -i inventory run_benchmark_ocp.yml

5. Clean (flush) Redis database

$ ansible-playbook -i inventory flushdb.yml
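The playbooks build the memtier_benchmark command line from the `vars/vars.yml` defaults listed above. To make that mapping visible, the helper below prints an equivalent argument list, one argument per line. It is a sketch: the flags are standard memtier_benchmark options, but the exact invocation inside `run_benchmark_ocp.yml` may differ, and reading overrides from environment variables named like the defaults without the `_default` suffix is a hypothetical convention. `--cluster-mode` is included because the database uses the OSS Cluster API, and `requests_default` is not used because this sketch runs a time-based test. With the defaults, each memtier_benchmark process simulates 7 threads × 7 clients = 49 clients, each pipelining up to 4 requests.

```shell
#!/usr/bin/env bash
# memtier_args HOST PORT: print memtier_benchmark arguments, one per line,
# using the vars/vars.yml defaults unless variables with the same names
# (without the _default suffix) are set in the environment.
memtier_args() {
  local host=$1 port=$2
  printf '%s\n' \
    "--server=${host}" "--port=${port}" --cluster-mode \
    "--threads=${threads:-7}" "--clients=${clients:-7}" \
    "--data-size=${datasize:-100}" "--pipeline=${pipeline:-4}" \
    "--ratio=${ratio:-1:4}" "--key-pattern=${key_pattern:-G:G}" \
    "--test-time=${test_time:-300}" "--run-count=${run_count:-3}" \
    --hide-histogram
}

# Usage from a client pod, against the database service shown earlier:
#   mapfile -t args < <(memtier_args redb-headless 13062)
#   memtier_benchmark "${args[@]}"
```

If the database is password-protected, memtier_benchmark's `--authenticate` option has to be added as well.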

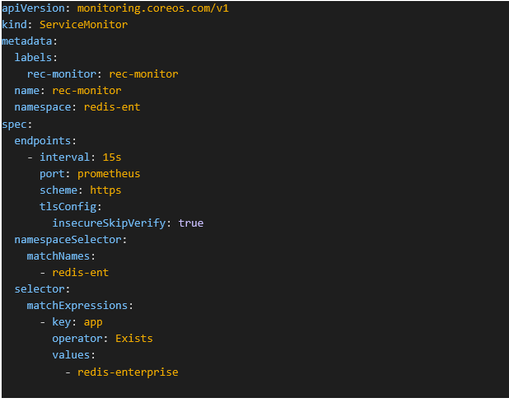

Telemetry – Prometheus and Grafana

1. Navigate to OperatorHub and install the community Prometheus Operator

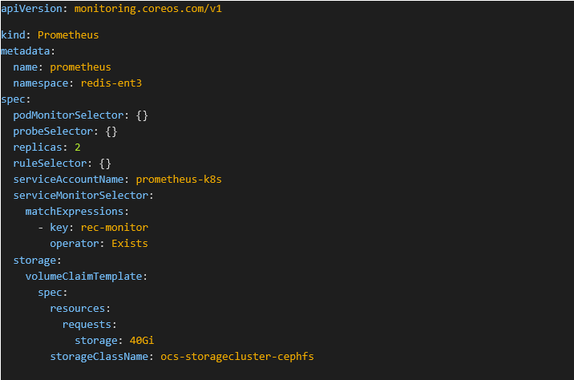

2. Set up a Prometheus ServiceMonitor to collect the data exported by the redis-exporter included in the Redis Enterprise cluster

Figure 8.1 - Setup Redis Monitor

3. Set up Prometheus instance, e.g.

Figure 8.2 - Setup Prometheus Instance

4. Navigate to OperatorHub and install community-powered Grafana operator.

5. Create Grafana instance

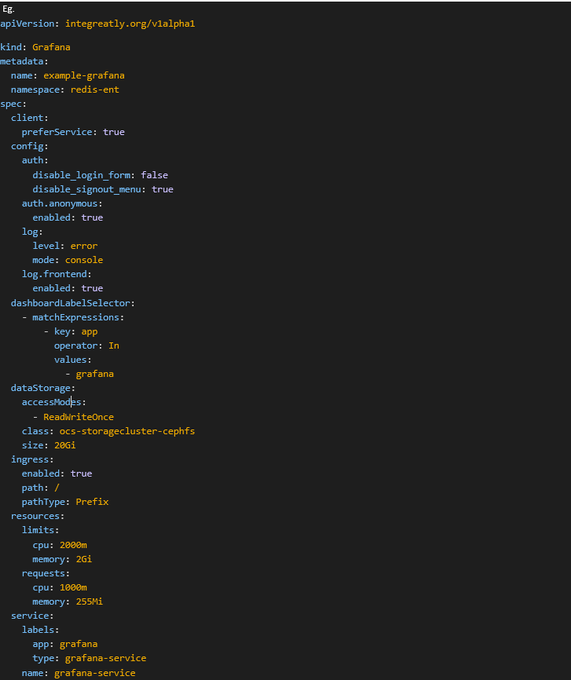

Figure 8.3 - Setup Grafana Instance

6. Add data sources

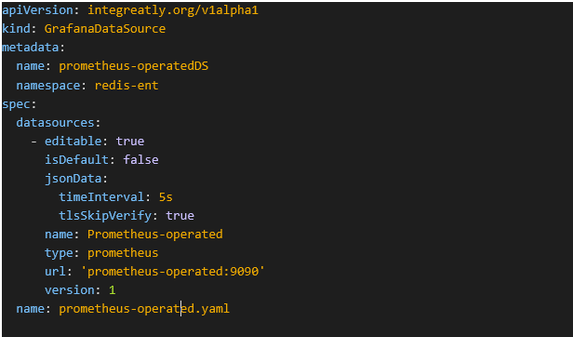

a) Add the Prometheus instance created in step 3

Figure 8.4 - Add Data Source from Prometheus Instance

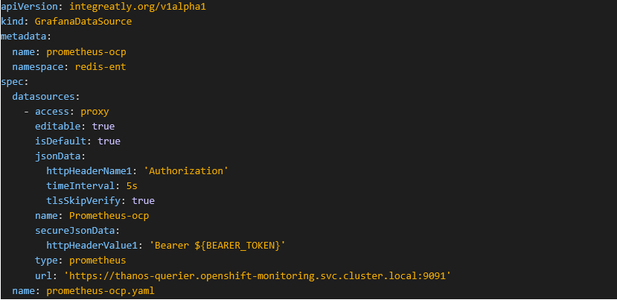

b) Add built-in Prometheus

Grant the cluster-monitoring-view cluster role to the service account used by the custom Grafana instance:

$ oc adm policy add-cluster-role-to-user cluster-monitoring-view -z grafana-serviceaccount

c) Create the GrafanaDataSource object.

Figure 8.5 - Create GrafanaDataSource Object

Substitute ${BEARER_TOKEN} with the token retrieved by this command:

$ oc serviceaccounts get-token grafana-serviceaccount -n redis-enterprise

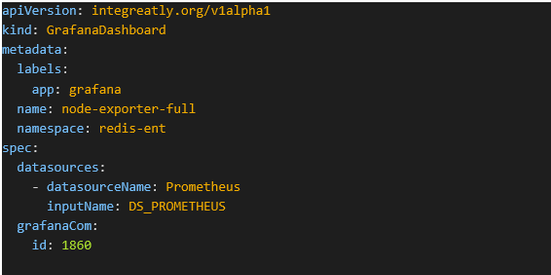

7. You can add Grafana dashboards either through a Grafana Operator custom resource (GrafanaDashboard) or in the Grafana GUI exposed by the default grafana-route, after logging in with the credentials stored in the ‘grafana-admin-credentials’ secret.

Figure 8.6 - Add Grafana Dashboard

8. Add a Grafana API key for posting annotations.

a) Create it from the command line:

$ grafana_cred=`oc get secret grafana-admin-credentials -o go-template --template="{{.data.GF_SECURITY_ADMIN_PASSWORD|base64decode}}"`

$ curl --insecure -X POST -H "Content-Type: application/json" -d '{"name":"annotation_apikey", "role": "Editor"}' https://admin:$grafana_cred@grafana-route-redis-ent.apps.mwc.openshift.smc/api/auth/keys

Output:

{"id":2,"name":"annotation_apikey","key":"eyJrIjoiaFJRU2s4TDJTdVZJSkUwb1o1dDJNQTlrM1VVaTU3THIiLCJuIjoiYW5ub3RhdGlvbl9hcGlrZXkyIiwiaWQiOjF9"}

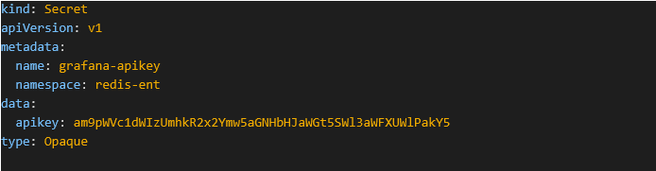

b) Add the key to the secret

Figure 8.7 - Add Grafana apikey to Post Annotations
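Figure 8.1 is not reproduced here. As a rough sketch of step 2, the helper below prints a ServiceMonitor that selects the Redis Enterprise services by the `app: redis-enterprise` label (the label used with `oc get all` earlier) and scrapes their metrics endpoint over HTTPS. The port name `prometheus` is an assumption: the `rec` service listed earlier exposes 8070/TCP, and `oc get svc rec -o jsonpath='{.spec.ports}'` shows the name to use. The Prometheus instance from step 3 also has to select this ServiceMonitor through its `serviceMonitorSelector`.

```shell
#!/usr/bin/env bash
# servicemonitor_manifest NAMESPACE [PORT_NAME]: print a ServiceMonitor for
# the Prometheus Operator that scrapes Redis Enterprise metrics every 30s.
servicemonitor_manifest() {
  local ns=$1 port=${2:-prometheus}
  cat <<EOF
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: redis-enterprise
  namespace: ${ns}
spec:
  selector:
    matchLabels:
      app: redis-enterprise
  namespaceSelector:
    matchNames:
    - ${ns}
  endpoints:
  - port: ${port}
    scheme: https
    interval: 30s
    tlsConfig:
      insecureSkipVerify: true  # the cluster uses a self-signed certificate
EOF
}

# Usage:
#   servicemonitor_manifest redis-enterprise | oc apply -f -
```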

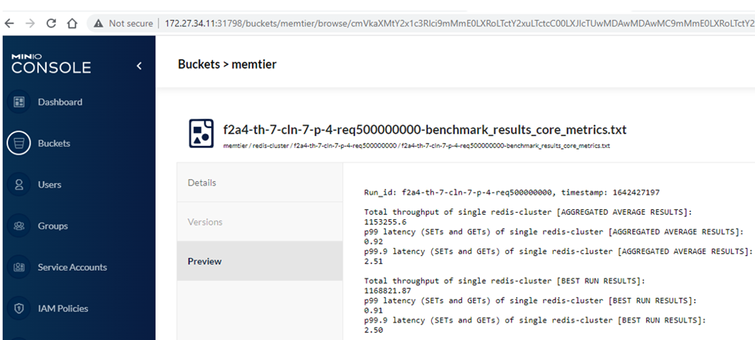

Memtier Benchmark Results

Benchmark logs and run metadata are stored in the `{{ logdir_default }}/{{ log_dir_structure }}` directory on the bastion host (variable values are set in `vars/vars.yml`).

The benchmark results are also stored in the Red Hat OpenShift cluster as long as the Kubernetes jobs are not cleaned up. The playbook exports them to local files on the bastion host VM and combines the parsed results with additional metadata, such as Grafana URLs for the Redis and OS-level metrics dashboards.

Example results:

$ cat def-vars15-benchmark_results_core_metrics.txt

Run_id: def-vars15, timestamp: 1641390131

Total throughput of single redis-cluster [AGGREGATED AVERAGE RESULTS]:

1187561.27

p99 latency (SETs and GETs) of single redis-cluster [AGGREGATED AVERAGE RESULTS]:

0.94

Total throughput of single redis-cluster [BEST RUN RESULTS]:

1219660.06

p99 latency (SETs and GETs) of single redis-cluster [BEST RUN RESULTS]:

0.95
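Because the core-metrics file puts each value on the line after its label, it is easy to turn into `key value` pairs for spreadsheets or for comparing runs. The awk-based helper below is a sketch that assumes exactly the label wording shown in the example; the function name is hypothetical, and the latency keys carry an `_ms` suffix because memtier_benchmark reports latency in milliseconds.

```shell
#!/usr/bin/env bash
# summarize_results: read a *_benchmark_results_core_metrics.txt file on
# stdin and print one "key value" line per metric. Each value sits on the
# first non-blank line after its label.
summarize_results() {
  awk '
    /^Run_id:/ { sub(/^Run_id: /, ""); sub(/,.*/, ""); print "run_id", $0; next }
    /^Total throughput/ && /AGGREGATED/ { key = "avg_ops_per_sec"; next }
    /^Total throughput/ && /BEST RUN/   { key = "best_ops_per_sec"; next }
    /^p99 latency/ && /AGGREGATED/      { key = "avg_p99_ms"; next }
    /^p99 latency/ && /BEST RUN/        { key = "best_p99_ms"; next }
    key != "" && NF { print key, $1; key = "" }
  '
}

# Usage on the bastion host:
#   summarize_results < def-vars15-benchmark_results_core_metrics.txt
```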

#Cluster Overview:

#Cluster Databases

#Cluster Nodes

#Redis

#OCP (k8s) cluster nodes

Storing results on S3 MinIO:

To store the results in MinIO, execute the benchmark with --extra-vars "@vars/s3_vars.yml".

After setting up a MinIO deployment (e.g. via the Bitnami Helm chart) and populating the S3 variables in the vars/s3_vars.yml file, you can open the MinIO console in a web browser to access the memtier_benchmark results.

Steps:

1. Install Helm on the Ansible host

Source: https://helm.sh/docs/intro/install/#from-script

2. Set up the Helm repository

$ helm repo add bitnami https://charts.bitnami.com/bitnami

Source: https://bitnami.com/stack/minio/helm

3. Install MinIO

$ helm install minio-via-helm --set persistence.storageClass=ocs-storagecluster-cephfs --set service.type=NodePort bitnami/minio

To get your credentials run:

export ROOT_USER=$(kubectl get secret --namespace redis-enterprise minio-via-helm -o jsonpath="{.data.root-user}" | base64 --decode)

export ROOT_PASSWORD=$(kubectl get secret --namespace redis-enterprise minio-via-helm -o jsonpath="{.data.root-password}" | base64 --decode)

To access the MinIO web UI:

- Get the MinIO® URL:

export NODE_PORT=$(kubectl get --namespace redis-enterprise -o jsonpath="{.spec.ports[0].nodePort}" services minio-via-helm)

export NODE_IP=$(kubectl get nodes --namespace redis-enterprise -o jsonpath="{.items[0].status.addresses[0].address}")

echo "minio® web URL: http://$NODE_IP:$NODE_PORT/minio"

Example of MinIO Console: http://172.24.121.61:32645/buckets

Figure 9 - Storing Results on S3 MinIO

Appendix

List of Hardware Used in Compact Cluster

Base configuration for Red Hat OpenShift & Redis Enterprise Cluster:

System: SYS-220BT-HNTR

CPU: Dual Intel Xeon Platinum 8352V processors (3rd Gen Intel Xeon Scalable)

Network Controller Cards: Intel E810 series

Data Drive: 2x Intel P5510 3.84TB NVMe PCI-E Gen4 Drives

Boot Drives: 2x M.2 NVMe Drives with HW RAID 1 support via AOC-SMG3-2M2-B

SSE-C3632S(R): 100GbE QSFP28 Cumulus-interfaced switch (Bare-Metal Network)

SSE-G3648B(R): 1/10GbE Base-T Cumulus-interfaced switch (BMC Management Switch)

Test Plan for Red Hat OpenShift & Redis Enterprise

Use Red Hat OpenShift for orchestration and container technologies to package and support more memory capacity and multi-tenancy (additional shards, more databases) with Intel PMem 200 Series on a 3-node X12 Supermicro BigTwin compact cluster.

For systems with 20 DIMMs, one DIMM per channel (1DPC) is typically recommended for optimal memory performance across the 8 memory channels per socket. Configuration 1 was used as a baseline to measure optimal memory performance with memtier_benchmark 1.3.0 (edge). Configuration 2 was used to measure memory performance at twice the capacity, using DDR4 on 4 memory channels and PMem on the other 4 memory channels per socket.

| Config | Memory Configuration (per node) | PMem Mode | Usable Memory | Container Type |
|---|---|---|---|---|
| 1 | 512GB: 16x 32GB DDR4 | N/A | 1.5TB across 3 nodes | Bare-Metal on Red Hat OpenShift |
| 2 | 1024GB: 8x 32GB DDR4-3200 + 8x 128GB PMem-3200 (PMem to DRAM 4:1) | Memory Mode | 3TB across 3 nodes | Bare-Metal on Red Hat OpenShift |

Table 2. Config Info
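The Usable Memory column follows directly from the DIMM population. In Memory Mode the DRAM serves as a cache in front of PMem and is not exposed as system memory, so only the PMem capacity counts. The short calculation below reproduces Table 2, treating 1 TB as 1024 GB as the table's figures imply; it is a worked check, not part of the test tooling.

```shell
#!/usr/bin/env bash
# usable_gb DIMM_COUNT DIMM_GB: capacity contributed by one DIMM type.
usable_gb() { echo $(( $1 * $2 )); }

# Config 1: 16 x 32 GB DDR4 per node, all usable as system memory.
cfg1_node=$(usable_gb 16 32)     # 512 GB per node
# Config 2 (Memory Mode): 8 x 32 GB DDR4 act as a cache for 8 x 128 GB PMem.
cfg2_cache=$(usable_gb 8 32)     # 256 GB DRAM cache per node
cfg2_node=$(usable_gb 8 128)     # 1024 GB usable per node
nodes=3

echo "Config 1: $(( cfg1_node * nodes )) GB across $nodes nodes"   # 1536 GB (1.5 TB)
echo "Config 2: $(( cfg2_node * nodes )) GB across $nodes nodes"   # 3072 GB (3 TB)
echo "PMem:DRAM ratio: $(( cfg2_node / cfg2_cache )):1"            # 4:1
```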

References

Supermicro BigTwin Multi-Node Servers: https://www.supermicro.com/en/products/bigtwin/

Supermicro Redis Solutions: https://www.supermicro.com/en/solutions/redis

Written by Mayur Shetty, Principal Solution Architect, Red Hat
