Hi, dear Intel team,

I'm working on optimizing 1024 x 1024 matrix multiplication on an Intel Gen9 GPU. Here is my pseudocode:

numTiles = 1024 / 4

__local Asub, Bsub, C_temp

for (t = 0; t < numTiles; t++) {

Asub[4][4] = load 4x4 SP float data from matrix A (using vload4)

Bsub[4][4] = load 4x4 SP float data from matrix B (using vload4)

C_temp[4][4] += Asub * Bsub

}

C_sub = C_temp (using vstore4)

Each work item steps Asub across 4 rows of matrix A and Bsub down 4 columns of matrix B, accumulating the products to produce its final 4x4 block of C (C_sub).
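To make the per-work-item loop concrete, here is a minimal sketch in plain C that models a single work item on the CPU. It is not the attached kernel: the function name `compute_c_block`, the row-major square layout, and the `row0`/`col0` parameters are assumptions made for illustration, and ordinary array reads stand in for `vload4`/`vstore4`.

```c
#include <assert.h>
#include <stddef.h>

#define TILE 4 /* each work item owns a TILE x TILE block of C */

/* CPU model of one work item (hypothetical name and signature).
 * A, B and C are n x n, row-major. The work item computes the 4x4 block
 * of C whose top-left corner is (row0, col0). */
void compute_c_block(const float *A, const float *B, float *C,
                     size_t n, size_t row0, size_t col0)
{
    assert(n > 0 && n % TILE == 0);

    float acc[TILE][TILE] = {{0.0f}};
    size_t num_tiles = n / TILE;

    for (size_t t = 0; t < num_tiles; ++t) {
        float a_sub[TILE][TILE], b_sub[TILE][TILE];

        /* Load tile t: 4 rows of A and 4 columns of B (vload4 in the kernel). */
        for (size_t i = 0; i < TILE; ++i)
            for (size_t j = 0; j < TILE; ++j) {
                a_sub[i][j] = A[(row0 + i) * n + t * TILE + j];
                b_sub[i][j] = B[(t * TILE + i) * n + col0 + j];
            }

        /* acc += a_sub * b_sub */
        for (size_t i = 0; i < TILE; ++i)
            for (size_t k = 0; k < TILE; ++k)
                for (size_t j = 0; j < TILE; ++j)
                    acc[i][j] += a_sub[i][k] * b_sub[k][j];
    }

    /* Write the finished block back (vstore4 in the kernel). */
    for (size_t i = 0; i < TILE; ++i)
        for (size_t j = 0; j < TILE; ++j)
            C[(row0 + i) * n + col0 + j] = acc[i][j];
}
```

Calling `compute_c_block` once for every `(row0, col0)` that is a multiple of 4 fills the whole result matrix, which corresponds to a 256 x 256 global work size for a 1024 x 1024 problem.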

Using the "dynamic instruction count" analysis in VTune Amplifier, I found that loading the 4x4 data from global memory to local memory accounts for a large share of the instruction count. Could I rewrite my code to prefetch the data, so that the load latency between global memory and local memory is hidden? Maybe like this:

numTiles = 1024 / 4

int t = 0

__local Asub_current, Bsub_current, Asub_new, Bsub_new, C_temp

Asub_current[4][4] = load 4x4 SP float data from matrix A (using vload4)

Bsub_current[4][4] = load 4x4 SP float data from matrix B (using vload4)

for (t = 1; t <= numTiles; t++) {

Asub_new[4][4] = load 4x4 SP float data from matrix A (using vload4)

Bsub_new[4][4] = load 4x4 SP float data from matrix B (using vload4)

C_temp[4][4] += Asub_current * Bsub_current

Asub_current = Asub_new

Bsub_current = Bsub_new

}

C_sub = C_temp (using vstore4)

My understanding is that when the GPU begins to load Asub_new and Bsub_new, it doesn't need to wait until the loading is done, but can begin the multiplication immediately. After the multiplication is done, the GPU can copy the new data into the current matrices. Is this possible? If not, how can I write the program to achieve "prefetch" and hide the data transfer latency?
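The loop structure being asked about is usually called double buffering. Below is a minimal sketch in plain C that models one work item on the CPU. It only shows the control flow: whether a GPU actually overlaps the loads with the arithmetic depends on the compiler's instruction scheduling and on the hardware switching between threads, which this sketch cannot demonstrate. The names `load_a_tile`, `load_b_tile` and `compute_c_block_prefetch` are hypothetical, and the layout is assumed to be row-major and square. Two details differ from the pseudocode: the buffers are swapped by index instead of being copied, and the prefetch is guarded so the last iteration does not read a tile past the end of the matrices.

```c
#include <assert.h>
#include <stddef.h>

#define TILE 4

/* Hypothetical helper: copy tile t of A (rows row0.., columns t*TILE..). */
void load_a_tile(const float *A, size_t n, size_t row0, size_t t,
                 float dst[TILE][TILE])
{
    for (size_t i = 0; i < TILE; ++i)
        for (size_t j = 0; j < TILE; ++j)
            dst[i][j] = A[(row0 + i) * n + t * TILE + j];
}

/* Hypothetical helper: copy tile t of B (rows t*TILE.., columns col0..). */
void load_b_tile(const float *B, size_t n, size_t col0, size_t t,
                 float dst[TILE][TILE])
{
    for (size_t i = 0; i < TILE; ++i)
        for (size_t j = 0; j < TILE; ++j)
            dst[i][j] = B[(t * TILE + i) * n + col0 + j];
}

/* CPU model of one work item with two tile buffers per input matrix. */
void compute_c_block_prefetch(const float *A, const float *B, float *C,
                              size_t n, size_t row0, size_t col0)
{
    assert(n > 0 && n % TILE == 0);

    float a_buf[2][TILE][TILE], b_buf[2][TILE][TILE];
    float acc[TILE][TILE] = {{0.0f}};
    size_t num_tiles = n / TILE;
    int cur = 0;

    /* Prologue: fetch tile 0 before entering the loop. */
    load_a_tile(A, n, row0, 0, a_buf[cur]);
    load_b_tile(B, n, col0, 0, b_buf[cur]);

    for (size_t t = 0; t < num_tiles; ++t) {
        int nxt = cur ^ 1;

        /* Issue the loads for tile t+1 first; skip them on the last tile. */
        if (t + 1 < num_tiles) {
            load_a_tile(A, n, row0, t + 1, a_buf[nxt]);
            load_b_tile(B, n, col0, t + 1, b_buf[nxt]);
        }

        /* Multiply tile t, which does not depend on the loads above. */
        for (size_t i = 0; i < TILE; ++i)
            for (size_t k = 0; k < TILE; ++k)
                for (size_t j = 0; j < TILE; ++j)
                    acc[i][j] += a_buf[cur][i][k] * b_buf[cur][k][j];

        cur = nxt; /* swap buffers by index, no copy needed */
    }

    for (size_t i = 0; i < TILE; ++i)
        for (size_t j = 0; j < TILE; ++j)
            C[(row0 + i) * n + col0 + j] = acc[i][j];
}
```

Because the multiply for tile `t` reads only the `cur` buffers, it has no data dependency on the loads for tile `t + 1`, which is what gives a compiler or the hardware the freedom to overlap the two.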

Thanks a lot!

By the way, by using subgroups and subgroup shuffle, I can achieve 313 GFLOPS on my UHD 630 GPU for 1024x1024 matrix multiplication, which is 66% of its peak performance. The VTune dynamic instruction count analyzer shows that subgroup block reads are much more efficient than vloadn. But I want to avoid subgroup functions for easier portability. Currently I get 150 GFLOPS from my first pseudocode. Still working on it.
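For reference, GFLOPS figures like these are normally derived from the kernel run time by counting one multiply and one add per inner-product term, i.e. 2·N³ floating-point operations for an N x N multiply. That convention is an assumption here, since the post does not say how the numbers were computed. A small sketch, with a hypothetical function name:

```c
#include <assert.h>

/* GFLOPS of an n x n by n x n matrix multiply that took `seconds`,
 * counting 2 * n^3 floating-point operations (one multiply + one add
 * per inner-product term). */
double matmul_gflops(double n, double seconds)
{
    assert(seconds > 0.0);
    return 2.0 * n * n * n / seconds / 1e9;
}
```

Under that convention a 1024 x 1024 multiply is about 2.15 GFLOP of work, so 313 GFLOPS corresponds to roughly 6.9 ms per multiply and 150 GFLOPS to roughly 14.3 ms.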

Hi,

Thanks for posting in the Intel forums.

Could you please provide us with the complete reproducer code and steps to reproduce the issue at our end?

Could you also please provide us with the OS details, and version of Intel oneAPI you have been using?

Thanks & Regards

Shivani

Hi,

Please see the attached file. Just run make_run.sh.

The program supports these inputs:

-w matrix A width

-h matrix A height/matrix B width

-s matrix B height

-m global work item number in x dimension

-n global work item number in y dimension

-x local work item number in x dimension

-y local work item number in y dimension

-d display results or not

-e calculate matrix C gold result or not. (very slow).

For example, in the make_run.sh, it runs like this:

./hello_world -w 1024 -h 1024 -s 1024 -m 256 -n 256 -x 16 -y 16 -d 0 -e 0

The matrix height and width must be 4 times the global work-item count in the corresponding dimension, because each work item computes a 4x4 block of the result matrix C.

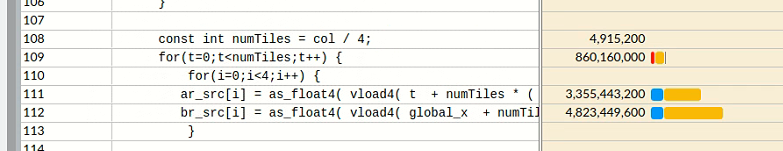

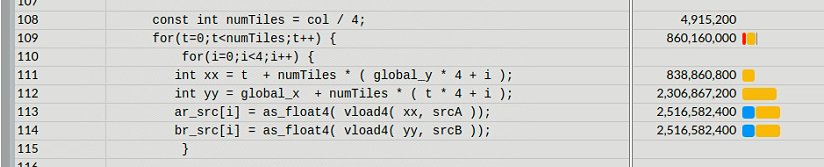

Vtune shows that the vload4 consumes a lot of instruction counts:

If we use 2 variables to see the detail:

OS: Ubuntu 18.04.

Kernel: 5.6.15-050615-generic

gcc version 7.5.0 (Ubuntu 7.5.0-3ubuntu1~18.04)

Intel oneAPI is not used, since I just use GCC to compile the code.

Platform info:

Platform Host timer resolution 1ns

Platform Extensions function suffix INTEL

Platform Name Intel(R) CPU Runtime for OpenCL(TM) Applications

Platform Vendor Intel(R) Corporation

Platform Version OpenCL 2.1 LINUX

Platform Profile FULL_PROFILE

Platform Host timer resolution 1ns

Platform Extensions function suffix INTEL

Platform Name Intel(R) OpenCL HD Graphics

Number of devices 1

Device Name Intel(R) UHD Graphics 630 [0x9bc8]

Device Vendor Intel(R) Corporation

Device Vendor ID 0x8086

Device Version OpenCL 3.0 NEO

Driver Version 21.38.21026

Device OpenCL C Version OpenCL C 3.0

Device Type GPU

Device Profile FULL_PROFILE

Device Available Yes

Compiler Available Yes

Linker Available Yes

Max compute units 24

Max clock frequency 1200MHz

Device Partition (core)

Max number of sub-devices 0

Supported partition types None

Max work item dimensions 3

Max work item sizes 256x256x256

Max work group size 256

Preferred work group size multiple 32

Max sub-groups per work group 32

Sub-group sizes (Intel) 8, 16, 3

One more question: is it possible to overlap compute and transfer, to hide the latency of transfers from global memory to local memory? I did some research and only found information for Nvidia devices on hiding the latency of transfers from system DDR to the accelerator card's GDDR over PCIe.

Thanks a lot!
