I've said this before, but I feel I need to say it again...

Please add precise bitrate control back. It was working so perfectly right before you removed it.

What is the point of using a mix stream if you don't save any bandwidth????

All I want is a 1080p mix stream with a bitrate under 2 Mbps (maybe even under 1.5 Mbps!). YouTube uses a bitrate of about 2.8 Mbps for its 1080p high-quality video, so what the hell makes you think a bitrate of up to 4.5 Mbps is necessary for a bunch of people using webcams?

I'm very puzzled as to why this was taken out when it was working so well. Video bandwidth is a HUGE web expense, and not being able to manage it effectively can cost an enormous amount of money. If I could adjust the bitrate of the mix stream PROPERLY, I could save a lot on bandwidth costs if I end up using this software.
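To put the bandwidth argument in numbers, here is a small sketch that converts a constant bitrate into data delivered per viewer per hour, using the bitrates quoted above. It assumes decimal units (1 Mbps = 1,000,000 bit/s, 1 GB = 10^9 bytes) and a perfectly constant bitrate; real streams vary with motion.

```python
# Data volume delivered to one viewer per hour at a constant bitrate.
# Assumes decimal units: 1 Mbps = 1,000,000 bit/s and 1 GB = 10**9 bytes.

def gigabytes_per_hour(bitrate_mbps: float) -> float:
    """Return gigabytes transferred in one hour at bitrate_mbps."""
    bits_per_hour = bitrate_mbps * 1_000_000 * 3600
    return bits_per_hour / 8 / 1_000_000_000


# Bitrates taken from the post: the two targets, YouTube 1080p, and the observed maximum.
for label, mbps in [("low target", 1.5), ("target", 2.0), ("YouTube 1080p", 2.8), ("observed max", 4.5)]:
    print(f"{label:>14}: {mbps} Mbps -> {gigabytes_per_hour(mbps):.3f} GB per viewer-hour")
```

At 1.5 Mbps this works out to 0.675 GB per viewer-hour, versus about 2.0 GB at 4.5 Mbps, so the difference scales directly with every viewer and every hour served.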

What use is this feature at all? As it stands, it only makes sense over a LAN.

You know which mix resolution stays under 2 Mbps during movement? 360p. That stays at about 1.7 Mbps, which is just insane. YouTube uses 61 KB/s for its 360p, so why the hell does a 360p mix stream need 213 KB/s?!
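The figures in this paragraph mix kilobits per second (kbps) and kilobytes per second (KB/s), which differ by a factor of 8. A minimal conversion sketch, assuming 1 byte = 8 bits and the same "kilo" prefix on both sides:

```python
# Convert between kilobits per second (kbps) and kilobytes per second (KB/s).
# Assumes 1 byte = 8 bits and the same "kilo" prefix for both units.

def kbit_to_kbyte(kbps: float) -> float:
    return kbps / 8


def kbyte_to_kbit(kbyte_per_s: float) -> float:
    return kbyte_per_s * 8


print(kbit_to_kbyte(1704))  # 360p mix stream measured in this thread, in KB/s
print(kbyte_to_kbit(61))    # YouTube 360p figure quoted above, in kbps
print(round(kbit_to_kbyte(1704) / 61, 2))  # how many times larger the mix stream is
```

So 213 KB/s is the 1704 kbps measurement divided by 8, and YouTube's 61 KB/s is about 488 kbps, roughly 3.5 times less.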

I want some answers. Why why WHY do you even bother with this feature if you aren't saving bandwidth? I don't think I'm doing anything wrong, I'm using the basic example over my home network to test. Am I missing something here?

What's so frustrating is you REMOVED the feature. You had it done, it was working great. Why did you think it was necessary to remove it? Is a bitrate cap still available that I can trigger manually somehow?

Sorry for ranting; I just don't understand, and I was really looking forward to taking advantage of mix streams.

- Tags:

- HTML5

- JavaScript

Thanks for your feedback, Stephen. Before answering your question, we'd like to confirm the following with you:

1. What MCU version are you using?

2. You mentioned bitrate control worked well before. Which version was that, and how did you use it?

3. What are your room settings for media mixing in :3000/console (resolution? quality level?), and what options do you pass to the subscribe API?

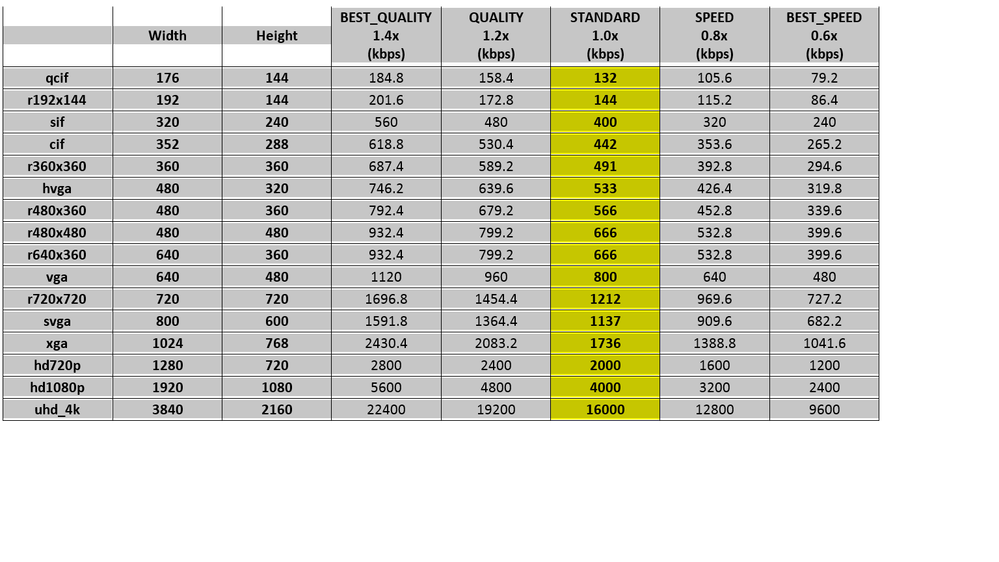

The MCU's bitrate control follows the data above: the bitrate for a subscribed 1080p mix stream with best_speed should be around 2400 kbps. In our tests, this bitrate control works well in v3.5. Please describe your scenario in detail to help us reproduce the issue.

Qiujiao W. (Intel) wrote:

> 1. What MCU version are you using?
>
> 2. You mentioned bitrate control worked well before, what's the version and how do you use it?
>
> 3. What's your room settings for media mixing in 3000/console (resolution? quality level? )? and what options do you provide for subscribe API?

1. I am using v3.5 on CentOS, without hardware acceleration, but with the Cisco H.264 codec installed.

2. I can't remember exactly; it may have been version 2.8 or something like that. Back then, I was able to specify the exact bitrate, and using the same testing method I confirmed it was working very well.

3. I am using a fresh install of the basic example. I modified the default room in the console, changing only the mix resolution (to 1080p) and the quality level (to bestspeed). I made sure to apply the changes, and I restarted the server too. I am only now looking at the subscribe API. My understanding was that if I chose 'bestspeed' in the console, then pressing the 1920x1080 button in the basic example would use 'bestspeed'. Is that correct? I am surprised that the client gets to choose the quality rather than the server. I have tried adding "betterspeed" to the conference.subscribe call, but I notice no difference in behaviour.

When I subscribe to the mix at 360p and keep moving in front of the webcam, I see a download rate of 1704 kbps.

When I subscribe to the mix at 1080p and make no movement at all, I see a download rate of 900 kbps. As soon as I move, it jumps to 2700 kbps and 3300 kbps, and I saw it peak at 4500 kbps.

I measured this on a separate laptop, watching the network information in Task Manager.
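The Intel reply quotes roughly 2400 kbps for a 1080p best_speed mix stream. As a quick sanity check, this sketch expresses each 1080p reading reported above as a percentage over or under that figure. It treats 2400 kbps as a nominal reference value, not a guaranteed cap, and the labels are just descriptive.

```python
# Compare the 1080p download rates reported in this thread against the
# ~2400 kbps Intel quoted for a 1080p best_speed mix stream.

EXPECTED_1080P_BEST_SPEED_KBPS = 2400  # nominal reference from the Intel reply

observed_1080p_kbps = {
    "no movement": 900,
    "movement": 2700,
    "more movement": 3300,
    "peak": 4500,
}


def deviation_percent(observed: float, expected: float) -> float:
    """Percentage by which observed exceeds expected (negative if below)."""
    return (observed - expected) / expected * 100


for label, kbps in observed_1080p_kbps.items():
    pct = deviation_percent(kbps, EXPECTED_1080P_BEST_SPEED_KBPS)
    print(f"{label:>13}: {kbps} kbps ({pct:+.1f}%)")
```

The still-image reading sits well below the reference, while the peak is 87.5% above it.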

Maybe this feature just isn't for me: the only thing that draws me to an MCU solution is a MAJOR reduction in bandwidth. If I can't get that, I would much rather use an SFU solution.
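The MCU-versus-SFU trade-off comes down to how many streams each participant downloads. A rough sketch, under simplifying assumptions: every publisher sends one stream at a hypothetical per-stream bitrate, the SFU forwards the other N-1 streams unchanged (no simulcast), and the MCU sends each participant one mixed stream.

```python
# Rough downstream bandwidth per participant in an N-person room.
# Simplifying assumptions (illustrative only): one stream per publisher,
# an SFU forwards all other N-1 streams unchanged, an MCU sends one mix.

def sfu_downstream_kbps(n_participants: int, per_stream_kbps: float) -> float:
    return (n_participants - 1) * per_stream_kbps


def mcu_downstream_kbps(mix_kbps: float) -> float:
    return mix_kbps


def server_egress_kbps(n_participants: int, per_participant_kbps: float) -> float:
    # Total server egress is every participant's download summed.
    return n_participants * per_participant_kbps


n = 6
sfu = sfu_downstream_kbps(n, per_stream_kbps=1000)  # 1000 kbps is a hypothetical webcam rate
mcu = mcu_downstream_kbps(mix_kbps=2400)            # 2400 kbps is the 1080p figure from this thread
print(f"SFU: {sfu} kbps per participant, {server_egress_kbps(n, sfu)} kbps total egress")
print(f"MCU: {mcu} kbps per participant, {server_egress_kbps(n, mcu)} kbps total egress")
```

With these hypothetical numbers the MCU only comes out ahead from 4 participants upward, which is why the mix bitrate matters so much: the higher it is, the larger the room has to be before mixing saves anything.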

I have a new question.

I was thinking I would just forward the streams instead and limit the upload bitrate on the client side... then I noticed this in the changelog:

Removed APIs:

- `ConferenceClient.setVideoBitrate`

Does this just mean that we now set the maximum bandwidth in `ConferenceClient.publish` instead?

OKAY, so setting qualitylevel and bitratemultiplier in conference.subscribe DOES WORK.

I didn't realise that "BestSpeed" is case-sensitive.

After setting that, it does seem to hit a maximum of only 2400 kbps when I click on 1080p.
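Because the quality-level string is case-sensitive, a lowercase "bestspeed" can silently fail to take effect. One defensive option is to normalise user-supplied values before passing them on. This is a minimal sketch: only "BestSpeed" (and "betterspeed", in some casing) appear in this thread, so the full canonical list below is an assumption to check against your SDK version.

```python
# Normalise a user-supplied quality-level string to one exact spelling.
# ASSUMPTION: the canonical names are illustrative; only "BestSpeed" is
# confirmed in this thread. Check them against your SDK version.

CANONICAL_QUALITY_LEVELS = ("BestQuality", "BetterQuality", "Standard", "BetterSpeed", "BestSpeed")

# Lookup keyed by a lowercase, separator-free form of each canonical name.
_LOOKUP = {name.lower(): name for name in CANONICAL_QUALITY_LEVELS}


def normalize_quality_level(value: str) -> str:
    """Map e.g. 'bestspeed', 'BESTSPEED' or 'best_speed' to 'BestSpeed'."""
    key = value.strip().lower().replace("_", "").replace(" ", "")
    try:
        return _LOOKUP[key]
    except KeyError:
        raise ValueError(f"unknown quality level: {value!r}") from None


print(normalize_quality_level("bestspeed"))   # BestSpeed
print(normalize_quality_level("best_speed"))  # BestSpeed
```

Raising on unknown values, rather than passing them through, makes a typo visible immediately instead of falling back to some default behaviour.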

Why are the quality levels selectable in the console if the client can choose whatever it wishes? That's quite confusing. What is the point of having the setting in the console, and what does it do? Is it supposed to just act as the 'default' if nothing is specified? If so, that was failing.
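The "console value as default" behaviour described here can be sketched as a simple merge: any option the client omits (or leaves unset) inherits the room's configured value. The field names below are hypothetical and do not reflect the MCU's actual configuration schema.

```python
# Sketch of "console setting acts as the default": options the client omits
# (or sets to None) inherit the room's value. Field names are hypothetical.

from typing import Optional

ROOM_DEFAULTS = {"resolution": (1920, 1080), "quality_level": "BestSpeed"}


def effective_subscribe_options(requested: Optional[dict], room_defaults: dict) -> dict:
    """Return the room defaults overridden by options the client explicitly set."""
    merged = dict(room_defaults)
    for key, value in (requested or {}).items():
        if value is not None:
            merged[key] = value
    return merged


print(effective_subscribe_options({}, ROOM_DEFAULTS))
print(effective_subscribe_options({"quality_level": "BetterSpeed"}, ROOM_DEFAULTS))
```

A "locked" option would differ in one respect: it would ignore (or reject) the client's value instead of letting it override the room setting.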

I wish we could lock these options down.

I think you are trying to make this a bit too simple by assuming the correct bitrate, and by assuming that every resolution lower than the chosen one should be available. If someone is going to the effort of implementing an MCU for their WebRTC experience, then surely they are willing to set their own codec, bitrate and resolution settings manually. Once HEVC and VP9 become more common, these assumed bitrate multipliers aren't going to make sense.

From the :3000/console, please let me specify:

- The allowed codecs.

- The allowed resolutions (e.g. let me have 1080p and 480p, and nothing in between, if I wish).

- The bitrates allowed for each resolution, on a certain codec.
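The three requested settings fit naturally into a single per-room table keyed by (codec, resolution), with exactly one bitrate per entry. The sketch below is hypothetical: `RoomPolicy` and its fields do not exist in the MCU console, and the 480p size and both bitrates are illustrative values.

```python
# Hypothetical per-room encoding policy covering the three requested settings:
# allowed codecs, allowed resolutions, and one bitrate per (codec, resolution).
# None of these names exist in the MCU's console; values are illustrative.

from dataclasses import dataclass, field

Resolution = tuple[int, int]


@dataclass
class RoomPolicy:
    # (codec, (width, height)) -> bitrate in kbps; anything not listed is refused.
    bitrates_kbps: dict[tuple[str, Resolution], int] = field(default_factory=dict)

    @property
    def allowed_codecs(self) -> set[str]:
        return {codec for codec, _ in self.bitrates_kbps}

    @property
    def max_encodings(self) -> int:
        # One encoding per allowed (codec, resolution) pair, no quality variants.
        return len(self.bitrates_kbps)

    def bitrate_for(self, codec: str, resolution: Resolution) -> int:
        key = (codec, resolution)
        if key not in self.bitrates_kbps:
            width, height = resolution
            raise PermissionError(f"{codec} at {width}x{height} is not allowed in this room")
        return self.bitrates_kbps[key]


# 1080p and 480p H.264 only, nothing in between.
policy = RoomPolicy({
    ("h264", (1920, 1080)): 1500,
    ("h264", (854, 480)): 500,
})
print(policy.allowed_codecs)                      # {'h264'}
print(policy.bitrate_for("h264", (1920, 1080)))   # 1500
```

With this shape, the number of encodings a room can ever run is bounded by the size of the table, and a request for an unlisted resolution such as 720p is refused rather than served.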

Right now, in a scenario where I use this for a video chatroom website, I cannot lock the MCU down to producing just 1 encoding. Instead, if I want a 1080p H.264 encoding, I have to make SIX resolutions accessible in total, and then, it seems, SIX separate quality options for each. So if someone wanted to abuse the server, they could start 72 separate encodings per room (if we include VP8 as well).
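The figure of 72 is simply the product of the counts in this paragraph: 2 codecs × 6 resolutions × 6 quality options. A short sketch that enumerates the combinations (the resolution and quality names are placeholders, since only the counts matter):

```python
from itertools import product

# Placeholders: the post counts six resolutions and six quality options per
# resolution; the actual names don't affect the count.
resolutions = [f"resolution_{i}" for i in range(1, 7)]
quality_options = [f"quality_{i}" for i in range(1, 7)]
codecs = ["H.264", "VP8"]

# Each (codec, resolution, quality) triple a client could request is a
# potentially distinct encoding the MCU has to run for the room.
possible_encodings = list(product(codecs, resolutions, quality_options))
print(len(possible_encodings))  # 72

# What the post asks for instead: one codec, one resolution, one quality.
locked_encodings = list(product(["H.264"], ["1080p"], ["fixed"]))
print(len(locked_encodings))  # 1
```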

It would also be nice to be able to enforce an upload bitrate limit (and maybe a resolution limit too) on users' webcams from the server side. If I'm going to forward the streams instead, I'd like assurance that I'm not going to be forwarding a 4K 50 Mbps stream to someone.
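A server-side admission check of the kind requested could reject publications above a resolution limit and clamp the bitrate to a cap. This is a hypothetical sketch: it assumes the server can see each publisher's declared resolution and bitrate, and both limits and the function name are made up for illustration.

```python
# Hypothetical server-side admission check for published webcam streams.
# ASSUMPTION: the server knows the publisher's declared resolution and bitrate.

MAX_UPLOAD_KBPS = 2000   # illustrative cap
MAX_UPLOAD_HEIGHT = 1080  # illustrative resolution limit (1080p)


def admit_publication(width: int, height: int, bitrate_kbps: int) -> int:
    """Reject streams above the resolution limit; clamp the bitrate to the cap.

    Returns the bitrate (kbps) the publisher should be asked to use.
    """
    if height > MAX_UPLOAD_HEIGHT:
        raise PermissionError(f"{width}x{height} exceeds the {MAX_UPLOAD_HEIGHT}p limit")
    return min(bitrate_kbps, MAX_UPLOAD_KBPS)


print(admit_publication(1920, 1080, 50_000))  # 2000: bitrate clamped to the cap
print(admit_publication(1280, 720, 1200))     # 1200: already under the cap
```

Declared values can be misreported by a client, so a real enforcement mechanism would also need to measure the incoming rate and act on streams that exceed it.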
