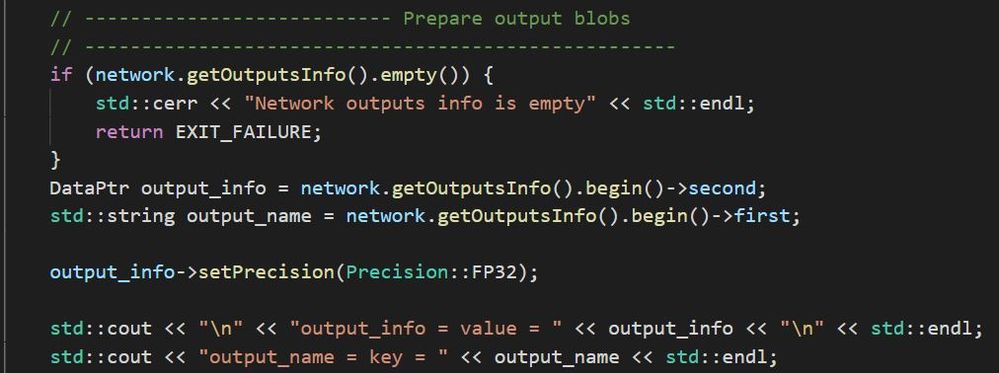

I am working through the C++ Hello Classification example.

I am confused by these 2 lines:

Hello Adammpolak,

Thanks for your patience and sorry for the inconvenience. Hope you are doing well.

output_info = network.getOutputsInfo().begin()->second;

output_name = network.getOutputsInfo().begin()->first;

network.getOutputsInfo() returns the information about the network's output Data nodes, and that information is stored in output_info. The model keeps this data in dictionary form, {name: info}, and this function retrieves it.

Regarding output configuration, e.g. output_info->setPrecision(Precision::FP32);

The developers set a default configuration that applies when the user does not specify one, for example for CPU or MYRIAD devices and other settings.

output_info = network.getOutputsInfo().begin()->second;

output_name = network.getOutputsInfo().begin()->first;

These two lines do not change how the code runs; they only collect information from the model. Based on the following thread, they are used to get the key and the value of the first map entry: https://stackoverflow.com/questions/4826404/getting-first-value-from-map-in-c
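To make the key and value idea concrete, here is a minimal sketch in standard C++. It assumes the outputs container behaves like a std::map from output name to a shared pointer. OutputInfo, OutputsMap, first_output, make_outputs and the output name "prob" are hypothetical stand-ins for illustration, not Inference Engine types.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for the per-output info object (not an Inference Engine type).
struct OutputInfo {
    std::string precision = "unspecified";
};

// Assumed shape of the container: output name -> shared pointer to its info.
using OutputsMap = std::map<std::string, std::shared_ptr<OutputInfo>>;

// Returns the (name, info) pair of the first map entry.
// begin() gives an iterator to a std::pair: ->first is the key, ->second is the value.
std::pair<std::string, std::shared_ptr<OutputInfo>> first_output(const OutputsMap& outputs) {
    assert(!outputs.empty());
    auto it = outputs.begin();
    return {it->first, it->second};
}

// Builds a map with a single output, as a one-output classification model might expose.
// The name "prob" is made up for this sketch.
OutputsMap make_outputs() {
    OutputsMap outputs;
    outputs["prob"] = std::make_shared<OutputInfo>();
    return outputs;
}
```

In this sketch, first and second are not method names chosen by the toolkit; they are the two members of std::pair, which is what every std::map entry is. Because the value is a shared pointer, a change made through it (such as setting a precision) is seen by anything that later reads the same entry.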

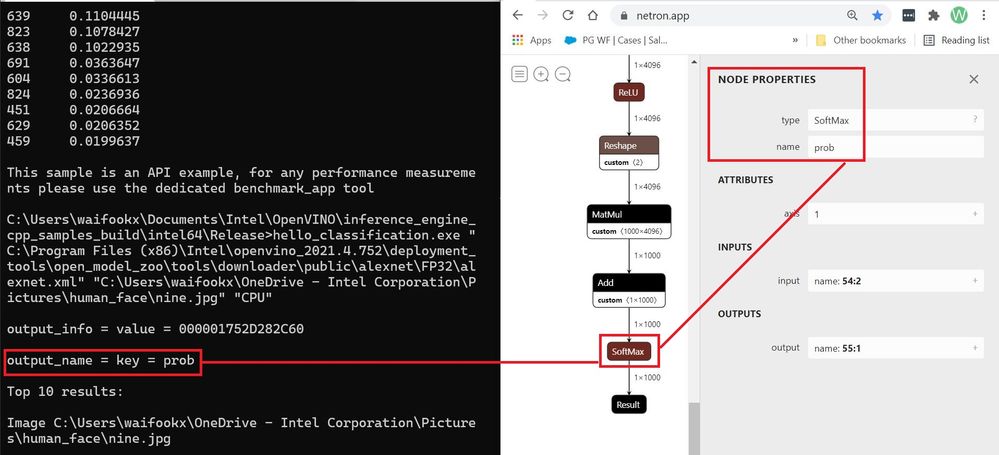

I have also reproduced this on my side. Here are the findings:

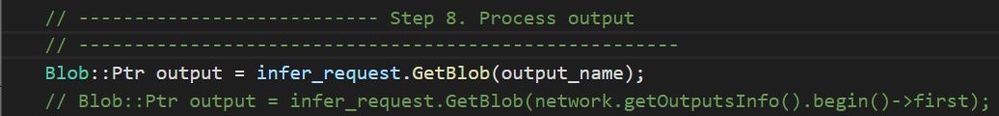

Step 3b - Configure output was removed in the script. While replacing the following code:

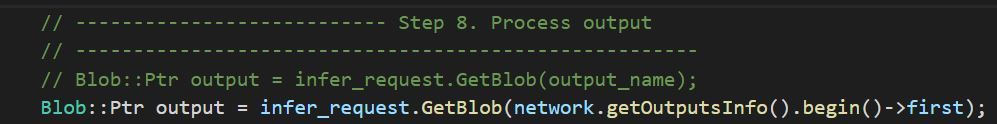

Blob::Ptr output = infer_request.GetBlob(output_name)

to the following code in Step 8 - Process output:

Blob::Ptr output = infer_request.GetBlob(network.getOutputsInfo().begin()->first);

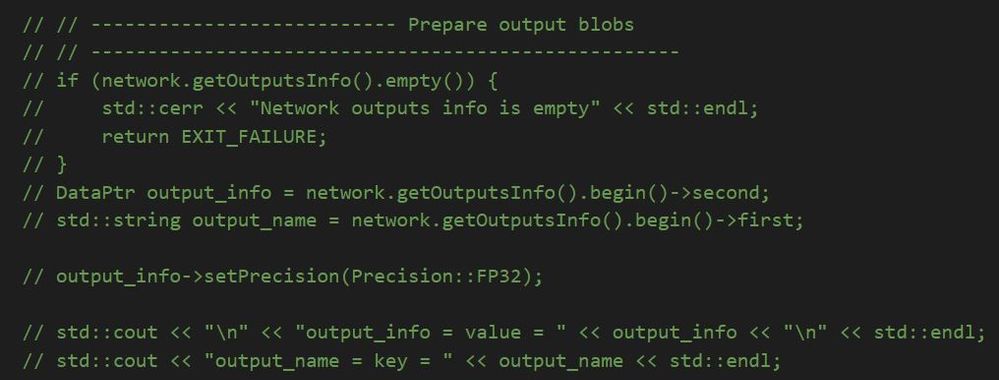

Included these 2 lines:

Excluded these 2 lines:

Hope this information helps. Have a great day.

Regards,

Wan

Hi Adammpolak,

Thank you for reaching out to us and thank you for using the Intel Distribution of OpenVINO™ toolkit!

To answer your first question, these two lines are used to configure the output of the model. They request the output information using the InferenceEngine::CNNNetwork::getOutputsInfo() method.

To answer your second question, these two lines are not required, because they are an optional step in implementing a typical inference pipeline with the Inference Engine C++ API.

To answer your third question, output_name is taken from the getOutputsInfo() result to find the OpenVINO output names, which are used later when calling InferenceEngine::InferRequest::GetBlob or InferenceEngine::InferRequest::SetBlob.
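As a rough illustration of why the name is kept around, the sketch below models a request whose results are looked up by output name. FakeRequest, set_result, get_blob, top_class and the name "prob" are hypothetical and only mimic the idea of a name-keyed lookup; this is not the Inference Engine API, and the error behavior shown belongs to std::map::at, not to GetBlob.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <stdexcept>  // std::out_of_range, thrown by std::map::at
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for an inference request (not the Inference Engine API).
class FakeRequest {
public:
    // Stores the scores produced for one named output.
    void set_result(const std::string& name, std::vector<float> values) {
        results_[name] = std::move(values);
    }

    // Results are addressed by output name.
    // In this stand-in, an unknown name makes std::map::at throw std::out_of_range.
    const std::vector<float>& get_blob(const std::string& name) const {
        return results_.at(name);
    }

private:
    std::map<std::string, std::vector<float>> results_;
};

// Returns the index of the highest score, i.e. the top-1 class.
std::size_t top_class(const std::vector<float>& scores) {
    assert(!scores.empty());
    std::size_t best = 0;
    for (std::size_t i = 1; i < scores.size(); ++i) {
        if (scores[i] > scores[best]) best = i;
    }
    return best;
}
```

Whether the name is stored in a variable first or fetched again at the call site makes no difference to the lookup in this sketch; what matters is that the same string is used as the key.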

On another note, the following configuration options are available when you declare output_info:

1. Set precision. By default, the output precision is set to Precision::FP32.

2. Set layout. By default, the output layout depends on the number of its dimensions, as follows:

| No. of dimensions | 5 | 4 | 3 | 2 | 1 |
|---|---|---|---|---|---|
| Layout | NCDHW | NCHW | CHW | NC | C |
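The mapping in the table above can be written as a small lookup. This is only a sketch of the table itself; default_layout is a hypothetical helper name, it returns plain strings rather than any toolkit type, and the empty-string result for other dimension counts is an assumption of this sketch.

```cpp
#include <cstddef>
#include <string>

// Default output layout name for a given number of dimensions, per the table above.
// Returns an empty string for dimension counts the table does not list.
std::string default_layout(std::size_t num_dims) {
    switch (num_dims) {
        case 5: return "NCDHW";
        case 4: return "NCHW";
        case 3: return "CHW";
        case 2: return "NC";
        case 1: return "C";
        default: return "";
    }
}
```

For example, a classification output with a batch dimension and a class dimension has two dimensions, so the lookup gives "NC".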

I highly recommend reading through Integrate Inference Engine with Your C++ Application to learn more about the typical Inference Engine C++ API workflow.

Regards,

Wan

Thank you for your response! I appreciate you answering each of my questions (thank you!) but to be honest I was looking for more insight into what is actually going on.

i.e. "What happens if I press this button?" I am hoping to get more than: "The button will be pressed."

To answer your first question, these two lines were used to configure the output of the model. It request output information using the InferenceEngine::CNNNetwork::getOutputsInfo() method.

What does configuring the output of the model mean? Does it mean that it changes the size, the type of inference that is run, where it is saved? What is being configured here? How does the output change if I had not used these 2 lines, would the example break?

To answer your second question, these two lines are not required because they are optional step to implement a typical inference pipeline with the Inference Engine C++ API.

Sorry, is this always optional (?), for any type of inference I want to run, or do some inferences require this configuration? What changes in the inference pipeline if these are not included in the example? What benefit does this configuration provide in this example?

To answer your third question, output_name use the getOutputsInfo() method to find out OpenVINO output names for using them later when calling InferenceEngine::InferRequest::GetBlob or InferenceEngine::InferRequest::SetBlob.

So these 2 methods: network.getOutputsInfo().begin()->second; network.getOutputsInfo().begin()->first; just provide info and name, so they can be used to call other things down stream?

What would happen if I did not have these names, would I not be able to GetBlob?

Why are they called "first" and "second"? Are these arbitrary method names that actually mean get output info and get output name?

I appreciate the link to additional resources such as: Integrate Inference Engine with Your C++ Application but unfortunately in that material there is no information regarding the use of ->second and -> first and what they mean.

Hoping you could provide some insight into what the purpose of those methods are!

Hi Adammpolak,

This thread will no longer be monitored since this issue has been resolved.

If you need any additional information from Intel, please submit a new question.

Regards,

Wan
