Hey,

We are trying to optimize our instances for cold starts, and model caching looked like an immediate solution. However, on both servers and local deployments, cold-start performance with caching enabled is consistently worse for every model we test.

This is what our initialization code looks like:

import openvino as ov

import openvino.properties as props

core = ov.Core()

core.set_property({props.cache_dir: path_to_cache_dir})

compiled_model = core.compile_model(model=model, device_name=device_name)

After enabling the cache, we can see a blob file in the cache folder. However, the time it takes to load a model with caching enabled increases by approximately 18% in our tests.
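For anyone trying to reproduce the comparison, here is a minimal timing sketch. The helper names (`time_calls`, `summarize`, `load_with_openvino`) are hypothetical and not part of OpenVINO. It assumes the model is available as a file path; the OpenVINO documentation describes passing the path to `compile_model` as a way to skip the separate read step, which may matter when caching is enabled.

```python
import statistics
import time
from typing import Callable, List


def time_calls(load_fn: Callable[[], object], repeats: int = 5) -> List[float]:
    """Return wall-clock seconds for each of `repeats` calls to `load_fn`."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        load_fn()
        samples.append(time.perf_counter() - start)
    return samples


def summarize(samples: List[float]) -> dict:
    """Report the first call separately: with caching enabled, the first
    load also pays for writing the blob, so it is not a cached load."""
    return {"first": samples[0], "median": statistics.median(samples)}


def load_with_openvino(model_path: str, device_name: str, cache_dir: str = ""):
    """Hypothetical loader: a fresh Core per call so nothing is reused in-process."""
    import openvino as ov
    import openvino.properties as props

    core = ov.Core()
    if cache_dir:
        core.set_property({props.cache_dir: cache_dir})
    # Assumption: model_path points to the model file (not an ov.Model object).
    return core.compile_model(model=model_path, device_name=device_name)
```

A call such as `summarize(time_calls(lambda: load_with_openvino(model_path, "CPU", cache_dir)))` can then be compared against the same call with an empty `cache_dir`. Repeated loads inside one process benefit from the OS file cache, so these numbers are only a rough proxy for a true cold start.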

We are also trying to remove the non-cached model files on instances where we are confident that a cached version of the model was previously generated by another service. It seems this might not be possible; is that expected? As it stands, we would use close to twice the storage when caching is enabled.
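One possible direction for the storage question, sketched under assumptions: instead of relying on `cache_dir`, export the compiled model explicitly and import the blob on the other instances. `export_model` and `import_model` are part of the OpenVINO Python API, but the helper names below are hypothetical, and whether an imported blob works without the original files on a given device and runtime version should be verified rather than assumed.

```python
from pathlib import Path


def save_compiled_blob(compiled_model, blob_path: str) -> int:
    """Write the exported compiled model to `blob_path`; return bytes written."""
    data = compiled_model.export_model()  # returns bytes in the Python API
    Path(blob_path).write_bytes(data)
    return len(data)


def load_compiled_blob(core, blob_path: str, device_name: str):
    """Import a previously exported blob instead of reading the model files."""
    data = Path(blob_path).read_bytes()
    return core.import_model(data, device_name)
```

In this sketch `compiled_model` would be the result of `core.compile_model(...)` and `core` an `ov.Core()` instance. An exported blob is expected to be specific to the device and to the runtime build that produced it, so it is safest to regenerate it after an SDK upgrade.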

At some point we considered that caching might not be supported on our test devices, but the following check returns true:

import openvino.properties.device as device

caching_supported = 'EXPORT_IMPORT' in core.get_property("CPU", device.capabilities)

We suspect that the runtime is failing to load the cached version. However, no errors or traces are emitted, so it's hard for us to diagnose what is wrong or whether there is a regression in the new SDK (2023.2).
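Since the runtime reports nothing here, one indirect check is to watch the cache directory around a load. The sketch below uses hypothetical helpers (`snapshot_cache`, `classify_load`) and relies only on the filesystem: if a load creates or rewrites files in the cache directory, it did not reuse an existing entry.

```python
from pathlib import Path
from typing import Callable, Dict, Tuple

Snapshot = Dict[str, Tuple[int, int]]


def snapshot_cache(cache_dir: str) -> Snapshot:
    """Map each file in the cache directory to (size, mtime_ns)."""
    root = Path(cache_dir)
    if not root.is_dir():
        return {}
    result = {}
    for entry in root.iterdir():
        if entry.is_file():
            info = entry.stat()
            result[entry.name] = (info.st_size, info.st_mtime_ns)
    return result


def classify_load(cache_dir: str, load_fn: Callable[[], object]) -> str:
    """Run `load_fn` and describe what happened to the cache directory."""
    before = snapshot_cache(cache_dir)
    load_fn()
    after = snapshot_cache(cache_dir)
    if not before and not after:
        return "no-cache-files"   # caching is not writing anything
    if after != before:
        return "cache-written"    # a blob was created or rewritten: a miss
    return "cache-unchanged"      # consistent with a hit, but not proof of one
```

Here `load_fn` would wrap the `core.compile_model(...)` call. A result of `"cache-written"` on an instance that should already have a blob suggests the cache key differs between the service that generated the cache and the one reading it.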

Hardware used:

- Intel desktop CPUs (from 8th gen to 13th gen, i9-13900K)

- Undisclosed server CPUs

Hi polmian,

Thank you for reaching out to us.

Please share the following information for further investigation from our side:

- Complete sample code for when the issue occurs.

- Model files, source repository for the models or its use case.

Regards,

Hairul

Hey,

It's not easy for us to share the models or code due to NDAs and IP contracts with our customers. However, we can provide a repro via email or DM that isolates the issue.

Thank you

Hi polmian,

We understand your concerns. However, you can also provide the base model repository that was used for training instead of your own model if you wish. With that said, you can reach out to me directly through hairulx.hadee.roslee@intel.com

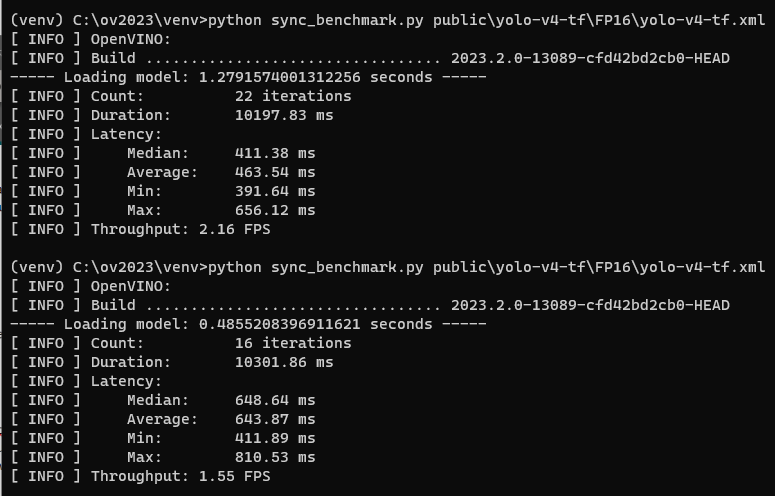

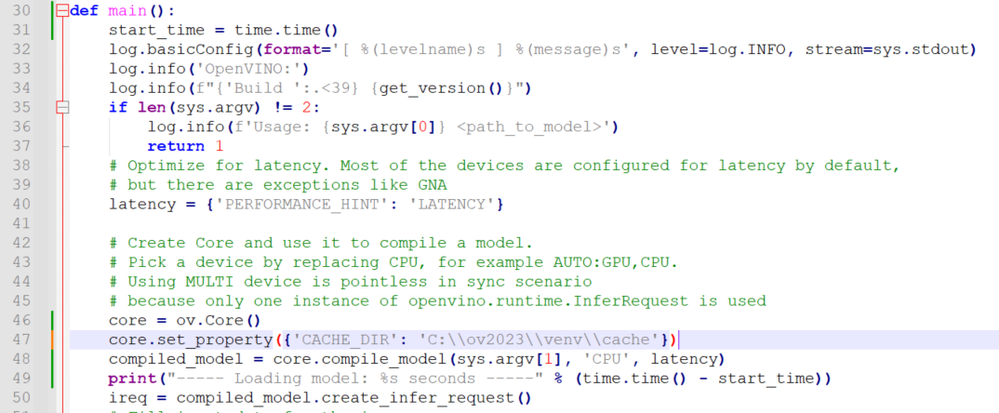

On another note, I've validated the cache feature using a modified sync_benchmark.py sample with Open Model Zoo's yolo-v4-tf model. From my testing, the time to load the model was reduced when using the cache as shown below:

Here is the portion of modified code for the sync_benchmark.py sample:

Regards,

Hairul

Hi polmian,

This thread will no longer be monitored since we have provided information. If you need any additional information from Intel, please submit a new question.

Regards,

Hairul
