While doing INT8 calibration, I am getting this error:

What does it mean? Does the IR version need to be upgraded? I am already using the latest Model Optimizer, 2021.3.9, to generate the IR.

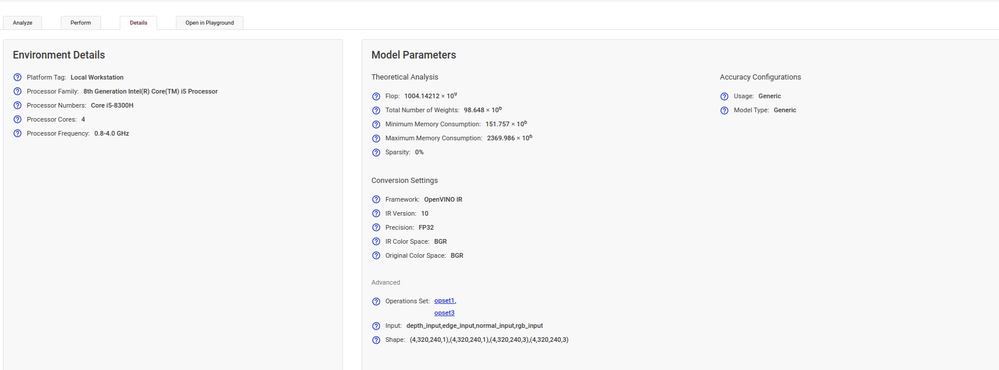

Attaching the model specifications as an image for your reference:

Error during INT8 calibration:

Per-layer Accumulated Metrics

Hi Reshu Singh,

Thank you for reaching out. We would like to know more about your setup.

Which model are you using for the INT8 calibration?

Did you follow the steps given in INT8 Calibration?

For your information, INT8 calibration is not available in the following cases:

- your configuration uses a generated dataset

- your configuration uses a model with Intermediate Representation (IR) versions lower than 10

- your model is already calibrated

- you run the configuration on an Intel® Processor Graphics, Intel® Movidius™ Neural Compute Stick 2, or Intel® Vision Accelerator Design with Intel® Movidius™ VPUs plugin
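The IR version condition above can be checked directly, since the IR `.xml` file declares its version as an attribute on the root `<net>` element. The following is a minimal sketch under that assumption; the `ir_version` helper and the trimmed `SAMPLE_IR` string are hypothetical and stand in for a real model file.

```python
import xml.etree.ElementTree as ET


def ir_version(xml_text: str) -> int:
    """Return the IR version declared on the root <net> element."""
    root = ET.fromstring(xml_text)
    if root.tag != "net":
        raise ValueError(f"expected <net> root element, got <{root.tag}>")
    return int(root.attrib["version"])


# Hypothetical, heavily trimmed IR content used only for illustration.
SAMPLE_IR = '<net name="custom_model" version="10"><layers/><edges/></net>'

version = ir_version(SAMPLE_IR)
print("IR version:", version)
print("meets the IR >= 10 requirement:", version >= 10)
```

For a real model, the text of the `.xml` file produced by Model Optimizer would be passed in place of `SAMPLE_IR`.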

Regards,

Syamimi

Hi @Syamimi_Intel !

The model I am using is a custom one, not one of the models provided with the OpenVINO Toolkit.

Regarding the cases you listed:

- my configuration uses a custom uploaded dataset

- my configuration uses a model with Intermediate Representation (IR) version 10

- my model is not already calibrated

- I am running the configuration on an Intel® Processor

Dear @resh ,

Thank you for your question.

It seems you are observing a defect that is going to be fixed in an upcoming release. While the model is being calibrated, the layer analysis information is not available, and a different message should be shown in that case.

Does the layer runtime analysis information become available after the model is calibrated?

Regards,

Marat.

The model calibration actually fails, and no layer runtime information is shown.

So the information is not available because calibration failed. Are there any error logs for the failed calibration?

Let me share the screenshots of DL Workbench while performing INT8 calibration, step by step:

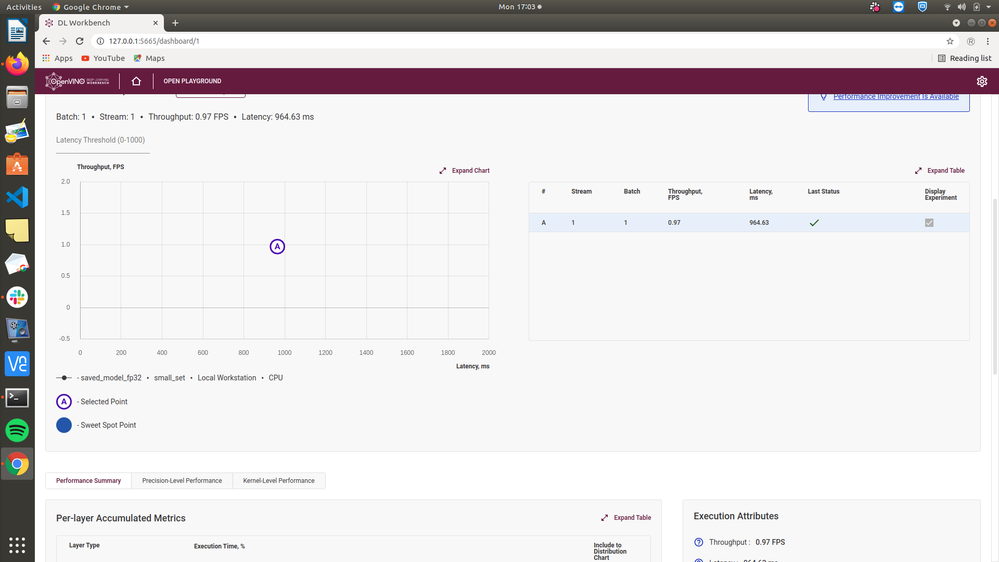

Fig 1. IR Loading (FP32 format) to get the metrics on DL Workbench

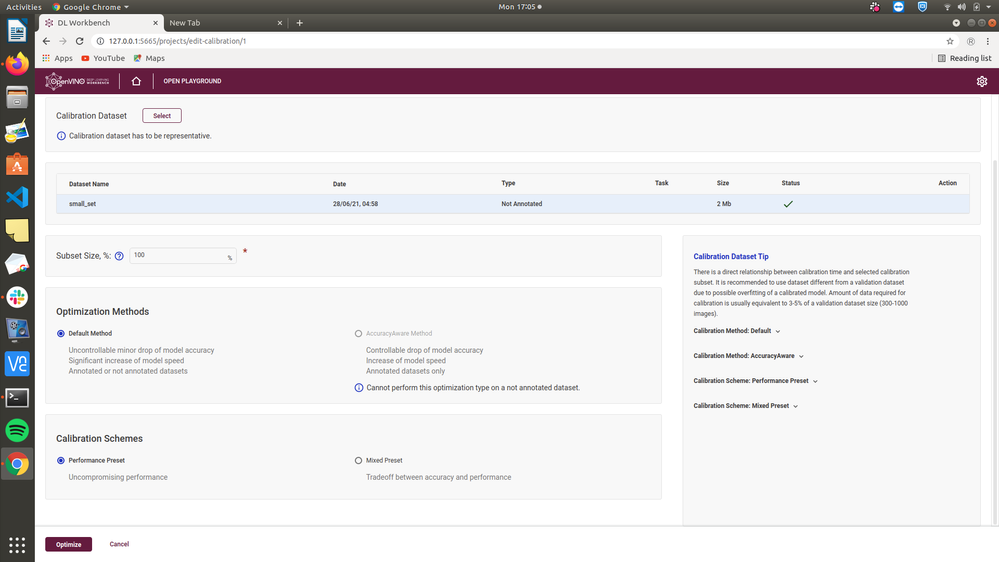

Fig 2. INT8 Calibration over the loaded FP32 IR

Fig 3. See the Precision Distribution and Precision Transition Matrix errors below

Also, my custom model takes 4 inputs, so at inference time I also loaded 4 images to get the metrics. Let me know if any other info is required, @Syamimi_Intel, @Marat.

Thanks

It would be ideal if you could share the model with us, so we can reproduce and investigate its calibration failure.

If that is not possible, could you provide the log file? To do so, click the settings icon (the gear in the top right corner) and download the log file by clicking the 'Download Log' button.

Hi @resh,

According to the logs you attached, calibration failed with the following error:

RuntimeError: IEEngine supports networks with single input or net with 2 inputs. In second case there are image input and image info input Actual inputs number: 4

As you said, your model contains 4 inputs, so more information on the inputs is needed for calibration.

To calibrate your model, you should:

1. Provide an annotated dataset.

2. Provide an accuracy configuration.
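To see ahead of time whether a model will hit this limit, the inputs can be counted from the IR `.xml` file. The sketch below assumes an IR version 10 file, where each network input is a `<layer>` element with `type="Parameter"`. The `input_names` helper and the trimmed `SAMPLE_IR` are hypothetical; apart from `depth_input`, which appears in the logs later in this thread, the input names are made up for illustration.

```python
import xml.etree.ElementTree as ET


def input_names(xml_text: str) -> list:
    """List the names of all Parameter (network input) layers in an IR v10 file."""
    root = ET.fromstring(xml_text.strip())
    return [
        layer.get("name")
        for layer in root.iter("layer")
        if layer.get("type") == "Parameter"
    ]


# Hypothetical, heavily trimmed IR with four inputs and one output.
SAMPLE_IR = """
<net name="custom_model" version="10">
  <layers>
    <layer id="0" name="rgb_input" type="Parameter" version="opset1"/>
    <layer id="1" name="depth_input" type="Parameter" version="opset1"/>
    <layer id="2" name="mask_input" type="Parameter" version="opset1"/>
    <layer id="3" name="pose_input" type="Parameter" version="opset1"/>
    <layer id="4" name="output" type="Result" version="opset1"/>
  </layers>
</net>
"""

names = input_names(SAMPLE_IR)
print("inputs:", names)
if len(names) > 2:
    # Per the error above, this case needs an annotated dataset
    # and an accuracy configuration.
    print("more than 2 inputs: annotated dataset and accuracy configuration needed")
```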

Regards,

Marat.

Hi @Marat

"RuntimeError: IEEngine supports networks with single input or net with 2 inputs. In second case there are image input and image info input Actual inputs number: 4"

Does it mean that networks with >2 inputs are not handled in IEEngine?

Thanks,

Reshu

No, it actually means that a model with more than 2 inputs cannot be calibrated on a dataset that is not annotated.

If you want to calibrate it, you should provide an annotated dataset in one of the supported formats and set the accuracy configuration for your model.

Refer to this article on obtaining a dataset and this one on accuracy measurement for more information.

Hi @Marat !

I now have annotations in COCO JSON format. My directory has four folders for the four inputs and one "annotation" folder, which I zipped and uploaded. The upload is successful and DL Workbench even detects the dataset as "COCO" type, but INT8 calibration is still failing.

Update: I have upgraded DL Workbench to version 2021.4.

The logs from version 2021.4 are here:

The -result-archive argument is not set. The default value /home/workbench/.workbench/models/1/21/job_artifacts/artifact.tar.gz will be used.

[setupvars.sh] OpenVINO environment initialized

0%| |00:00 0%| |00:00 0%| |00:00

Traceback (most recent call last):

File "/usr/local/bin/pot", line 11, in <module>

load_entry_point('pot==1.0', 'console_scripts', 'pot')()

File "/opt/intel/openvino/deployment_tools/tools/post_training_optimization_toolkit/app/run.py", line 36, in main

app(sys.argv[1:])

File "/opt/intel/openvino/deployment_tools/tools/post_training_optimization_toolkit/app/run.py", line 60, in app

metrics = optimize(config)

File "/opt/intel/openvino/deployment_tools/tools/post_training_optimization_toolkit/app/run.py", line 138, in optimize

compressed_model = pipeline.run(model)

File "/opt/intel/openvino/deployment_tools/tools/post_training_optimization_toolkit/compression/pipeline/pipeline.py", line 54, in run

result = self.collect_statistics_and_run(model, current_algo_seq)

File "/opt/intel/openvino/deployment_tools/tools/post_training_optimization_toolkit/compression/pipeline/pipeline.py", line 64, in collect_statistics_and_run

model = algo.run(model)

File "/opt/intel/openvino/deployment_tools/tools/post_training_optimization_toolkit/compression/algorithms/quantization/default/algorithm.py", line 94, in run

self.algorithms[1].algo_collector.compute_statistics(model)

File "/opt/intel/openvino/deployment_tools/tools/post_training_optimization_toolkit/compression/statistics/collector.py", line 70, in compute_statistics

_, stats_ = self._engine.predict(combined_stats, sampler)

File "/opt/intel/openvino/deployment_tools/tools/post_training_optimization_toolkit/compression/engines/ac_engine.py", line 166, in predict

stdout_redirect(self._model_evaluator.process_dataset_async, **args)

File "/opt/intel/openvino/deployment_tools/tools/post_training_optimization_toolkit/compression/utils/logger.py", line 129, in stdout_redirect

res = fn(*args, **kwargs)

File "/opt/intel/openvino/deployment_tools/tools/post_training_optimization_toolkit/libs/open_model_zoo/tools/accuracy_checker/accuracy_checker/evaluators/quantization_model_evaluator.py", line 142, in process_dataset_async

self._fill_free_irs(free_irs, queued_irs, infer_requests_pool, dataset_iterator, **kwargs)

File "/opt/intel/openvino/deployment_tools/tools/post_training_optimization_toolkit/libs/open_model_zoo/tools/accuracy_checker/accuracy_checker/evaluators/quantization_model_evaluator.py", line 329, in _fill_free_irs

batch_input, batch_meta = self._get_batch_input(batch_inputs, batch_annotation)

File "/opt/intel/openvino/deployment_tools/tools/post_training_optimization_toolkit/libs/open_model_zoo/tools/accuracy_checker/accuracy_checker/evaluators/quantization_model_evaluator.py", line 80, in _get_batch_input

filled_inputs = self.input_feeder.fill_inputs(batch_input)

File "/opt/intel/openvino/deployment_tools/tools/post_training_optimization_toolkit/libs/open_model_zoo/tools/accuracy_checker/accuracy_checker/launcher/input_feeder.py", line 193, in fill_inputs

inputs = self.fill_non_constant_inputs(data_representation_batch)

File "/opt/intel/openvino/deployment_tools/tools/post_training_optimization_toolkit/libs/open_model_zoo/tools/accuracy_checker/accuracy_checker/launcher/input_feeder.py", line 165, in fill_non_constant_inputs

'Please provide regular expression for matching in config.'.format(input_layer))

libs.open_model_zoo.tools.accuracy_checker.accuracy_checker.config.config_validator.ConfigError: Impossible to choose correct data for layer depth_input.Please provide regular expression for matching in config.

Can you describe the reason?

Thanks

Hi @resh,

The first question is whether https://community.intel.com/t5/Intel-Distribution-of-OpenVINO/DL-Workbench-2021-4-Issue/m-p/1296552#M24408 is the same issue or not. To me, they sound like different issues; correct me if I am wrong.

Regarding your error 'Please provide regular expression for matching in config.', it seems that there are some issues in the accuracy configuration. In particular, your model has several inputs and one of them is not properly described in the accuracy configuration, so Accuracy Checker does not know what data to feed to that input.
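The idea behind the "regular expression for matching" can be illustrated with a small sketch. In an Accuracy Checker configuration, each data input of a multi-input model is described by an entry with a `name`, a `type` of `INPUT`, and a `value` holding a regular expression that selects which data identifier goes to that input. The code below is not Accuracy Checker's implementation; `match_inputs`, the folder-style identifiers, and the `rgb_input` name are assumptions made for illustration, and only `depth_input` comes from the log above.

```python
import re

# Hypothetical input descriptions, modelled on the "inputs" entries
# of an Accuracy Checker launcher configuration.
INPUTS = [
    {"name": "rgb_input", "type": "INPUT", "value": r"^rgb/"},
    {"name": "depth_input", "type": "INPUT", "value": r"^depth/"},
]


def match_inputs(inputs, identifiers):
    """Pick exactly one data identifier for every described input layer."""
    feed = {}
    for entry in inputs:
        pattern = re.compile(entry["value"])
        matched = [ident for ident in identifiers if pattern.search(ident)]
        if len(matched) != 1:
            # Mirrors the situation in the log: the data for a layer is ambiguous.
            raise ValueError(
                "Impossible to choose correct data for layer " + entry["name"]
            )
        feed[entry["name"]] = matched[0]
    return feed


# One sample made of two files, identified by hypothetical folder-relative paths.
sample = ["rgb/0001.png", "depth/0001.png"]
print(match_inputs(INPUTS, sample))
```

With a pattern that matches nothing, or that matches more than one identifier, the helper raises an error, which is the kind of ambiguity the message in the log points to.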

Have you tried to measure accuracy before running the calibration? I am afraid you will not be able to measure accuracy either, due to the accuracy configuration errors. It will be much easier to debug and find a working configuration by measuring accuracy first. Then you can switch back to the calibration flow and it should work.

--

Alexander Demidovskij

Hi Reshu Singh,

I found that you also posted a similar question under case number 05132480. We will treat these as one case; please continue further communication in case 05132480.

Regards,

Syamimi

Hi Reshu Singh,

This thread will no longer be monitored since we have provided a solution. If you need any additional information from Intel, please submit a new question.

Regards,

Syamimi
