hi,

According to the documentation, OpenVINO supports channelwise quantization:

- Per-channel quantization of weights of Convolutional and Fully-Connected layers.

- Per-channel quantization of activations for channel-wise and element-wise operations, e.g. Depthwise Convolution, Eltwise Add/Mul, ScaleShift.

- Symmetric and asymmetric quantization of weights and activations with the support of per-channel scales and zero-points.
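For readers unfamiliar with the terms in the last bullet, here is a minimal NumPy sketch of how per-channel scales and zero-points are commonly derived for convolution weights. It uses textbook formulas (int8 range for the symmetric case, uint8 range for the asymmetric case) and is an assumption for illustration only, not OpenVINO's own calibration code. The function name `per_channel_params` and the sample weights are hypothetical.

```python
import numpy as np

def per_channel_params(weights, symmetric=True):
    """Derive one (scale, zero_point) pair per output channel (axis 0).

    Assumption: textbook formulas, not taken from OpenVINO.
    """
    flat = weights.reshape(weights.shape[0], -1)
    if symmetric:
        # Symmetric: the range is centered on zero, so zero_point is 0.
        max_abs = np.abs(flat).max(axis=1)
        scale = np.maximum(max_abs, 1e-12) / 127.0
        zero_point = np.zeros(flat.shape[0], dtype=np.int32)
    else:
        # Asymmetric: map [min, max] (extended to include 0) onto [0, 255].
        lo = np.minimum(flat.min(axis=1), 0.0)
        hi = np.maximum(flat.max(axis=1), 0.0)
        scale = np.maximum(hi - lo, 1e-12) / 255.0
        zero_point = np.clip(np.rint(-lo / scale), 0, 255).astype(np.int32)
    return scale.astype(np.float32), zero_point

# Hypothetical conv weights with layout (out_channels, in_channels, kH, kW).
weights = np.array([[[[1.27, -0.5]]], [[[0.0, 2.55]]]], dtype=np.float32)
sym_scale, sym_zp = per_channel_params(weights, symmetric=True)
asym_scale, asym_zp = per_channel_params(weights, symmetric=False)
```

Either way the result is a 1D tensor with one entry per output channel, which is the shape of `y_scale` and `y_zero_point` discussed in this thread.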

But when I tried to feed Model Optimizer QuantizeLinear-DequantizeLinear nodes with tensor-valued scale/zero_point parameters, I got the following error:

    .../mo/extensions/front/onnx/quantize_dequantize_linear.py", line 52, in replace_sub_graph
        if q_scale.value == dq_scale.value and q_zerop.value == dq_zerop.value:
    ValueError: The truth value of an array with more than one element is ambiguous. Use a.any() or a.all()

The code around line 52 of quantize_dequantize_linear.py looks like this:

    # only constant as for zero_point/scale supported
    if q_scale.soft_get('type') == 'Const' and dq_scale.soft_get('type') == 'Const' and \
            q_zerop.soft_get('type') == 'Const' and dq_zerop.soft_get('type') == 'Const':

        # only patterns with same scale/zero_point values for Q and DQ are supported
        if q_scale.value == dq_scale.value and q_zerop.value == dq_zerop.value:
            log.debug('Found Q-DQ pattern after {}'.format(name))

From this code I can deduce that Model Optimizer (OpenVINO) only supports scalar quantization.
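The error itself comes from NumPy rather than from anything ONNX-specific: `and` calls `bool()` on the result of `==`, and for arrays with more than one element that result is an elementwise boolean array with no single truth value. A minimal sketch that reproduces it outside Model Optimizer, assuming plain NumPy arrays stand in for the `.value` attributes (the function name `same_params_naive` is hypothetical):

```python
import numpy as np

def same_params_naive(q_scale, dq_scale, q_zerop, dq_zerop):
    # Same shape as the check quoted above: `and` forces bool() on
    # the elementwise comparison result.
    return bool(q_scale == dq_scale and q_zerop == dq_zerop)

# Per-tensor (scalar) parameters: each comparison yields a single boolean.
scalar_ok = same_params_naive(
    np.array(0.1, dtype=np.float32), np.array(0.1, dtype=np.float32),
    np.array(128, dtype=np.uint8), np.array(128, dtype=np.uint8),
)

# Per-channel (1D) parameters: the comparison yields a boolean array,
# and bool() on it raises ValueError.
scale = np.array([0.1, 0.2, 0.3], dtype=np.float32)
zerop = np.array([128, 128, 128], dtype=np.uint8)
try:
    same_params_naive(scale, scale.copy(), zerop, zerop.copy())
    per_channel_error = None
except ValueError as exc:
    per_channel_error = str(exc)

print(scalar_ok)
print(per_channel_error)
```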

Are there ways to enable channelwise quantization?

Thanks in advance.

Hi Arseny,

Thanks for reaching out to us.

Could you share your model and the steps to reproduce with us for further understanding and investigation?

Regards,

Peh

Hi Peh,

thanks for your reply.

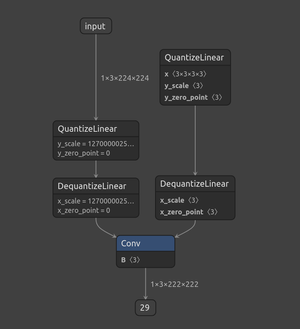

The simplest example is a conv2d (conv.onnx.tar.gz in the attachments):

- The left branch is scalar quantized: y_scale and y_zero_point are scalars.

- The right branch is channelwise quantized: y_scale and y_zero_point are 1D-tensors.
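The difference between the two branches can be sketched in NumPy. This follows the formula in the ONNX operator specification for QuantizeLinear, `y = saturate(round(x / y_scale) + y_zero_point)` with round-half-to-even, and its inverse for DequantizeLinear. The uint8 output type, the helper names, and the sample weights are assumptions for illustration; they are not taken from the attached model.

```python
import numpy as np

def _broadcast(param, ndim, axis):
    # Reshape a 1D per-channel parameter so it broadcasts along `axis`;
    # scalars are returned unchanged.
    param = np.asarray(param)
    if param.ndim == 1:
        shape = [1] * ndim
        shape[axis] = -1
        param = param.reshape(shape)
    return param

def quantize_linear(x, scale, zero_point, axis=0):
    scale = _broadcast(scale, x.ndim, axis).astype(np.float32)
    zero_point = _broadcast(zero_point, x.ndim, axis).astype(np.int32)
    q = np.rint(x / scale) + zero_point      # np.rint rounds half to even
    return np.clip(q, 0, 255).astype(np.uint8)  # saturate to the uint8 range

def dequantize_linear(q, scale, zero_point, axis=0):
    scale = _broadcast(scale, q.ndim, axis).astype(np.float32)
    zero_point = _broadcast(zero_point, q.ndim, axis).astype(np.int32)
    return (q.astype(np.int32) - zero_point).astype(np.float32) * scale

# Hypothetical weights, layout (out_channels, in_channels, kH, kW).
# Channel 0 holds much smaller values than channel 1.
w = np.array([[[[0.02, -0.03]]], [[[8.0, -4.0]]]], dtype=np.float32)

# Left-branch style: one scalar scale/zero_point for the whole tensor.
per_tensor = dequantize_linear(quantize_linear(w, 0.1, 128), 0.1, 128)

# Right-branch style: 1D scale/zero_point, one entry per output channel.
ch_scale = np.array([0.001, 0.1], dtype=np.float32)
ch_zerop = np.array([128, 128], dtype=np.uint8)
per_channel = dequantize_linear(quantize_linear(w, ch_scale, ch_zerop), ch_scale, ch_zerop)
```

With the single scalar scale, the small values in channel 0 round to the zero-point and are lost after the round trip, while the per-channel parameters preserve them. That is the usual motivation for channelwise weight quantization.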

When I put this model into Model Optimizer I get the following error:

    bash$ mo --input_model=conv.onnx
    Model Optimizer arguments:
    Common parameters:
        - Path to the Input Model: /.../conv.onnx
        - Path for generated IR: /.../.
        - IR output name: conv
        - Log level: ERROR
        - Batch: Not specified, inherited from the model
        - Input layers: Not specified, inherited from the model
        - Output layers: Not specified, inherited from the model
        - Input shapes: Not specified, inherited from the model
        - Mean values: Not specified
        - Scale values: Not specified
        - Scale factor: Not specified
        - Precision of IR: FP32
        - Enable fusing: True
        - Enable grouped convolutions fusing: True
        - Move mean values to preprocess section: None
        - Reverse input channels: False
    ONNX specific parameters:
        - Inference Engine found in: /.../venv39/lib/python3.9/site-packages/openvino
    Inference Engine version: 2021.4.2-3976-0943ed67223-refs/pull/539/head
    Model Optimizer version: 2021.4.2-3976-0943ed67223-refs/pull/539/head
    [ ERROR ] -------------------------------------------------
    [ ERROR ] ----------------- INTERNAL ERROR ----------------
    [ ERROR ] Unexpected exception happened.
    [ ERROR ] Please contact Model Optimizer developers and forward the following information:
    [ ERROR ] Exception occurred during running replacer "None (<class 'extensions.front.onnx.quantize_dequantize_linear.QuantizeDequantizeLinear'>)": The truth value of an array with more than one element is ambiguous. Use a.any() or a.all()
    [ ERROR ] Traceback (most recent call last):
      File "/.../venv39/lib/python3.9/site-packages/mo/mo/utils/class_registration.py", line 278, in apply_transform
        for_graph_and_each_sub_graph_recursively(graph, replacer.find_and_replace_pattern)
      File "/.../venv39/lib/python3.9/site-packages/mo/mo/middle/pattern_match.py", line 46, in for_graph_and_each_sub_graph_recursively
        func(graph)
      File "/.../venv39/lib/python3.9/site-packages/mo/mo/front/common/replacement.py", line 136, in find_and_replace_pattern
        apply_pattern(graph, action=self.replace_sub_graph, **self.pattern())
      File "/.../venv39/lib/python3.9/site-packages/mo/mo/middle/pattern_match.py", line 83, in apply_pattern
        action(graph, match)
      File "/.../venv39/lib/python3.9/site-packages/mo/extensions/front/onnx/quantize_dequantize_linear.py", line 52, in replace_sub_graph
        if q_scale.value == dq_scale.value and q_zerop.value == dq_zerop.value:
    ValueError: The truth value of an array with more than one element is ambiguous. Use a.any() or a.all()

    The above exception was the direct cause of the following exception:

    Traceback (most recent call last):
      File "/.../venv39/lib/python3.9/site-packages/mo/mo/main.py", line 394, in main
        ret_code = driver(argv)
      File "/.../venv39/lib/python3.9/site-packages/mo/mo/main.py", line 356, in driver
        ret_res = emit_ir(prepare_ir(argv), argv)
      File "/.../venv39/lib/python3.9/site-packages/mo/mo/main.py", line 252, in prepare_ir
        graph = unified_pipeline(argv)
      File "/.../venv39/lib/python3.9/site-packages/mo/mo/pipeline/unified.py", line 13, in unified_pipeline
        class_registration.apply_replacements(graph, [
      File "/.../venv39/lib/python3.9/site-packages/mo/mo/utils/class_registration.py", line 328, in apply_replacements
        apply_replacements_list(graph, replacers_order)
      File "/.../venv39/lib/python3.9/site-packages/mo/mo/utils/class_registration.py", line 314, in apply_replacements_list
        apply_transform(
      File "/.../venv39/lib/python3.9/site-packages/mo/mo/utils/logger.py", line 111, in wrapper
        function(*args, **kwargs)
      File "/.../venv39/lib/python3.9/site-packages/mo/mo/utils/class_registration.py", line 302, in apply_transform
        raise Exception('Exception occurred during running replacer "{} ({})": {}'.format(
    Exception: Exception occurred during running replacer "None (<class 'extensions.front.onnx.quantize_dequantize_linear.QuantizeDequantizeLinear'>)": The truth value of an array with more than one element is ambiguous. Use a.any() or a.all()
    [ ERROR ] ---------------- END OF BUG REPORT --------------
    [ ERROR ] -------------------------------------------------

When I use scalar quantization for the weights (right branch), Model Optimizer converts the model successfully, and I can run inference or analyze the model in Workbench.

Hi Arseny,

Thanks for sharing your model with us.

However, I am unable to load your model in Netron:

Error loading model. Archive contains no model files in 'conv.onnx'.

Could you please archive your model into a TAR file and share it with us again?

Thanks,

Peh

Peh,

my mistake, please try again.

Arseny

Hi Arseny,

Thanks for sharing the model again. Now, I can reproduce the issue when converting the ONNX model to IR.

I tried changing the code to use a.any() and a.all(), but it seems that only scalar quantization is supported, as you mentioned earlier.

if np.all(q_scale.value == dq_scale.value, q_zerop.value == dq_zerop.value):

Received error: TypeError: an integer is required

if np.any(q_scale.value == dq_scale.value, q_zerop.value == dq_zerop.value):

Received error: Only integer scalar arrays can be converted to a scalar index
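A note on why both attempts above fail: the second positional parameter of `np.all` and `np.any` is `axis`, so the second comparison result is interpreted as an axis rather than as a second condition, which is where the integer-related errors come from. A minimal sketch of a comparison that handles both scalar and per-channel parameters, assuming the values are NumPy arrays or scalars, is shown below. The helper name `qdq_params_match` is hypothetical, and this is not necessarily how the check was changed upstream.

```python
import numpy as np

def qdq_params_match(q_scale, dq_scale, q_zerop, dq_zerop):
    """Return True when Q and DQ use identical scale and zero-point values.

    np.array_equal compares shape and every element and returns a single
    boolean, so it is safe to combine with `and`.
    """
    return bool(np.array_equal(q_scale, dq_scale)
                and np.array_equal(q_zerop, dq_zerop))

# Per-channel parameters (1D tensors), as in the right branch of the model.
scale = np.array([0.1, 0.2, 0.3], dtype=np.float32)
zerop = np.array([128, 128, 128], dtype=np.uint8)

match_per_channel = qdq_params_match(scale, scale.copy(), zerop, zerop.copy())
mismatch_per_channel = qdq_params_match(scale, scale * 2, zerop, zerop)

# Scalar parameters still work, and a shape mismatch simply returns False.
match_scalar = qdq_params_match(np.float32(0.1), np.float32(0.1), np.uint8(0), np.uint8(0))
mismatch_shape = qdq_params_match(scale, np.float32(0.1), zerop, np.uint8(128))
```

Making the comparison succeed only addresses this one check. Whether the rest of the conversion pipeline handles per-channel Q/DQ parameters in 2021.4 is a separate question that this sketch does not answer.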

In the meantime, I will reach out to our Engineering team to ask whether there is a way to enable channelwise quantization.

Regards,

Peh

Hi Arseny,

Sorry for my late response.

I have verified that your issue is fixed in the latest OpenVINO code on GitHub, and the fix will be available in the next release, OpenVINO 2022.1.

For now, there are two options for you:

- Wait for the next release (the release date is not yet known).

- Download the latest branch from the OpenVINO™ GitHub repository and build it from source.

Regards,

Peh

hi Peh,

thanks for your reply.

We have fixed channelwise quantization for 2021.4. Could you invite reviewers?

https://github.com/openvinotoolkit/openvino/pull/9804

https://github.com/openvinotoolkit/openvino/pull/9798

Nobody has reviewed our pull requests so far.

Regards,

Arseny

Hi Arseny,

For all external PRs initiated in OpenVINO GitHub, we have a process whereby our development team will review, validate, and approve accordingly. You may add a comment on your PR thread for better visibility.

Regards,

Peh

Hi Arseny,

This thread will no longer be monitored since we have provided clarification and a suggestion. If you need any additional information from Intel, please submit a new question.

Regards,

Munesh
