
Hello Team,

I followed the OpenVINO Model Server quickstart tutorial, but I got an error at the inference step.

https://github.com/openvinotoolkit/model_server/blob/main/docs/ovms_quickstart.md

https://www.youtube.com/watch?v=AfytPrAVdfc

This is the error I get when running the inference command:

```
$ python3 face_detection.py --batch_size 1 --width 600 --height 400 --input_images_dir images --output_dir results
Request shape (1, 3, 400, 600)
Traceback (most recent call last):
  File "face_detection.py", line 79, in <module>
    result = stub.Predict(request, 10.0) # result includes a dictionary with all model outputs
  File "/home/mostafa/.local/lib/python3.8/site-packages/grpc/_channel.py", line 690, in __call__
    return _end_unary_response_blocking(state, call, False, None)
  File "/home/mostafa/.local/lib/python3.8/site-packages/grpc/_channel.py", line 592, in _end_unary_response_blocking
    raise _Rendezvous(state, None, None, deadline)
grpc._channel._Rendezvous: <_Rendezvous of RPC that terminated with:
	status = StatusCode.NOT_FOUND
	details = "Model with requested version is not found"
	debug_error_string = "{"created":"@1638208144.932722687","description":"Error received from peer ipv6:[::1]:9000","file":"src/core/lib/surface/call.cc","file_line":1055,"grpc_message":"Model with requested version is not found","grpc_status":5}"
```
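The `debug_error_string` in the traceback is a JSON document, so its fields can be read directly. A minimal stdlib-only sketch (the helper name `summarize_debug_error` and the partial status table are illustrative, not part of any library) that extracts which peer returned the status, the status name, and the server's message:

```python
import json

# debug_error_string copied from the traceback (outer quotes removed)
DEBUG_ERROR_STRING = (
    '{"created":"@1638208144.932722687",'
    '"description":"Error received from peer ipv6:[::1]:9000",'
    '"file":"src/core/lib/surface/call.cc","file_line":1055,'
    '"grpc_message":"Model with requested version is not found",'
    '"grpc_status":5}'
)

# Subset of the numeric gRPC status codes
GRPC_STATUS_NAMES = {
    0: "OK",
    1: "CANCELLED",
    2: "UNKNOWN",
    3: "INVALID_ARGUMENT",
    4: "DEADLINE_EXCEEDED",
    5: "NOT_FOUND",
    14: "UNAVAILABLE",
}


def summarize_debug_error(debug_error: str) -> dict:
    """Extract the peer, status name and message from a gRPC debug_error_string."""
    info = json.loads(debug_error)
    description = info.get("description", "")
    prefix = "Error received from peer "
    # The peer is only present when the status was sent by the remote side
    peer = description[len(prefix):] if description.startswith(prefix) else None
    code = info.get("grpc_status")
    return {
        "peer": peer,
        "status": GRPC_STATUS_NAMES.get(code, f"code {code}"),
        "message": info.get("grpc_message"),
    }


if __name__ == "__main__":
    print(summarize_debug_error(DEBUG_ERROR_STRING))
```

For this traceback the sketch reports peer `ipv6:[::1]:9000`, status `NOT_FOUND` (code 5), and the server's message, which helps when comparing the failing call against a working setup.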

Could you please help me fix it?

BR,

Mostafa


Hi Mostafa,

Thanks for reaching out.

We tried the tutorial and observed a similar issue when running inference. We will investigate further and get back to you soon.

By the way, did you encounter any errors in the steps before Step 7 (Run Inference)?
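One earlier step worth re-checking is the model download. OpenVINO Model Server serves a model from numbered version subdirectories, and the quickstart places the IR files under `model/1/`. The sketch below assumes that layout; the directory name `model` and the helper `find_model_versions` are illustrative assumptions. It lists which numeric version folders contain a matching `.xml`/`.bin` pair:

```python
from pathlib import Path


def find_model_versions(model_dir: str) -> dict:
    """Map each numeric version subdirectory to whether it holds an IR .xml/.bin pair.

    Assumes the layout <model_dir>/<version>/<name>.xml + <name>.bin.
    Subdirectories with non-numeric names are skipped here.
    """
    root = Path(model_dir)
    versions = {}
    if not root.is_dir():
        return versions
    for child in sorted(root.iterdir()):
        if child.is_dir() and child.name.isdecimal():
            xml_stems = {p.stem for p in child.glob("*.xml")}
            bin_stems = {p.stem for p in child.glob("*.bin")}
            # True only if some model name has both its .xml and .bin files
            versions[int(child.name)] = bool(xml_stems & bin_stems)
    return versions


if __name__ == "__main__":
    found = find_model_versions("model")
    if not found:
        print("No numeric version directories found under ./model")
    for version, complete in sorted(found.items()):
        status = "IR pair present" if complete else "missing .xml or .bin"
        print(f"version {version}: {status}")
```

An empty result, or a version without both files, would be consistent with the server having no loadable version of the model.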

Regards,

Aznie


Hi Aznie,

Thanks for your reply.

I didn't get any other errors, only that one.


Hello Aznie,

Have you had a chance to check the issue?

Many Thanks,

Mostafa


Hi Mostafa-,

Thank you for reaching out to us.

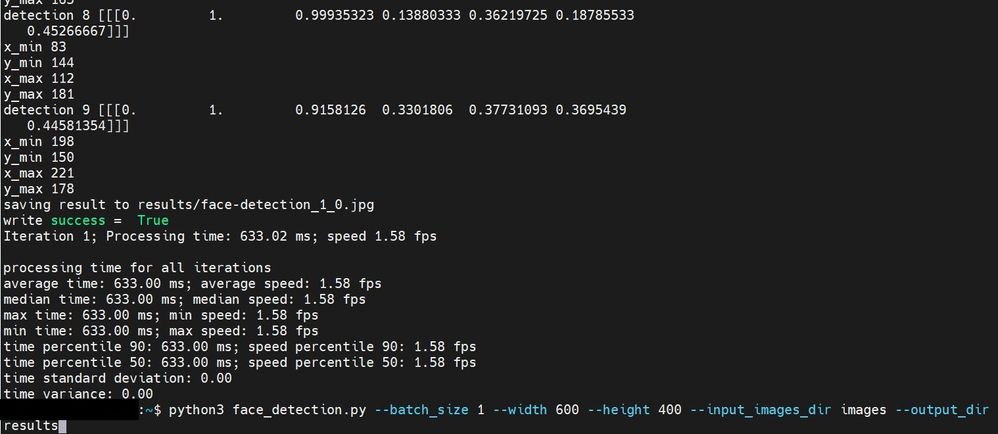

For your information, we have validated the OpenVINO™ Model Server Quickstart. The results are shown as follow:

Based on your error message, the issue might be related to the port or a proxy. Please check your proxy settings and, if possible, bypass the proxy for this connection.
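To check whether a proxy could apply to the client's connection, a rough stdlib sketch can inspect the proxy environment variables. To our understanding, gRPC's C core reads `grpc_proxy`, then `https_proxy`, then `http_proxy`, and honours `no_grpc_proxy`/`no_proxy`; checking the uppercase variants too is a conservative assumption. The helper `proxy_for_host` is hypothetical and only matches exact host names and `*`, so treat its result as indicative. The channel argument `grpc.enable_http_proxy` set to `0` tells gRPC to ignore proxy settings:

```python
import os

# Lookup order assumed to mirror gRPC's proxy handling (lowercase names)
PROXY_VARS = ("grpc_proxy", "https_proxy", "http_proxy")
NO_PROXY_VARS = ("no_grpc_proxy", "no_proxy")

# Channel argument that disables proxy use, e.g.
# grpc.insecure_channel("localhost:9000", options=DISABLE_PROXY_OPTIONS)
DISABLE_PROXY_OPTIONS = [("grpc.enable_http_proxy", 0)]


def _lookup(names, env):
    """Return (variable, value) for the first non-empty variable, lowercase first."""
    for name in names:
        for key in (name, name.upper()):
            value = env.get(key)
            if value:
                return key, value
    return None


def proxy_for_host(host: str, env=None):
    """Return (variable, proxy) if a proxy variable plausibly applies to host, else None."""
    env = os.environ if env is None else env
    proxy = _lookup(PROXY_VARS, env)
    if proxy is None:
        return None
    bypass = _lookup(NO_PROXY_VARS, env)
    if bypass is not None:
        # Simplified matching: exact host names and "*" only
        entries = [e.strip().lower() for e in bypass[1].split(",") if e.strip()]
        if "*" in entries or host.lower() in entries:
            return None
    return proxy


if __name__ == "__main__":
    hit = proxy_for_host("localhost")
    if hit is None:
        print("No proxy variable applies to localhost")
    else:
        print(f"{hit[0]}={hit[1]} may intercept requests to localhost")
```

If a proxy is reported, adding `localhost` to `no_proxy` or passing `DISABLE_PROXY_OPTIONS` when creating the client channel are two ways to rule it out.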

You may also refer to the following threads for more information related to your issue:

https://github.com/openvinotoolkit/model_server/issues/50

https://github.com/tensorflow/serving/issues/1770

Regards,

Wan


Hi Mostafa-,

Thank you for your question.

If you need any additional information from Intel, please submit a new question as this thread is no longer being monitored.

Regards,

Wan
