Hi, I am trying to use DL Workbench to perform INT8 optimization on my model, a MobileNetV2 trained in TensorFlow. I want to optimize it with the AccuracyAware method, but it fails with the following output:

```
[RUN COMMAND] + pot --direct-dump --progress-bar --output-dir /opt/intel/openvino_2022/tools/workbench/wb/data/int8_calibration_artifacts/53/job_artifacts --config /opt/intel/openvino_2022/tools/workbench/wb/data/int8_calibration_artifacts/53/scripts/int8_calibration.config.json
/usr/local/lib/python3.8/dist-packages/openvino/offline_transformations/__init__.py:10: FutureWarning: The module is private and following namespace offline_transformations will be removed in the future, use openvino.runtime.passes instead!
  warnings.warn(
0%| |00:00
0%| |00:00
0%| |00:00
Traceback (most recent call last):
  File "/usr/local/bin/pot", line 8, in <module>
    sys.exit(main())
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/pot/app/run.py", line 26, in main
    app(sys.argv[1:])
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/pot/app/run.py", line 56, in app
    metrics = optimize(config)
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/pot/app/run.py", line 119, in optimize
    compressed_model = pipeline.run(model)
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/pot/pipeline/pipeline.py", line 49, in run
    model = self.collect_statistics_and_run(model, current_algo_seq)
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/pot/pipeline/pipeline.py", line 62, in collect_statistics_and_run
    model = algo.run(model)
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/pot/algorithms/quantization/accuracy_aware/algorithm.py", line 51, in run
    return self._accuracy_aware_algo.run(model)
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/pot/algorithms/quantization/accuracy_aware_common/algorithm.py", line 141, in run
    self._baseline_metric, self._original_per_sample_metrics = self._collect_baseline(model, print_progress)
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/pot/algorithms/quantization/accuracy_aware_common/algorithm.py", line 225, in _collect_baseline
    return self._evaluate_model(model=model,
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/pot/algorithms/quantization/accuracy_aware_common/algorithm.py", line 534, in _evaluate_model
    metrics, metrics_per_sample = evaluate_model(model, self._engine, self.dataset_size,
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/pot/algorithms/quantization/accuracy_aware_common/utils.py", line 284, in evaluate_model
    (metrics_per_sample, metrics), raw_output = engine.predict(stats_layout=stats_layout,
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/pot/engines/ac_engine.py", line 192, in predict
    stdout_redirect(self.model_evaluator.process_dataset_async, **args)
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/pot/utils/logger.py", line 120, in stdout_redirect
    res = fn(*args, **kwargs)
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/accuracy_checker/evaluators/quantization_model_evaluator.py", line 130, in process_dataset_async
    self.process_dataset_async_infer_queue(
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/accuracy_checker/evaluators/quantization_model_evaluator.py", line 202, in process_dataset_async_infer_queue
    infer_queue.start_async(*self.launcher.prepare_data_for_request(
  File "/usr/local/lib/python3.8/dist-packages/openvino/runtime/ie_api.py", line 334, in start_async
    normalize_inputs(self[self.get_idle_request_id()], inputs),
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/accuracy_checker/evaluators/quantization_model_evaluator.py", line 176, in completion_callback
    metrics_result, _ = self.metric_executor.update_metrics_on_batch(
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/accuracy_checker/metrics/metric_executor.py", line 104, in update_metrics_on_batch
    results[input_id] = self.update_metrics_on_object(single_annotation, single_prediction)
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/accuracy_checker/metrics/metric_executor.py", line 82, in update_metrics_on_object
    metric_results.append(metric.metric_fn.submit(annotation, prediction))
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/accuracy_checker/metrics/metric.py", line 248, in submit
    metric_result = self.update(annotation, prediction)
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/accuracy_checker/metrics/classification.py", line 89, in update
    accuracy = self.accuracy.update(annotation.label, prediction.top_k(self.top_k))
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/accuracy_checker/representation/classification_representation.py", line 60, in top_k
    return np.argpartition(self.scores, -k)[-k:]
  File "<__array_function__ internals>", line 180, in argpartition
  File "/usr/local/lib/python3.8/dist-packages/numpy/core/fromnumeric.py", line 845, in argpartition
    return _wrapfunc(a, 'argpartition', kth, axis=axis, kind=kind, order=order)
  File "/usr/local/lib/python3.8/dist-packages/numpy/core/fromnumeric.py", line 57, in _wrapfunc
    return bound(*args, **kwds)
ValueError: kth(=-3) out of bounds (2)
FATAL: exception not rethrown
/opt/intel/openvino_2022/tools/workbench/wb/data/int8_calibration_artifacts/53/scripts/job.sh: line 68: 7357 Aborted pot --direct-dump --progress-bar --output-dir ${ARTIFACTS_PATH} --config ${JOB_BUNDLE_PATH}/scripts/int8_calibration.config.json
```

How can I resolve this?
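The last frames of the traceback point at `np.argpartition(self.scores, -k)[-k:]` in Accuracy Checker's `top_k`. The numbers in the error (`kth(=-3)`, size `2`) are consistent with a top-3 accuracy metric being applied to a model that outputs only two scores. The snippet below is a minimal NumPy reproduction under that assumption: `top_k` copies the failing expression, and `safe_top_k` is a hypothetical guard that caps `k` at the number of classes. If this is indeed the cause, setting the accuracy metric's `top_k` to 1 (or at most the number of classes) in the calibration configuration would be the matching fix.

```python
import numpy as np


def top_k(scores: np.ndarray, k: int) -> np.ndarray:
    """Same expression as the failing line in the traceback."""
    return np.argpartition(scores, -k)[-k:]


def safe_top_k(scores: np.ndarray, k: int) -> np.ndarray:
    """Hypothetical guard: never ask for more classes than the model outputs."""
    k = min(k, scores.size)
    return np.argpartition(scores, -k)[-k:]


# Hypothetical scores from a two-class classifier
scores = np.array([0.2, 0.8])

try:
    top_k(scores, 3)
except ValueError as err:
    print("top-3 on 2 classes fails:", err)

print(top_k(scores, 1))       # [1]
print(safe_top_k(scores, 3))  # both class indices, since only 2 classes exist
```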

I then tried the default settings, and the model was quantized to INT8 without errors.

However, when I run inference on the optimized .bin and .xml files with the code below, the accuracy drops significantly, from 95% to 50%. Is this normal, or is there a problem with my code?

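```python
import time

import cv2
from openvino.inference_engine import IECore  # legacy Inference Engine API

# ...

ie = IECore()
net = ie.read_network(model=model_xml, weights=model_bin)
net.batch_size = batch_size
exec_net = ie.load_network(network=net, device_name=device)
nq = exec_net.get_metric("OPTIMAL_NUMBER_OF_INFER_REQUESTS")

input_blob = next(iter(net.input_info))
out_blob = next(iter(net.outputs))

image = cv2.imread(filename)  # OpenCV loads images in BGR order
# blobFromImage (singular) takes a single image; blobFromImages expects a list of images.
# Result: float32 NCHW blob, converted to RGB (swapRB=True) and scaled to [0, 1].
blob = cv2.dnn.blobFromImage(image,
                             scalefactor=1.0 / 255.0,
                             size=image_size,
                             mean=(0, 0, 0),
                             swapRB=True,
                             crop=False)

start = time.time()
outputs = exec_net.infer(inputs={input_blob: blob})
end = time.time()

output_node_name = list(outputs.keys())[0]
outputs = outputs[output_node_name]

# ...
```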
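A common cause of a large accuracy gap is preprocessing that differs between training and inference. As a quick check, the sketch below reproduces in plain NumPy what the OpenCV blob call above is meant to produce: BGR to RGB, scaling by 1/255, a batch axis, and optionally channels-first. It assumes (the thread does not confirm this) that the TensorFlow model was trained on RGB images scaled to [0, 1] and that the image is already at the model's input resolution; `to_model_input` is a hypothetical helper. Whether channels should go first depends on the IR's input layout; the NNCF script later in this thread feeds `(1, 480, 704, 3)`, which suggests channels-last.

```python
import numpy as np


def to_model_input(bgr_image: np.ndarray, channels_first: bool) -> np.ndarray:
    """Hypothetical helper: BGR uint8 HxWx3 image -> float32 batch of one.

    Assumes the model expects RGB values in [0, 1] and that the image is
    already at the model's input resolution (no resizing is done here).
    """
    rgb = bgr_image[:, :, ::-1].astype(np.float32) / 255.0  # BGR -> RGB, scale to [0, 1]
    if channels_first:
        rgb = np.transpose(rgb, (2, 0, 1))  # HWC -> CHW, the layout blobFromImage produces
    return np.ascontiguousarray(rgb[np.newaxis, ...])  # add the batch axis


# Tiny 2x2 BGR test image with one pure-blue pixel at (0, 0)
image = np.zeros((2, 2, 3), dtype=np.uint8)
image[0, 0] = (255, 0, 0)  # blue in BGR order

nchw = to_model_input(image, channels_first=True)
nhwc = to_model_input(image, channels_first=False)
print(nchw.shape, nhwc.shape)  # (1, 3, 2, 2) (1, 2, 2, 3)
print(nhwc[0, 0, 0])           # [0. 0. 1.]  blue is now the last channel (RGB order)
```

Comparing this tensor with the one fed to TensorFlow during evaluation, for the same image, can rule preprocessing in or out before blaming quantization.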

I believe OpenVINO can do much better here, but I am not sure how. I have searched for several days but still cannot find a straightforward example of using OpenVINO to optimize a TensorFlow PB file to INT8 and then run inference on offline images. I found examples of converting to FP16 and of using a TensorFlow SavedModel, but none that go from a PB file to an INT8 .bin/.xml pair and run it on images loaded with OpenCV.

If anyone has such information, could you please share it with me? I would greatly appreciate it.

Thank you very much

Hi Sunny84,

Greetings to you.

Could you please share your model and annotated dataset with us for further investigation?

Meanwhile, please try the steps in Configure INT8 Calibration Settings and check whether you can convert your model to INT8 with the expected accuracy.

Regards,

Zulkifli

Dear Zulkifli,

Thank you very much for your kind reply.

Attached are all the related files (TensorFlow PB file, TensorFlow inference code, OpenVINO-optimized files, OpenVINO inference code, the original dataset, the dataset in the ImageNet format required by DL Workbench, etc.).

I am using TensorFlow 2.5 to train the model and export the PB file.

Because my original dataset is confidential, I trained a new model on a fruit dataset (apple and banana) for this purpose.

With TensorFlow inference, the model achieves 100% accuracy; however, after optimization with the default calibration settings, the overall accuracy drops to 86%. (On the other dataset, it drops from 95% to 50%.)

Given these results, are my calibration approach and inference code correct?
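To separate a genuine quantization loss from a pipeline problem, it can help to compare predictions image by image rather than only the overall accuracy. Running the FP32 IR through the same OpenVINO script as the INT8 IR isolates the effect of quantization from that of conversion and preprocessing. A minimal sketch, assuming per-image class indices have been collected from both runs (the arrays below are hypothetical, not the thread's data):

```python
import numpy as np


def accuracy(pred: np.ndarray, labels: np.ndarray) -> float:
    """Top-1 accuracy: fraction of predictions that match the labels."""
    return float(np.mean(pred == labels))


def agreement(pred_a: np.ndarray, pred_b: np.ndarray) -> float:
    """Fraction of samples on which two models predict the same class."""
    return float(np.mean(pred_a == pred_b))


# Hypothetical per-image predictions (0 = apple, 1 = banana)
labels = np.array([0, 0, 1, 1, 1])
fp32_pred = np.array([0, 0, 1, 1, 1])
int8_pred = np.array([0, 1, 1, 1, 0])

print(accuracy(fp32_pred, labels))      # 1.0
print(accuracy(int8_pred, labels))      # 0.6
print(agreement(fp32_pred, int8_pred))  # 0.6
# Indices where INT8 disagrees with FP32: the images worth inspecting by hand
print(np.flatnonzero(fp32_pred != int8_pred))  # [1 4]
```

If the FP32 IR already falls short of TensorFlow, the problem lies before quantization (conversion or preprocessing); if only the INT8 IR does, the calibration is the place to look.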

Thank you.

Best regards,

Sunny

Hi Sunny84,

We apologize for the late response; the team has been investigating the issue. According to the developers, INT8 quantization does not guarantee accurate results for every model.
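One intuition for why INT8 accuracy is model-dependent: each tensor is mapped onto 8-bit integer levels using a scale derived from calibration statistics, so a wide range (for example, one inflated by a few outliers) leaves coarse steps for the typical values. The sketch below uses simple per-tensor symmetric quantization with the scale taken from the maximum absolute value. It is a generic illustration, not the exact scheme NNCF or POT applies.

```python
import numpy as np


def fake_quantize_int8(x: np.ndarray, max_abs: float) -> np.ndarray:
    """Symmetric per-tensor INT8: quantize to [-127, 127], then dequantize."""
    scale = max_abs / 127.0
    q = np.clip(np.round(x / scale), -127, 127)
    return q * scale


rng = np.random.default_rng(0)
x = rng.normal(0.0, 1.0, size=10_000)  # stand-in for typical activations

tight = fake_quantize_int8(x, max_abs=float(np.max(np.abs(x))))  # range fits the data
loose = fake_quantize_int8(x, max_abs=100.0)  # range inflated by a hypothetical outlier

print("mean abs error, tight range:", np.mean(np.abs(x - tight)))
print("mean abs error, loose range:", np.mean(np.abs(x - loose)))
```

The loose range produces a much larger error on exactly the same values, which is why representative calibration data matters.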

FYI, DL Workbench is based on POT (Post-Training Optimization Tool), which is no longer supported, so we advise switching to NNCF. You can follow the example code on GitHub (https://github.com/openvinotoolkit/nncf).

The developers also asked for a larger dataset: we need around 200-300 images. The dataset you shared has just over 20 images, which is not enough and will also affect the accuracy.
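Since the size of the calibration set matters, here is a minimal standard-library sketch that draws up to a fixed number of images per class from a folder-per-class dataset, such as the ImageFolder layout used later in this thread. The function name, per-class count, and file extensions are illustrative assumptions; about 150 images per class in a two-class problem would give the roughly 300 images requested. The resulting list could then be wrapped in `nncf.Dataset` together with a transform function that loads and preprocesses each file.

```python
import random
import tempfile
from pathlib import Path


def sample_calibration_files(root, per_class, seed=0, exts=(".jpg", ".jpeg", ".png")):
    """Hypothetical helper: pick up to `per_class` images from each class subfolder."""
    rng = random.Random(seed)  # fixed seed so the subset is reproducible
    selected = []
    for class_dir in sorted(p for p in Path(root).iterdir() if p.is_dir()):
        files = sorted(f for f in class_dir.iterdir() if f.suffix.lower() in exts)
        selected.extend(rng.sample(files, min(per_class, len(files))))
    return selected


# Example: a throwaway dataset with 200 "apple" files and 120 "banana" files
with tempfile.TemporaryDirectory() as tmp:
    for name, count in (("apple", 200), ("banana", 120)):
        class_dir = Path(tmp, name)
        class_dir.mkdir()
        for i in range(count):
            (class_dir / f"{i}.jpg").touch()
    subset = sample_calibration_files(tmp, per_class=150)
    print(len(subset))  # 270: 150 apples plus all 120 bananas
```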

Let me know if you can provide the images, and we can help you check the quantization of the model.

Hope to hear from you soon.

Thank you

Hi Sunny84,

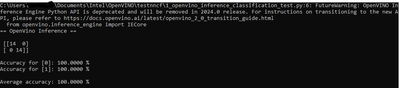

I quantized your model to INT8 with NNCF, and the results show 100% accuracy.

Below is my script for quantization using NNCF:

```python
from pathlib import Path

import nncf
import numpy as np
import openvino.runtime as ov
import torch
from torchvision import datasets, transforms

ROOT = Path(__file__).parent.resolve()

# Instantiate your uncompressed model
model = ov.Core().read_model(r"db0_mobilenetv2_704x480_fp32.xml")

# Provide validation part of the dataset to collect statistics needed for the compression algorithm.
# Resize to the model input (height 480, width 704); ToTensor() yields RGB CHW float32 in [0, 1].
val_dataset = datasets.ImageFolder(
    r"Dataset0_Fruit_ObjectClassification_train_val_test\train",
    transform=transforms.Compose([transforms.Resize((480, 704)), transforms.ToTensor()]),
)
dataset_loader = torch.utils.data.DataLoader(val_dataset, batch_size=1)


# Step 1: Initialize transformation function
def transform_fn(data_item):
    images, _ = data_item
    npy_array = images.numpy()[0, :, :, :]  # (3, 480, 704), CHW
    # Move channels last and add a batch axis -> (1, 480, 704, 3).
    # np.resize would only refill the buffer in the new shape and scramble the pixels.
    npy_array_rs = np.ascontiguousarray(np.transpose(npy_array, (1, 2, 0))[np.newaxis, ...])
    return npy_array_rs


# Step 2: Initialize NNCF Dataset
calibration_dataset = nncf.Dataset(dataset_loader, transform_fn)

# Step 3: Run the quantization pipeline
quantized_model = nncf.quantize(model, calibration_dataset)

quantized_model_path = Path(f"{ROOT}/mobilenetv2_nncf_newint8.xml")
ov.save_model(quantized_model, str(quantized_model_path), compress_to_fp16=False)
```
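Converting the CHW tensor produced by `transforms.ToTensor()` into the NHWC shape `(1, 480, 704, 3)` is easy to get wrong: when the element count is unchanged, `np.resize` simply refills the existing buffer into the new shape (like `reshape`), while `np.transpose` actually moves the channel axis. The small check below uses toy sizes, not the real model input, to show the difference.

```python
import numpy as np

# Toy CHW tensor: 3 channels of 2x2 pixels; channel c is filled with the value c
chw = np.stack([np.full((2, 2), c, dtype=np.float32) for c in range(3)])

resized = np.resize(chw, (1, 2, 2, 3))                 # same buffer, new shape
transposed = np.transpose(chw, (1, 2, 0))[np.newaxis]  # genuine CHW -> NHWC

print(transposed[0, 0, 0])  # [0. 1. 2.]  one pixel with its three channel values
print(resized[0, 0, 0])     # [0. 0. 0.]  three values taken from channel 0 only
```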

Below are the test results using the NNCF-quantized model and 1_openvino_inference_classification_test.py:

Hi, this thread will no longer be monitored since we have provided a solution. If you need any additional information from Intel, please submit a new question.
