Hello Expert,

I am trying to convert the raw license-plate-recognition-barrier-0007 model to IR using the mo_tf.py script, and I ran into the following errors:

C:\Program Files (x86)\IntelSWTools\openvino_2021\deployment_tools\model_optimizer>python mo_tf.py --input_model "C:\Users\allensen\Documents\Intel\Models\public\license-plate-recognition-barrier-0007\graph.pb.frozen" --data_type=FP32 --model_name vehicle-license-plate-detection-barrier-0123_FP32 --output_dir "C:\Users\allensen\Documents\Intel\IR_Models"

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: C:\Users\allensen\Documents\Intel\Models\public\license-plate-recognition-barrier-0007\graph.pb.frozen

- Path for generated IR: C:\Users\allensen\Documents\Intel\IR_Models

- IR output name: vehicle-license-plate-detection-barrier-0123_FP32

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: Not specified, inherited from the model

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP32

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: None

- Reverse input channels: False

TensorFlow specific parameters:

- Input model in text protobuf format: False

- Path to model dump for TensorBoard: None

- List of shared libraries with TensorFlow custom layers implementation: None

- Update the configuration file with input/output node names: None

- Use configuration file used to generate the model with Object Detection API: None

- Use the config file: None

- Inference Engine found in: C:\Program Files (x86)\IntelSWTools\openvino_2021\python\python3.7\openvino

Inference Engine version: 2.1.2021.3.0-2787-60059f2c755-releases/2021/3

Model Optimizer version: 2021.3.0-2787-60059f2c755-releases/2021/3

[ ERROR ] Shape [-1 24 94 3] is not fully defined for output 0 of "input". Use --input_shape with positive integers to override model input shapes.

[ ERROR ] Cannot infer shapes or values for node "input".

[ ERROR ] Not all output shapes were inferred or fully defined for node "input".

For more information please refer to Model Optimizer FAQ, question #40. (https://docs.openvinotoolkit.org/latest/openvino_docs_MO_DG_prepare_model_Model_Optimizer_FAQ.html?question=40#question-40)

[ ERROR ]

[ ERROR ] It can happen due to bug in custom shape infer function <function Parameter.infer at 0x00000165C95634C8>.

[ ERROR ] Or because the node inputs have incorrect values/shapes.

[ ERROR ] Or because input shapes are incorrect (embedded to the model or passed via --input_shape).

[ ERROR ] Run Model Optimizer with --log_level=DEBUG for more information.

[ ERROR ] Exception occurred during running replacer "REPLACEMENT_ID" (<class 'extensions.middle.PartialInfer.PartialInfer'>): Stopped shape/value propagation at "input" node.

For more information please refer to Model Optimizer FAQ, question #38. (https://docs.openvinotoolkit.org/latest/openvino_docs_MO_DG_prepare_model_Model_Optimizer_FAQ.html?question=38#question-38)

Can you let me know if I am missing anything like the input_checkpoint entry?

Thanks.

Hi,

I managed to convert the file with this command:

C:\Program Files (x86)\IntelSWTools\openvino_2021\deployment_tools\model_optimizer>python mo_tf.py --input_model "C:\Users\allensen\Documents\Intel\Models\public\license-plate-recognition-barrier-0007\graph.pb.frozen" --data_type=FP32 --model_name vehicle-license-plate-detection-barrier-0123_FP32 --output_dir "C:\Users\allensen\Documents\Intel\IR_Models" --input_shape=[1,24,94,3]

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: C:\Users\allensen\Documents\Intel\Models\public\license-plate-recognition-barrier-0007\graph.pb.frozen

- Path for generated IR: C:\Users\allensen\Documents\Intel\IR_Models

- IR output name: vehicle-license-plate-detection-barrier-0123_FP32

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: [1,24,94,3]

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP32

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: None

- Reverse input channels: False

TensorFlow specific parameters:

- Input model in text protobuf format: False

- Path to model dump for TensorBoard: None

- List of shared libraries with TensorFlow custom layers implementation: None

- Update the configuration file with input/output node names: None

- Use configuration file used to generate the model with Object Detection API: None

- Use the config file: None

- Inference Engine found in: C:\Program Files (x86)\IntelSWTools\openvino_2021\python\python3.7\openvino

Inference Engine version: 2.1.2021.3.0-2787-60059f2c755-releases/2021/3

Model Optimizer version: 2021.3.0-2787-60059f2c755-releases/2021/3

[ SUCCESS ] Generated IR version 10 model.

[ SUCCESS ] XML file: C:\Users\allensen\Documents\Intel\IR_Models\vehicle-license-plate-detection-barrier-0123_FP32.xml

[ SUCCESS ] BIN file: C:\Users\allensen\Documents\Intel\IR_Models\vehicle-license-plate-detection-barrier-0123_FP32.bin

[ SUCCESS ] Total execution time: 13.88 seconds.

It's been a while, check for a new version of Intel(R) Distribution of OpenVINO(TM) toolkit here https://software.intel.com/content/www/us/en/develop/tools/openvino-toolkit/download.html?cid=other&source=prod&campid=ww_2021_bu_IOTG_OpenVINO-2021-3&content=upg_all&medium=organic or on the GitHub*
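For anyone hitting the same error: the only change from the failing command is the added --input_shape, which replaces the undefined batch dimension (-1) reported in the error with a concrete value. A minimal sketch of that substitution is below. The helper names `resolve_shape` and `to_input_shape_arg` are hypothetical and only for illustration; they are not part of Model Optimizer.

```python
def resolve_shape(shape, batch=1):
    """Replace an undefined (-1) leading batch dimension with a concrete batch size."""
    resolved = [batch if (i == 0 and dim == -1) else dim for i, dim in enumerate(shape)]
    # Model Optimizer asks for positive integers only, so reject anything else.
    if any(dim <= 0 for dim in resolved):
        raise ValueError(f"shape is still not fully defined: {resolved}")
    return resolved


def to_input_shape_arg(shape):
    """Format a shape the way it was passed on the command line above."""
    return "--input_shape=[" + ",".join(str(dim) for dim in shape) + "]"


# Shape reported in the error message for the "input" node.
model_shape = [-1, 24, 94, 3]
fixed_shape = resolve_shape(model_shape)
print(to_input_shape_arg(fixed_shape))  # --input_shape=[1,24,94,3]
```

A batch size of 1 is an assumption that matches the command used here; other batch sizes would need the same value wherever the IR is consumed.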

Hello Expert,

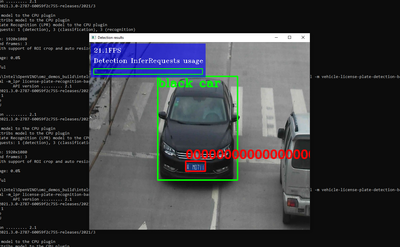

Is there something wrong with the execution code or the pre-trained model?

It is unable to recognize the license plate number correctly with the license-plate-recognition-barrier-0007 model.

Please see the attached file.

Thanks.

Hi DarkHorse,

Thank you for reaching out.

I have tested this demo and am getting the same output as you. We will investigate this issue and get back to you with further information soon. Meanwhile, for the license-plate-recognition-barrier-0007 model, you could use the converter.py script to convert the model directly into Intermediate Representation (IR) format. The IR generated will be FP16, FP32 and also INT8.

Regards,

Aznie

@DarkHorse just curious: you ask about conversion of license-plate-recognition-barrier-0007, while in your log the output IR name is vehicle-license-plate-detection-barrier-0123, which is a different model:

@DarkHorse wrote:

[ SUCCESS ] XML file: C:\Users\allensen\Documents\Intel\IR_Models\vehicle-license-plate-detection-barrier-0123_FP32.xml

[ SUCCESS ] BIN file: C:\Users\allensen\Documents\Intel\IR_Models\vehicle-license-plate-detection-barrier-0123_FP32.bin

So, my question is: did you intentionally rename the model at conversion?

If you are not sure how to call Model Optimizer from the command line and your interest is limited to Open Model Zoo models, I would recommend using the OMZ downloader, converter and, potentially, quantizer scripts, which simplify the routine tasks of downloading, converting and quantizing models.

You can see an example of the model conversion call for OMZ models in each model description; it is as simple as:

python3 <omz_dir>/tools/downloader/converter.py --name <model_name>
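If you want to drive that call from a script, a minimal sketch could look like the one below. The function name `build_converter_command` and the example directory are hypothetical. Only `--name` appears in this thread; `--precisions` is an additional converter.py option as I understand it, so please check `converter.py --help` for your release before relying on it.

```python
def build_converter_command(omz_dir, model_name, precisions=None):
    """Build the argument list for the OMZ converter.py call shown above."""
    cmd = [
        "python3",
        f"{omz_dir}/tools/downloader/converter.py",
        "--name",
        model_name,
    ]
    # Optional: restrict the generated IR precisions (assumed flag, see the note above).
    if precisions:
        cmd += ["--precisions", ",".join(precisions)]
    return cmd


# "open_model_zoo" is a placeholder for your local <omz_dir>.
cmd = build_converter_command(
    "open_model_zoo", "license-plate-recognition-barrier-0007", ["FP32"]
)
print(" ".join(cmd))
```

The list form can be passed to `subprocess.run(cmd, check=True)` once the model has been fetched with the downloader script.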

Hello @Vladimir_Dudnik ,

I made a typo in the output name for license-plate-recognition-barrier-0007; the correct conversion steps are below:

By the way, I am using the mo_tf.py script, which can be found under model_optimizer, so I assume it should do the same as the converter.py script, right?

C:\Program Files (x86)\IntelSWTools\openvino_2021\deployment_tools\model_optimizer>python mo_tf.py --input_model "C:\Users\allensen\Documents\Intel\Models\public\license-plate-recognition-barrier-0007\graph.pb.frozen" --data_type=FP32 --model_name license-plate-recognition-barrier-0007_FP32 --output_dir "C:\Users\allensen\Documents\Intel\IR_Models" --input_shape=[1,24,94,3]

C:\Users\allensen\AppData\Local\Programs\Python\Python37\lib\site-packages\numpy\_distributor_init.py:32: UserWarning: loaded more than 1 DLL from .libs:

C:\Users\allensen\AppData\Local\Programs\Python\Python37\lib\site-packages\numpy\.libs\libopenblas.GK7GX5KEQ4F6UYO3P26ULGBQYHGQO7J4.gfortran-win_amd64.dll

C:\Users\allensen\AppData\Local\Programs\Python\Python37\lib\site-packages\numpy\.libs\libopenblas.WCDJNK7YVMPZQ2ME2ZZHJJRJ3JIKNDB7.gfortran-win_amd64.dll

stacklevel=1)

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: C:\Users\allensen\Documents\Intel\Models\public\license-plate-recognition-barrier-0007\graph.pb.frozen

- Path for generated IR: C:\Users\allensen\Documents\Intel\IR_Models

- IR output name: license-plate-recognition-barrier-0007_FP32

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: [1,24,94,3]

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP32

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: None

- Reverse input channels: False

TensorFlow specific parameters:

- Input model in text protobuf format: False

- Path to model dump for TensorBoard: None

- List of shared libraries with TensorFlow custom layers implementation: None

- Update the configuration file with input/output node names: None

- Use configuration file used to generate the model with Object Detection API: None

- Use the config file: None

C:\Users\allensen\AppData\Local\Programs\Python\Python37\lib\site-packages\numpy\_distributor_init.py:32: UserWarning: loaded more than 1 DLL from .libs:

C:\Users\allensen\AppData\Local\Programs\Python\Python37\lib\site-packages\numpy\.libs\libopenblas.GK7GX5KEQ4F6UYO3P26ULGBQYHGQO7J4.gfortran-win_amd64.dll

C:\Users\allensen\AppData\Local\Programs\Python\Python37\lib\site-packages\numpy\.libs\libopenblas.WCDJNK7YVMPZQ2ME2ZZHJJRJ3JIKNDB7.gfortran-win_amd64.dll

stacklevel=1)

- Inference Engine found in: C:\Program Files (x86)\IntelSWTools\openvino_2021\python\python3.7\openvino

Inference Engine version: 2.1.2021.3.0-2787-60059f2c755-releases/2021/3

Model Optimizer version: 2021.3.0-2787-60059f2c755-releases/2021/3

2021-07-20 21:14:47.052631: W tensorflow/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'cudart64_110.dll'; dlerror: cudart64_110.dll not found

2021-07-20 21:14:47.087600: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

C:\Users\allensen\AppData\Local\Programs\Python\Python37\lib\site-packages\numpy\_distributor_init.py:32: UserWarning: loaded more than 1 DLL from .libs:

C:\Users\allensen\AppData\Local\Programs\Python\Python37\lib\site-packages\numpy\.libs\libopenblas.GK7GX5KEQ4F6UYO3P26ULGBQYHGQO7J4.gfortran-win_amd64.dll

C:\Users\allensen\AppData\Local\Programs\Python\Python37\lib\site-packages\numpy\.libs\libopenblas.WCDJNK7YVMPZQ2ME2ZZHJJRJ3JIKNDB7.gfortran-win_amd64.dll

stacklevel=1)

C:\Users\allensen\AppData\Local\Programs\Python\Python37\lib\site-packages\numpy\_distributor_init.py:32: UserWarning: loaded more than 1 DLL from .libs:

C:\Users\allensen\AppData\Local\Programs\Python\Python37\lib\site-packages\numpy\.libs\libopenblas.GK7GX5KEQ4F6UYO3P26ULGBQYHGQO7J4.gfortran-win_amd64.dll

C:\Users\allensen\AppData\Local\Programs\Python\Python37\lib\site-packages\numpy\.libs\libopenblas.WCDJNK7YVMPZQ2ME2ZZHJJRJ3JIKNDB7.gfortran-win_amd64.dll

stacklevel=1)

C:\Users\allensen\AppData\Local\Programs\Python\Python37\lib\site-packages\numpy\_distributor_init.py:32: UserWarning: loaded more than 1 DLL from .libs:

C:\Users\allensen\AppData\Local\Programs\Python\Python37\lib\site-packages\numpy\.libs\libopenblas.GK7GX5KEQ4F6UYO3P26ULGBQYHGQO7J4.gfortran-win_amd64.dll

C:\Users\allensen\AppData\Local\Programs\Python\Python37\lib\site-packages\numpy\.libs\libopenblas.WCDJNK7YVMPZQ2ME2ZZHJJRJ3JIKNDB7.gfortran-win_amd64.dll

stacklevel=1)

[ SUCCESS ] Generated IR version 10 model.

[ SUCCESS ] XML file: C:\Users\allensen\Documents\Intel\IR_Models\license-plate-recognition-barrier-0007_FP32.xml

[ SUCCESS ] BIN file: C:\Users\allensen\Documents\Intel\IR_Models\license-plate-recognition-barrier-0007_FP32.bin

[ SUCCESS ] Total execution time: 16.74 seconds.

It's been a while, check for a new version of Intel(R) Distribution of OpenVINO(TM) toolkit here https://software.intel.com/content/www/us/en/develop/tools/openvino-toolkit/download.html?cid=other&source=prod&campid=ww_2021_bu_IOTG_OpenVINO-2021-3&content=upg_all&medium=organic or on the GitHub*

Hi DarkHorse,

I suspect this could be a bug in OpenVINO. I have run the demo with the license-plate-recognition-barrier-0001 model and it worked properly. For now, I would suggest you use the license-plate-recognition-barrier-0001 model, and we will report this bug to our developers.

Regards,

Aznie

Hi DarkHorse,

We have validated that the issue started with OpenVINO 2021.1, when the type of the output layer for this model (license-plate-recognition-barrier-0007) changed from FP32 to I32. Our development team has made some fixes to the demo and the model description in PR-2634. The fix will be officially released in OpenVINO 2021.4.1.
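To illustrate why such a type change can garble the recognized plate, here is a minimal sketch of what can happen when I32 output data is read as if it were FP32. This is an assumption about the failure mode for illustration only, not the actual demo code; the three-letter alphabet and the -1 end-of-sequence marker are likewise hypothetical.

```python
import struct


def reinterpret_i32_as_f32(values):
    """Read the raw bytes of 32-bit integers as if they were 32-bit floats."""
    raw = struct.pack(f"<{len(values)}i", *values)
    return list(struct.unpack(f"<{len(values)}f", raw))


def decode(indices, alphabet):
    """Map output indices to characters, stopping at the first negative index."""
    chars = []
    for idx in indices:
        if idx < 0:
            break
        chars.append(alphabet[idx])
    return "".join(chars)


alphabet = ["A", "B", "C"]     # hypothetical character dictionary
output_i32 = [2, 0, 1, -1]     # hypothetical I32 output, -1 marks the end

# Reading the output with the correct type gives the expected text.
print(decode(output_i32, alphabet))  # CAB

# Reading the same bytes as FP32 turns small indices into values near zero,
# so every position collapses to index 0 after truncation.
misread = reinterpret_i32_as_f32(output_i32[:3])
print(decode([int(v) for v in misread], alphabet))  # AAA
```

Checking the output precision before reading the buffer avoids this kind of mismatch.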

Regards,

Aznie

Hi DarkHorse,

This thread will no longer be monitored since we have provided a solution. If you need any additional information from Intel, please submit a new question.

Regards,

Aznie
