Hi everybody,

I am working on a project to classify images with the NCS2. Classification works when I use only one NCS2, and the inference time is ~0.37 s.

The inference time is calculated with the following lines of code:

time_pre = time.time()

outputs = exec_net.infer({'data': inputs})

time_post = time.time()

inference_time = time_post - time_pre
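For reference, a minimal sketch of the same measurement averaged over several runs. The elapsed time is the end timestamp minus the start timestamp. The helper name `average_latency` and its `runs` and `warmup` parameters are assumptions made for illustration; they are not part of OpenVINO.

```python
import time


def average_latency(infer_fn, runs=10, warmup=1):
    """Return the mean wall-clock seconds per call of infer_fn()."""
    for _ in range(warmup):
        infer_fn()  # untimed calls, since the first call may include one-time setup
    start = time.perf_counter()
    for _ in range(runs):
        infer_fn()
    return (time.perf_counter() - start) / runs


# Hypothetical usage with the exec_net and inputs from the post:
# latency = average_latency(lambda: exec_net.infer({'data': inputs}))
```

`time.perf_counter()` is used here instead of `time.time()` because it is a monotonic, high-resolution clock intended for measuring short durations.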

However, when I used two NCS2 devices and configured the OpenVINO IECore as below:

plugin = IECore()

net = plugin.read_network(model=model_xml, weights=model_bin)

exec_net = plugin.load_network(network=net, device_name='HETERO:MYRIAD.1.1.2-ma2480,MYRIAD.1.2-ma2480')

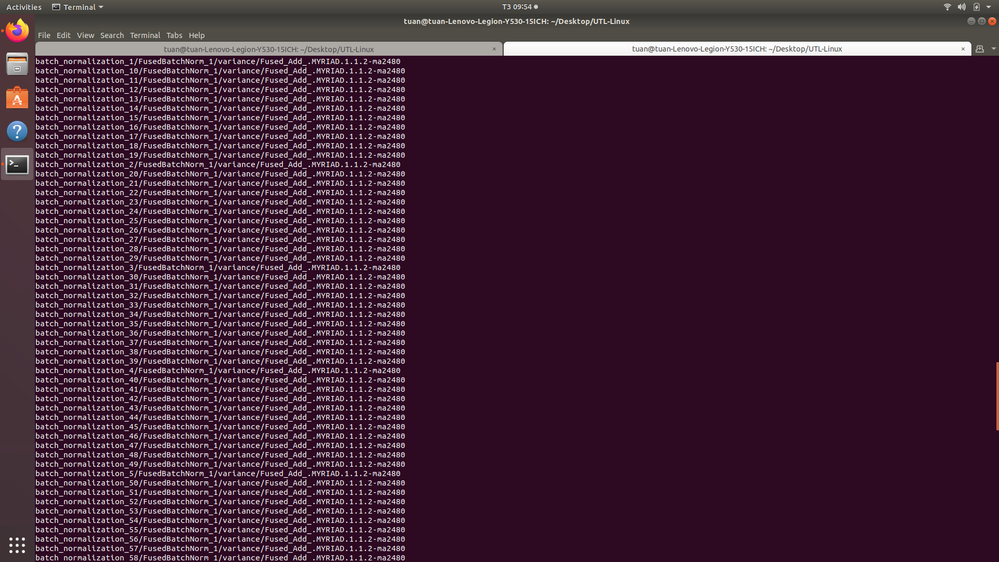

The inference time does not decrease. It is still ~0.37 s. I also checked the query layers map, and all layers are always assigned to one NCS2 device (please refer to the image).

How can I change the settings to speed up my system by using multiple NCS2 devices?

I followed this tutorial but did not succeed:

https://www.coursera.org/lecture/int-openvino/using-multiple-devices-s976F

Thank you.

Hi BuuVo,

The heterogeneous (HETERO) plugin lets a single network run inference across several devices. The purposes of executing a network in heterogeneous mode are as follows:

· To use the accelerator's power by calculating the heaviest parts of the network on the accelerator and executing unsupported layers on fallback devices such as the CPU

· To use all available hardware more efficiently during one inference

However, since you are using the HETERO plugin with identical devices, both devices support the same layers, so it is only natural that all layers are assigned to, and executed on, the first device in the list.
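One way to confirm this assignment is to tally the layer-to-device map. The sketch below assumes the map is a plain dictionary of layer name to device name, which is the shape returned by `IECore.query_network()` in the Inference Engine Python API. The helper name `layers_per_device` and the layer names in the sample data are hypothetical.

```python
from collections import Counter


def layers_per_device(layer_map):
    """Count how many layers were assigned to each device.

    layer_map is a dict of layer name -> device name.
    """
    return dict(Counter(layer_map.values()))


# Stand-in data for illustration only; real values would come from:
#   layer_map = plugin.query_network(network=net, device_name='HETERO:...')
example_map = {
    "conv1": "MYRIAD.1.1.2-ma2480",
    "relu1": "MYRIAD.1.1.2-ma2480",
    "fc1": "MYRIAD.1.1.2-ma2480",
}
print(layers_per_device(example_map))
```

If only one device name appears in the result, the second stick is receiving no layers, which matches the behaviour described in the question.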

A single inference can be split into several parts that are handled by separate devices. Please refer to the sections "Annotation of Layers per Device and Default Fallback Policy", "Details of Splitting Network and Execution" and "Execution Precision" at the following link:

https://docs.openvinotoolkit.org/latest/openvino_docs_IE_DG_supported_plugins_HETERO.html

Please also refer to the following link for an example implementation.

https://github.com/yuanyuanli85/open_model_zoo/tree/ncs2/demos/python_demos

You can go through this community discussion that might be helpful to you as well.

Please refer to the Multi-Device Plugin and Hello Query Device C++ Sample pages for additional relevant information.

https://docs.openvinotoolkit.org/latest/openvino_docs_IE_DG_supported_plugins_MULTI.html
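As a rough sketch of the Multi-Device approach, assuming the 2021-era `openvino.inference_engine` Python API: the network is loaded on a `MULTI:` device string and several asynchronous requests are started before any of them is waited on, so both sticks can work at the same time. The helper names `multi_device_name` and `classify_batch` are hypothetical, and the input name `'data'` is taken from the question. Note that this targets throughput: the latency of one image may stay close to the single-stick figure, while the number of images processed per second can increase.

```python
def multi_device_name(device_ids):
    """Build a MULTI device string such as 'MULTI:MYRIAD.a,MYRIAD.b'."""
    if not device_ids:
        raise ValueError("at least one device is required")
    return "MULTI:" + ",".join(device_ids)


def classify_batch(model_xml, model_bin, images, input_name="data"):
    """Run one async request per image across all attached MYRIAD devices."""
    # Imported here so the helper above is usable without OpenVINO installed.
    from openvino.inference_engine import IECore

    ie = IECore()
    myriads = [d for d in ie.available_devices if d.startswith("MYRIAD")]
    net = ie.read_network(model=model_xml, weights=model_bin)
    exec_net = ie.load_network(
        network=net,
        device_name=multi_device_name(myriads),
        num_requests=len(images),  # assumption: a small number of images
    )
    # Start every request before waiting so the devices can work in parallel.
    for request, image in zip(exec_net.requests, images):
        request.async_infer({input_name: image})
    results = []
    for request in exec_net.requests:
        request.wait(-1)  # block until this request has finished
        # Copy the output so it does not depend on the request's buffers.
        results.append(
            {name: blob.buffer.copy() for name, blob in request.output_blobs.items()}
        )
    return results
```

For a long stream of images, a fixed pool of a few requests that are reused as they complete would be a more realistic design than one request per image.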

Regards,

Aznie

Any Help?

Hi BuuVo,

We are currently working on this. We will get back to you shortly.

Regards,

Aznie

Hi Buu Vo,

This thread will no longer be monitored since this issue has been resolved. If you need any additional information from Intel, please submit a new question.

Regards,

Aznie
