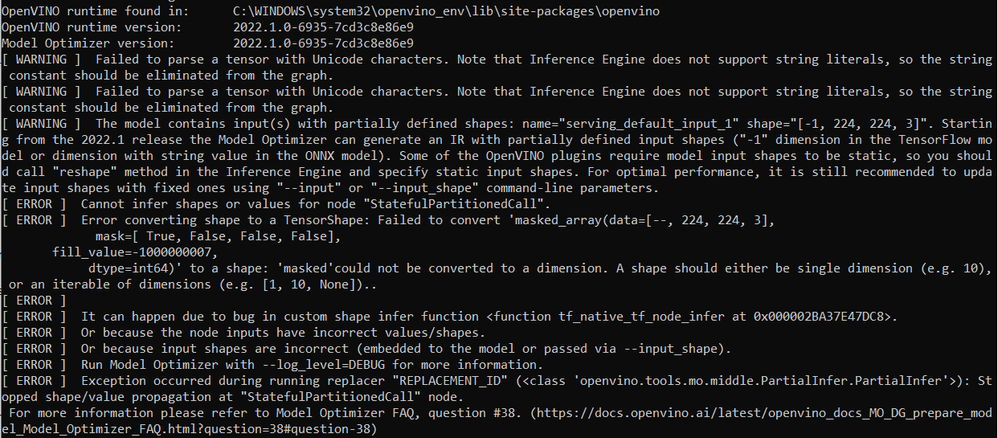

I am trying to convert my SavedModel using Model Optimizer, but I encounter the error below.

OpenVINO version: 2022.1.0.643

(plastic) itxotic@itxotic:/media/itxotic/HDD/emoji-project/Bare Electrodes/DL$ mo --saved_model_dir model

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: None

- Path for generated IR: /media/itxotic/HDD/emoji-project/Bare Electrodes/DL/.

- IR output name: saved_model

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: Not specified, inherited from the model

- Source layout: Not specified

- Target layout: Not specified

- Layout: Not specified

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP32

- Enable fusing: True

- User transformations: Not specified

- Reverse input channels: False

- Enable IR generation for fixed input shape: False

- Use the transformations config file: None

Advanced parameters:

- Force the usage of legacy Frontend of Model Optimizer for model conversion into IR: False

- Force the usage of new Frontend of Model Optimizer for model conversion into IR: False

TensorFlow specific parameters:

- Input model in text protobuf format: False

- Path to model dump for TensorBoard: None

- List of shared libraries with TensorFlow custom layers implementation: None

- Update the configuration file with input/output node names: None

- Use configuration file used to generate the model with Object Detection API: None

- Use the config file: None

OpenVINO runtime found in: /opt/intel/openvino_2022/python/python3.8/openvino

OpenVINO runtime version: 2022.1.0-7019-cdb9bec7210-releases/2022/1

Model Optimizer version: 2022.1.0-7019-cdb9bec7210-releases/2022/1

[ WARNING ] Failed to parse a tensor with Unicode characters. Note that Inference Engine does not support string literals, so the string constant should be eliminated from the graph.

[ WARNING ] Failed to parse a tensor with Unicode characters. Note that Inference Engine does not support string literals, so the string constant should be eliminated from the graph.

[ WARNING ] The model contains input(s) with partially defined shapes: name="serving_default_input_1" shape="[-1, 224, 224, 3]". Starting from the 2022.1 release the Model Optimizer can generate an IR with partially defined input shapes ("-1" dimension in the TensorFlow model or dimension with string value in the ONNX model). Some of the OpenVINO plugins require model input shapes to be static, so you should call "reshape" method in the Inference Engine and specify static input shapes. For optimal performance, it is still recommended to update input shapes with fixed ones using "--input" or "--input_shape" command-line parameters.

[ ERROR ] Cannot infer shapes or values for node "StatefulPartitionedCall".

[ ERROR ] Error converting shape to a TensorShape: Failed to convert 'masked_array(data=[--, 224, 224, 3],

mask=[ True, False, False, False],

fill_value=-1000000007)' to a shape: 'masked'could not be converted to a dimension. A shape should either be single dimension (e.g. 10), or an iterable of dimensions (e.g. [1, 10, None])..

[ ERROR ]

[ ERROR ] It can happen due to bug in custom shape infer function <function tf_native_tf_node_infer at 0x7ff1ef133310>.

[ ERROR ] Or because the node inputs have incorrect values/shapes.

[ ERROR ] Or because input shapes are incorrect (embedded to the model or passed via --input_shape).

[ ERROR ] Run Model Optimizer with --log_level=DEBUG for more information.

[ ERROR ] Exception occurred during running replacer "REPLACEMENT_ID" (<class 'openvino.tools.mo.middle.PartialInfer.PartialInfer'>): Stopped shape/value propagation at "StatefulPartitionedCall" node.

For more information please refer to Model Optimizer FAQ, question #38. (https://docs.openvino.ai/latest/openvino_docs_MO_DG_prepare_model_Model_Optimizer_FAQ.html?question=38#question-38)
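The partial-shape warning in the log above recommends passing a fixed input shape via `--input_shape`. A minimal sketch of building such a command from the reported shape `[-1, 224, 224, 3]` follows. The helper names `make_static` and `build_mo_command` and the batch size of 1 are assumptions for illustration; only the `mo`, `--saved_model_dir`, and `--input_shape` names come from the log. Whether a static shape resolves the `StatefulPartitionedCall` failure is not confirmed anywhere in this thread.

```python
import shlex


def make_static(shape, fill=1):
    """Replace dynamic dimensions (-1 or None) with a fixed value.

    Hypothetical helper: fill=1 assumes the only dynamic dimension is the
    batch dimension, as in the shape reported by the warning.
    """
    return [fill if dim in (-1, None) else dim for dim in shape]


def build_mo_command(saved_model_dir, shape, fill=1):
    """Build an argument list for `mo` with a fully static input shape."""
    static_shape = make_static(shape, fill)
    # Model Optimizer expects the shape as a bracketed, comma-separated list.
    shape_arg = "[" + ",".join(str(dim) for dim in static_shape) + "]"
    return ["mo", "--saved_model_dir", saved_model_dir, "--input_shape", shape_arg]


# Shape taken from the warning: serving_default_input_1 has shape [-1, 224, 224, 3].
cmd = build_mo_command("model", [-1, 224, 224, 3])

# Print a shell-safe version of the command; it could also be passed
# directly to subprocess.run(cmd) without going through a shell.
print(shlex.join(cmd))
```

Building the command as a list rather than a single string avoids quoting problems with paths that contain spaces, such as the `Bare Electrodes` directory in the prompt above.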


Hello Afiq,

Thank you for reaching out to us.

We do provide documentation on how to convert a TensorFlow 2 model in SavedModel Format that you can refer to.

Please share with us your model, the MO command used to convert the model, and the link to the original model for replication purposes.

Sincerely,

Zulkifli

Link to the SavedModel: https://1drv.ms/u/s!Ajnxd5Cr74oWkcsNlGQ4es0p5sxeYQ?e=A5A6LW

MO command used:

mo --saved_model_dir model

Hello Afiq,

We faced the same errors when converting your model to IR.

Can you share with us the link to the original model or the source of your model?

Sincerely,

Zulkifli

I got the pretrained model by running this code:

import tensorflow as tf

model = tf.keras.applications.EfficientNetB0(
    include_top=True,
    weights=None,
    pooling="max",  # must be a string; Keras only applies it when include_top=False
    classes=2,
    classifier_activation="softmax",
)

Hello Afiq,

Sorry for the delay in response.

OpenVINO does not yet support this model/topology; our development team is working on it. See the release notes for new features and improvements in OpenVINO.

For your reference, Intel provides pre-trained EfficientNet models from the Open Model Zoo that may be of interest to you.

Sincerely,

Zulkifli

I am using EfficientNet-B0. Shouldn't OpenVINO be able to support it?

Hello Afiq,

It's not that the entire model topology is unsupported. Rather, some layers in the model are not yet compatible with the Model Optimizer architecture, and our development team is working to enable them. However, we are unable to comment on a timeline for when this will be enabled.

Sincerely,

Zulkifli

Hello Afiq,

This thread will no longer be monitored since we have provided a solution. If you need any additional information from Intel, please submit a new question.

Sincerely,

Zulkifli
