System information (version)

- OpenVINO => 2021.1.110

- Operating System / Platform => ubuntu 18.04

- Problem classification: Model Conversion

- Framework: NNCF (PyTorch 1.8.1)

- Model name: SqueezeNet (Classification)

- CPU: Intel Core i7-9700K

Detailed description

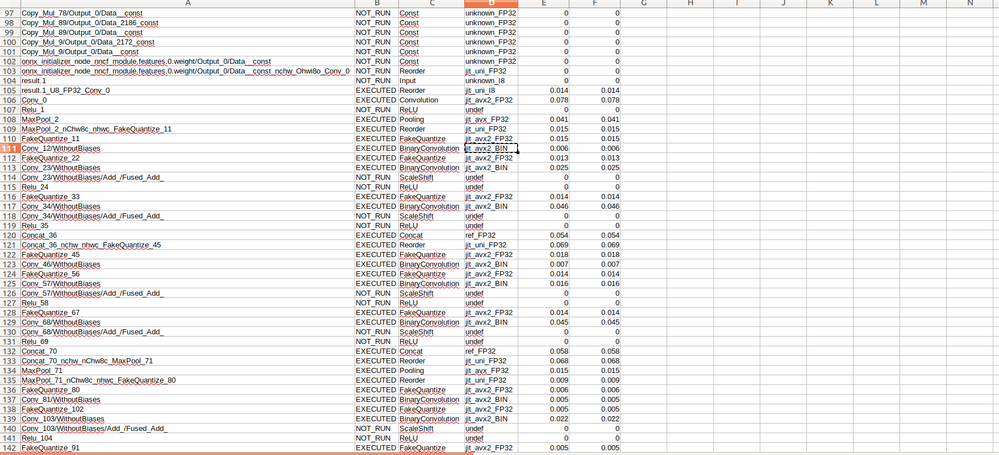

I trained a SqueezeNet classification model using the training pipeline script from the NNCF examples, with XNOR binarization as the compression method. The first conv layer is kept in FP32 and the rest of the network is binary. I converted the model to ONNX, then to IR via the Model Optimizer, and ran benchmarks with OpenVINO's benchmark tool. In addition to the FakeQuantize layers, a reorder is executed before every binary conv layer. These reorders are costly and essentially cancel the speedup that binarization could provide; as a result, the FPS is even lower than that of the full FP32 model (benchmark report attached).

As you can see below, a reorder from nchw8c to nhwc happens before every binary conv, although according to the docs for binary conv here, the binary conv layer can accept the NCHW format.

My question is: why does the Model Optimizer/Inference Engine add reorders before every binary conv, and is there a way to avoid them?

Detailed benchmark counters for full FP32 model and binary model are attached for reference.
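For anyone who wants to quantify the reorder overhead from such a per-layer counters report, here is a minimal sketch in Python. It assumes the report is a semicolon-delimited table with `layerType` and `realTime (ms)` columns and that reorders appear with the layer type `Reorder`; the exact layout may differ between OpenVINO versions, so adjust the column names to match your file. The sample rows and the helper names `time_by_layer_type` and `reorder_share` are made up for illustration and are not taken from the attached reports.

```python
import csv
import io
from collections import defaultdict


def time_by_layer_type(report_text, delimiter=";"):
    """Sum the real execution time (ms) per layer type.

    Assumes a header row containing 'layerType' and 'realTime (ms)'.
    Rows that lack these fields or hold non-numeric times are skipped.
    """
    totals = defaultdict(float)
    reader = csv.DictReader(io.StringIO(report_text), delimiter=delimiter)
    for row in reader:
        layer_type = (row.get("layerType") or "").strip()
        if not layer_type:
            continue  # e.g. a summary row without a layer type
        try:
            totals[layer_type] += float(row["realTime (ms)"])
        except (KeyError, TypeError, ValueError):
            continue
    return dict(totals)


def reorder_share(totals, reorder_type="Reorder"):
    """Fraction of the summed time spent in layers of type `reorder_type`."""
    total = sum(totals.values())
    return totals.get(reorder_type, 0.0) / total if total else 0.0


# Hypothetical sample data, NOT the numbers from the attached reports.
SAMPLE = """layerName;execStatus;layerType;execType;realTime (ms);cpuTime (ms)
conv1;EXECUTED;Convolution;jit_avx2_FP32;0.40;0.40
fq1;EXECUTED;FakeQuantize;jit_avx2_FP32;0.10;0.10
reorder1;EXECUTED;Reorder;reorder_FP32;0.30;0.30
binconv1;EXECUTED;BinaryConvolution;jit_avx2_BIN;0.20;0.20
"""

if __name__ == "__main__":
    totals = time_by_layer_type(SAMPLE)
    for layer_type, ms in sorted(totals.items(), key=lambda kv: -kv[1]):
        print(f"{layer_type}: {ms:.2f} ms")
    print(f"Reorder share: {reorder_share(totals):.0%}")
```

Running the same functions on the FP32 and binary reports side by side should show how much of the binary model's time goes to reorders rather than to the binary convolutions themselves.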

I've also created an issue for this on the GitHub repo; I'm posting here as well for better reach and response.

Regards,

Raza

Hello Reza Ghulam Jilani,

Greetings to you.

We are investigating this issue. We noticed that you opened another ticket on GitHub, #5817; we will treat these cases as one.

Regards,

Zulkifli

Please find below the link to the relevant ONNX model files for your reference:

https://drive.google.com/drive/folders/1EzkohZOjGg-Cul0IoLok9QJu1NO0GMQ2?usp=sharing

Hello Ghulam Jilani Raza,

Thank you for your patience. The dev team is investigating the issue and will provide its findings in GitHub thread #5817.

Regards,

Zulkifli

Hello Ghulam Jilani Raza,

We will continue the investigation on GitHub. Therefore, we will close this thread and focus on GitHub:

https://github.com/openvinotoolkit/openvino/issues/5817

Hence, this thread will no longer be monitored by Intel. If you need any additional information from Intel, please submit a new question.

Sincerely,

Zulkifli
