Environment Info

- Windows 10 laptop

- Neural Compute Stick v2

- Python 3.5

- OpenVINO R5 (2018 R5, released in late December) for the Inference Engine

- Model Optimizer from R4

- YOLOv3 Model

- Input Size = (320, 320)

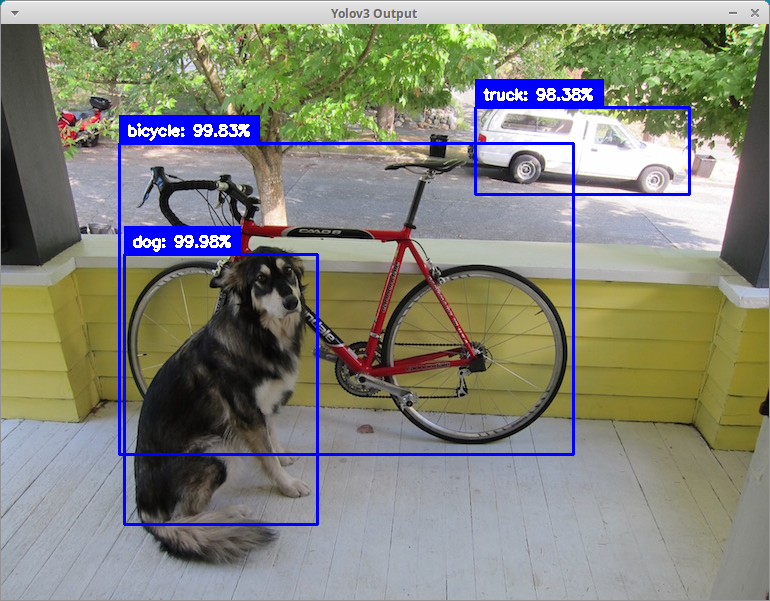

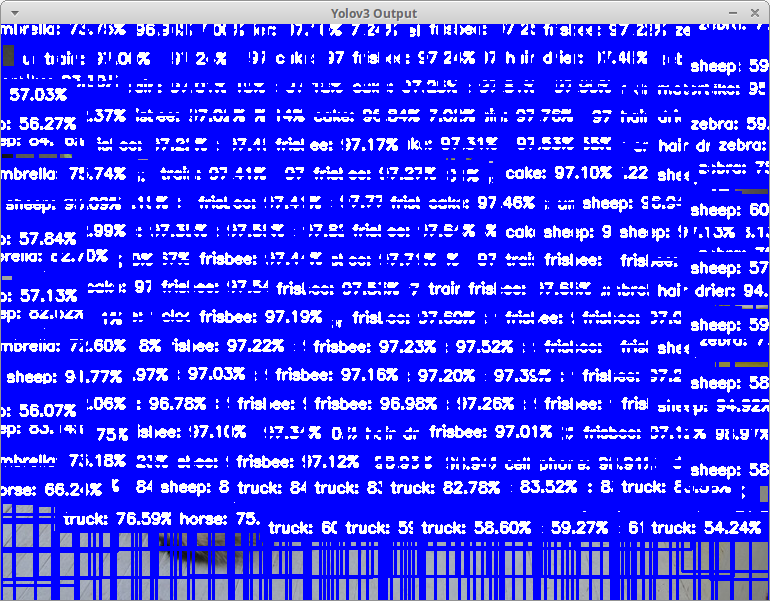
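For reference when reading the raw outputs, the YOLOv3 grid sizes follow directly from the input size. A minimal sketch, assuming the standard YOLOv3 configuration (three output scales with strides 32, 16 and 8, three anchors per cell, 80 COCO classes); a custom-trained model may use a different class count:

```python
# Sketch: YOLOv3 output geometry for a square input, assuming the standard
# three detection scales (strides 32, 16, 8), 3 anchors per cell and
# 80 COCO classes. Adjust NUM_CLASSES for a custom-trained model.

STRIDES = (32, 16, 8)
ANCHORS_PER_CELL = 3
NUM_CLASSES = 80


def yolo_v3_grids(input_size):
    """Return the (rows, cols) grid size of each YOLOv3 output scale."""
    if any(input_size % s for s in STRIDES):
        raise ValueError("input size must be a multiple of 32")
    return [(input_size // s, input_size // s) for s in STRIDES]


def yolo_v3_output_channels(num_classes=NUM_CLASSES):
    """Channels per output map: anchors * (4 box coords + objectness + classes)."""
    return ANCHORS_PER_CELL * (4 + 1 + num_classes)


def yolo_v3_num_candidates(input_size):
    """Total number of candidate boxes before thresholding and NMS."""
    return sum(h * w * ANCHORS_PER_CELL for h, w in yolo_v3_grids(input_size))


if __name__ == "__main__":
    print(yolo_v3_grids(320))           # [(10, 10), (20, 20), (40, 40)]
    print(yolo_v3_output_channels())    # 255
    print(yolo_v3_num_candidates(320))  # 6300
```

For the 320 x 320 input listed above this gives 10 x 10, 20 x 20 and 40 x 40 output maps with 255 channels each, i.e. 6300 candidate boxes before any thresholding.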

Problem #1: NCS2 Wrong Inference Output for YOLOv3

I can run the YOLOv3 object detector successfully on the NCS v1, but the test fails on the NCS v2, which gives wrong output. Other object detectors (SSD_MobileNetv1, SSD_Inceptionv2) work fine on both NCS sticks. I cannot figure out what differs between the two stick versions in the case of the YOLOv3 model.

I attached the code along with the model I am using. You only need to run "python yolov3_test.py": the code runs inference on the well-known image "dog.jpg" and then draws the bounding boxes on the image (press a key to end the program).
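Before the boxes are drawn, the decoded detections are normally filtered by a score threshold and non-maximum suppression (NMS). The sketch below is a generic, class-agnostic greedy NMS, not the code from yolov3_test.py (which is not reproduced here); boxes are assumed to be (xmin, ymin, xmax, ymax, score) tuples and both thresholds are placeholders:

```python
# Sketch: greedy, class-agnostic non-maximum suppression.
# Boxes are assumed to be (xmin, ymin, xmax, ymax, score) tuples; the
# default thresholds are illustrative placeholders, not tuned values.


def iou(a, b):
    """Intersection-over-union of two (xmin, ymin, xmax, ymax, ...) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, iou_threshold=0.4, score_threshold=0.5):
    """Keep the highest-scoring boxes, dropping any that overlap a kept box."""
    candidates = sorted((b for b in boxes if b[4] >= score_threshold),
                        key=lambda b: b[4], reverse=True)
    kept = []
    for box in candidates:
        # A box survives only if it does not overlap any already-kept box.
        if all(iou(box, k) <= iou_threshold for k in kept):
            kept.append(box)
    return kept
```

For example, of (10, 10, 50, 50, 0.9), (12, 12, 52, 52, 0.8) and (100, 100, 150, 150, 0.7), the second box overlaps the first with an IoU of about 0.82 and is dropped, leaving two detections. Running the same function once per class gives per-class suppression.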

Problem #2: Model Optimizer Problem in R5

I have a problem with the Model Optimizer in the R5 release: none of the models generated with the R5 Model Optimizer work on any device (NCS1, NCS2 and GPU).

Using the Model Optimizer from R4 (while keeping the R5 Inference Engine) works well for me on all devices.

Please help me solve these two issues.

Regards,

Ahmed Ezzat

---

- My environment

OpenVINO R5 5.0.1

Ubuntu 16.04

- NCS1

- NCS2

---

That is exactly what I am seeing.

---

Hi Ahmed,

> Problem #2: Model Optimizer Problem in R5

This looks like an installation/environment issue: a mixed environment picking up the wrong libraries.

I would uninstall all SDKs, old and new, reboot, and do a clean reinstall of the very latest release. Then try to build and run the samples: do they work? After that, I would use the Model Optimizer again for more extensive tests. Please post your command line and output logs here if you run into issues.
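One way to check for such a mixed environment is to look for more than one SDK install root on the search paths. A heuristic stdlib sketch; the directory-name markers are assumptions based on the default install folders (computer_vision_sdk_* for the 2018 releases, openvino_* for 2019), and a symlink such as /opt/intel/openvino next to its versioned target will also appear as two roots, so treat a warning as a hint rather than proof:

```python
# Sketch: spot more than one OpenVINO / CV SDK install on the search paths.
# MARKERS are assumed default install-folder prefixes, not an official list;
# unrelated folders whose names start with these prefixes will also match.

import os

MARKERS = ("computer_vision_sdk", "openvino")


def install_roots(path_value, sep=os.pathsep):
    """Return the distinct install roots mentioned in a PATH-style string."""
    roots = []
    for entry in path_value.split(sep):
        parts = entry.replace("\\", "/").split("/")
        for i, part in enumerate(parts):
            if part.lower().startswith(MARKERS):
                root = "/".join(parts[: i + 1])
                if root not in roots:
                    roots.append(root)
                break  # only the first matching component counts
    return roots


def check_env(var_names=("PATH", "LD_LIBRARY_PATH", "PYTHONPATH")):
    """Print the install roots seen in each variable and warn on mixtures."""
    seen = set()
    for name in var_names:
        roots = install_roots(os.environ.get(name, ""))
        seen.update(roots)
        print(name, "->", roots)
    if len(seen) > 1:
        print("WARNING: more than one install root found:", sorted(seen))
    return sorted(seen)
```

Calling check_env() in the same shell (or Windows command prompt) used to run the samples shows which installs the loader could pick up.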

Cheers,

nikos

---

Hi nikos,

For problem #1, the model works on NCS1 but outputs garbage on NCS2 with the attached binary. The Model Optimizer is, for me, a side problem for now.

Do you have any idea? Is this a problem in the NCS2 plugin library?

Regards,

Ahmed

---

Hi Ahmed,

I also have an NCS2 and use it with OpenVINO R5. I could run some object detection models on it; you can refer to this thread to see our discussion about MobileNet+SSD object detection. I could easily convert a Caffe model to IR and use it (you do not even need to convert a Caffe model to IR, since it is converted internally). The inference results of the IR model were the same as those of the original Caffe model.

However, I also have a problem with YOLOv3. I could convert the model to IR, but I can't use the inference result because its format has changed. I asked a question here and am waiting for help.
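A converted YOLOv3 IR typically does not output final boxes: the network ends in RegionYolo layers, so each output is a raw map of anchors x (5 + classes) channels over a side x side grid that the application must decode itself. A minimal pure-Python sketch, assuming an [anchor][channel][row][col] layout, that x, y, objectness and class scores are already sigmoid-activated and that w, h are still raw log-space values; these are assumptions to check against the OpenVINO YOLOv3 sample code for your release. For the 10 x 10 scale of a 320 x 320 COCO model the anchors would usually be (116, 90), (156, 198), (373, 326):

```python
# Sketch: decode one YOLOv3 RegionYolo-style output map into boxes.
# Layout and activation assumptions are stated in the docstring; verify
# them for your model and OpenVINO release before relying on this.

import math


def decode_yolo_region(blob, side, anchors, num_classes, input_size,
                       threshold=0.5):
    """Return (xmin, ymin, xmax, ymax, score, class_id) tuples in input pixels.

    blob:    flat list of len(anchors) * (5 + num_classes) * side * side
             floats, assumed laid out as [anchor][channel][row][col].
    anchors: (width, height) of each anchor of this scale, in input pixels.
    Assumes x, y, objectness and class scores are already sigmoid-activated.
    """
    area = side * side
    per_anchor = 5 + num_classes
    boxes = []
    for n, (anchor_w, anchor_h) in enumerate(anchors):
        for cell in range(area):
            row, col = divmod(cell, side)
            offset = n * per_anchor * area + cell
            # All channels of this anchor at this grid cell.
            v = [blob[offset + ch * area] for ch in range(per_anchor)]
            objectness = v[4]
            if objectness < threshold:
                continue
            cx = (col + v[0]) / side * input_size  # box centre, input pixels
            cy = (row + v[1]) / side * input_size
            w = math.exp(v[2]) * anchor_w          # box size scaled from anchor
            h = math.exp(v[3]) * anchor_h
            scores = [objectness * v[5 + c] for c in range(num_classes)]
            best = max(range(num_classes), key=scores.__getitem__)
            if scores[best] >= threshold:
                boxes.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2,
                              scores[best], best))
    return boxes
```

The boxes from all three scales are then concatenated and passed through NMS before drawing.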

g

---

> nikos wrote:
>
> Looks like an installation / environment issue causing mixed env picking up wrong libraries. I would uninstall all SDKs old and new, reboot and clean re-install the very latest.

I installed the latest SDK (R5.01) on Ubuntu, re-converted the YOLOv3 model, and I still get corrupted results on both NCS1 and NCS2 (this is the Model Optimizer issue). I attached the new xml file generated by Model Optimizer 1.5.12, which differs from the original one generated by Model Optimizer 1.4.

In conclusion:

1. The YOLOv3 file generated by Model Optimizer 1.4.x works fine on NCS1 but not on NCS2.

2. The file generated by Model Optimizer 1.5.12 does not work on either NCS stick.
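To see what actually changed between the two IR files, one can diff their layer lists. A stdlib sketch, assuming the usual IR .xml layout of layer elements with name and type attributes and an optional data child holding the layer parameters; different Model Optimizer versions may also rename layers, so expect some noise:

```python
# Sketch: diff the layers of two OpenVINO IR .xml files (as text or bytes).
# Assumes <layer name=... type=...> elements with an optional <data> child.

import xml.etree.ElementTree as ET


def ir_layers(xml_text):
    """Map layer name -> (type, sorted <data> attributes)."""
    root = ET.fromstring(xml_text)
    layers = {}
    for layer in root.iter("layer"):
        data = layer.find("data")
        attrs = tuple(sorted(data.attrib.items())) if data is not None else ()
        layers[layer.get("name")] = (layer.get("type"), attrs)
    return layers


def diff_ir(old_xml, new_xml):
    """Return lines describing layers that were added, removed or changed."""
    old, new = ir_layers(old_xml), ir_layers(new_xml)
    report = []
    for name in sorted(set(old) | set(new)):
        if name not in new:
            report.append("removed: %s %s" % (name, old[name][0]))
        elif name not in old:
            report.append("added:   %s %s" % (name, new[name][0]))
        elif old[name] != new[name]:
            report.append("changed: %s %s -> %s" % (name, old[name], new[name]))
    return report
```

Usage, with hypothetical file names: `for line in diff_ir(open("yolov3_mo14.xml", "rb").read(), open("yolov3_mo15.xml", "rb").read()): print(line)`.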

Regards,

Ahmed

---

Dear Intel guys,

Please, I need help with this issue. It is blocking me from completing a prototype for a demo.

Can you please have a look?

Regards,

Ahmed

---

Hi Ahmed,

Do you have any update? I have been stuck with YOLOv3+NCS2 for around 2 months, so for now I have moved to MobileNet+SSD, which works fine at around 20-25 FPS.

---

Hi Hamzeh,

I think there is an internal problem in the NCS2 Inference Engine plugin when running YOLO.

See https://software.intel.com/en-us/node/805425 for more information.

I changed the negative slope as noted there (to 0.101) and got some improvement, but there are still a lot of false positives.
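Darknet's leaky activation is f(x) = x for x > 0 and 0.1 * x otherwise. For anyone trying the negative-slope workaround, the sketch below shows the activation and what each slope becomes if stored in IEEE half precision (an assumption about what the FP16 device does internally): 0.1 rounds to 0.0999755859375 and 0.101 to 0.10101318359375. It does not explain why the change helped; that remains a plugin question. The struct "e" format needs Python 3.6 or later:

```python
# Sketch: leaky ReLU as used by Darknet, plus FP16 rounding of its slope.
# Uses the struct "e" (IEEE 754 half precision) format, Python 3.6+.

import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def leaky_relu(x, negative_slope=0.1):
    """x for x > 0, negative_slope * x otherwise."""
    return x if x > 0 else negative_slope * x


for slope in (0.1, 0.101):
    print(slope, "->", to_fp16(slope))
```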

Regards,

Ahmed

---

Hi again Ahmed,

Sorry, this site did not send me an email notification, and I did not notice your message (but I was waiting for it!).

I am not a DNN expert, and I don't know the exact source of the YOLOv3 problem on NCS2: its architecture, or an incompatibility with the Darknet model type.

So I tried to find a Caffe model of YOLOv3, and the only promising one I found was this one. It works fine on Ubuntu but can't be ported to NCS2, because the author wrote the model in a way that can be read only with the caffe.Net reader (not cv2.dnn.readNet), so I could not use it on NCS2 (see the forum on that page for the discussion). It uses something called a "Caffe Python layer"...

Have you tried the PINTO project? I checked it a while ago, but it had an accuracy problem too. It was updated a few days ago, though, and I am going to test it.

If it does not work, I guess it is better not to waste time on this and to wait for an update from Intel. I will continue to use the older and less accurate MobileNet+SSD on NCS2, which works very well.

---

Hello,

Has anyone been able to find the cause of this?

I'm facing the same issue: my model works on the Movidius NCS, so I bought the NCS2 to give the model a boost, but the outputs from exec_net are no longer the same, and this causes thousands of false positives.

When I tried the NCS2 with the model from this repo, https://github.com/PINTO0309/OpenVINO-YoloV3, it worked fine.
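A quick way to quantify "the outputs are not the same anymore" is to run the same IR on the same input on two devices (for example NCS vs NCS2, or MYRIAD vs an FP32 IR on CPU) and compare the raw output blobs before any post-processing. A minimal stdlib sketch, assuming each result has already been turned into a dict of output name to flat list of floats (with the Python API that would be something like res[name].ravel().tolist() on the numpy arrays returned by exec_net.infer, an assumption about your code); the tolerances are arbitrary placeholders, and an FP16 device will never match FP32 exactly:

```python
# Sketch: compare raw inference outputs from two devices or runs.
# Inputs are dicts of output name -> flat list of floats; tolerances are
# placeholders to tune, not recommended values.

import math


def compare_outputs(reference, candidate, atol=1e-2, rtol=1e-2):
    """Return {name: (max_abs_diff, fraction_out_of_tolerance)}."""
    report = {}
    for name, ref in reference.items():
        cand = candidate[name]
        if len(ref) != len(cand):
            raise ValueError("%s: size mismatch %d vs %d"
                             % (name, len(ref), len(cand)))
        max_diff = 0.0
        bad = 0
        for r, c in zip(ref, cand):
            max_diff = max(max_diff, abs(r - c))
            if not math.isclose(c, r, rel_tol=rtol, abs_tol=atol):
                bad += 1  # NaNs also land here, since isclose() is False
        report[name] = (max_diff, bad / len(ref) if ref else 0.0)
    return report
```

A small fraction of slightly-off values points to precision effects; large differences spread across a whole output map point to a plugin or conversion problem.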

---

I ran into a similar issue. I converted the pretrained Darknet COCO and Open Images models to IR (FP16), and those work on NCS2. But when I convert my own trained model, it gives a lot of false positives, on both Windows and RPi. However, if I convert my Darknet model to an FP32 IR, it works (inference on CPU). So I guess it has something to do with the float conversion.
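If the float-conversion theory is worth checking, a custom-trained model's weights can be screened for values that FP16 cannot represent well: anything above 65504 (the largest finite half-precision value) overflows, and values below about 3e-8 round to zero. A stdlib sketch, assuming the weights have already been extracted as a flat list of Python floats (how to extract them is out of scope here); activations can overflow too, so a clean report does not rule FP16 out. Requires Python 3.6+ for the struct "e" format:

```python
# Sketch: what converting a list of FP32 weights to FP16 would do.
# Uses the struct "e" (IEEE 754 half precision) format, Python 3.6+.

import struct

FP16_MAX = 65504.0  # largest finite half-precision value


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def fp16_report(values):
    """Count overflows and flush-to-zero cases; track worst relative error."""
    overflow = flushed = 0
    worst_rel_err = 0.0
    for v in values:
        if abs(v) > FP16_MAX:
            overflow += 1  # would become inf (struct.pack would raise)
            continue
        h = to_fp16(v)
        if v != 0.0 and h == 0.0:
            flushed += 1
            continue
        if v != 0.0:
            worst_rel_err = max(worst_rel_err, abs(h - v) / abs(v))
    return {"overflow": overflow, "flushed_to_zero": flushed,
            "worst_rel_err": worst_rel_err}
```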

---

Mine is the same.

> Hyodo, Katsuya wrote:
>
> My environment: OpenVINO R5 5.0.1, Ubuntu 16.04 (NCS1, NCS2)

---

Hi Nikos,

Could you help me with the questions in this thread?

Thank you.

> nikos wrote:
>
> Looks like an installation / environment issue causing mixed env picking up wrong libraries.

---

I found a solution for my issue. I was using the older 2018 version of OpenVINO; after upgrading to 2019 R1 there were no more false positives. It looks like the issue was in the MYRIAD plugin or somewhere else in the inference code, not in the model or the Model Optimizer, because I was able to use the "old" IR files with the updated software.

The release notes also say that some things have been fixed or improved:

https://software.intel.com/en-us/articles/OpenVINO-RelNotes#inpage-nav-2-4-5

---

Hello everyone. Please see this thread: I just tested YOLOv3 on OpenVINO 2019 R1 and it works fine.

https://software.intel.com/en-us/forums/computer-vision/topic/807383

Thanks,

Shubha
