
Dear all,

I am getting strange results when using the SDE to obtain the FLOPS of a Fortran90 code.

I am using also the script from https://github.com/It4innovations/Intel-SDE-FLOPS.

I have written the equivalent test code in C and in Fortran90. While I get 'sensible' results with the C version, I get funny results on the Fortran version.

The two versions are:

C version (`test0.c`):

```c
int main() {
    int i;
    int imax = 101;
    float a, b, c;

    b = 0.0; c = 1.5; a = 1.2;

    for (i = 1; i < imax; ++i)
    {
        b = b + c*a;
    }

    return 0;
}
```

Fortran90 version (`testFLOPS0.f90`):

```fortran
program main
    implicit none
    integer, parameter :: sp = kind(1.0)
    integer, parameter :: jmax = 100
    integer :: j
    real(kind=sp) :: a, b, c

    b = 0.0; c = 1.5; a = 1.2

    do j = 1, jmax
        b = b + c*a
    enddo
end program main
```

I run SDE using a script:

```bash
# Fortran version, built with the Intel Fortran compiler
ifort -xhost testFLOPS0.f90 -o a.out
./sde64 -iform -mix -dyn_mask_profile -- ./a.out
python intel_sde_flops.py

# C version, built with GCC
gcc test0.c -march=native -o app
./sde64 -iform -mix -dyn_mask_profile -- ./app
python intel_sde_flops.py
```

The results are (C code built with GCC vs. Fortran code built with Intel's ifort):

| Metric | C (gcc) | Fortran (ifort) |
|---|---|---|
| Single prec. FLOPs | 200 | 0 |
| Double prec. FLOPs | 0 | 5 |
| Total instructions executed | 164309 | 452071 |
| Total FMA instructions executed | 0 | 0 |

As I said, the C results seem correct to me: 200 FLOPs (100 additions and 100 multiplications). The Fortran90 results, however, make no sense: 5 double-precision FLOPs?

I checked the Python script; it just looks for the `*_fp_single` and `*_fp_double` entries and adds them up. The script seems fine (I also checked the text file generated by SDE manually).
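The counting step described above can be sketched in C. This is a minimal illustration, not the script's actual code: it assumes that each relevant line of the SDE `-mix` report has the form `*elements_fp_single_<N> <count>` (or `_double_`), where `<N>` is the number of floating-point elements each instruction processed, and that a line contributes `N × count` FLOPs. Check the exact line format against your own report before relying on it; the function names are hypothetical, and FMA instructions (two operations per element) are not handled.

```c
#include <stdio.h>
#include <string.h>

/*
 * Returns the FLOPs contributed by one report line for the requested
 * precision ("single" or "double"), or 0 if the line does not match.
 * ASSUMPTION: lines look like "*elements_fp_single_4 1234", meaning
 * 1234 instructions that each operated on 4 single-precision elements.
 * FMA instructions (2 operations per element) are NOT handled here.
 */
long long flops_from_line(const char *line, const char *precision)
{
    char prec[16];
    int width;
    long long count;

    if (sscanf(line, "*elements_fp_%15[a-z]_%d %lld", prec, &width, &count) != 3)
        return 0;
    if (strcmp(prec, precision) != 0)
        return 0;
    return (long long)width * count;
}

/* Sums the FLOPs of all matching lines in a report read from fp. */
long long total_flops(FILE *fp, const char *precision)
{
    char line[512];
    long long total = 0;

    while (fgets(line, sizeof line, fp) != NULL)
        total += flops_from_line(line, precision);
    return total;
}
```

For example, `flops_from_line("*elements_fp_single_1 200", "single")` returns 200, which is what 100 scalar multiplications plus 100 scalar additions would produce.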

As I said earlier, the code I want to benchmark is written in Fortran90.

Any idea what is going on?

Thanks in advance,

Jofre

- Tags:

- Intel® Advanced Vector Extensions (Intel® AVX)

- Intel® Streaming SIMD Extensions

- Parallel Computing


An optimizing compiler, such as Intel Visual Fortran, incorporates dead code elimination. This optimization extends to code that generates results that are never used (read: useless code). In your test code you need to use the result of the computation in some manner. I suggest you insert something like this after the do loop:

```fortran
if (b == 1.0) print *, "Not going to print"
```

Additionally, the loop count of 100 is too small to obtain anything meaningful from the test run.

Jim Dempsey
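The same idea carries over to the C test. A minimal sketch, with the hypothetical function name `accumulate`: the loop result is returned to the caller, and the inputs and trip count are parameters rather than compile-time constants, which makes it harder (though not impossible, for example after inlining into a caller with constant arguments) for the optimizer to delete or precompute the loop. The caller still has to use the returned value, for instance by printing it.

```c
/*
 * Hypothetical kernel mirroring the test loop: b = b + c*a, n times.
 * Returning b keeps the loop's result "live", so dead code elimination
 * cannot discard the loop as long as the caller uses the return value.
 */
float accumulate(int n, float a, float c)
{
    float b = 0.0f;
    for (int i = 0; i < n; ++i)
        b = b + c * a;   /* 1 multiply + 1 add per iteration */
    return b;
}
```

Calling something like `printf("%f\n", accumulate(atoi(argv[1]), 1.2f, 1.5f));` from `main` uses the result and takes the trip count from the command line, which also makes it easy to run far more than 100 iterations. With optimization and `-march=native` on an FMA-capable CPU, GCC may contract `b + c*a` into a single FMA instruction, so the instruction mix SDE reports can differ even though the number of operations does not.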


Hello jofre,

I'm the author of the Intel-SDE-FLOPS script and came across this post. I can confirm Jim's answer.

What happens is that you compiled the Fortran code with the Intel Fortran Compiler, whose default optimization level is -O2 (which applies dead code elimination). You compiled the C version with GCC, whose default optimization level is -O0 (no optimization).

As a result, you don't see the expected number of FLOPs in the Fortran version, because the Intel Fortran Compiler removed your do-loop. For the C version, GCC applied no optimization, which leaves even dead (invariant) instructions in the final object code.

To compare apples to apples, I'd suggest you use `ifort -O0 ...` here; you should then see 200 FLOPs for the Fortran version as well.

Best regards,

Georg Zitzlsberger
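Since the two compilers default to different optimization levels, it can help to confirm how a C binary was actually built before comparing SDE numbers. A small sketch with hypothetical function names: GCC predefines the macro `__OPTIMIZE__` in every optimizing compilation (Clang follows the same convention), so the macro is absent when building with `-O0` or with no `-O` flag at all.

```c
#include <stdio.h>

/* Returns 1 if this translation unit was compiled with optimization
 * enabled (GCC and Clang define __OPTIMIZE__ in that case), else 0. */
int built_with_optimization(void)
{
#ifdef __OPTIMIZE__
    return 1;
#else
    return 0;
#endif
}

/* Prints a one-line note about the build, e.g. before running under SDE. */
void report_build(void)
{
    printf("optimization: %s\n",
           built_with_optimization() ? "on" : "off (-O0)");
}
```

This only reflects the file it is compiled into; each translation unit of a larger program can have been built with different flags.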


You can also declare the variable "b" as volatile and observe the results. I presume that "b" declared volatile may prevent the do-loop elimination.

P.s.

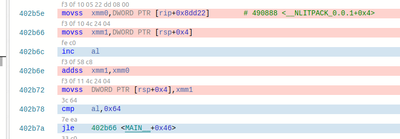

I quickly verified the "volatile" keyword and its presence prevented do-loop elimination. The optimization level was set to "O2" and Goldbolt snippet clearly shows the do-loop implementation .
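The C counterpart of Fortran's `VOLATILE` attribute is the `volatile` qualifier. A minimal sketch with a hypothetical function name: every read and write of a `volatile` object must actually be performed, so the compiler cannot remove the loop even at `-O2`. Two caveats follow from this. Each iteration now also loads and stores `b` through memory, so the SDE instruction mix includes that extra traffic. And because only `b` is volatile, the compiler may still compute the loop-invariant `c*a` once before the loop (or fold it to a constant when the inputs are literals), in which case SDE would count roughly one addition per iteration rather than two FLOPs.

```c
/*
 * Hypothetical variant of the test loop with b declared volatile.
 * Every read and write of b must be performed, so the loop survives
 * optimization. Note that c*a is loop-invariant and b is the only
 * volatile object, so the compiler may still compute c*a just once.
 */
float accumulate_volatile(int n, float a, float c)
{
    volatile float b = 0.0f;
    for (int i = 0; i < n; ++i)
        b = b + c * a;
    return b;
}
```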


I don't understand the point of trying to measure FLOPS with optimization disabled. It's like running a race with your feet tied together. What do you think this is going to tell you?

Writing good benchmarks is HARD! Amateurs needn't apply.
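One way to measure FLOPs with optimization enabled is to make sure the kernel's results are observable. A minimal sketch using a hypothetical SAXPY-style kernel (`y[i] = a*x[i] + y[i]`, 2 FLOPs per element) rather than anything from the original code: the results are written to an array the caller owns, so the work cannot be discarded as dead code, and the iterations are independent, which leaves the compiler free to vectorize the loop if it chooses to.

```c
#include <stddef.h>

/*
 * Hypothetical benchmark kernel: y[i] = a*x[i] + y[i] for i in [0, n).
 * Each element costs 2 FLOPs (1 multiply + 1 add), so one call performs
 * 2*n FLOPs. The results land in y, which the caller can inspect, so an
 * optimizing compiler cannot treat the loop as dead code. "restrict"
 * promises that x and y do not overlap, which helps vectorization.
 */
void saxpy(size_t n, float a, const float *restrict x, float *restrict y)
{
    for (size_t i = 0; i < n; ++i)
        y[i] = a * x[i] + y[i];
}

/* Expected FLOP count for one call, useful to cross-check SDE output. */
unsigned long long saxpy_flops(size_t n)
{
    return 2ULL * (unsigned long long)n;
}
```

After calling the kernel repeatedly, printing or checksumming `y` keeps the results in use, and the total can be checked against `saxpy_flops`: for example, 100 calls with `n = 1000000` correspond to 2 × 10^8 FLOPs.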
