A colleague wrote a small MPI Isend/Recv test case to reproduce a performance issue that an application shows over RoCE, but the test case hangs with large message sizes when run with 2 or more processes per node across 2 or more nodes. The same test case runs successfully with large message sizes in an InfiniBand environment.

Initially it hung with message sizes larger than 16K, but setting the FI_OFI_RXM_BUFFER_SIZE variable let us increase the message size to about 750K. We were trying to get to 1 MB, but no matter how large FI_OFI_RXM_BUFFER_SIZE is set, the test hangs with a message size of 1 MB. Are there other MPI settings or OS settings that may need to be increased? I also tried setting FI_OFI_RXM_SAR_LIMIT, but that didn't help. Here is the current set of MPI options for the test when using RoCE:

mpi_flags='-genv I_MPI_OFI_PROVIDER=verbs -genv FI_VERBS_IFACE=vlan50 -genv I_MPI_OFI_LIBRARY_INTERNAL=1 -genv I_MPI_FABRICS=shm:ofi -genv FI_OFI_RXM_BUFFER_SIZE=2000000 -genv FI_OFI_RXM_SAR_LIMIT=4000000 -genv I_MPI_DEBUG=30 -genv FI_LOG_LEVEL=debug'

The environment is SLES 15 SP2 with Intel oneAPI Toolkit version 2021.2, Mellanox CX-6 network adapters in Ethernet mode, and 100 Gb Aruba switches. The NICs and switches have been configured for RoCE traffic per guidelines from Mellanox and our Aruba engineering team.

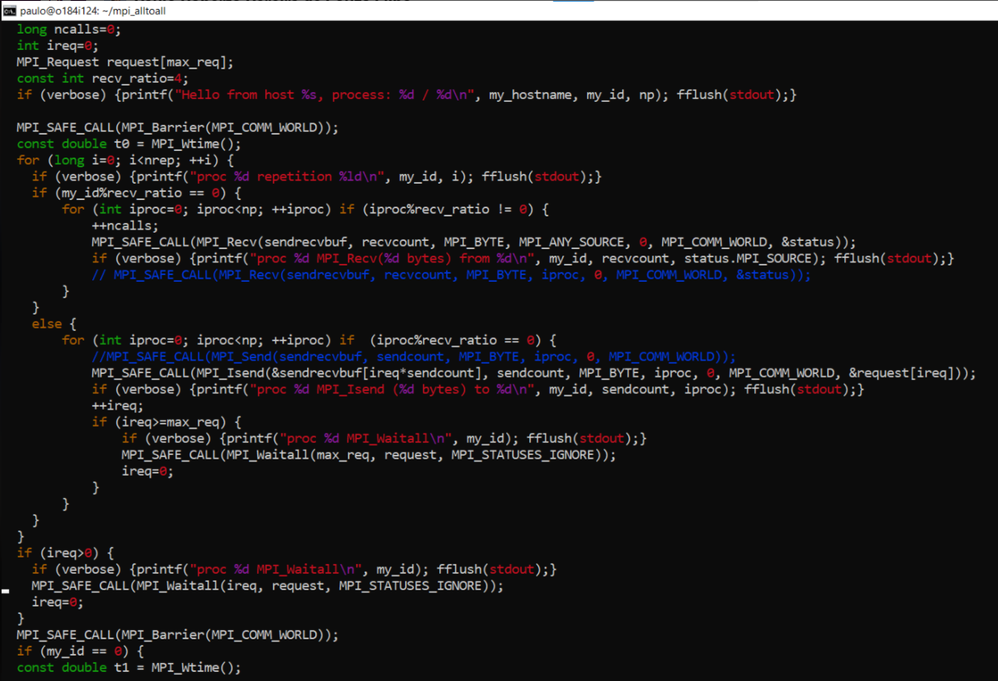

Attached are a screenshot of the main loop of the MPI code (I will get the full source code from my colleague) and the output of the test with a 1 MB message size, I_MPI_DEBUG=30, and FI_LOG_LEVEL=debug. The script used to run the test is shown below. Its input parameters are the number of repetitions and the message size.

#!/bin/bash

cf_args=()
while [ $# -gt 0 ]; do
    cf_args+=("$1")
    shift
done

source /opt/intel/oneapi/mpi/2021.3.0/env/vars.sh

set -e
set -u

mpirun --version 2>&1 | grep -i "intel.*mpi"

hostlist='-hostlist perfcomp3,perfcomp4'
mpi_flags='-genv I_MPI_OFI_PROVIDER=verbs -genv FI_VERBS_IFACE=vlan50 -genv I_MPI_OFI_LIBRARY_INTERNAL=1 -genv I_MPI_FABRICS=shm:ofi -genv FI_OFI_RXM_BUFFER_SIZE=2000000 -genv I_MPI_OFI_PROVIDER=verbs -genv FI_OFI_RXM_SAR_LIMIT=4000000 -genv I_MPI_DEBUG=30 -genv FI_LOG_LEVEL=debug -genv I_MPI_OFI_PROVIDER_DUMP=1'

echo "$hostlist"

mpirun -ppn 1 \
    $hostlist $mpi_flags \
    hostname

num_nodes=$(mpirun -ppn 1 $hostlist $mpi_flags hostname | sort -u | wc -l)
echo "num_nodes=$num_nodes"

mpirun -ppn 2 \
    $hostlist $mpi_flags \
    singularity run -H `pwd` \
    /var/tmp/paulo/gromacs/gromacs_tau.sif \
    v4/mpi_isend_recv "${cf_args[@]}"
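
For anyone trying to pin down the exact size at which the hang starts (roughly 750K in the runs described above), a bisection wrapper around the run script might help. This is only a sketch under assumptions: `RUN_TEST` is a hypothetical path to the script above, a run that exceeds `TIME_LIMIT` seconds is treated as hung, and every size below the threshold is assumed to pass. Note that `timeout` only signals the top-level command, so leftover MPI ranks may still need manual cleanup.

```shell
#!/bin/bash
# Sketch: bisect for the largest message size that completes in time.
# RUN_TEST is an assumed (hypothetical) path to the run script, which takes
# <repetitions> <message_size> as its arguments.
RUN_TEST=${RUN_TEST:-./run_test.sh}
TIME_LIMIT=${TIME_LIMIT:-120}   # seconds before a run is counted as hung
REPS=${REPS:-10}                # repetitions passed to the test

# size_ok <bytes>: succeeds if the test finishes within TIME_LIMIT.
size_ok() {
    timeout "$TIME_LIMIT" "$RUN_TEST" "$REPS" "$1" >/dev/null 2>&1
}

# bisect_max_ok <good> <bad>: prints the largest passing size in [good, bad).
# Assumes <good> passes, <bad> fails, and pass/fail is monotonic in size.
bisect_max_ok() {
    local good=$1 bad=$2 mid
    while (( bad - good > 1 )); do
        mid=$(( (good + bad) / 2 ))
        if size_ok "$mid"; then
            good=$mid
        else
            bad=$mid
        fi
    done
    echo "$good"
}
```

A call such as `bisect_max_ok 16384 1048576` would start from the two sizes mentioned in the post and needs about 20 runs to converge.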

Yes, the issue is resolved by using Intel MPI 2021.6. There appears to be a bug in 2021.5.1, since the test case generates a segfault when using that version of Intel MPI with either the verbs or mlx provider. Also, the test case hangs when using 2021.3 or 2021.4 with the verbs provider.

Also, the issue only occurred when using RoCE. The same test case ran successfully when using IB with 2021.3.1. It also runs successfully with Open MPI 4.1.2.
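
Since the fix here came down to the Intel MPI version, a guard at the top of the run script can catch an older runtime before a long job hangs. The following is a rough sketch, not an official check: the function names are made up for illustration, the 2021.6 minimum is taken from the result above, and the parsing assumes that `mpirun --version` prints a version token of the form `YYYY.N` or `YYYY.N.N`. It relies on `sort -V` from GNU coreutils.

```shell
#!/bin/bash
# Assumed minimum version, based on the result reported in this thread.
MIN_IMPI_VERSION=2021.6

# version_ge A B: succeeds when version A is >= version B (GNU sort -V).
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# parse_impi_version: reads text on stdin and prints the first token that
# looks like YYYY.N or YYYY.N.N (assumed version format).
parse_impi_version() {
    grep -oE '[0-9]{4}\.[0-9]+(\.[0-9]+)?' | head -n 1
}

# check_impi_version: warns and fails if the mpirun on PATH looks too old.
check_impi_version() {
    local v
    v=$(mpirun --version 2>&1 | parse_impi_version)
    if [ -z "$v" ] || ! version_ge "$v" "$MIN_IMPI_VERSION"; then
        echo "Intel MPI ${v:-unknown} is older than $MIN_IMPI_VERSION" >&2
        return 1
    fi
}
```

Calling `check_impi_version` right after sourcing `vars.sh` would stop the script early when the wrong environment is loaded.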
