Intel's System Programming Manual reports that Skylake has just 4 programmable counters per thread. How is it then possible to collect, e.g., all FRONTEND_RETIRED.* events, of which there are many more than 4?

Currently I think of it as programming IA32_PERFEVTSELx and then reading IA32_PMCx. But there are just 4 IA32_PERFEVTSELx registers, while there are more than 10 FRONTEND_RETIRED.* events.

- Tags:

- Parallel Computing

With HyperThreading enabled, you only have four programmable performance counters per logical processor (8 with HyperThreading disabled in the BIOS). Analyses that require more than four programmable counters need to use either counter multiplexing or multiple runs with different counter sets.

Many tools support counter multiplexing. Intel's VTune uses counter multiplexing for most of its analysis types -- I was just reviewing some VTune analyses using the "general exploration" target and saw that a total of 106 programmable performance counter events were used during the run.

Even the Linux "perf stat" (or "perf record") facility allows multiplexing -- simply specify all the events you want to record on the command line and the driver will switch between groups, attempting to measure each of the events for approximately the same fraction of the total time.
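Because a multiplexed event is only counted for part of the run, tools report a scaled estimate rather than the raw count. The sketch below shows the usual scaling, assuming you have the raw count plus the "time enabled" and "time running" values that the Linux perf_event interface can return (PERF_FORMAT_TOTAL_TIME_ENABLED and PERF_FORMAT_TOTAL_TIME_RUNNING). The function name is hypothetical, and the estimate is only meaningful if the event rate is roughly uniform over the run.

```c
#include <stdint.h>

/* Estimate a full-run count for an event that was scheduled on a hardware
 * counter for only part of the run (counter multiplexing).
 * time_enabled: how long the event was requested.
 * time_running: how long it was actually counting.
 * Both must be in the same unit (e.g. nanoseconds). */
static double scale_multiplexed_count(uint64_t raw_count,
                                      uint64_t time_enabled,
                                      uint64_t time_running)
{
    if (time_running == 0)
        return 0.0;  /* event never got a counter: no estimate possible */
    return (double)raw_count * (double)time_enabled / (double)time_running;
}
```

For example, an event that counted 1000 occurrences while running for a quarter of the enabled time would be reported as roughly 4000.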

I'm interested in the total overhead of a round-robin-style multiplexing algorithm. I suppose that this overhead may be as high as 100-200 cycles for each batch of 4 performance events. perf_events implements counter multiplexing, and it would be interesting to look at its source code.

In my own analyses, whether with perf or VTune, I use only 4 or 8 performance events per sampling session.

I would not expect much overhead with counter multiplexing relative to the overhead of counter virtualization.

With counter virtualization you have to do a save and restore of the performance counter programming and values on context switches. The last time I measured this on a Linux system, the overhead of the kernel timer interrupt averaged about 6240 core cycles, with the counters attributing 5240 cycles to kernel mode and 1100 cycles to user mode. If I recall correctly, 9 of 10 interrupts are lighter-weight, with the 10th interrupt costing several times more. Maybe 4000 cycles for the light-weight interrupts and over 20,000 cycles for the heavier interrupt (which presumably includes a call to the scheduler).

The RDMSR and WRMSR instructions have variable overhead, depending on the target MSR and the requesting core. For the "local" performance counter MSRs, I seem to recall average latencies in the 250 cycle range, but I could be misremembering.... These can only be executed in the kernel, and even in the kernel are typically executed via an expensive interprocessor interrupt (to execute on the correct target core), so it requires a specialized kernel module to even test this overhead....

> @McCalpinJohn wrote:
>
> I would not expect much overhead with counter multiplexing relative to the overhead of counter virtualization.
>
> With counter virtualization you have to do a save and restore of the performance counter programming and values on context switches. The last time I measured this on a Linux system, the overhead of the kernel timer interrupt averaged about 6240 core cycles, with the counters attributing 5240 cycles to kernel mode and 1100 cycles to user mode. If I recall correctly, 9 of 10 interrupts are lighter-weight, with the 10th interrupt costing several times more. Maybe 4000 cycles for the light-weight interrupts and over 20,000 cycles for the heavier interrupt (which presumably includes a call to the scheduler).
>
> The RDMSR and WRMSR instructions have variable overhead, depending on the target MSR and the requesting core. For the "local" performance counter MSRs, I seem to recall average latencies in the 250 cycle range, but I could be misremembering.... These can only be executed in the kernel, and even in the kernel are typically executed via an expensive interprocessor interrupt (to execute on the correct target core), so it requires a specialized kernel module to even test this overhead....

Now I see that my rough estimate (100-200 cycles) was incorrect and the overhead might be larger. I suppose that the counter switch could be done at overflow time: the overflowed value is stored in a local buffer, and the counter is then frozen by writing the appropriate MSR. The next operation reprograms the counter to count the other event and restores that event's last saved value (from its previous overflow), which requires an additional MSR access. So there would be at least 2 MSR accesses per multiplexing switch, or roughly 500-700 cycles per switch, which is still much lower than the cost of counter virtualization.

> ...and the driver will switch between groups, attempting to measure each of the events for approximately the same fraction of the total time.

The documentation for Intel's "sep" driver (or rather its user-mode interface) states that multiplexing alternates between groups every 50 samples. It does not say how this is handled when performance events in the same group have different counter overflow periods.

In my own performance analysis (VTune, perf) I usually prefer to execute multiple runs with 4 or 8 performance events per run.

> In my own performance analysis (VTune, perf) I usually prefer to execute multiple runs with 4 or 8 performance events per run.

I certainly prefer that approach as well, but it requires that the performance characteristics of the code be highly repeatable. Multiplexing is critical when you need more than one set of events from the same run.

The performance variability that I am looking for is often due to the different sets of physical addresses assigned for the different runs -- performance characteristics are relatively stable *within* an execution, but vary *across* executions.

In any case, there is not one "cookbook" approach that can be successfully applied to all scenarios.

> The performance variability that I am looking for is often due to the different sets of physical addresses assigned for the different runs -- performance characteristics are relatively stable *within* an execution, but vary *across* executions.

I completely agree. Even when I use multiplexing, I observe a large variation in performance event counts between consecutive executions, usually with a non-Gaussian distribution (log-normal or multi-modal).

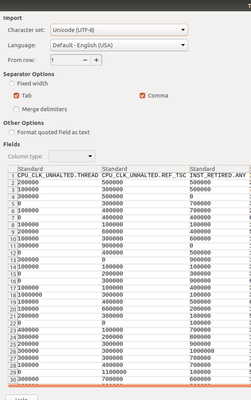

For example, this screenshot shows part of the data for the Intel FlexRAN function "LdpcOrthogonalLayerInt16Aligned<(int)4, Is16vec32>": 32 samples collected by VTune (perf-based driverless collector, 16 performance events multiplexed), including strange 0 results (actually zero samples).

I suspect skid: because the counter-overflow interrupt (PMI) is imprecise, the IP recorded at overflow (possibly the next IP after the PMI handler returns) did not fall within the address range of this function.

Have you seen this kind of behavior?

I expect that sort of variability for sampling-based performance monitoring. Although the reported counts may look large, they are typically actually small counts multiplying a large overflow interval. In your case it looks like mostly single-digit counts for each row, each multiplied by the overflow interval of 100,000.

Assuming that each row of your table contains the counts for a separate trial of the code, the overall statistics look like I would expect from a binomial distribution with a small number of trials. The zero values are probably due to the way the sampling is triggered -- perhaps by the overflow of any of the counters?
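To make the "small counts times a large interval" point concrete, here is a minimal sketch. It assumes a Poisson-style approximation (my simplification, not something measured in this thread) in which n independent samples give a relative uncertainty of roughly 1/sqrt(n). Both function names are hypothetical.

```c
#include <stdint.h>

/* Number of overflow samples behind a reported event count, given the
 * sample-after (overflow) interval used by the collector. */
static uint64_t samples_from_reported(uint64_t reported, uint64_t sample_after)
{
    return sample_after ? reported / sample_after : 0;
}

/* Smallest sample count n such that 1/sqrt(n) <= rel_error, i.e.
 * n >= 1/rel_error^2 (assumed Poisson-style approximation). */
static uint64_t min_samples_for_rel_error(double rel_error)
{
    if (rel_error <= 0.0)
        return 0;  /* not a meaningful target */
    double need = 1.0 / (rel_error * rel_error);
    uint64_t n = (uint64_t)need;
    return ((double)n < need) ? n + 1 : n;  /* round up */
}
```

A reported count of 700,000 with an overflow interval of 100,000 is really just 7 samples, so a run-to-run swing of a few samples (including down to zero) is unsurprising under this approximation.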

Thanks for your response.

A single test consists of 1000 loop iterations of a 5G uplink layer 1 simulation. The test is executed in a Docker environment, hence PEBS performance events are not available. I have no way to minimize random noise (e.g., boost frequency variation, the lack of a warm-up loop for the AVX-512 heavy floating-point penalty, non-deterministic core power and thermal characteristics). The counters were set to measure only user-mode activity, as expected for simulation code.

I agree that 32 samples are not enough and that at least 100-400 samples should be collected in order to observe the distribution more accurately.

The zero values are quite perplexing and in some cases seem irrational. Their distribution is random, and their number increases with user-mode-only counting. When both kernel-mode and user-mode counting are enabled, the number of 0 values is very low, but the results are skewed, mainly by the PMI handler, the APIC timer interrupt, and other kernel activity.

In another case, for the main FlexRAN hotspot function 'matrix_inv_cholesky_16_16' (kernel-mode and user-mode counting enabled), VTune attributed x87 non-arithmetic uops to the address range of that function. I suppose that uops from FXSAVE/FXRSTOR or other x87 instructions overflowed the counter and the IP (due to skid) was resolved to the address range of matrix_inv_cholesky_16_16. More probably, the overflow was caused by kernel activity in the context of the matrix inversion function, whose context was identified by taking the next IP (on return from the PMI handler) and mapping it to the address range of that function.

Unfortunately, Intel did not comment on that issue.

Sampling is usually a quick way to find hotspots, and it can be a great way to find unexpected interference (from the OS or from other processes), but the slow convergence of the statistics makes it a poor approach to detailed understanding. Once I find the areas of interest, I typically switch to inline instrumentation -- RDTSCP to start and RDPMC if it is possible to configure strict core binding.

> Once I find the areas of interest, I typically switch to inline instrumentation -- RDTSCP to start and RDPMC if it is possible to configure strict core binding.

I'm also considering this method.

I found a nice little library called "libpfc", which enables configuration of the programmable counters by means of a small kernel module. I have not tried it yet, but it looks like an interesting way to measure at the code block/loop level with possibly small overhead.

There are lots of software libraries available. PAPI is probably the oldest. LIKWID is popular.

Either can probably do what you need. I use my own inline instrumentation (e.g., https://github.com/jdmccalpin/low-overhead-timers) to avoid the kernel calls needed by most of the packages to virtualize the counters. In rough terms this reduces the overhead for a performance counter read from a few thousand cycles to about 25 cycles (on SKX).

A discussion of some of the issues in making very fine-granularity performance counter measurements on Intel processors is at https://sites.utexas.edu/jdm4372/2018/07/23/comments-on-timing-short-code-sections-on-intel-processors/

I have not used PAPI or LIKWID.

Both libraries have a large overhead, especially the LIKWID marker functions, whose overhead is around 2000 cycles, as stated in this relevant link.

For fine-granularity measurement your "low-overhead-timers" are a good option, and I will use them for performance measurements in my own projects.

I had a look at your "periodic-performance-counters" project and did not fully understand the arguments passed to the /dev/cpu/*/msr driver. I saw that you defined only the MSR register addresses: https://github.com/jdmccalpin/periodic-performance-counters/blob/master/MSR_defs.h

To be clearer: can I use the raw performance event encoding as used by perf stat, perf record, or the Intel sep utility?

For example, how can I pass this raw event to the pread function?

cpu/period=0x4e20,event=0x79,umask=0x8,name=\'IDQ.DSB_UOPS\'/u

Thanks in advance

The "periodic-performance-counters" infrastructure uses run-time input files to select the performance counter events. These are the "*.input" files in the main directory. (They should be in the "Example" directory, because I have to specify the event names both in the "*.input" file(s) and in the corresponding "Example/*_event_names.lua" file(s) used by the post-processing script. With a little effort, I probably could have written the Lua code to parse the event names from the "*.input" files, but I never got around to it....)

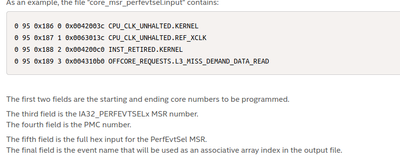

As an example, the file "core_msr_perfevtsel.input" contains:

    0 95 0x186 0 0x0042003c CPU_CLK_UNHALTED.KERNEL
    0 95 0x187 1 0x0063013c CPU_CLK_UNHALTED.REF_XCLK
    0 95 0x188 2 0x004200c0 INST_RETIRED.KERNEL
    0 95 0x189 3 0x004310b0 OFFCORE_REQUESTS.L3_MISS_DEMAND_DATA_READ

The first two fields are the starting and ending core numbers to be programmed.

The third field is the IA32_PERFEVTSELx MSR number.

The fourth field is the PMC number.

The fifth field is the full hex input for the PerfEvtSel MSR.

The final field is the event name that will be used as an associative array index in the output file.
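As an illustration of how such a line maps onto the /dev/cpu/*/msr interface, here is a minimal sketch. It is not the code from periodic-performance-counters; the struct and function names are hypothetical. It relies on the standard behaviour of the Linux msr driver, where the file offset selects the MSR number and each access transfers 8 bytes. Writing requires root privileges and the msr kernel module.

```c
#define _XOPEN_SOURCE 700
#include <fcntl.h>
#include <stdint.h>
#include <stdio.h>
#include <unistd.h>

/* One line of an input file with the six fields described above. */
struct perfevtsel_entry {
    int first_core;     /* first core to program */
    int last_core;      /* last core to program (inclusive) */
    unsigned int msr;   /* IA32_PERFEVTSELx MSR number, e.g. 0x186 */
    int pmc;            /* programmable counter number */
    uint64_t value;     /* full PerfEvtSel register contents */
    char name[64];      /* event label used in the output */
};

/* Parse one input line; returns 0 on success, -1 on a malformed line. */
static int parse_perfevtsel_line(const char *line, struct perfevtsel_entry *e)
{
    unsigned long long value;
    int n = sscanf(line, "%d %d %x %d %llx %63s",
                   &e->first_core, &e->last_core, &e->msr, &e->pmc,
                   &value, e->name);
    if (n != 6)
        return -1;
    e->value = value;
    return 0;
}

/* Write a 64-bit value to one MSR on one core through the msr driver.
 * Returns 0 on success, -1 on any error (no device, no permission, ...). */
static int write_msr(int core, unsigned int msr, uint64_t value)
{
    char path[64];
    snprintf(path, sizeof path, "/dev/cpu/%d/msr", core);
    int fd = open(path, O_WRONLY);
    if (fd < 0)
        return -1;
    ssize_t n = pwrite(fd, &value, sizeof value, (off_t)msr);
    close(fd);
    return n == (ssize_t)sizeof value ? 0 : -1;
}
```

Programming an event on a range of cores is then a loop from first_core to last_core calling write_msr(core, e.msr, e.value). Reading a counter back works the same way with pread and the corresponding IA32_PMCx MSR number.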

For events that require auxiliary control registers, there is a separate input file "core_msr_control.input" that can also be used. The other input files use a similar-looking structure, but are set up to specify each socket/box/counter separately (rather than with implied looping).

I use the "low-overhead-timers" package a lot more (very close to every day) but the periodic performance counters package has also been quite useful for me. I use it not only for interval sampling in the background, but also as a framework for function-level testing. For example, I replaced the "nanosleep()" call with a call to the Intel MKL DGEMM routine to collect ensembles of counter data (multiple samples per execution and multiple executions to see the effect of different virtual-to-physical address assignments).

I have analyzed your periodic-counters implementation in depth, but one unanswered question still bothers me.

Which method did you use to create the hexadecimal encoding of a performance event?

I was reading the Intel SDM (chapter 18) and could not find the meaning of hex values like 0x42, 0x63, 0x43.

The last 4 hex digits denote the event code and umask, but what exactly do the first two digits denote? Maybe a specific PerfEvtSelx MSR register?

> I replaced the "nanosleep()" call with a call to the Intel MKL DGEMM routine to collect ensembles of counter data (multiple samples per execution and multiple executions to see the effect of different virtual-to-physical address assignments).

Was that a multithreaded version of MKL_DGEMM?

The hex values in my input files are the full representation of an IA32_PERFEVTSEL MSR, so no sub-fields are explicitly represented. (Sub-fields on nibble boundaries are obvious in the hex encoding of the full register, but sub-fields on other boundaries are less visible.)

So the second event I listed expands to:

    $ echo 0063013c | bits32
    3 3 2 2 2 2 2 2 2 2 2 2 1 1 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0
    1 0 9 8 7 6 5 4 3 2 1 0 9 8 7 6 5 4 3 2 1 0 9 8 7 6 5 4 3 2 1 0
    ------------------------------------------------
    0 0 0 0 0 0 0 0 0 1 1 0 0 0 1 1 0 0 0 0 0 0 0 1 0 0 1 1 1 1 0 0

This sets bit 22 (Enable), 21 (AnyThread), 17 (OS), and 16 (USR), with bits 15:8 providing the Umask (0x01) and bits 7:0 providing the EventSelect (0x3c).
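If you would rather build these values from an event code and umask (as perf-style event strings give them), a small helper along the following lines does it. This is a sketch, not part of my packages: the macro and function names are made up for illustration, and only the fields used in this thread are covered (the architectural layout also has Edge, PC, INT, INV and CMASK fields).

```c
#include <stdint.h>

/* Selected control bits of IA32_PERFEVTSELx (architectural layout). */
#define EVTSEL_USR       (1ULL << 16)  /* count while in user mode */
#define EVTSEL_OS        (1ULL << 17)  /* count while in kernel mode */
#define EVTSEL_ANYTHREAD (1ULL << 21)  /* count for both logical processors */
#define EVTSEL_ENABLE    (1ULL << 22)  /* enable the counter */

/* Combine EventSelect (bits 7:0), Umask (bits 15:8) and control flags
 * into a full PerfEvtSel register value. */
static uint64_t perfevtsel_encode(uint8_t event, uint8_t umask, uint64_t flags)
{
    return flags | ((uint64_t)umask << 8) | (uint64_t)event;
}
```

With this, perfevtsel_encode(0x3c, 0x01, EVTSEL_USR | EVTSEL_OS | EVTSEL_ANYTHREAD | EVTSEL_ENABLE) gives 0x0063013c. The perf-style string event=0x79,umask=0x8 with the "u" modifier would correspond to perfevtsel_encode(0x79, 0x08, EVTSEL_USR | EVTSEL_ENABLE), i.e. 0x00410879. The "period" in a perf event string is a sampling parameter and is not part of this register.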

I developed the habit of working with the full PerfEvtSel register contents because so many tools provided only partial support for controlling the other fields. This has been especially true when processors and/or software are new -- which is when I need full access to the counter functionality the most!

Re: DGEMM --- the periodic-performance-counters infrastructure is set up to read the counters on all cores, CHAs, and IMCs, so my primary use was with multi-threaded DGEMM. For most of my work I would install measurement instrumentation deeper inside the routine I am studying, but for DGEMM that would require more hacking on the binary than I was ready to undertake. Mostly I was just trying to understand DGEMM performance variability on my Xeon Platinum 8160 processors, and then verifying that severe Snoop Filter Conflicts leading to L3 conflicts in the L2 victim stream was the source of the problem. (https://sites.utexas.edu/jdm4372/2019/01/07/sc18-paper-hpl-and-dgemm-performance-variability-on-intel-xeon-platinum-8160-processors/)

The periodic-performance-counters infrastructure is still quite useful for single-threaded studies (including DGEMM), since all activity outside of the L2 caches is distributed across all of the CHAs, L3's and IMC's on the chip.

>>>The hex values in my input files are the full representation of an IA32_PERFEVTSEL MSR, so no sub-fields are explicitly represented. (Sub-fields on nibble boundaries are obvious in the hex encoding of the full register, but sub-fields on other boundaries are less visible.)

So the second event I listed expands to:>>>

This is the information that I was looking for! Now everything is clear.

I was led astray by the perf and VTune CLI tools, which, as you mentioned, operate only on the event code, umask, and additional "event modifiers".

>>>The periodic-performance-counters infrastructure is still quite useful for single-threaded studies>>>

I envision the usage (in context of my analysis [single Cascade-Lake 28 core server]) of periodic performance counters as lower latency and overhead complement to perf and VTune.

>>>For most of my work I would install measurement instrumentation deeper inside the routine I am studying>>>

I presume that you use the low-overhead-counters at such scope?

> I envision the usage (in context of my analysis [single Cascade-Lake 28 core server])

> of periodic performance counters as lower latency and overhead complement to perf and VTune.

I don't know if the current implementation of periodic-performance-counters has lower latency/overhead than either perf or VTune (sep) -- my goals in developing the package were slightly different:

- provide full control over all of the performance counters for the node
  - core, IMC, CHA, PCU
  - additional SW-based counters as available (e.g., InfiniBand procfs interfaces)
- provide a time series of samples with user-selectable intervals
  - allow 1-second intervals with no more than ~1% overhead on a single core
- use an output format that enables both easy "manual" review (e.g., "grep") and easy scripting.

The current version is incomplete (e.g., no UPI counters yet), but comes close enough to meeting the other goals. If I recall correctly, the average overhead varied between 0.7% and 1.2% (i.e., 7 milliseconds to 12 milliseconds per sample with a 1-second sampling interval). (Here "overhead" is defined as elapsed time on one core to read the performance counters on all cores, all uncore boxes, etc.)

One downside of my approach is the large amount of output generated. My study for the SC18 paper generated about 240 GB of performance counter data from about 25,000 runs.

> I presume that you use the low-overhead-counters at such scope?

Yes. Many of my codes start with either

    #include "low-overhead-timers.h"

or

    #include "low-overhead-timers.c"

I spent quite a while making sure that the syntax used in the "low-overhead-timers.c" file would work correctly and with very close to the lowest possible latency with both the Intel and GCC compilers for both separate compilation (using the header file) and for inline compilation (including the C source directly).

Overhead for low-overhead-timers is dramatically lower than for periodic-performance-counters because it is implemented with only user-mode instructions (RDPMC and RDTSC(P)). On SKX, the RDPMC instruction requires about 24 core cycles, while the /dev/cpu/*/msr device driver calls in periodic-performance-counters took from 6100 to over 40000 TSC cycles (average 12300) when running on a busy system (executing MKL DGEMM or HPL on all cores).

I am sure that the overhead in periodic-performance-counters can be reduced significantly, but that has not been a priority. Using RDPMC requires being bound to a specific core (this is a significant fraction of the overhead of the /dev/cpu/*/msr path), so the code would have to have one thread per logical processor to avoid the interprocessor interrupts for rebinding. The overhead of reading the programmable plus fixed-function counters is about 58% of the total overhead of periodic-performance-counters, so even if those reads became free the overhead reduction would be well under a factor of 2. The only way to make the overhead a lot lower would be to install a kernel module that supports making many MSR reads with a single kernel call. The "msr-batch" function in https://github.com/LLNL/msr-safe should do this, but I have not yet tried to port that kernel module to my systems.

>>>I spent quite a while making sure that the syntax used in the "low-overhead-timers.c" file would work correctly and with very close to the lowest possible latency with both the Intel and GCC compilers for both separate compilation (using the header file) and for inline compilation (including the C source directly).>>>

I think, that using "low-overhead-timers" is a way to go for precise performance measurement at the function/loop scope and I will try to use it for single computational kernel measurement and for instrumentation insertion at the scope of many detected by the VTune hotspots (loops and functions)

I have the last question in regards to your methodology.

If I remember correctly the RDPMC instruction when executed from the user mode space reads only fixed counters. In order to access the programmable counters from the user-mode CR4.PCE bit should be set 1.

If I understood your method correctly, then you do not set CR4.PCE bit explicitly and instead the msr-tools are used to program the specified performance events and later the user-mode executed RDPMC reads those counters. In this scenario the overhead is as very low (as you stated: 24 cycles).

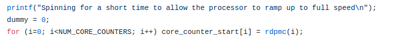

For example after programming a few events, later the RDPMC instruction can receive a simple loop induction variable as counter index, just as the following code snippet demonstrates?

CR4.PCE must be set within the kernel, and I don't know of any interface that would allow a user to request that it be set. Ten years ago CR4.PCE was *cleared* by default in most Linux distributions. I don't remember exactly when this changed, but it has been *set* by default on every Linux distribution I have looked at in the last 6+ years.

When using the low-overhead timers, it is important to avoid using the "perf" subsystem. "Perf" has an irritating tendency to disable the fixed-function counters upon exit, forcing the user to re-write IA32_FIXED_CTR_CTRL (MSR 0x38d) to the desired value (typically 0x333). Of course it also disables the programmable performance counters on exit, but it generally does that just by flipping the "enable" bit in each of the IA32_PERFEVTSELx registers, rather than by disabling everything via IA32_PERF_GLOBAL_CTRL (MSR 0x38f). I try to remember to fix these two MSRs in any script that programs the individual PerfEvtSel MSRs.

IA32_PERF_GLOBAL_CTRL should be 0x7000000FF on cores with HT disabled and 0x70000000F on cores with HT enabled.
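Those two values follow directly from the register layout: the low bits enable the programmable counters and bits 32 and up enable the fixed-function counters. A minimal sketch, with a hypothetical function name, assuming 3 fixed-function counters as on the processors discussed here:

```c
#include <stdint.h>

/* Build an IA32_PERF_GLOBAL_CTRL value that enables n_programmable
 * programmable counters (bits 0..n-1) and n_fixed fixed-function
 * counters (bits 32..32+n_fixed-1). */
static uint64_t perf_global_ctrl_mask(unsigned n_programmable, unsigned n_fixed)
{
    uint64_t prog  = (n_programmable >= 32) ? 0xFFFFFFFFULL
                                            : ((1ULL << n_programmable) - 1);
    uint64_t fixed = (n_fixed >= 32) ? 0xFFFFFFFFULL
                                     : ((1ULL << n_fixed) - 1);
    return prog | (fixed << 32);
}
```

perf_global_ctrl_mask(8, 3) gives 0x7000000FF (HT disabled) and perf_global_ctrl_mask(4, 3) gives 0x70000000F (HT enabled).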

If the NMI Watchdog is enabled, you will typically see MSR 0x38d set to something like 0x0b0. This enables interrupt on overflow for the CPU cycles not halted counter. The "right" way to deal with this is to disable the NMI watchdog and re-enable it after you are finished. A "good enough" way is to note that if the NMI watchdog never receives any interrupts, it can't cause any problem -- so just overwrite MSR 0x38d without any of the overflow bits set (e.g., 0x333) and everything works fine.
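The values 0x333 and 0x0b0 are easier to read once you know that IA32_FIXED_CTR_CTRL has one 4-bit field per fixed-function counter: bit 0 enables counting in kernel mode, bit 1 in user mode, bit 2 is AnyThread, and bit 3 requests an interrupt (PMI) on overflow. The helpers below are an illustrative sketch with made-up names, not code from my packages.

```c
#include <stdint.h>

/* Per-counter control bits within each 4-bit field of IA32_FIXED_CTR_CTRL. */
#define FIXED_EN_OS   0x1u  /* count in kernel mode */
#define FIXED_EN_USR  0x2u  /* count in user mode */
#define FIXED_ANYTHR  0x4u  /* count for any logical processor on the core */
#define FIXED_PMI     0x8u  /* interrupt on overflow */

/* Place the control bits for one fixed-function counter in its field. */
static uint64_t fixed_ctr_ctrl_field(unsigned counter, unsigned bits)
{
    return (uint64_t)(bits & 0xFu) << (4 * counter);
}

/* Clear the overflow-interrupt bit of the first n_fixed counters. */
static uint64_t fixed_ctr_ctrl_clear_pmi(uint64_t value, unsigned n_fixed)
{
    for (unsigned i = 0; i < n_fixed; i++)
        value &= ~((uint64_t)FIXED_PMI << (4 * i));
    return value;
}
```

Enabling kernel plus user counting on counters 0, 1 and 2 gives 0x333, while 0x0b0 is counter 1 with kernel, user and PMI set, which matches the NMI watchdog pattern described above.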

The source code for the "rdpmc()" function in low-overhead-timers takes an "int" argument, puts the value in %ecx, executes RDPMC, then packs %edx:%eax into a 64-bit return -- so you can generate the index any way you want. If the value you provide is out-of-range, the hardware will raise a #GP(0) exception. The programmable counters use indices 0-3 (with HT enabled) or 0-7 (with HT disabled).

    /* Read performance counter c: index in %ecx, result in %edx:%eax. */
    extern inline __attribute__((always_inline)) unsigned long rdpmc(int c)
    {
        unsigned long a, d;

        __asm__ volatile("rdpmc" : "=a" (a), "=d" (d) : "c" (c));

        return (a | (d << 32));
    }

The fixed-function counters use "special" counter numbers on all recent processors: "(1<<30) + [0,1,2]".

If you can remember that convention, you can pass these values to the "rdpmc(int c)" function, but I also provide explicit function names that I find easier to remember, e.g.:

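    /* Read fixed-function counter 1 (unhalted core cycles). */
    extern inline __attribute__((always_inline)) unsigned long rdpmc_actual_cycles()
    {
        unsigned long a, d, c;

        c = (1UL<<30)+1;  /* bit 30 selects the fixed-function counters */
        __asm__ volatile("rdpmc" : "=a" (a), "=d" (d) : "c" (c));

        return (a | (d << 32));
    }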
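Two small helpers that go with these reads are sketched below. The names are hypothetical, and the 48-bit counter width is an assumption that holds for the processors discussed in this thread; the actual width is reported by CPUID leaf 0AH. The delta helper matters because the counters wrap at their width rather than at 64 bits.

```c
#include <stdint.h>

/* RDPMC index for fixed-function counter n:
 * 0 = instructions retired, 1 = unhalted core cycles,
 * 2 = unhalted reference cycles. */
static uint32_t rdpmc_fixed_index(unsigned n)
{
    return (1u << 30) + n;
}

/* Difference between two reads of the same counter, tolerating one
 * wraparound of a counter that is width_bits wide (48 assumed here). */
static uint64_t pmc_delta(uint64_t start, uint64_t end, unsigned width_bits)
{
    uint64_t mask = (width_bits >= 64) ? ~0ULL : ((1ULL << width_bits) - 1);
    return (end - start) & mask;
}
```

A typical pattern is to read the counter before and after the code of interest while pinned to one core, then call pmc_delta(before, after, 48).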
