Hi Intel team.

I've written this code, which works correctly (result = 19) on a CPU. But for some reason, when I run it on a GPU, it returns 25. (These tests were done on DevCloud nodes with gen9 GPUs, selecting between the GPU and the CPU with gpu_selector or cpu_selector when creating the queue.)

I've checked every variable, including whether it lives in device or host memory. I've also tried replacing every "malloc_device" with "malloc_host", so that everything was in host memory, and the same thing still happens.

The only difference I've found is that the value returned by "gesvd_scratchpad_size()" is very different on the two devices (~1000 on the GPU and ~6000 on the CPU). However, I forced the CPU value on the GPU and nothing changed, so that doesn't seem to be the problem.

I'm not using accessors or malloc_shared, only malloc_device and malloc_host.

The body of the code is from line 276 to 531.

I've attached everything in "For_Intel.zip". To execute the code, just follow these steps:

1. Compile with the "make" command, as usual.

2. Run with "./vd Cuprite 5 output" (the "output" argument is unused but required).

3. The program prints the result ("res"), which should be 19.

4. To repeat the process, run "make clean" before compiling again.

What is happening in my code?

Thank you very much.


Are you submitting the code to a job queue with qsub?


Yes, I use the interactive mode, compile the code, and then launch it.


Hi,

Thanks for reaching out to us.

We are able to reproduce the issue from our end.

We are working on your issue and will get back to you soon.

Regards,

Vidya.


Hi Vidya, any news?

Looking forward to your answer.


Hello Vidya. I have a couple of updates about this issue:

I have run two different implementations (SYCL/DPC++ with the oneMKL DPC++ API, and OpenMP offloading with the oneMKL C API) of a different program on the gen9 GPU. Again, they return a different result from the other versions I have of the same program (versions that I have run on all the other DevCloud CPUs and on a couple of NVIDIA GPUs; all of these give the same result).

The curious thing is that when I compared the results of these two versions (whose results differ from the rest), they turned out to be the same! So the gen9 GPU gives a different result than it should, but it gives that same result in every implementation, regardless of the programming paradigm used.

So my hypothesis is that the gen9 GPU behaves differently in some way I'm not aware of.

It would be very useful if you could run the code attached to my first message on a dedicated Intel GPU, instead of the integrated gen9, to see whether it gives the same result (I believe there are no dedicated Intel GPUs on DevCloud that I can try myself).

Looking forward to your answer.

Thank you.


Hi Adrian,

Apologies for the delay and I really appreciate your efforts.

We tried running the code on different DevCloud nodes and on different machines. On local machines with the same gen9 iGPU, the issue does not reproduce. On DevCloud, some nodes (here s001-n140) give the same results on both CPU and GPU, while other nodes give different results.

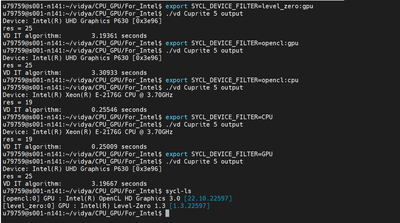

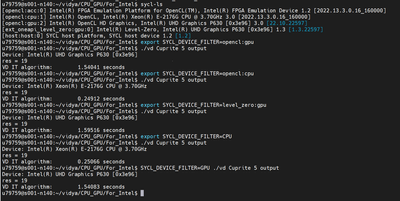

Here are the screenshots for both cases.

Node which gives different results:

Node which gives the same results:

As you can see, in both screenshots the results come from the Intel(R) UHD Graphics P630 device.

So the different results might be caused by some of the DevCloud nodes.

Please give it a try and do let me know if you have any issues in doing so.

Regards,

Vidya.


Hi Adrian,

Reminder:

As we haven't heard back from you, could you please provide us with an update regarding the issue?

Please let us know if you need any help in this case.

Regards,

Vidya.


Hello Vidya, thank you for answering. I couldn't reply sooner because I was away from my workplace, sorry.

Yes, I have tried the codes on that node and they work! Every code I have tried worked correctly except one.

I'm sending you this code. It is the same program, but it uses oneMKL parallel functions with the OpenMP offload feature instead of SYCL and oneMKL. It should give the same result, but it gives 85. I've tried it on two nodes (140 and 159) and the result is the same.

I have not been able to find the error in the code, so I'm sending it to you in the hope that you can run it locally and see whether it works correctly (result = 19).

The code is compiled and run in the same way as the one in the first message.

Thank you very much Vidya!


Hey! Here is another version of the code; I think I found an error and fixed it. It would be great if you could try both versions locally.

Looking forward to your answer.

Thank you Vidya!


Hi Adrian,

Thanks for sharing the details.

>>...if you could try both versions in local.

I tried running both versions on a local machine: the code you shared in the earlier post (For_Intel_2.zip) gives 27, and the code in your previous post (For_Intel_New_Version.zip) gives 25.

On DevCloud, the results are 85 for the code in For_Intel_2.zip and 83 for the code in For_Intel_New_Version.zip.

>> I think I found an error and solved it.

Could you please let us know what changes you have made?

Regards,

Vidya.


Hello Vidya! Happy to hear from you again!

I see... how curious. It looks like DevCloud adds a constant offset (in this case 58, since 85 - 27 = 83 - 25 = 58) to the "true" output of the program.

Rather than fixing an error, what I did was change how the work is distributed between the host and the device. In the first code, the "count" variable is updated on the device. In the newer version I moved that work to the host, so I also had to add some "#pragma omp target update from..." directives to keep the host variables up to date. These changes are around line 429.
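The host/device split described above can be sketched as follows. This is a minimal, hypothetical example (the function count_above, the arrays a, b and diff, and the threshold test are illustrative, not taken from the attached code): the device computes an element-wise result, "target update from" refreshes the host copy, and the host then updates the counter sequentially.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch: device computes diff = a - b, host counts entries above threshold.
int count_above(const double *a, const double *b, std::size_t n, double threshold) {
    std::vector<double> diff_storage(n, 0.0);
    double *diff = diff_storage.data();
    int count = 0;

    // Keep a, b and diff resident on the device for the whole region.
    #pragma omp target data map(to: a[0:n], b[0:n]) map(alloc: diff[0:n])
    {
        // Device-side work: element-wise difference.
        #pragma omp target teams distribute parallel for
        for (std::size_t i = 0; i < n; ++i)
            diff[i] = a[i] - b[i];

        // Copy the device values of diff into the host copy before reading them there.
        #pragma omp target update from(diff[0:n])

        // Host-side work: the counter is updated sequentially, so no reduction is needed.
        for (std::size_t i = 0; i < n; ++i)
            if (diff[i] > threshold)
                ++count;
    }
    return count;
}
```

Because the counter is only touched on the host, no reduction clause is needed; the cost is an extra device-to-host transfer of diff.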

Following this reasoning, if the offset really exists, a correct program should output 77 on DevCloud (19, the correct result, plus 58, the offset). I have created another, much simpler version (attached as "For_Intel_Basic_Version.zip"). In this version, all the "kernels" and computation run on the host, and only the oneMKL functions are executed on the device. As you can see, the code is mostly data movement to keep both sides coherent; all computation other than the oneMKL functions is done sequentially on the host, with no parallel optimization.

This new code also outputs 83 on DevCloud. Could you also run it locally, to see whether 83 on DevCloud always corresponds to 25 locally?

The last thing I have thought about is that the problem could be in the oneMKL functions executed on the device, that is, there may be a problem when executing oneMKL functions on the gen9 GPU. I'm 100% sure the parameters in the oneMKL calls are correct, as they are the same as in another version that works (the same OpenMP + MKL code, but for CPU execution instead of GPU offloading; it is also attached as "For_Intel_CPU_version.zip", in case you want to compare both versions).

Do you think that could be a possibility?

Thank you very much!


Hi Adrian, @gamersensual14

Thanks for sharing the test codes and I really appreciate your efforts.

Could you please try changing the line in VD.cpp (around line 439, I think) from the For_Intel_Basic_version zip file as shown below?

#pragma omp target update from(CovEigVal, CorrEigVal)

After the above change, please try running the code and see if you still get incorrect results.

>> oneMKL functions executed in the device. By this I mean that there may be a problem when executing oneMKL functions in the gen9 GPU

Well, I don't think the problem is with the gen9 GPU, because it is a supported device according to the oneMKL system requirements.

I think the problem lies in the way the values are updated from device to host, because after the above change I could see the output as 19 both on DevCloud and on the local machine.

Please give it a try and do let me know if it did not work.

Regards,

Vidya.


Hi Vidya!

As you said, it worked and returned 19. The problem now is that I don't understand why all the other "update" directives I used have to specify the size and these last two do not.

For example, this line:

#pragma omp target update to(image[:lines*samples*bands])

cannot be replaced with this:

#pragma omp target update to(image)

With this last line, the program returns 1.

The same goes for the other lines; for example, you can't remove the size from this line either:

#pragma omp target update from(Cov[:bands*bands])
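The difference comes from how OpenMP treats the names listed in map and update clauses. For a variable of array type, the name alone covers the whole array, because its size is part of the type. For a pointer, the name refers only to the pointer variable itself, so the data it points to has to be named with an array section such as "image[:n]". A minimal sketch, assuming a small fixed-size array eig and a heap buffer buf (both hypothetical names, not from the attached code):

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch: the device writes into a fixed-size array and into a heap
// buffer reached through a pointer; both are copied back with "target update from".
double fill_and_sum(std::size_t n) {
    constexpr int kBands = 4;        // hypothetical fixed size
    double eig[kBands] = {};         // array type: the extent is known from the type
    std::vector<double> storage(n, 0.0);
    double *buf = storage.data();    // pointer: the extent must be given explicitly

    #pragma omp target data map(alloc: eig, buf[0:n])
    {
        #pragma omp target
        {
            for (int i = 0; i < kBands; ++i) eig[i] = 1.0;
            for (std::size_t i = 0; i < n; ++i) buf[i] = 2.0;
        }
        // Array: naming the variable is enough to transfer all kBands elements.
        #pragma omp target update from(eig)
        // Pointer: "update from(buf)" would name only the pointer variable, so an
        // array section is needed to transfer the n doubles it points to.
        #pragma omp target update from(buf[0:n])
    }

    double sum = 0.0;
    for (int i = 0; i < kBands; ++i) sum += eig[i];
    for (std::size_t i = 0; i < n; ++i) sum += buf[i];
    return sum;
}
```

In other words, "update from(CovEigVal)" only works without a size if CovEigVal is declared as an array; for anything allocated dynamically and held in a pointer, the "[:size]" part is what tells OpenMP how much data to move.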


Hi Adrian,

Thanks for the details and for all the information.

We are working on it and we will get back to you soon.

Regards,

Vidya.


Hi Vidya!

OK, I have a few things to tell you... first of all, the code finally works!!! Yes! Let's go step by step:

About the #pragma update thing, it turns out that this pragma does not work with pointers, only with static arrays or variables. If you want to copy dynamically allocated arrays through pointers, you have to use the OpenMP function omp_target_memcpy(). In case anyone reading this needs an example of this function, I have uploaded "NUEVA PRUEBA.zip", which contains an original code that uses it in both directions (host -> device and device -> host). I needed that data movement for some tests, but I didn't use it in the final version of the code.
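For readers looking for the omp_target_memcpy() pattern mentioned above, here is a minimal sketch (not the code from "NUEVA PRUEBA.zip"; the helper name round_trip is hypothetical). It allocates device memory with omp_target_alloc(), copies host -> device and device -> host, and falls back to a plain memcpy when compiled without OpenMP so that it still builds. omp_target_memcpy() returns 0 on success.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>
#ifdef _OPENMP
#include <omp.h>
#endif

// Hypothetical helper: sends host_in to device memory and copies it back into
// host_out. Returns true if every step succeeded.
bool round_trip(const std::vector<double> &host_in, std::vector<double> &host_out) {
    const std::size_t bytes = host_in.size() * sizeof(double);
    host_out.assign(host_in.size(), 0.0);
    if (bytes == 0) return true;
#ifdef _OPENMP
    const int host = omp_get_initial_device();
    // Use the default device when one is available, otherwise the host itself.
    const int dev = omp_get_num_devices() > 0 ? omp_get_default_device() : host;
    void *d_buf = omp_target_alloc(bytes, dev);
    if (d_buf == nullptr) return false;
    // Argument order: dst, src, length, dst_offset, src_offset, dst_device, src_device.
    // const_cast because older omp.h headers declare src as a non-const void*.
    const int to_dev = omp_target_memcpy(d_buf, const_cast<double *>(host_in.data()),
                                         bytes, 0, 0, dev, host);   // host -> device
    const int to_host = omp_target_memcpy(host_out.data(), d_buf,
                                          bytes, 0, 0, host, dev);  // device -> host
    omp_target_free(d_buf, dev);
    return to_dev == 0 && to_host == 0;
#else
    // Without OpenMP there is no device, so a host copy stands in for the round trip.
    std::memcpy(host_out.data(), host_in.data(), bytes);
    return true;
#endif
}
```

Memory from omp_target_alloc() is not associated with any host variable, so it is typically passed to device code with is_device_ptr rather than through map clauses.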

Now about the code, it had two errors:

1. The first one was that the variable "mean" in the first loop after entering the data region wasn't working as I expected. I changed it from an array to a single variable "mean" and made it private to each thread, and it worked.

2. The second error is the strange one. Since I'm using the OpenMP offloading feature, several Intel pages tell me to use "#pragma omp target variant dispatch use_device_ptr(...)" and call a function inside it, to tell the compiler that I want that function executed on the device. Well, this works with the CBLAS call to dgemm(), but fails with the LAPACKE calls to dgesvd(). Replacing "variant dispatch" with "data" makes it work.
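The fix in point 1 amounts to giving each thread its own copy of the temporary. A minimal sketch, assuming a band-major image and a hypothetical per-band mean-centering loop (center_bands and the data layout are illustrative, not from the attached code):

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical sketch: subtract each band's mean from its pixels in a band-major
// image of size bands x pixels, with "mean" private to each thread.
void center_bands(double *image, std::size_t bands, std::size_t pixels) {
    if (bands == 0 || pixels == 0) return;  // nothing to do; also avoids dividing by 0
    const std::size_t total = bands * pixels;
    double mean = 0.0;
    // private(mean): every thread gets its own copy of "mean", so iterations running
    // concurrently never overwrite each other's partial sums.
    #pragma omp target teams distribute parallel for private(mean) map(tofrom: image[0:total])
    for (std::size_t b = 0; b < bands; ++b) {
        mean = 0.0;
        for (std::size_t p = 0; p < pixels; ++p) mean += image[b * pixels + p];
        mean /= static_cast<double>(pixels);
        for (std::size_t p = 0; p < pixels; ++p) image[b * pixels + p] -= mean;
    }
}
```

Declaring "double mean" inside the loop body would have the same effect, since variables declared inside a parallel loop are private by default.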

Not only that, but performance is also much better. Here are the execution times. In these runs only the first CBLAS call was changed (one version uses "data", the other "variant dispatch"); the LAPACKE calls are always inside data regions so that the code returns the correct result. The tests were done on node s001-n178, which seems to work fine, like s001-n140.

With "data": 1.04 s

With "variant dispatch": 2.47 s

So I ended up replacing every "variant dispatch" with "data", so the three function calls in the code are all inside a "#pragma omp target data use_device_ptr(...)" region.

After all of that, my question is: am I doing something wrong when using the LAPACKE routines on the GPU? Am I forgetting something? They just don't work when executed inside a "variant dispatch" region.

I have uploaded both versions of the code, one with all the "data", and the other with all the "variant dispatch" in "For Intel WORKS.zip".

Please tell me if I have to change something in my code for it to be correctly programmed using the OMP Offloading feature, or if the error is somewhere else.

Looking forward to your answer.

Best regards,

Adrian


Dear Adrian,

Thank you for your patience.

Intel oneAPI is constantly evolving. We want to let you know that with the latest oneAPI version (oneAPI 2023.2), we can no longer reproduce the issue on our side. Please let us know if the issue still exists in your tests; we will be happy to support you.

Best Regards,

Ruqiu


Dear oneMKL users,

A new version of oneMKL (oneMKL 2024.0) is now available on our website.

Thank you for reaching out to us. This issue is being closed and we will no longer respond to this thread. If you require additional assistance from Intel, please start a new thread. Any further interaction in this thread will be considered community only.

Best Regards,

Ruqiu
