As the question says: will the pattern emitted by a D400 series camera interfere with the structured light emitted by an SR300 when the two are used at the same time?


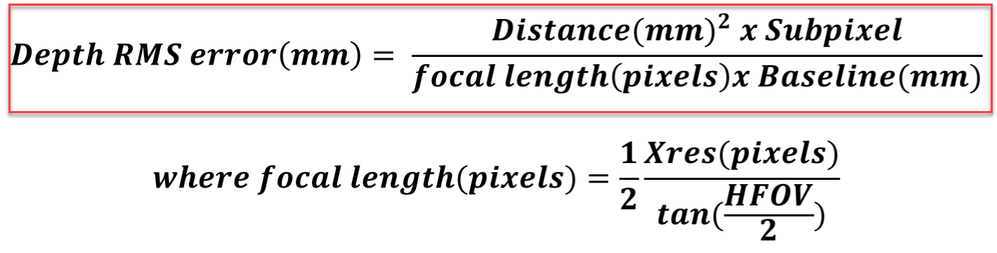



400 Series cameras such as the D415 and D435 do not interfere with each other, but they can interfere with non-400 Series cameras.

In 2012, Microsoft did research into breaking up interference in the Kinect v.1 structured light camera with vibrating motors.

https://www.microsoft.com/en-us/research/wp-content/uploads/2016/02/shake27n27Sense.pdf


Does this mean I can't use both a D400 series camera and an SR300 on the same robot?


Technically you can mix different RealSense camera types on a robot, and others have done this in the past (for example, an R200 and a ZR300). The SR300 is likely to experience interference from the 400 Series camera though. You would also get interference if you used two SR300 cameras together rather than pairing one with a 400 Series camera, as the SR300 does not have anti-interference technology.

Other than trying Microsoft's interference break-up technique with a structured light camera such as the SR300, another possibility that has been discussed in the past for gaining greater control over the projected pattern is to use an external shutter. People tend to build these themselves in 'do it yourself' (DIY) projects. You can find out more by googling for 'external shutter camera diy'.

Could you tell us more, please, about why you wish to use an SR300? If it is because there is software you want to use that does not work with newer cameras, there may be alternative software for the 400 Series that you could use. Thanks!


Hello,

I'm working on a robot project with a mobile platform and two robot arms. I want one camera mounted on the platform for SLAM, and another mounted on the tool end of a robot arm for manipulation. So the two cameras will see the same place at the same time. I have tested the D435, and the accuracy of its point cloud is not good at close range. So I need a camera that performs better at close range for tasks such as object scanning, and that will not interfere with the D400 series.


You could try adjusting the 'depth units' of the D435 to match the scale of the depth units on the SR300 (which has better accuracy at very close range because of its depth unit value).

https://forums.intel.com/s/question/0D70P000006B2MfSAK

The D415 model is more suited to object scanning than the D435. It typically has a higher image quality too, though it has a smaller field of view (~ 70 degrees instead of the D435's ~ 90 degrees) and a longer minimum distance (30 cm from the object instead of the D435's 20 cm). The ideal setup may therefore be a D415 with its depth units adjusted to match those of the SR300.
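To illustrate the trade-off behind the 'depth units' setting, here is a minimal sketch. It assumes depth is stored as an unsigned 16-bit value per pixel, that the 400 Series default depth unit is 0.001 m, and that the SR300's is roughly 0.000125 m. These figures are assumptions for illustration and are worth checking against your own cameras. The function names are hypothetical and not part of the RealSense SDK.

```python
# Sketch: how the depth unit affects step size and maximum range.
# Assumption: depth is stored as an unsigned 16-bit integer per pixel.
UINT16_MAX = 65535

# Assumed values for illustration only; verify against your own devices.
D400_DEFAULT_DEPTH_UNIT_M = 0.001     # 1 mm per raw step
SR300_LIKE_DEPTH_UNIT_M = 0.000125    # 1/8 mm per raw step


def raw_to_metres(raw_value, depth_unit_m):
    """Convert a raw 16-bit depth value to metres."""
    return raw_value * depth_unit_m


def max_range_m(depth_unit_m):
    """Largest distance a 16-bit depth value can represent."""
    return UINT16_MAX * depth_unit_m


if __name__ == "__main__":
    for name, unit in [("D400 default", D400_DEFAULT_DEPTH_UNIT_M),
                       ("SR300-like", SR300_LIKE_DEPTH_UNIT_M)]:
        print(f"{name}: step = {unit * 1000:.3f} mm, "
              f"max representable range = {max_range_m(unit):.2f} m")
```

A smaller depth unit gives finer depth steps at close range, at the cost of a shorter maximum representable distance. That is usually an acceptable trade for object scanning.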


Hello,

I'm confused about the D415 being more suited to scanning. The minimum range of the D415 is 30 cm and that of the D435 is 20 cm. Firstly, accuracy becomes worse as distance increases. Secondly, the D415 has a higher image quality. Combining these two factors, is the D415 still more suited to scanning?


The amount of noise over distance in the D415 and D435 models is related to something called RMS error. The error grows with distance, becoming particularly noticeable around 3 meters away and increasing further after that. At a short distance from the camera, the absolute difference in RMS error between the D415 and D435 is small. If you look at the curve of RMS error over distance in the diagram in the link below though, the D435 has roughly twice the RMS error of the D415.

This is due to the hardware design of the D435. I believe that the wider field of view of the D435 is a factor in this.

https://forums.intel.com/s/question/0D70P000005PV8fSAG

For object scanning, D415 is the recommended option, yes.

It is also worth mentioning that if the object you are observing comes closer to the camera than the minimum distance, the image will start to break up. A simple way to reduce the minimum distance is to use a lower resolution. A more complex way is to increase the 'Disparity Shift' value so that the camera's minimum distance (MinZ) reduces. The maximum observable distance (MaxZ) reduces too, though a reduced observation range is not much of a problem when working at close range.
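As a rough sketch of why both approaches work, the snippet below uses the relationships as I understand them from Intel's tuning guide: MinZ = focal length (pixels) x baseline (mm) / (126 + disparity shift), and MaxZ = focal length (pixels) x baseline (mm) / disparity shift. The search range of 126 disparities, the example focal lengths and the 50 mm baseline are assumptions for illustration, and the function names are hypothetical.

```python
import math

# Assumption: the stereo matcher searches 126 disparities beyond the shift.
DISPARITY_SEARCH_RANGE = 126


def min_z_mm(focal_px, baseline_mm, disparity_shift=0):
    """Approximate minimum observable distance in mm."""
    return focal_px * baseline_mm / (DISPARITY_SEARCH_RANGE + disparity_shift)


def max_z_mm(focal_px, baseline_mm, disparity_shift=0):
    """Approximate maximum observable distance in mm (infinite with no shift)."""
    if disparity_shift == 0:
        return math.inf
    return focal_px * baseline_mm / disparity_shift


if __name__ == "__main__":
    baseline = 50.0  # mm, assumed example value
    # Halving the resolution roughly halves the focal length in pixels.
    for focal in (640.0, 320.0):
        for shift in (0, 50, 100):
            print(f"focal={focal:.0f}px shift={shift:3d}: "
                  f"MinZ={min_z_mm(focal, baseline, shift):7.1f} mm, "
                  f"MaxZ={max_z_mm(focal, baseline, shift):7.1f} mm")
```

Under these assumptions, a lower resolution shrinks MinZ because the focal length in pixels shrinks with it, while a larger disparity shift pulls both MinZ and MaxZ toward the camera.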


Hello,

Where can I download the texture file?


It looks like the download of this image was removed when Intel recently changed the design of the RealSense websites. The Chief Technical Officer of the RealSense Group provides a similar texture in the link below though. Right-click on the image and select Save Image from the menu to save the image file.

https://github.com/IntelRealSense/librealsense/issues/1267#issuecomment-372715603


As far as I know you can only have one active preset at a time. You can create your own preset file though and try to balance some of your priorities in one preset. You could create it manually in a text editor. The easiest way to do it though will be to define the settings in the options menus of the RealSense Viewer program and then save the settings as a 'JSON' preset file using the save / load buttons beside the drop-down menu where you select a preset.

Dorodnic, the RealSense SDK Manager, provides a short script for loading a JSON here:

https://github.com/IntelRealSense/librealsense/issues/3037#issuecomment-452732168


Hello

In addition, could I trouble you to explain the formula for Depth RMS error?


It is not an easy formula to calculate. I will try to break down its elements.

Distance (mm)² is presumably the square of the distance in mm of an observed coordinate from the camera. So if something was 1 meter away (1000 mm), then the squared value would be 1000 x 1000 = 1,000,000 mm².

Subpixel is a term that is hard to find an easily understandable explanation for. In terms of RMS error, it usually refers to a subpixel accuracy value: how finely the camera can resolve the match between the left and right images, measured in fractions of a pixel. Intel's camera tuning guide says: "For a well-textured target, you can expect to see subpixel < 0.1 and even approaching 0.05".

In general, the more texture detail that the camera can read from a surface, the better.

Baseline usually refers to the distance between the center of the left and right imager sensors on a stereo camera.

Focal length is, I believe in this case, expressed in pixels rather than millimeters. The camera's intrinsics give it as two values: fx (along the image width) and fy (along the image height). The values can be different but are commonly close. The RealSense SDK's automated RMS error calculation routine in its Depth Quality Tool seems to use fx for the focal length. If fx and fy are close, it would make sense to just use fx to keep the calculation simple.
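To put the elements together, here is a minimal sketch of the calculation. It assumes the formula is RMS error (mm) = distance (mm)² x subpixel / (focal length (pixels) x baseline (mm)), and that focal length in pixels can be estimated as 0.5 x image width / tan(HFOV / 2), as I understand it from Intel's tuning guide. The function names, the resolution, the field of view, the baseline and the subpixel value are illustrative assumptions, not measured figures.

```python
import math


def focal_length_px(width_px, hfov_deg):
    """Estimate focal length in pixels from image width and horizontal FOV."""
    return 0.5 * width_px / math.tan(math.radians(hfov_deg) / 2.0)


def depth_rms_error_mm(distance_mm, subpixel, focal_px, baseline_mm):
    """Theoretical depth RMS error in mm for a stereo camera."""
    return (distance_mm ** 2) * subpixel / (focal_px * baseline_mm)


if __name__ == "__main__":
    # Assumed example camera: 1280 px wide, ~90 degree HFOV, 50 mm baseline.
    focal = focal_length_px(1280, 90.0)
    for distance in (500, 1000, 2000, 3000):
        err = depth_rms_error_mm(distance, subpixel=0.08,
                                 focal_px=focal, baseline_mm=50.0)
        print(f"{distance:5d} mm -> RMS error ~ {err:.2f} mm")
```

Because distance is squared, doubling the distance quadruples the error. A longer baseline or a larger focal length in pixels (a narrower field of view or a higher resolution) reduces it.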
