I'm trying to migrate a CUDA project using dpct, but the project only provides CMake files.

intel@intel-NUC8L1OFST:src$ intercept-build make

make: *** No targets specified and no makefile found. Stop.

My question is: how do I migrate a CMake project from CUDA to SYCL/DPC++ code on Linux?

like this:

Appreciate your help.


Hi,

Thanks for reaching out to us.

>>make: *** No targets specified and no makefile found. Stop.

This error indicates that there is no "Makefile" available to build your application, which is also evident from the absence of a "Makefile" in your screenshot of the application directory.

Please check whether the binary executable can be successfully generated for your application before proceeding with the migration to SYCL/DPCPP. This will ensure the correctness of your build process.

Please note that "intercept-build" requires a properly working "Makefile" to create the compilation database.

>>My question is how to migrate CMAKE project from CUDA to sycl/dpcpp codes on LINUX platform?

Have you tried configuring CMake to generate a Makefile?
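As a rough illustration of the point above, the sketch below checks for a Makefile before calling intercept-build. The helper name `ensure_makefile` is hypothetical (it is not part of dpct or intercept-build), and the commented flow assumes a standard out-of-source CMake build with the default Makefile generator.

```shell
#!/bin/sh
# Hypothetical helper: report whether a directory holds a Makefile that
# `make` (and therefore `intercept-build make`) could pick up.
ensure_makefile() {
  dir="${1:-.}"
  # GNU make looks for these names, in this order.
  for name in GNUmakefile makefile Makefile; do
    if [ -f "$dir/$name" ]; then
      echo "found $dir/$name"
      return 0
    fi
  done
  echo "no Makefile in $dir; configure with CMake first" >&2
  return 1
}

# Typical out-of-source flow (commented out so the helper can be sourced safely):
#   mkdir -p build && cd build
#   cmake ..
#   ensure_makefile . && intercept-build make
```

Running the check from the source directory of a CMake-only project should fail, which matches the "No targets specified and no makefile found" error quoted above.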

Please refer to the following resources to streamline your migration.

https://techdecoded.intel.io/essentials/migrating-existing-cuda-code-to-data-parallel-c/#gs.lpa009

https://www.intel.cn/content/www/cn/zh/developer/articles/technical/intel-dpcpp-compatibility-tool-best-practices.html

If the issue still persists, please provide us a small reproducer (steps to reproduce it if any) so that we can work on it from our end.

Regards,

Manjula.

Hello,

Thanks for your help.

I tried your suggestion; my steps are listed here:

intel@intel-NUC8L1OFST:workspace$ git clone https://github.com/saifullah3396/octomap.git

intel@intel-NUC8L1OFST:workspace$ cd octomap/

intel@intel-NUC8L1OFST:octomap$ git checkout cuda-devel

Prepare:

intel@intel-NUC8L1OFST:octomap$ source /opt/intel/oneapi/setvars.sh

intel@intel-NUC8L1OFST:octomap$ export PATH=$PATH:/usr/local/cuda/bin/

intel@intel-NUC8L1OFST:octomap$ mkdir build && cd build/

intel@intel-NUC8L1OFST:build$ cmake ../

intel@intel-NUC8L1OFST:build$ intercept-build make

[ 2%] Built target octomath-static

[ 5%] Built target octomath

[ 14%] Built target octomap-static

[ 16%] Built target octree2pointcloud

[ 19%] Built target simple_example

[ 21%] Built target graph2tree

[ 24%] Built target log2graph

[ 26%] Built target binvox2bt

[ 35%] Built target octomap

[ 37%] Built target intersection_example

[ 39%] Built target bt2vrml

[ 42%] Built target edit_octree

[ 45%] Built target convert_octree

[ 47%] Built target eval_octree_accuracy

[ 49%] Built target compare_octrees

[ 51%] Built target normals_example

[ 53%] Built target test_raycasting

[ 54%] Built target test_iterators

[ 55%] Built target test_scans

[ 56%] Built target test_pruning

[ 58%] Built target test_io

[ 59%] Built target test_changedkeys

[ 60%] Built target test_color_tree

[ 61%] Built target color_tree_histogram

[ 63%] Built target test_mapcollection

[ 65%] Built target unit_tests

[ 70%] Built target octovis-shared

[ 89%] Built target octovis

[ 94%] Built target octovis-static

[ 95%] Built target dynamicedt3d-static

[ 96%] Built target dynamicedt3d

[ 97%] Built target exampleEDTOctomapStamped

[ 99%] Built target exampleEDTOctomap

[100%] Built target exampleEDT3D

intel@intel-NUC8L1OFST:build$ cat compile_commands.json

[]

// Here the json file is empty.

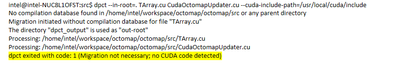

intel@intel-NUC8L1OFST:build$ dpct -p compile_commands.json --in-root=. --out-root=migration

dpct exited with code: 1 (Migration not necessary; no CUDA code detected)

// Shows that no CUDA code
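A quick way to confirm whether the compilation database captured any CUDA sources before running dpct is sketched below. The helper name `count_cuda_entries` is hypothetical, and the pattern assumes the JSON is pretty-printed with one `"file"` field per line; a database written on a single line would report at most 1.

```shell
#!/bin/sh
# Hypothetical helper: count compilation-database entries whose "file"
# field names a .cu source.
count_cuda_entries() {
  db="${1:-compile_commands.json}"
  [ -f "$db" ] || { echo "missing $db" >&2; return 2; }
  # grep -c prints 0 and exits non-zero when nothing matches; keep the count.
  grep -c '"file"[[:space:]]*:[[:space:]]*"[^"]*\.cu"' "$db" || true
}

# Example:
#   count_cuda_entries build/compile_commands.json
```

A count of 0 suggests that no .cu file was compiled during the intercepted build, so dpct has nothing to migrate from that database.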

Next, I located the CUDA code and tried to migrate it manually:

intel@intel-NUC8L1OFST:build$ cd ../octomap/src/

Actually, the image is the one I sent on my last topic.

Run

intel@intel-NUC8L1OFST:src$ dpct --in-root=. TArray.cu CudaOctomapUpdater.cu --cuda-include-path=/usr/local/cuda/include

No compilation database found in /home/intel/workspace/octomap/octomap/src or any parent directory

Migration initiated without compilation database for file "TArray.cu"

The directory "dpct_output" is used as "out-root"

Processing: /home/intel/workspace/octomap/octomap/src/TArray.cu

Processing: /home/intel/workspace/octomap/octomap/src/CudaOctomapUpdater.cu

dpct exited with code: 1 (Migration not necessary; no CUDA code detected)

It again reports that there is no CUDA code.

But the file does contain CUDA code, see https://github.com/saifullah3396/octomap/blob/cuda-devel/octomap/src/CudaOctomapUpdater.cu

cudaCheckErrors(cudaMallocManaged(&free_hash_arr_device_, sizeof(UnsignedArrayCuda)));

cudaCheckErrors(cudaMemcpy(free_hash_arr_device_, &free_hash_arr_host, sizeof(UnsignedArrayCuda), cudaMemcpyHostToDevice));

cudaCheckErrors(cudaMallocManaged(&occupied_hash_arr_device_, sizeof(UnsignedArrayCuda)));

cudaCheckErrors(cudaMemcpy(occupied_hash_arr_device_, &occupied_hash_arr_host, sizeof(UnsignedArrayCuda), cudaMemcpyHostToDevice));

TArray<KeyHash> free_hashes(max_hash_elements_);

TArray<KeyHash> occupied_hashes(max_hash_elements_);

free_hashes.allocateDevice();

occupied_hashes.allocateDevice();

cudaCheckErrors(cudaMallocManaged(&free_hashes_device_, sizeof(TArray<KeyHash>)));

cudaCheckErrors(cudaMemcpy(free_hashes_device_, &free_hashes, sizeof(TArray<KeyHash>), cudaMemcpyHostToDevice));

cudaCheckErrors(cudaMallocManaged(&occupied_hashes_device_, sizeof(TArray<KeyHash>)));

cudaCheckErrors(cudaMemcpy(occupied_hashes_device_, &occupied_hashes, sizeof(TArray<KeyHash>), cudaMemcpyHostToDevice));

Does dpct support this code, or am I doing something wrong?

Thank you.

Hi,

Thanks for providing the detailed steps to reproduce your issue.

We tried to reproduce your issue on our end, but we were able to successfully generate a valid compile_commands.json file with contents.

Please find the attached JSON file which was generated on our end.

To validate that the toolkit is installed correctly, could you please try migrating the simple vectorAdd sample from the NVIDIA CUDA Samples and let us know whether you face the same issue?

Please find the link for CUDA Samples below.

https://github.com/NVIDIA/cuda-samples/tree/master/Samples/0_Introduction/vectorAdd

Regards,

Manjula

Hi Manjula,

Here are the steps I took to validate the toolkit installation, as you requested.

The sample migration works for me.

intel@intel-NUC8L1OFST:~$ git clone https://github.com/NVIDIA/cuda-samples

intel@intel-NUC8L1OFST:~$ cd cuda-samples/Samples/0_Introduction/vectorAdd/

intel@intel-NUC8L1OFST:vectorAdd$ ls

Makefile README.md vectorAdd_vs2017.sln vectorAdd_vs2019.sln vectorAdd_vs2022.sln

NsightEclipse.xml vectorAdd.cu vectorAdd_vs2017.vcxproj vectorAdd_vs2019.vcxproj vectorAdd_vs2022.vcxproj

intel@intel-NUC8L1OFST:vectorAdd$ source /opt/intel/oneapi/setvars.sh

:: initializing oneAPI environment ...

-bash: BASH_VERSION = 5.0.17(1)-release

:: advisor -- latest

:: ccl -- latest

:: compiler -- latest

:: dal -- latest

:: debugger -- latest

:: dev-utilities -- latest

:: dnnl -- latest

:: dpcpp-ct -- latest

:: dpl -- latest

:: intelpython -- latest

:: ipp -- latest

:: ippcp -- latest

:: ipp -- latest

:: mkl -- latest

:: mpi -- latest

:: tbb -- latest

:: vpl -- latest

:: vtune -- latest

:: oneAPI environment initialized ::

intel@intel-NUC8L1OFST:vectorAdd$ intercept-build make

/usr/local/cuda/bin/nvcc -ccbin g++ -I../../../Common -m64 --threads 0 --std=c++11 -gencode arch=compute_35,code=sm_35 -gencode arch=compute_37,code=sm_37 -gencode arch=compute_50,code=sm_50 -gencode arch=compute_52,code=sm_52 -gencode arch=compute_60,code=sm_60 -gencode arch=compute_61,code=sm_61 -gencode arch=compute_70,code=sm_70 -gencode arch=compute_75,code=sm_75 -gencode arch=compute_80,code=sm_80 -gencode arch=compute_86,code=sm_86 -gencode arch=compute_86,code=compute_86 -o vectorAdd.o -c vectorAdd.cu

/usr/local/cuda/bin/nvcc -ccbin g++ -m64 -gencode arch=compute_35,code=sm_35 -gencode arch=compute_37,code=sm_37 -gencode arch=compute_50,code=sm_50 -gencode arch=compute_52,code=sm_52 -gencode arch=compute_60,code=sm_60 -gencode arch=compute_61,code=sm_61 -gencode arch=compute_70,code=sm_70 -gencode arch=compute_75,code=sm_75 -gencode arch=compute_80,code=sm_80 -gencode arch=compute_86,code=sm_86 -gencode arch=compute_86,code=compute_86 -o vectorAdd vectorAdd.o

mkdir -p ../../../bin/x86_64/linux/release

cp vectorAdd ../../../bin/x86_64/linux/release

intel@intel-NUC8L1OFST:vectorAdd$ cat compile_commands.json

[

{

"command": "nvcc -c -I../../../Common -m64 --std=c++11 -o vectorAdd.o -D__CUDACC__=1 vectorAdd.cu",

"directory": "/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd",

"file": "/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu"

}

]

intel@intel-NUC8L1OFST:vectorAdd$ dpct -p compile_commands.json --in-root=. --out-root=migration

Processing: /home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:92:9: warning: DPCT1003:0: Migrated API does not return error code. (*, 0) is inserted. You may need to rewrite this code.

err = cudaMalloc((void **)&d_A, size);

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:96:13: warning: DPCT1009:1: SYCL uses exceptions to report errors and does not use the error codes. The original code was commented out and a warning string was inserted. You need to rewrite this code.

cudaGetErrorString(err));

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:102:9: warning: DPCT1003:2: Migrated API does not return error code. (*, 0) is inserted. You may need to rewrite this code.

err = cudaMalloc((void **)&d_B, size);

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:106:13: warning: DPCT1009:3: SYCL uses exceptions to report errors and does not use the error codes. The original code was commented out and a warning string was inserted. You need to rewrite this code.

cudaGetErrorString(err));

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:112:9: warning: DPCT1003:4: Migrated API does not return error code. (*, 0) is inserted. You may need to rewrite this code.

err = cudaMalloc((void **)&d_C, size);

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:116:13: warning: DPCT1009:5: SYCL uses exceptions to report errors and does not use the error codes. The original code was commented out and a warning string was inserted. You need to rewrite this code.

cudaGetErrorString(err));

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:124:9: warning: DPCT1003:6: Migrated API does not return error code. (*, 0) is inserted. You may need to rewrite this code.

err = cudaMemcpy(d_A, h_A, size, cudaMemcpyHostToDevice);

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:129:13: warning: DPCT1009:7: SYCL uses exceptions to report errors and does not use the error codes. The original code was commented out and a warning string was inserted. You need to rewrite this code.

cudaGetErrorString(err));

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:133:9: warning: DPCT1003:8: Migrated API does not return error code. (*, 0) is inserted. You may need to rewrite this code.

err = cudaMemcpy(d_B, h_B, size, cudaMemcpyHostToDevice);

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:138:13: warning: DPCT1009:9: SYCL uses exceptions to report errors and does not use the error codes. The original code was commented out and a warning string was inserted. You need to rewrite this code.

cudaGetErrorString(err));

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:147:3: warning: DPCT1049:10: The workgroup size passed to the SYCL kernel may exceed the limit. To get the device limit, query info::device::max_work_group_size. Adjust the workgroup size if needed.

vectorAdd<<<blocksPerGrid, threadsPerBlock>>>(d_A, d_B, d_C, numElements);

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:148:9: warning: DPCT1010:11: SYCL uses exceptions to report errors and does not use the error codes. The call was replaced with 0. You need to rewrite this code.

err = cudaGetLastError();

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:152:13: warning: DPCT1009:12: SYCL uses exceptions to report errors and does not use the error codes. The original code was commented out and a warning string was inserted. You need to rewrite this code.

cudaGetErrorString(err));

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:159:9: warning: DPCT1003:13: Migrated API does not return error code. (*, 0) is inserted. You may need to rewrite this code.

err = cudaMemcpy(h_C, d_C, size, cudaMemcpyDeviceToHost);

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:164:13: warning: DPCT1009:14: SYCL uses exceptions to report errors and does not use the error codes. The original code was commented out and a warning string was inserted. You need to rewrite this code.

cudaGetErrorString(err));

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:179:9: warning: DPCT1003:15: Migrated API does not return error code. (*, 0) is inserted. You may need to rewrite this code.

err = cudaFree(d_A);

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:183:13: warning: DPCT1009:16: SYCL uses exceptions to report errors and does not use the error codes. The original code was commented out and a warning string was inserted. You need to rewrite this code.

cudaGetErrorString(err));

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:187:9: warning: DPCT1003:17: Migrated API does not return error code. (*, 0) is inserted. You may need to rewrite this code.

err = cudaFree(d_B);

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:191:13: warning: DPCT1009:18: SYCL uses exceptions to report errors and does not use the error codes. The original code was commented out and a warning string was inserted. You need to rewrite this code.

cudaGetErrorString(err));

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:195:9: warning: DPCT1003:19: Migrated API does not return error code. (*, 0) is inserted. You may need to rewrite this code.

err = cudaFree(d_C);

^

/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd/vectorAdd.cu:199:13: warning: DPCT1009:20: SYCL uses exceptions to report errors and does not use the error codes. The original code was commented out and a warning string was inserted. You need to rewrite this code.

cudaGetErrorString(err));

^

Processed 1 file(s) in -in-root folder "/home/intel/cuda-samples/Samples/0_Introduction/vectorAdd"

See Diagnostics Reference to resolve warnings and complete the migration:

https://software.intel.com/content/www/us/en/develop/documentation/intel-dpcpp-compatibility-tool-user-guide/top/diagnostics-reference.html
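When a migration produces this many warnings, it can help to group them by diagnostic ID before looking them up in the Diagnostics Reference. The sketch below assumes the dpct output was saved to a log file (for example with `dpct ... 2>&1 | tee dpct.log`; the log name and the helper name `summarize_dpct_warnings` are illustrative, not part of dpct).

```shell
#!/bin/sh
# Hypothetical helper: print each DPCT diagnostic ID found in a saved
# dpct log, followed by how many times it occurs.
summarize_dpct_warnings() {
  grep -o 'warning: DPCT[0-9]*' "$1" \
    | sed 's/^warning: //' \
    | sort \
    | uniq -c \
    | awk '{ print $2, $1 }'
}

# Example:
#   summarize_dpct_warnings dpct.log
```

For the vectorAdd log above, this kind of summary makes it clear that almost all warnings are DPCT1003 and DPCT1009, both of which relate to error-code handling that SYCL replaces with exceptions.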

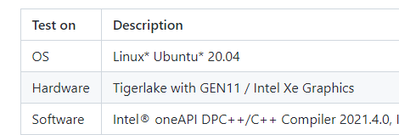

BTW, my platform is:

My previous issue also remains: dpct cannot find any CUDA code when running the migration:

Could you please provide your instructions on migration on https://github.com/saifullah3396/octomap.git I posted before?

Thanks

Hi,

We tried to reproduce your issue. We were able to successfully migrate the code.

>> Could you please provide your instructions on migration on https://github.com/saifullah3396/octomap.git I posted before?

According to "octomap/CMakeLists.txt:38: # __CUDA_SUPPORT__ = enable CUDA parallelization (experimental, defaults to OFF)", CUDA support is OFF by default in the CMakeLists.txt.

Please ensure CMake is configured with CUDA support before proceeding with the migration.
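One way to verify this, sketched under assumptions: CMake records option values in `CMakeCache.txt` in the build directory as `NAME:TYPE=VALUE` lines, so the cached value of `__CUDA_SUPPORT__` can be read back after configuring. The helper name `cmake_cache_value` is hypothetical. The option can usually also be set at configure time with `cmake -D__CUDA_SUPPORT__=ON ..` instead of editing CMakeLists.txt, although whether that alone is sufficient for this project has not been checked here.

```shell
#!/bin/sh
# Hypothetical helper: print the cached value of a CMake option, e.g.
#   cmake_cache_value build __CUDA_SUPPORT__
cmake_cache_value() {
  cache="$1/CMakeCache.txt"
  [ -f "$cache" ] || { echo "no CMakeCache.txt in $1" >&2; return 2; }
  # Cache lines look like NAME:TYPE=VALUE; print only the VALUE part.
  sed -n "s/^$2:[A-Z]*=//p" "$cache"
}
```

If the helper prints FALSE or OFF, the .cu files are most likely not part of the build, which would explain an empty compilation database.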

Now, please run the below commands:

# Generate compile_commands.json:

intercept-build make

# Then, migrate the entire code base with the compile_commands database file:

build$ dpct -p compile_commands.json --out-root=dpct_out --cuda-include-path=<cuda-path>/include/ --in-root=..

# or manually with:

src$ dpct TArray.cu CudaOctomapUpdater.cu --cuda-include-path=<cuda-path>/include/ --in-root=.. --extra-arg="-I<project-path>/octomap/octomap/include/"

Please note that before running intercept-build, it is a best practice to clean any existing build files using "make clean".

Hope the provided details will help you to resolve your issues.

Thanks & Regards,

Manjula

Hello,

It still does not work in my environment.

1. > Please note that before running intercept-build, it is a best practice to clean any existing build files using "make clean".

I deleted the old code and cloned the repository again.

2. > Please ensure CMake is configured with CUDA support before proceeding with the migration.

I changed the line from

SET(__CUDA_SUPPORT__ FALSE CACHE BOOL "Enable/disable CUDA parallelization")

to

SET(__CUDA_SUPPORT__ TRUE CACHE BOOL "Enable/disable CUDA parallelization")

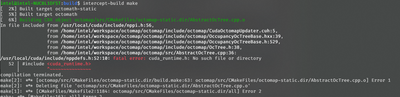

'cmake' runs fine for me, but when I ran 'intercept-build make', I got

I then added the full path to the CUDA include folder and ran 'intercept-build make' again.

3. > src$ dpct TArray.cu CudaOctomapUpdater.cu --cuda-include-path=<cuda-path>/include/ --in-root=.. --extra-arg="-I<project-path>/octomap/octomap/include/"

In my case, I ran

intel@intel-NUC8L1OFST:src$ dpct TArray.cu CudaOctomapUpdater.cu --cuda-include-path=/usr/local/cuda/include/ --in-root=.. --extra-arg="-I/home/intel/workspace/octomap/octomap/include/"

and got "no CUDA code detected" again.

Could you provide your complete, detailed steps, so that I can compare them with mine and find the gap?

Thanks.

Hi,

By following your suggestion, my partner was able to get further with migrating the CMake project, although on my side migrating the CUDA files still reports that no CUDA code was detected.

Thanks for your replies. This issue may also be related to my environment. We'll keep trying; the current issue can be considered resolved.

Thanks again.

Hi,

Thanks for accepting our solution. If you need any additional information, please post a new question as this thread will no longer be monitored by Intel. Have a nice day!

Regards,

Manjula
