
My code:

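```csharp
void OnDrawGizmos() {
    // World-space direction of this object's local +Z axis (same as transform.forward)
    Vector3 fwd = transform.TransformDirection(Vector3.forward);
    Gizmos.color = Color.cyan;
    // Draw a 500-unit debug ray from the sprite along that direction
    Gizmos.DrawRay(transform.position, fwd * 500);
}
```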

The idea is simple: cast a ray, lined up with the camera view, that extends from the RealSense hand-tracking object (a sprite that looks like a hand). Move that hand object around the screen to select and interact with the UI graphics or physics colliders that the ray hits.

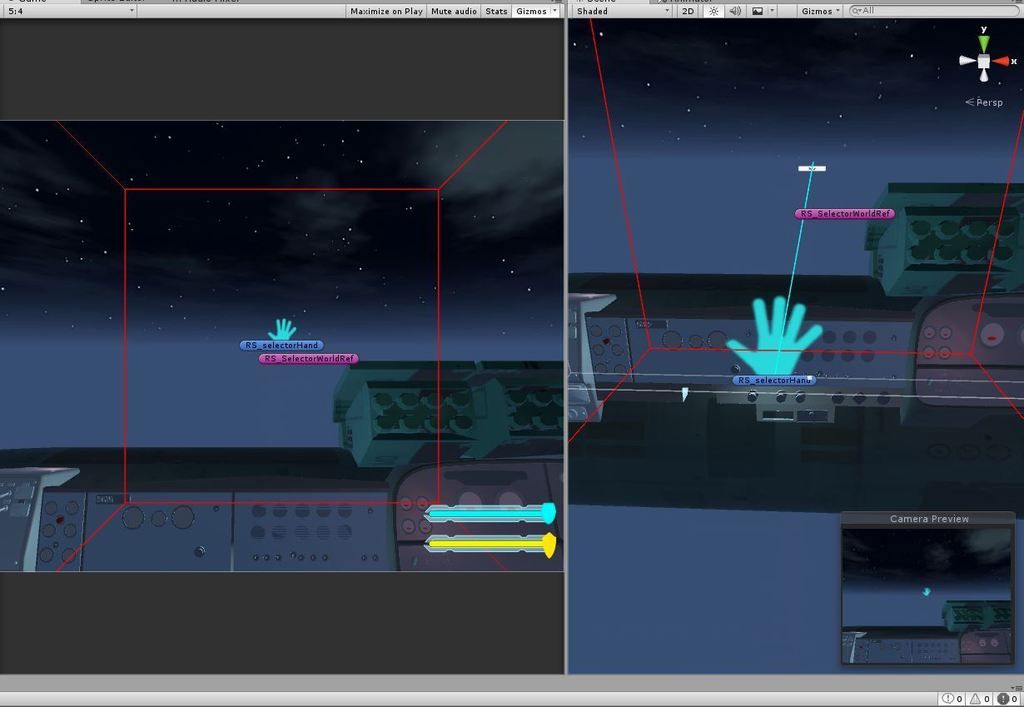

Initial position:

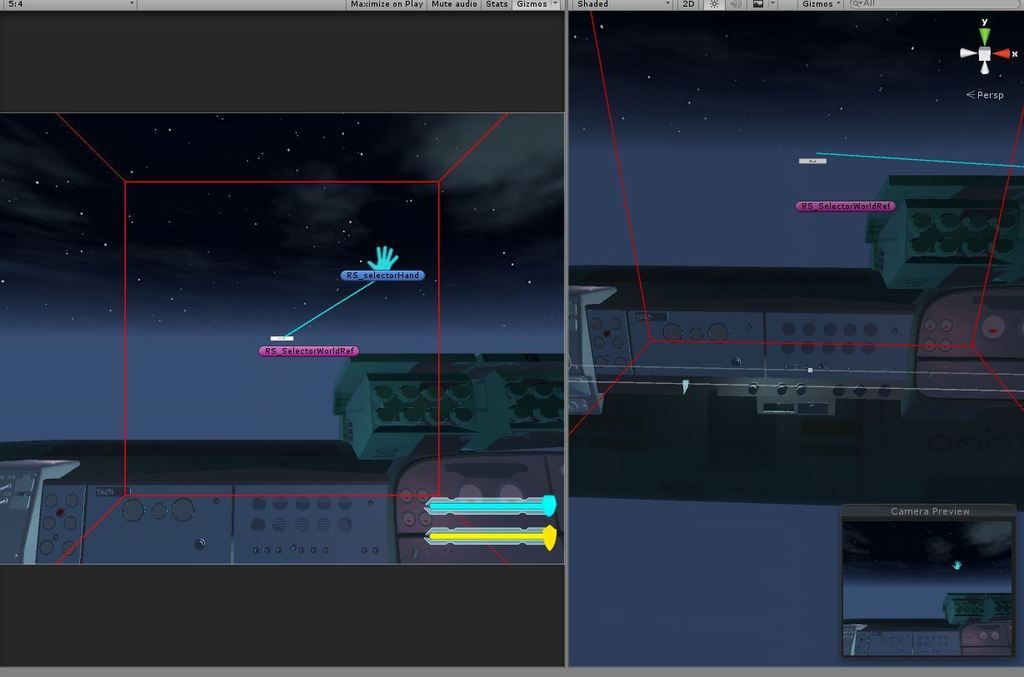

Moving the hand around, target apparently locked at initial position:


I'm using the RSUnityToolkit Tracking Action to move the hand-tracked sprite, which behaves much like a mouse pointer. When I run this visual debug gizmo in play mode, the ray starts out perfectly in line with the camera and the local origin, but only because my hand-tracking sprite starts there. When I move the sprite around, the far end of the ray clearly stays locked on that initial point in the distance. This is not what I want: I want the ray to match the camera's perspective. If the user hovers the tracked sprite's pivot directly over an on-screen object that can be selected with a gesture (or whatever), the user should be able to interact with that object.

That's all I want to do: target an object with hand tracking. Maybe it's the way my object is set up in the scene? Maybe it's the way the Tracking Action references the central position? Do I really have to dig into the Tracking Action rule to figure this out, or is my idea of "forward relative to the camera" just incorrect?
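A likely reason the target looks "locked": `TransformDirection(Vector3.forward)` depends only on the sprite's rotation, which the Tracking Action presumably leaves unchanged, so every ray drawn is parallel to the first one, and parallel lines in a perspective view converge on the same vanishing point. One alternative (a different technique from the fix posted later in this thread) is to build the ray from the camera through the sprite's screen position. The sketch below assumes the scene camera is tagged `MainCamera` so `Camera.main` finds it; the class name `HandScreenRay` and the `maxDistance` field are hypothetical.

```csharp
using UnityEngine;

// Hypothetical component: attach to the hand-tracking sprite.
public class HandScreenRay : MonoBehaviour
{
    // Assumed ray length, matching the 500 units used in the gizmo above.
    public float maxDistance = 500f;

    void Update()
    {
        Camera cam = Camera.main;
        if (cam == null) return; // no camera tagged "MainCamera"

        // Project the sprite into screen space, then build a ray from the
        // camera through that screen point (perspective-correct).
        Vector3 screenPos = cam.WorldToScreenPoint(transform.position);
        Ray ray = cam.ScreenPointToRay(screenPos);

        // Visualise the ray in the Scene view during play mode.
        Debug.DrawRay(ray.origin, ray.direction * maxDistance, Color.cyan);

        RaycastHit hit;
        if (Physics.Raycast(ray, out hit, maxDistance))
        {
            // Logs every frame; fine for debugging, replace with real selection logic.
            Debug.Log("Hand is over: " + hit.collider.name);
        }
    }
}
```

Because this ray starts at the camera's near plane rather than at the hand, it can also hit anything between the camera and the hand, including a collider on the sprite itself if it has one; a layer mask is one way to exclude that.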


It wasn't clear to me whether your sprite hand had depth and could move from the foreground to the background and back again, or whether it was locked to a 2D left-right-up-down plane like the mouse pointer on a computer screen.

If the cursor is locked to a 2D plane, it seems to me that rather than using ray-casting for detection, a simpler solution might be to extend the collider field of each UI element toward the foreground of the screen, where the pointer is. Then, when the pointer passes through a trigger-enabled field projected by a UI element, that element will be activated.

This method still works if your pointer *does* have screen depth and can move into the background and back, since the pointer will activate the trigger when it passes through the side of the collider field.

I adapted your own image to help illustrate what I mean (sorry for not embedding the image, the forum keeps saying I'm unauthorized when I try to embed!)
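The trigger-field idea above could look roughly like this. It is a minimal sketch, assuming each interactive element has a `BoxCollider`, that the camera sits on the element's local -Z side, and that the hand pointer carries its own collider plus a (kinematic) `Rigidbody`, because Unity only sends trigger messages when at least one of the two objects has a Rigidbody. The class name `PointerTriggerZone` and the `depthTowardCamera` field are hypothetical.

```csharp
using UnityEngine;

// Hypothetical component for an interactive element whose trigger volume
// is stretched toward the camera so the hand pointer passes through it.
[RequireComponent(typeof(BoxCollider))]
public class PointerTriggerZone : MonoBehaviour
{
    // Assumed depth of the field, in the collider's local units (scaled by the transform).
    public float depthTowardCamera = 10f;

    void Awake()
    {
        BoxCollider box = GetComponent<BoxCollider>();
        box.isTrigger = true;
        // Make the box deep along local Z and shift its centre toward -Z,
        // so it spans from the element's surface out toward the viewer.
        box.size = new Vector3(box.size.x, box.size.y, depthTowardCamera);
        box.center = new Vector3(box.center.x, box.center.y, -depthTowardCamera * 0.5f);
    }

    void OnTriggerEnter(Collider other)
    {
        Debug.Log(other.name + " entered " + name); // e.g. highlight the element
    }

    void OnTriggerExit(Collider other)
    {
        Debug.Log(other.name + " left " + name); // e.g. remove the highlight
    }
}
```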


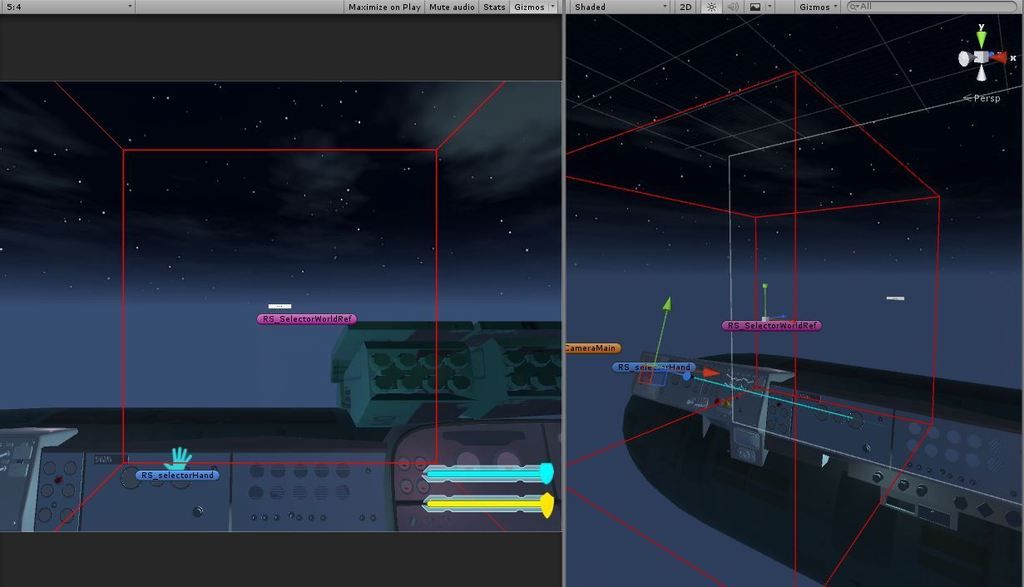

Problem solved.

I wanted my hand sprite to "look" in the same direction as the camera, which requires the sprite to rotate slightly so it faces the right way. Rather than having the hand sprite LookAt the camera, I want the opposite of LookAt, which can be done with Quaternion.LookRotation. The direction I pass in is the vector from the camera to the hand sprite, i.e. the camera's line of sight through the hand; it only equals the camera's forward direction (Vector3.forward for an unrotated camera) when the hand is in the centre of the view.

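```csharp
void Update () {
    // Point the hand's forward (+Z) axis along the line from the camera
    // through the hand, i.e. the opposite of LookAt(camera).
    transform.rotation = Quaternion.LookRotation(transform.position - Camera.main.transform.position);
}

void OnDrawGizmos() {
    // The gizmo ray now follows the hand's rotated forward axis.
    Vector3 fwd = transform.TransformDirection(Vector3.forward);
    Gizmos.color = Color.cyan;
    Gizmos.DrawRay(transform.position, fwd * 500);
}
```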

Now I can point into world space with a hand that moves not only on X and Y, but also in and out on Z. Pretty cool.
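The code above only draws the ray; to find out what the hand is actually pointing at, one option is a `Physics.Raycast` along the rotated forward axis. This is a sketch under assumptions: the class name `HandPointerRaycast`, the `targetLayers` mask and the `CurrentTarget` property are hypothetical, and it assumes the targets have `Collider` components (a physics raycast does not see UI graphics without colliders).

```csharp
using UnityEngine;

// Hypothetical component: orients the hand as in the Update() above and
// records which collider its forward ray hits.
public class HandPointerRaycast : MonoBehaviour
{
    public float maxDistance = 500f;
    public LayerMask targetLayers = ~0; // all layers by default

    // The collider currently under the hand, or null.
    public Collider CurrentTarget { get; private set; }

    void Update()
    {
        Camera cam = Camera.main;
        if (cam == null) return;

        // Direction from the camera through the hand.
        Vector3 dir = transform.position - cam.transform.position;
        if (dir.sqrMagnitude < 1e-6f) return; // hand at the camera: direction undefined
        transform.rotation = Quaternion.LookRotation(dir);

        RaycastHit hit;
        CurrentTarget = Physics.Raycast(transform.position, transform.forward, out hit, maxDistance, targetLayers)
            ? hit.collider
            : null;
    }
}
```

A gesture handler could then act on `CurrentTarget` when, for example, a grab gesture is detected.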


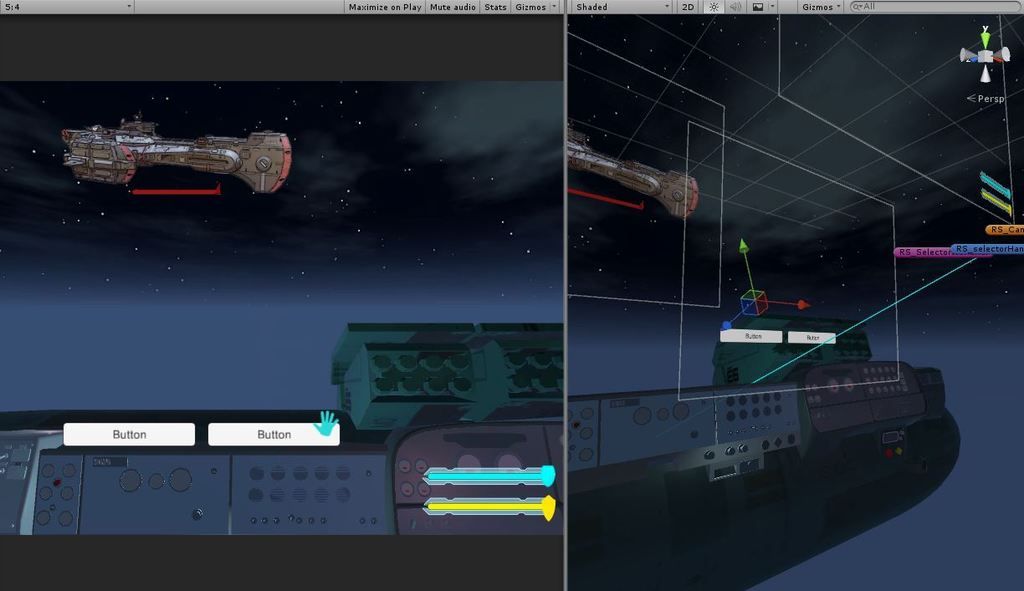

Nice image! Are you building a spaceship simulation?


Marty, those aren't interactive labels, though. They're just labels for invisible empty GameObjects, visualized in play mode for debugging.

I've got a great solution for the UI using multiple canvas layers! Non-interactive UI elements, such as the shield and hull integrity sliders at the bottom right of the screen, go on a canvas with the "Screen Space - Overlay" render mode (the default). UI elements that the hand selector should interact with are organized onto a second canvas with the "Screen Space - Camera" render mode. The elements on the "Screen Space - Camera" canvas are far enough away to sit behind the hand selector. Other interactive elements in 3D space, as well as UI canvases that are children of world-space objects, can be targeted and animated using the hand selector raycast.

Just so you can see, here's what the UI components might look like... without the empty game object labels. :) The new UI layers are just plain awesome.
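The two-canvas setup described above is normally done in the Inspector; for reference, the same render-mode settings can be applied from a script. This is a minimal sketch: the class name `CanvasLayerSetup` and its fields are hypothetical, and the plane distance of 50 is an assumed value that only has to be larger than the hand selector's distance from the camera.

```csharp
using UnityEngine;

// Hypothetical helper: one overlay canvas for read-only HUD elements,
// one camera-space canvas for elements the hand selector targets.
public class CanvasLayerSetup : MonoBehaviour
{
    public Canvas hudCanvas;                     // e.g. shield / hull sliders
    public Canvas interactiveCanvas;             // elements targeted by the hand ray
    public float interactivePlaneDistance = 50f; // assumed: farther than the hand

    void Awake()
    {
        if (hudCanvas != null)
        {
            // Drawn over the whole scene; not positioned in 3D space.
            hudCanvas.renderMode = RenderMode.ScreenSpaceOverlay;
        }

        if (interactiveCanvas != null)
        {
            // Rendered by the given camera at planeDistance, so it has real
            // depth and can sit behind the hand selector.
            interactiveCanvas.renderMode = RenderMode.ScreenSpaceCamera;
            interactiveCanvas.worldCamera = Camera.main;
            interactiveCanvas.planeDistance = interactivePlaneDistance;
        }
    }
}
```

Note that `Physics.Raycast` only detects `Collider` components, so elements on the camera-space canvas would need colliders to be hit by a physics ray; Unity's UI otherwise uses a `GraphicRaycaster` through the EventSystem.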


I haven't dealt much with UI elements in Unity so far. It's something I'm due to have a go at later in my project. That's the good thing about knowledge sharing here: we're each at different points in our projects, learning different things, and we can each fill in the pieces the other doesn't have yet. Kind of like trading Pokémon or swapping collectible cards. :)
